What Is Emotion Analysis?

Computer vision can estimate human emotions by using deep neural networks to analyze facial expressions. Emotions arise spontaneously rather than through conscious effort, and they are often accompanied by physiological changes in the facial muscles. These changes are what a camera-based model can observe.

Deep learning models can infer a person's emotional state from facial expressions with good accuracy.

Emotion Recognition Features

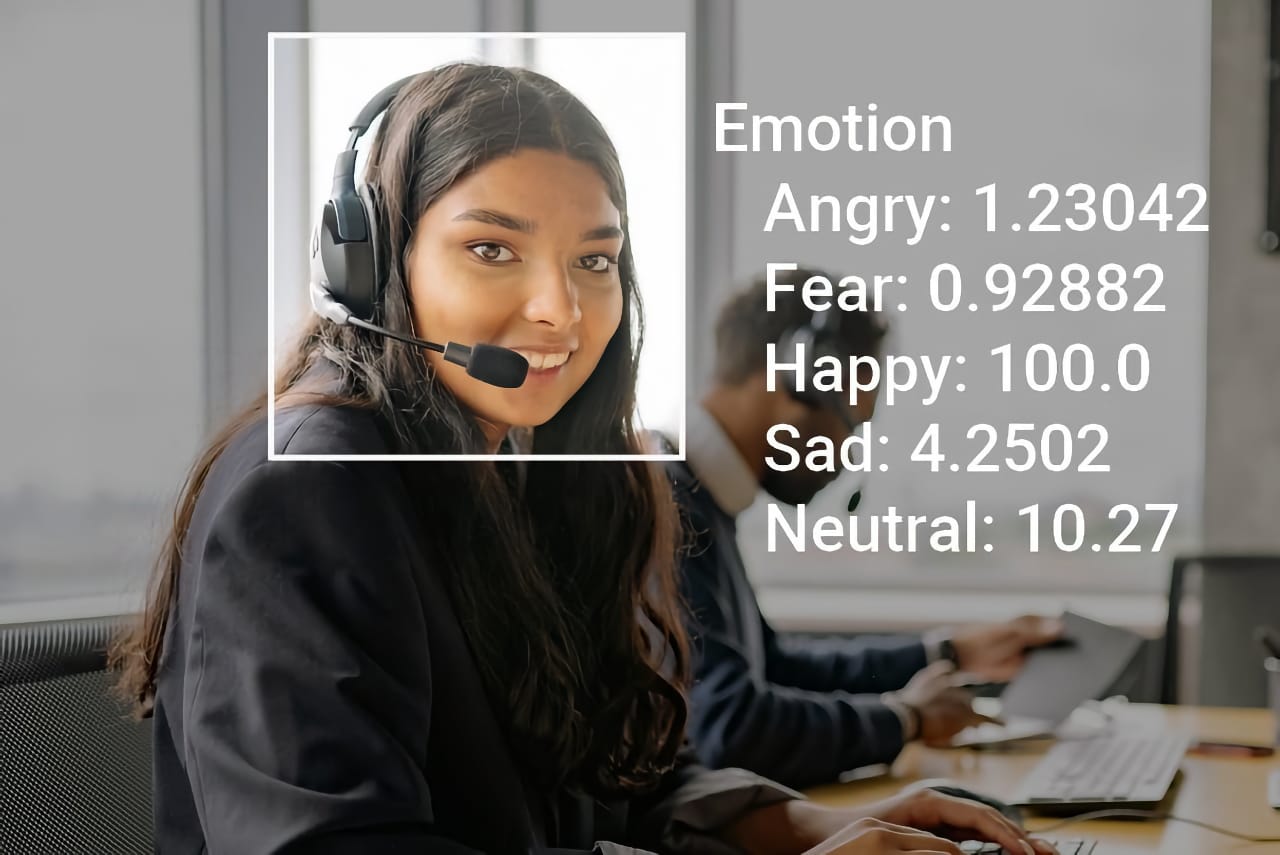

Camera video streams can be used to recognize the emotional states of one or more individuals by applying a deep learning algorithm to the individual video frames (images). Emotion recognition from facial expressions classifies each face into one of several predetermined states and supports the following capabilities:

- Detection of emotional states: sadness, anger, happiness, fear, surprise, and a neutral state.

- Tracking of changes in emotional state in response to specific conditions and events.

- A confidence score for each recognized emotion.

- Real-time facial emotion analysis on camera video streams.

- Privacy-preserving emotion analysis through on-device processing with Edge AI.
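The confidence score and the tracking of state changes can be illustrated with a minimal Python sketch. It assumes a hypothetical classifier that outputs one raw score (logit) per emotion for each face in a frame. The label order in `EMOTIONS`, the 0.6 threshold, and all function names are illustrative assumptions, not part of any specific product or library. Softmax turns the scores into probabilities, the highest probability serves as the confidence score, and a state change is reported only when a sufficiently confident prediction differs from the previous state.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Hypothetical label order; a real model defines its own output classes.
EMOTIONS = ["sadness", "anger", "happiness", "fear", "surprise", "neutral"]


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw model scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: Sequence[float]) -> Tuple[str, float]:
    """Return the most likely emotion and its probability as the confidence score."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]


def detect_changes(
    frame_logits: Sequence[Sequence[float]], min_confidence: float = 0.6
) -> List[Tuple[int, str]]:
    """Report (frame_index, emotion) whenever a confident prediction
    differs from the last confidently recognized state."""
    changes: List[Tuple[int, str]] = []
    current: Optional[str] = None
    for i, logits in enumerate(frame_logits):
        label, conf = classify(logits)
        if conf < min_confidence:
            continue  # ignore uncertain frames instead of flipping the state
        if label != current:
            changes.append((i, label))
            current = label
    return changes


# Hypothetical per-frame logits for a single tracked face.
frames = [
    [0, 0, 5, 0, 0, 0],  # clearly happiness
    [0, 0, 4, 0, 0, 1],  # still happiness
    [0, 0, 0, 0, 0, 0],  # uniform scores: low confidence, skipped
    [0, 5, 0, 0, 0, 0],  # clearly anger
]
print(detect_changes(frames))  # [(0, 'happiness'), (3, 'anger')]
```

Skipping low-confidence frames is one simple way to keep the reported state from flickering; a real system might instead smooth the probabilities over a sliding window of frames.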

The Value of Emotion Recognition

Vision-based facial sentiment analysis and emotion identification are used to automate emotion estimation. Applying emotional information in real-world use cases is still relatively new and not yet widely exploited. Emotion recognition is an active research topic and has been applied in many areas, such as safe driving, health care, and public security.

- Automated emotion analysis in the workplace, for example, call center monitoring.

- Measuring and improving customer service quality across branches by analyzing the emotions of employees or customers.

- Analyzing the impact of marketing campaigns through brand reputation analysis at the point of sale.

- Mental health monitoring in health care, where sentiment analysis helps assess patient feedback, health status, and medical conditions, and supports better treatment.

- Improving transport safety by monitoring driver emotions and fatigue.
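The branch comparison mentioned in the list above could, for example, start from recognized emotion labels tagged with the branch where they were recorded. The following Python function is a hypothetical aggregation, not a method described in this article: it reports, per branch, the fraction of recognized emotions that match a target emotion (happiness by default). The `(branch, emotion)` record format and the function name are assumptions for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def emotion_share_by_branch(
    records: Iterable[Tuple[str, str]], target: str = "happiness"
) -> Dict[str, float]:
    """Fraction of recognized emotions per branch that equal `target`.

    Each record is a hypothetical (branch, emotion) pair produced by an
    upstream emotion recognizer.
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for branch, emotion in records:
        counts[branch][emotion] += 1
    return {b: c[target] / sum(c.values()) for b, c in counts.items()}


records = [
    ("north", "happiness"), ("north", "neutral"), ("north", "happiness"),
    ("south", "anger"), ("south", "happiness"),
    ("south", "happiness"), ("south", "neutral"),
]
print(emotion_share_by_branch(records))
```

Comparing shares rather than raw counts keeps branches with different customer volumes comparable.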