From blind spots to full visibility. From “hoping we saw it” to “knowing we did.”

Across manufacturing, construction, logistics, utilities, waste management and retail, HSE leaders share a familiar frustration: they’re accountable for everything that happens on site, including the hazards and near-misses that pass by unseen.

Near-misses on the night shift. PPE lapses between rounds. Unsafe shortcuts that appear for a few seconds, then disappear without being reported. Traditional safety systems, from inspections and audits to CCTV reviews, were never designed to cover every hazard, at every moment, in every area, across every shift.

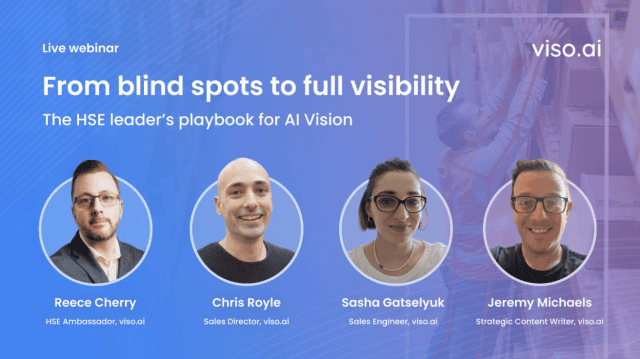

Our recent webinar, “From Blind Spots to Eyes That Never Blink: The HSE Leader’s Playbook for AI Vision”, brought together three experts deeply familiar with this challenge:

- Reece Cherry: HSE Ambassador with 15+ years of frontline experience at Kraft Heinz, Reckitt and Yodel

- Chris Royle: Sales Director at viso.ai, supporting enterprise-scale deployments

- Sasha Gatselyuk: Sales Engineer, designing and delivering real-world safety applications

Hosted by Jeremy Michaels, the session explored how AI Vision turns blind spots into continuous visibility, without turning safety into surveillance.

Below, we summarise the key themes, lessons and practical guidance from the discussion.

Safety risks live in the blind spots

For many organisations, injury rates have improved significantly over the last decade thanks to training, audits, digital reporting and behaviour-based programmes. But progress has slowed.

Teams often behave differently when the HSE team is present, when control measures are in place, or when they know an area is being monitored. As a result, many incidents happen:

- Between shifts, when supervision is lighter

- Between rounds, in areas audits don’t cover

- Between tasks, in the five seconds when someone thinks “just this once”

AI Vision can make the path to zero harm, and staying there, more efficient and consistent. Human observation has limits, and the next leap in safety performance won’t come from more checklists. It will come from visibility.

This is where the business case becomes clear. Major incidents may be rare, but risk is continuous, and the clearest signal sits in near-misses, the hundreds of precursor events that traditional systems barely capture.

In one deployment, AI Vision detected 154 near-misses per month, compared with only a handful that were self-reported. That gap between perceived and actual risk is exactly where AI Vision delivers value: it reveals the pattern of occupational safety and health risk, not just the aftermath.

Near-misses, not incidents: the business case that holds up

HSE leaders often agree that AI Vision sounds valuable but struggle to articulate why in a business case. The webinar outlined a simple, credible structure:

- Start with serious harm: infrequent but high-impact events

- Use near-misses as the metric that matters: hundreds of times more common

- Quantify under-reporting: most organisations capture only 10-20% of the near-misses that actually occur
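To make the under-reporting step concrete, the short Python sketch below turns a reported near-miss count into an implied true range using the 10-20% capture rate mentioned by the panel. The function name `implied_near_misses` and the example input of 12 reported events are illustrative assumptions, not figures from the webinar.

```python
def implied_near_misses(reported: int, capture_pct_low: float = 10,
                        capture_pct_high: float = 20) -> tuple[float, float]:
    """Estimate the true near-miss count if only capture_pct% of events get reported.

    Returns (lower, upper): the higher capture rate gives the lower estimate.
    """
    if not 0 < capture_pct_low <= capture_pct_high <= 100:
        raise ValueError("need 0 < capture_pct_low <= capture_pct_high <= 100")
    return (reported * 100 / capture_pct_high, reported * 100 / capture_pct_low)


# Illustrative input: 12 self-reported near-misses in a month.
print(implied_near_misses(12))  # (60.0, 120.0)
```

With 12 self-reported near-misses a month, the same arithmetic suggests somewhere between 60 and 120 actual events, which is the kind of gap a business case can put in front of decision-makers.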

With AI Vision automatically logging these events, HSE teams can:

- Identify risk clusters by time, zone or behaviour

- Target interventions and training where they matter

- Prove that interventions reduced risk

- Connect safety improvements to downtime, insurance and operational KPIs
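As a rough illustration of how auto-logged events can be grouped into risk clusters, the Python sketch below counts detections by shift, zone and behaviour and returns the most frequent combinations. The `NearMissEvent` record, the zone and behaviour labels, and the 06:00/14:00/22:00 shift boundaries are hypothetical; a real platform would export its own event schema, and the sample events are made up.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class NearMissEvent:
    """Hypothetical record for one auto-logged detection."""
    timestamp: datetime
    zone: str
    behaviour: str


def shift_for(ts: datetime) -> str:
    """Map a timestamp to a shift (boundaries at 06:00, 14:00 and 22:00 are assumptions)."""
    if 6 <= ts.hour < 14:
        return "day"
    if 14 <= ts.hour < 22:
        return "evening"
    return "night"


def risk_clusters(events: list[NearMissEvent], top_n: int = 3) -> list[tuple[tuple[str, str, str], int]]:
    """Count events per (shift, zone, behaviour) and return the most frequent clusters."""
    counts = Counter((shift_for(e.timestamp), e.zone, e.behaviour) for e in events)
    return counts.most_common(top_n)


# Made-up sample events for illustration only.
events = [
    NearMissEvent(datetime(2024, 3, 4, 23, 10), "loading-bay", "forklift-proximity"),
    NearMissEvent(datetime(2024, 3, 5, 1, 45), "loading-bay", "forklift-proximity"),
    NearMissEvent(datetime(2024, 3, 5, 9, 30), "line-2", "missing-gloves"),
]
for (shift, zone, behaviour), n in risk_clusters(events):
    print(f"{shift:<8} {zone:<12} {behaviour:<20} {n}")
```

Grouping on a (shift, zone, behaviour) key keeps the output directly actionable: each row points at a time, a place and a behaviour that a targeted intervention could address.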

The panel also highlighted a productivity lift: auto-populated forms, continuous audit data and fewer manual checks mean HSE professionals spend more time on prevention, coaching and improvement, and less on paperwork.

From blind spots to continuous visibility: where to start

A key theme was use-case triage. Not all safety challenges and workplace hazards are equal, and not all are good first steps.

The panel identified three ideal starting points:

1. PPE compliance monitoring

Hard hats, hi-vis, gloves and safety glasses. PPE lapses are frequent, so they quickly validate model performance and are useful for early demonstrations of value.

2. Man-machine proximity

People near forklifts, AGVs, cranes and heavy equipment. These are high-severity, high-concern risks, and early wins carry cultural weight.

3. Restricted and conditional zone breaches

Standing under suspended loads, entering a crane’s swing radius, or entering areas that become hazardous only during certain operations. These breaches are easy to explain and act on.
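The logic behind the proximity and zone-breach use cases above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular platform implements it: it assumes a detector (not shown) that returns labelled pixel bounding boxes, uses the bottom-centre of each box as the object’s position on the floor, tests zone breaches with a standard ray-casting point-in-polygon check, and measures proximity in pixels. Production systems typically calibrate each camera so distances can be expressed in metres; the `Box` class, the labels and the 150-pixel threshold are hypothetical.

```python
import math
from dataclasses import dataclass

Point = tuple[float, float]


@dataclass(frozen=True)
class Box:
    """Hypothetical detector output: a label plus a pixel bounding box (x1, y1, x2, y2)."""
    label: str
    x1: float
    y1: float
    x2: float
    y2: float

    def foot_point(self) -> Point:
        # Bottom-centre of the box approximates where the object meets the floor.
        return ((self.x1 + self.x2) / 2, self.y2)


def point_in_polygon(p: Point, polygon: list[Point]) -> bool:
    """Ray casting: p is inside if a horizontal ray from it crosses the edges an odd number of times."""
    x, y = p
    inside = False
    for i in range(len(polygon)):
        xi, yi = polygon[i]
        xj, yj = polygon[i - 1]  # previous vertex (wraps to the last one when i == 0)
        if (yi > y) != (yj > y):
            # x-coordinate where this edge crosses the ray's height
            x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
            if x < x_cross:
                inside = not inside
    return inside


def zone_breaches(boxes: list[Box], zone: list[Point], zone_active: bool = True) -> list[Box]:
    """People whose foot point is inside the zone; a conditional zone only counts while active."""
    if not zone_active:
        return []
    return [b for b in boxes if b.label == "person" and point_in_polygon(b.foot_point(), zone)]


def proximity_alerts(boxes: list[Box], vehicle_labels: tuple[str, ...] = ("forklift",),
                     min_gap_px: float = 150.0) -> list[tuple[Box, Box, float]]:
    """Every (person, vehicle, gap) pair whose foot points are closer than min_gap_px pixels."""
    people = [b for b in boxes if b.label == "person"]
    vehicles = [b for b in boxes if b.label in vehicle_labels]
    alerts = []
    for person in people:
        for vehicle in vehicles:
            gap = math.dist(person.foot_point(), vehicle.foot_point())
            if gap < min_gap_px:
                alerts.append((person, vehicle, gap))
    return alerts


# Made-up frame: one person beside a forklift, another inside a crane lifting zone.
frame = [
    Box("person", 100, 200, 140, 320),
    Box("forklift", 180, 220, 300, 330),
    Box("person", 600, 100, 640, 220),
]
lift_zone = [(550, 150), (750, 150), (750, 300), (550, 300)]

print(len(proximity_alerts(frame)))                                      # 1
print([b.x1 for b in zone_breaches(frame, lift_zone, zone_active=True)])  # [600]
print(zone_breaches(frame, lift_zone, zone_active=False))                # []
```

In this made-up frame, the first person is about 120 pixels from the forklift and triggers a proximity alert, while the second person stands inside the lifting zone and is flagged only while that zone is marked active, which is how a conditional zone differs from a permanent one.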

These use cases share important characteristics:

- High frequency → rapid signal and measurable performance

- High clarity → easy for frontline teams to understand

- High relevance → already aligned with existing procedures

The panel warned against starting with rare events like slips and trips. When something occurs only a few times a year, it’s almost impossible to validate performance quickly, and momentum can stall.

Start where the signal is strong and the value is immediate.

Architecture that works in the real world

One of the biggest myths is that AI Vision requires ripping out the existing camera estate. In reality, the most effective setups are lean, local, and hardware-agnostic.

Key principles:

Use the cameras you already have

If the stream is clear enough, existing CCTV works. When new cameras are needed, they don’t need to be high-end or expensive.

Process at the edge

AI runs on an on-site edge device, not in the cloud. This means:

- No streaming costs

- No round-trip latency to the cloud

- No dependency on external networks

- No need to send video off-site

Integrate into your existing safety ecosystem

AI Vision should feed the systems people already use: sirens, radios, HSE systems, ticketing. Detection is only valuable when it triggers meaningful, timely action.
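One simple way to picture that integration is a routing table that maps each detection type to the actions it should trigger. The Python sketch below assumes hypothetical detection kinds and placeholder action functions that only return a description; in a real deployment each action would call the site’s own siren controller, radio gateway or HSE/ticketing API, none of which are shown here.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Detection:
    """Hypothetical event emitted by the edge device."""
    kind: str  # e.g. "forklift-proximity" or "missing-hard-hat"
    zone: str


# Placeholder actions: each would call a real siren controller, radio gateway or
# HSE/ticketing system in practice; here they only return a description.
def sound_siren(det: Detection) -> str:
    return f"siren:{det.zone}"


def open_ticket(det: Detection) -> str:
    return f"ticket:{det.kind}@{det.zone}"


def log_only(det: Detection) -> str:
    return f"log:{det.kind}@{det.zone}"


Action = Callable[[Detection], str]


@dataclass
class AlertRouter:
    """Maps each detection kind to the downstream actions it should trigger."""
    routes: dict[str, list[Action]] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)

    def register(self, kind: str, *actions: Action) -> None:
        self.routes.setdefault(kind, []).extend(actions)

    def dispatch(self, det: Detection) -> list[str]:
        # Unrouted kinds fall back to logging so nothing is silently dropped.
        actions = self.routes.get(det.kind, [log_only])
        results = [action(det) for action in actions]
        self.history.extend(results)
        return results


router = AlertRouter()
router.register("forklift-proximity", sound_siren, open_ticket)
router.register("missing-hard-hat", open_ticket)

print(router.dispatch(Detection("forklift-proximity", "loading-bay")))
# ['siren:loading-bay', 'ticket:forklift-proximity@loading-bay']
print(router.dispatch(Detection("slip", "canteen")))
# ['log:slip@canteen']
```

Unrouted detection types fall back to a log-only action, so a new use case is recorded rather than silently dropped until someone decides who should act on it.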

Once this architecture is proven on one site, it can be replicated rapidly. Many organisations move from pilot to multi-site rollout in months using the same repeatable pattern.

Readiness is more about people and process than technology

The panel agreed that technology is rarely the barrier. Organisational alignment is.

Two questions define early readiness:

- Are HSE, Operations, Facilities and IT aligned from Day One?

- Do they agree on success metrics and alert-handling responsibilities?

If not, deployments stall, not because the model fails, but because nobody knows who should act on what.

Two further essentials were highlighted:

Early involvement of privacy and data governance

Define retention, access, storage and security before deployment, not after.

Clear process ownership

Who reviews alerts? Who validates accuracy? Who escalates? Who closes the loop?

When these questions are answered upfront, organisations achieve smooth 90-day rollouts instead of multi-year delays.

Safety, not surveillance: building trust

Many questions in the Q&A centred not on models or accuracy, but on people:

- Will workers feel monitored or protected?

- Will morale suffer?

- Will supervisors use detections punitively?

The panel drew a clear distinction:

Surveillance watches people to catch them doing wrong.

Safety monitoring watches conditions to prevent harm.

Intent, and communication of that intent, matters.

Effective strategies include:

- Plain-language explanations of what the system watches for (hazards, not individuals)

- Visible materials and briefings explaining data handling, privacy and purpose

- Non-punitive use by design, using detections for coaching and system improvement

When workers understand why AI Vision exists, and see it speeding up response times and reducing intrusive audits, scepticism quickly shifts to advocacy.

Privacy and compliance by design

Trust is maintained not just through communication, but through technical design. “Privacy-by-design” means:

- Local processing at the edge (no cloud streaming)

- Minimal retention, only as long as required

- Restricted access, with full audit trails

- Anonymisation, using blurring and abstracted logs when appropriate
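Minimal retention and audited access can be combined in one small component. The Python sketch below is a hypothetical illustration, not a vendor’s API: the `Clip` and `ClipStore` names, the `legal_hold` flag, the role names and the 30-day window are all assumptions. It purges clips older than the retention window unless they are on hold, and it records every viewing attempt, granted or refused, with who, what, when and why.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Clip:
    """Hypothetical stored clip linked to a detection."""
    clip_id: str
    created: datetime
    legal_hold: bool = False  # e.g. retained for an open incident investigation


@dataclass
class ClipStore:
    retention: timedelta
    authorised_roles: set[str]
    clips: dict[str, Clip] = field(default_factory=dict)
    # Each entry: (when, who, which clip, why, granted?)
    audit_log: list[tuple[datetime, str, str, str, bool]] = field(default_factory=list)

    def add(self, clip: Clip) -> None:
        self.clips[clip.clip_id] = clip

    def purge_expired(self, now: datetime) -> list[str]:
        """Delete clips older than the retention window unless they are on hold."""
        expired = [cid for cid, c in self.clips.items()
                   if now - c.created > self.retention and not c.legal_hold]
        for cid in expired:
            del self.clips[cid]
        return expired

    def view(self, clip_id: str, user: str, role: str, reason: str, now: datetime) -> Clip:
        """Log every attempt, then return the clip only to an authorised role with a stated reason."""
        granted = role in self.authorised_roles and bool(reason.strip())
        self.audit_log.append((now, user, clip_id, reason, granted))
        if not granted:
            raise PermissionError(f"{user} ({role}) may not view {clip_id}")
        return self.clips[clip_id]


now = datetime(2024, 6, 1)
store = ClipStore(retention=timedelta(days=30), authorised_roles={"hse-manager"})
store.add(Clip("c1", now - timedelta(days=45)))
store.add(Clip("c2", now - timedelta(days=45), legal_hold=True))
store.add(Clip("c3", now - timedelta(days=2)))

print(store.purge_expired(now))  # ['c1']
store.view("c3", "a.jones", "hse-manager", "verify proximity alert", now)
try:
    store.view("c3", "b.lee", "contractor", "curious", now)
except PermissionError as err:
    print(err)
print(len(store.audit_log))  # 2
```

Logging refused attempts as well as granted ones is a deliberate choice: an audit trail that only records successful access cannot show who tried to see footage they were not entitled to.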

The panel recommended every HSE leader ask three vendor questions:

1. Who owns the data and models?

You should own your streams, your configurations, and any models trained on your data.

2. How are privacy and security assured?

Look for independent certifications and strong governance, not marketing claims.

3. How scalable and future-proof is the platform?

Can it grow with new use cases and new models without breaking architecture or budgets?

A final requirement is auditability: being able to demonstrate who saw what, when, and why. This matters for regulators and for workforce trust.

Beyond detection: towards predictive safety and VGI

Today, most AI Vision deployments focus on detection, identifying events as they happen. The next frontier is prediction: spotting conditions and behaviours that precede incidents.

Early examples already emerging include:

- Higher risk during certain shifts

- Repeating patterns during specific operations

- Correlations with temperature, lighting or workflow stages

By surfacing these patterns, AI Vision can warn supervisors before risk escalates, a step-change for prevention.
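Full predictive models are beyond a short example, but the simplest form of this early warning can be sketched as a threshold on detection counts. The Python function below is an assumption-laden stand-in rather than the panel’s method: it flags a shift, zone or operation when its latest count rises more than `k` standard deviations above its own history, in the style of a control chart. The parameter defaults and sample counts are hypothetical.

```python
from statistics import mean, stdev


def elevated_risk(history: list[int], latest: int, k: float = 2.0, min_history: int = 5) -> bool:
    """Flag `latest` if it exceeds the historical mean by more than k sample standard deviations.

    A simple control-chart-style rule on detection counts, not a trained predictive model.
    """
    if len(history) < max(min_history, 2):
        return False  # too little baseline to judge (stdev needs at least two points)
    threshold = mean(history) + k * stdev(history)
    # If every past value is identical, stdev is 0 and any increase is flagged.
    return latest > threshold


weekly_night_shift = [4, 5, 3, 6, 4, 5]  # made-up weekly forklift near-miss counts
print(elevated_risk(weekly_night_shift, 12))  # True
print(elevated_risk(weekly_night_shift, 6))   # False
```

For these made-up weekly counts the threshold works out at roughly 6.6, so a week with 12 near-misses would be flagged to supervisors while a week with 6 would not.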

Beyond that lies Visual General Intelligence (VGI): systems that don’t just detect objects but interpret scenes and intent. The difference?

Today: “person on ground detected”

Tomorrow: “person appears injured and needs assistance”

The message to organisations was clear:

Don’t wait for perfect technology. The organisations preparing now, by building data foundations, choosing flexible platforms, and creating workflows and trust, will be the first to benefit as VGI becomes mainstream.

Conclusion: from “is this right for us?” to “how do we begin?”

AI Vision is no longer futuristic. It is already deployed across real sites, detecting real risks, and giving HSE leaders something they’ve never had before: eyes that never blink.

Key takeaways:

- Traditional methods have plateaued; the remaining risk is in the gaps humans can’t cover

- AI Vision amplifies safety teams, especially around near-misses

- Early success depends on the right use cases and edge-based architecture

- Trust, privacy and culture are central to lasting adoption

- Starting small, starting smart, and planning for VGI positions organisations for predictive safety

If you’re exploring AI Vision for your sites, the lesson from this session is simple:

You don’t need to see everything at once.

You just need to start seeing more of what matters and build from there.