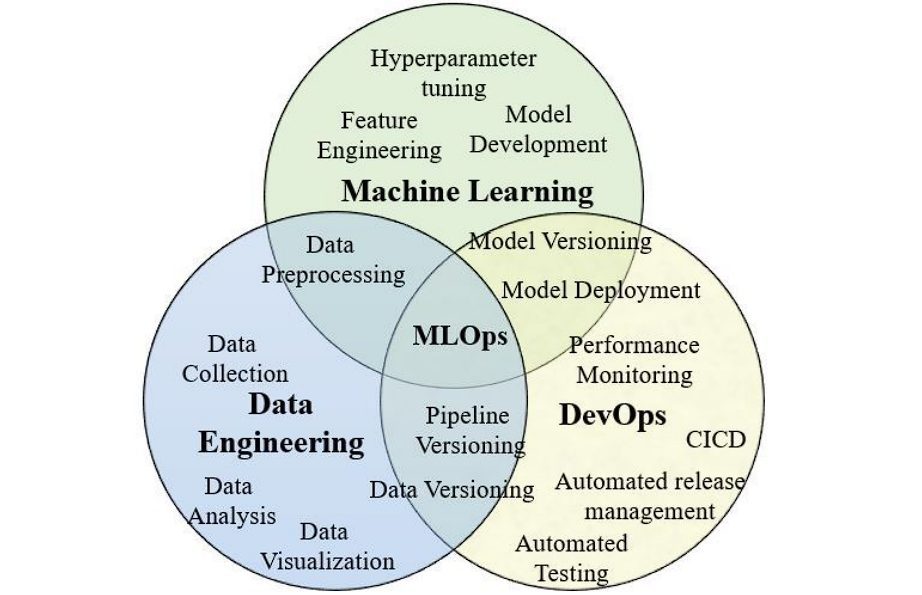

Machine Learning Operations (MLOps) is a set of practices for deploying and maintaining machine learning (ML) models in production efficiently and reliably. It is essential for managing the lifecycle of end-to-end machine learning services and applications: it seeks to automate and improve the quality of production ML models while connecting technical and business requirements.

What is MLOps?

Machine learning operations, also known as MLOps or ML Ops, is a set of practices for delivering machine learning (ML) models through repeatable and efficient workflows.

Just as DevOps is necessary for the software development lifecycle (SDLC), MLOps is essential for the continuous delivery of high-performing ML-based apps. It accounts for the unique needs of ML by defining a new lifecycle that runs alongside SDLC and CI/CD processes, producing a more effective workflow and better results for ML.

MLOps consists of all the capabilities that IT, data science, and product teams need to apply, run, govern, and protect machine learning models in production at scale. Moreover, MLOps tools are used to address the components listed below, which together result in an automated ML pipeline that significantly increases your ML performance and ROI:

- Infrastructure Management

- Machine Learning Model Serving

- Governance

- Model version control

- Security

- Model service catalog(s) for all models in production

- Monitoring

- Connections with all data sources and high-end tools for model compliance, design, development, deployment, training, and infrastructure

Why Is MLOps Needed?

ML shows its true potential once models reach production. However, companies often underestimate the complexity of moving ML to production. They dedicate most of their resources to ML development and treat ML like a standard software tool.

The outcome? Companies fail to see optimal results from their machine learning services and products, leading to revenue losses, wasted resources, and difficulty retaining employees.

According to a report published by DataRobot, around 87% of companies struggle with long model deployment timelines, and about 64% take longer than a month to deploy a single model. These issues persist even though 86% of companies increased their ML efforts and budgets for 2021.

The truth is that ML isn’t a standard software tool. It needs its own approach, and the code is only a minor part of what makes an AI application efficient and successful. Moreover, it depends on an ever-evolving, open-loop set of software tools. Once ML models are deployed, the process has just begun. Models in production must be continuously managed, monitored, and redeployed in response to changing data signals to ensure maximum performance.
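One way to make "changing data signals" concrete is to compare the distribution of a feature in production against its distribution at training time. The sketch below uses the Population Stability Index (PSI), which is a technique chosen here for illustration and not one the article prescribes. The function names, the bin count, and the 0.2 threshold are all assumptions; a real monitoring setup would tune them per feature.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature; larger means more drift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so production values outside the training range
            # fall into the first or last bucket.
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1
        # eps keeps empty buckets from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref_shares = bucket_shares(reference)
    cur_shares = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


def needs_retraining(psi: float, threshold: float = 0.2) -> bool:
    # 0.2 is a commonly quoted rule of thumb, not a universal constant.
    return psi > threshold


# Hypothetical data: training-time values vs. shifted production values.
training_sample = [i / 100 for i in range(100)]
production_sample = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(training_sample, production_sample)
print(f"PSI = {psi:.3f}, retrain: {needs_retraining(psi)}")
```

A check like this would typically run on a schedule for each monitored feature, with the result feeding an alert or a retraining job.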

Major Issues that MLOps Addresses

Managing complex systems at scale is challenging, and many things need to be taken care of. Below are the major bottlenecks that MLOps engineers have to deal with:

Risk Assessment

There is a lot of discussion around the complex nature of systems based on machine learning. ML/AI models often behave differently from how they were originally intended to. Studying and evaluating the risk and cost of such behavior is a crucial and tedious task. For example, the cost of an irrelevant video recommendation on YouTube is far lower than the cost of falsely accusing people of illegal activities, blocking their accounts, or rejecting their loan applications.
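The comparison above can be turned into a rough calculation: weight each kind of error by how often it happens and what it costs. The following is a minimal sketch under stated assumptions. The `ErrorCosts` class, the function name, and every number in it are hypothetical and only serve to show how the same error rates lead to very different stakes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorCosts:
    """Hypothetical per-error costs, in whatever unit the business uses."""
    false_positive: float
    false_negative: float


def expected_cost_per_prediction(
    false_positive_rate: float,
    false_negative_rate: float,
    positive_share: float,
    costs: ErrorCosts,
) -> float:
    """Average cost of errors per prediction.

    false_positive_rate: share of true negatives the model flags as positive.
    false_negative_rate: share of true positives the model misses.
    positive_share: fraction of all cases that are truly positive.
    """
    negative_share = 1.0 - positive_share
    return (
        false_positive_rate * negative_share * costs.false_positive
        + false_negative_rate * positive_share * costs.false_negative
    )


# Illustrative numbers only: identical error rates, very different stakes.
recommender = ErrorCosts(false_positive=0.01, false_negative=0.01)
loan_screening = ErrorCosts(false_positive=500.0, false_negative=2000.0)

for name, costs in [("recommender", recommender), ("loan screening", loan_screening)]:
    cost = expected_cost_per_prediction(0.05, 0.10, 0.2, costs)
    print(f"{name}: {cost:.4f} per prediction")
```

Even a crude estimate like this helps decide how much validation, monitoring, and human review a given model deserves before it goes live.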

Today, companies struggle to find enough data scientists who specialize in building and deploying applications based on emerging technology. This has created demand for a new role, the ML engineer, who bridges the business and technical sides. It is a sweet spot at the crossroads of DevOps and data science.

Communication Gaps

A lack of communication between technical and business teams, who often struggle to find a common language, is a frequent reason why large-scale machine learning projects fail. Modern ML systems also require cross-domain expertise, including deep knowledge of IoT, edge, cloud, and web development.

Changing Business Goals

Continuously changing data raises many concerns, including maintaining the performance standards of the AI model and ensuring AI governance. It’s challenging to keep model training aligned with evolving business goals and advancing technology.

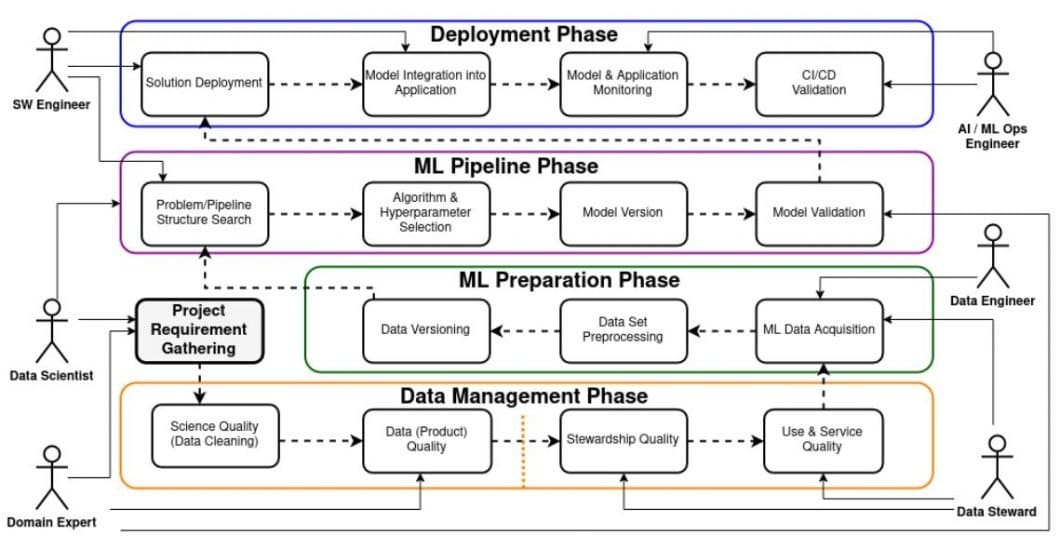

How MLOps Works

MLOps provides the foundation for the ML lifecycle by connecting the machine learning code and all other components needed for ML deployment. This includes:

- Model service catalog(s)

- Training data

- Production data

- Model training

- Model Serving and MLOps Pipeline

- Machine Learning workflow

- Security and encryption

- Model version control

- Model validation

- Infrastructure management

- Explainability and interpretability

- Governance

- Monitoring

- Diagnostics

The purpose of MLOps is to connect these core components, data sources, and tools to achieve compliance, security, and scalability across large-scale machine learning systems and applications.
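To show how a few of these components fit together, here is a minimal sketch that combines a model catalog, model version control, and model validation in one place. It is an in-memory illustration, not a description of any particular MLOps product. The class names, the `auc` metric, and the 0.8 threshold are assumptions made for the example.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_sha256: str       # fingerprint of the serialized model
    metrics: Dict[str, float]  # validation metrics recorded at registration


@dataclass
class ModelRegistry:
    """In-memory stand-in for a model catalog with version control."""
    _versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)
    _production: Dict[str, int] = field(default_factory=dict)

    def register(self, name: str, artifact: bytes, metrics: Dict[str, float]) -> ModelVersion:
        # Versions are numbered per model name, starting at 1.
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(
            name=name,
            version=len(history) + 1,
            artifact_sha256=hashlib.sha256(artifact).hexdigest(),
            metrics=dict(metrics),
        )
        history.append(entry)
        return entry

    def promote(self, name: str, version: int, metric: str, minimum: float) -> bool:
        """Validation gate: promote only if the metric meets the minimum."""
        entry = self._versions[name][version - 1]
        if entry.metrics.get(metric, float("-inf")) < minimum:
            return False
        self._production[name] = version
        return True

    def production_version(self, name: str) -> Optional[int]:
        return self._production.get(name)


# Hypothetical usage: two versions of a model, only one passes the gate.
registry = ModelRegistry()
v1 = registry.register("churn", b"weights-a", {"auc": 0.81})
v2 = registry.register("churn", b"weights-b", {"auc": 0.74})

print(registry.promote("churn", v1.version, "auc", 0.8))
print(registry.promote("churn", v2.version, "auc", 0.8))
print(registry.production_version("churn"))
```

A production system would persist this state and attach the training data reference, code revision, and approval history to each version, but the core idea is the same: every deployed model is traceable to a specific, validated artifact.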

Benefits of MLOps

Among the many benefits of leveraging ML, a few are directly tied to an organization’s ability to stay relevant and grow in a technology- and data-driven world. As underlined by Geniusee, most professionals agree that the benefits of MLOps include:

- Easy implementation of high-accuracy AI models in any region

- Machine learning resource management system and control

- Rapid innovation through robust ML lifecycle management

- Efficient management of the entire machine learning lifecycle

- Scalability and development of reproducible models and workflows

From data analysis and processing to monitoring, resiliency, auditing, scalability, and compliance, MLOps done well is one of the most critical disciplines an organization can have. As a result, software releases deliver more value to end users, with better quality and performance over time.

Wrapping Up: How to Tell If Your Company Needs MLOps

Wondering if your business needs MLOps? Here are a few clear signs:

- #1 – Complexity: It’s becoming challenging to maintain the different languages, software tools, libraries, and frameworks you use for data analytics and data science.

- #2 – Model Management: You have a lot of models in your catalog but cannot monitor them all. Model versioning is either manual or nonexistent.

- #3 – Cloud and Edge AI: You’re working on an ML model in an Edge computing or hybrid cloud environment, including on-premises, multi-cloud, hybrid cloud, or distributed cloud.

- #4 – Time-to-Market: You’ve increased your investment in ML, but newer models are taking too long to deploy to production and roll out to distributed devices.

- #6 – Compliance and Risk Management: You face external legislative requirements for your ML efforts.

- #7 – Multiple Workloads: You’re dealing with different data workloads, ranging from real-time inference and legacy batch to cron jobs.

- #8 – IT Requirements: Your IT team is asked to address internal needs, including performance metrics, cost controls, and security.

- #9 – DevOps Coverage: Your DevOps team is tracking the performance of your regular apps but not your ML-based apps. Or ML apps are included, but the tracking is still basic.

What’s Next?

If you are looking for a full-stack solution for computer vision applications, check out our end-to-end computer vision platform, Viso Suite. It provides automated ML model deployment at the click of a button, edge device management, and AI model management with an intuitive GUI.
