Embedded systems are specialized computer systems, usually a combination of processors, memory, and input/output peripheral devices. They are designed to perform dedicated computing operations within a larger mechanical or electronic system. They are widely used in automotive, healthcare, consumer electronics, and aerospace applications, where they provide precision, efficiency, and real-time capabilities.

Embedded system projects and applications in computer vision

What are embedded systems?

As discussed earlier, an embedded system is a computer system that is designed to perform a dedicated function within a larger mechanical or electronic system. It combines a processor, memory, and I/O peripherals to control the physical operations of the devices.

Modern embedded systems commonly utilize microcontrollers that combine processors, memory, and interfaces into a single chip. More complex systems may employ microprocessors with separate memory and interfaces.

Technically, the processors used in embedded systems range from general-purpose designs to application-specific or custom-designed architectures. One of the most common types of processors used for dedicated computations is the Digital Signal Processor (DSP).

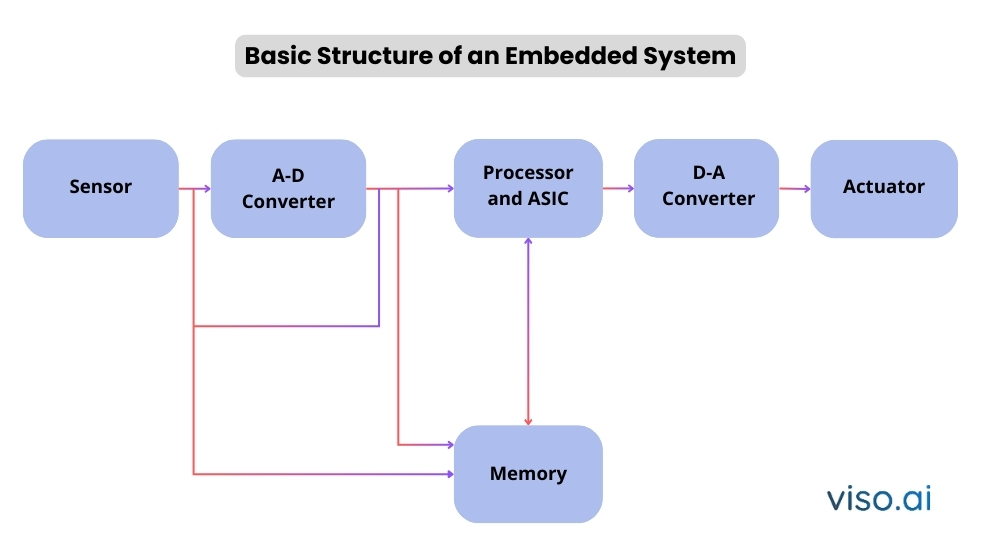

Basic structure of an embedded system

A typical embedded system is built from the following blocks:

Sensor

A sensor detects and measures changes in the surrounding environment. It takes input from the physical environment and converts it into electrical signals, which are then fed into an analog-to-digital (A-D) converter for further processing.

A-D converter

Then, the analog-to-digital converter transforms the analog signal from the sensor into a digital signal for the processor to interpret.
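The conversion step can be illustrated with a small model. This is a minimal sketch of an ideal converter, not the behavior of any specific part: the function name `adc_read`, the 3.3 V reference, and the 10-bit resolution are all assumptions chosen for illustration.

```python
def adc_read(voltage: float, v_ref: float = 3.3, bits: int = 10) -> int:
    """Quantize an analog voltage into an unsigned integer code (ideal ADC model).

    v_ref and bits are assumed example values, not taken from a real datasheet.
    """
    max_code = (1 << bits) - 1                 # highest code, 1023 for 10 bits
    clamped = min(max(voltage, 0.0), v_ref)    # out-of-range inputs saturate
    return round(clamped / v_ref * max_code)   # scale to the code range


def lsb_size(v_ref: float = 3.3, bits: int = 10) -> float:
    """Smallest voltage step the converter can distinguish."""
    return v_ref / ((1 << bits) - 1)
```

With these assumed values, one step corresponds to roughly 3.2 mV, so any change in the sensor signal smaller than that is lost during conversion.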

Processors and ASICs

The processor evaluates the digital signal data. It calculates the output and stores the processed data in the device’s memory. Application-Specific Integrated Circuits (ASICs) are chips designed for one particular task and can perform specialized computations alongside or in place of a general-purpose processor.

D-A converter

The processed digital signal is then fed into the digital-to-analog converter. A digital-to-analog converter converts the processor’s digital data back into analog form.

Actuator

Actuators are devices that convert electrical signals into mechanical motion. An actuator produces force, torque, or displacement when an electrical, pneumatic, or hydraulic input is supplied to it. In a closed-loop design, the processed output is compared with the desired result, and the actuator carries out the resulting action to control the system.
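The path from a digitized reading to an actuator signal can be sketched as follows. This is an illustrative model only: it assumes a simple proportional rule for the comparison with the desired value, and the names `compute_drive_code`, `dac_write`, and `control_step`, as well as the constants, are hypothetical.

```python
V_REF = 3.3       # assumed D-A converter reference voltage in volts
MAX_CODE = 1023   # assumed 10-bit converter range
GAIN = 4          # hypothetical proportional gain


def compute_drive_code(sensor_code: int, setpoint_code: int) -> int:
    """Processor step: compare the reading with the setpoint and pick a drive level."""
    error = setpoint_code - sensor_code
    return max(0, min(MAX_CODE, GAIN * error))   # clamp to the converter's range


def dac_write(code: int) -> float:
    """Ideal D-A conversion: map a digital code back to an analog voltage."""
    return code / MAX_CODE * V_REF


def control_step(sensor_code: int, setpoint_code: int) -> float:
    """One pass through processor and D-A converter; returns the actuator voltage."""
    return dac_write(compute_drive_code(sensor_code, setpoint_code))
```

In this sketch a reading at or above the setpoint yields 0 V (actuator off), and the drive voltage grows with the error until it saturates at the reference voltage.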

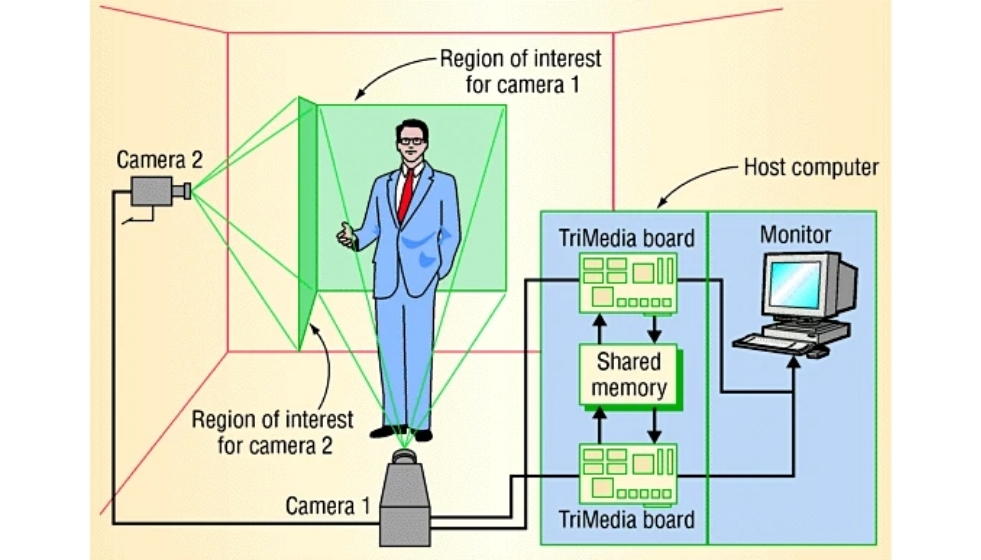

Embedded systems in computer vision: applications and benefits

Computer vision processes large amounts of visual data in real time. Embedded systems excel in such applications because they can be optimized for these processing tasks. By integrating sensors, cameras, and processing units, they support efficient image acquisition, data processing, and execution of vision algorithms. Their real-time processing abilities make them well suited to critical applications in robotics, surveillance, autonomous vehicles, and industrial automation.

For example, embedded systems can use hardware accelerators such as Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs) to execute heavy computational tasks. GPUs in particular speed up complex vision algorithms such as convolutional neural networks (CNNs).

In addition, when an embedded system integrates peripherals such as LiDAR sensors and IMUs (Inertial Measurement Units), it can enrich vision data with depth and motion information, which supports more robust decision-making.

Key components in CV-based embedded system projects

Computer vision relies heavily on specialized hardware and software components to process and interpret visual data. This is where embedded systems come into play, in the form of processors, controllers, sensors, and other components.

Here’s a detailed look at the key components:

Hardware components

Cameras and image sensors

These sensors are the primary devices for capturing images and video data. Image sensors convert optical information into electrical signals for processing.

Processors

High-speed processors can run and execute the CV algorithms needed for image analysis and decision-making. Examples include General-Purpose Processors (GPPs), Microcontroller Units (MCUs), or more specialized options like Graphics Processing Units (GPUs) and Field-Programmable Gate Arrays (FPGAs).

Memory

High-speed memory modules store the algorithms and temporary data needed for image processing tasks. This might include RAM for immediate data access and flash memory for longer-term storage.
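A quick calculation shows why memory matters for image processing. The sketch below estimates the RAM needed for uncompressed frames; the function names and the example resolutions are assumptions for illustration, and real pipelines may use other pixel formats or compression.

```python
def frame_bytes(width: int, height: int, channels: int = 3,
                bytes_per_channel: int = 1) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * channels * bytes_per_channel


def buffer_bytes(width: int, height: int, n_frames: int, channels: int = 3) -> int:
    """RAM needed to hold n_frames uncompressed frames at once."""
    return n_frames * frame_bytes(width, height, channels)


if __name__ == "__main__":
    # A 1920x1080 RGB frame at 8 bits per channel
    print(frame_bytes(1920, 1080))               # 6220800 bytes, about 5.9 MiB
    # A 640x480 grayscale frame
    print(frame_bytes(640, 480, channels=1))     # 307200 bytes
```

A single Full HD color frame would not fit in the on-chip RAM of a small microcontroller, which is one reason simple MCUs tend to be limited to low-resolution or grayscale tasks.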

Connectivity modules

Ethernet and Wi-Fi modules enable the system to communicate with other devices or networks. Connectivity modules are crucial for applications like real-time surveillance or autonomous vehicles.

Power supply

A power supply delivers electric power to an electrical load. Efficient and reliable power sources are crucial for computer vision systems, especially those deployed in remote or autonomous settings. Options include batteries and solar (photovoltaic) cells.

Software components

Operating system

An operating system (OS) manages the memory and processes of a computer system, as well as its software and hardware resources. For CV-based embedded projects with strict timing requirements, a real-time operating system (RTOS) is often the best fit, because an RTOS schedules tasks so that they complete within precise, predictable time limits.
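The idea of running work within precise timing can be illustrated with a fixed-period loop that counts missed deadlines. This is only a sketch of the pattern: Python on a general-purpose OS gives no real-time guarantees, and the function name `run_periodic` and its parameters are hypothetical rather than part of any RTOS API.

```python
import time


def run_periodic(task, period_s, iterations,
                 clock=time.monotonic, sleep=time.sleep):
    """Call task() once per period and return how many deadlines were missed.

    clock and sleep are injectable so the loop can be tested with a fake clock.
    """
    missed = 0
    next_release = clock()
    for _ in range(iterations):
        task()
        next_release += period_s            # fixed schedule, so errors do not accumulate
        remaining = next_release - clock()
        if remaining > 0:
            sleep(remaining)                # finished early: wait for the next release
        else:
            missed += 1                     # task overran its period
    return missed
```

For a 30 fps camera, for example, `period_s` would be about 0.033 s, and every frame whose processing takes longer than that counts as a missed deadline.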

Computer vision algorithms

Algorithms are sets of procedures that enable computer systems to interpret and process visual data. They perform tasks such as object detection, classification, and object tracking.

Data processing libraries

Libraries are collections of pre-built, tested code. For example, OpenCV provides pre-built functions for various image processing operations. Using such optimized library routines speeds up both development and processing.
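To show the kind of operation such a library wraps, here is a plain-Python 3x3 filter applied with a Sobel kernel for edge detection. It is a teaching sketch under simplifying assumptions (images as nested lists, borders left at zero); the name `filter3x3` is hypothetical, and an optimized library routine would be used in practice instead of these loops.

```python
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]   # responds to horizontal changes in brightness


def filter3x3(image, kernel):
    """Slide a 3x3 kernel over a 2D list of pixel values (border pixels stay 0)."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(
                kernel[j][i] * image[y + j - 1][x + i - 1]
                for j in range(3)
                for i in range(3)
            )
    return out
```

Flat regions produce zeros, while a jump in brightness between neighboring columns produces a large response, which is the basis of edge detection.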

These components work together to allow embedded systems in computer vision projects to perform complex visual tasks. They process data efficiently and react to environmental cues in real time.

Real-world examples of embedded system projects

Smart surveillance cameras

Surveillance cameras send real-time recordings to monitoring stations. Law enforcement and government agencies use intelligent surveillance systems to detect, prevent, and investigate crimes. These systems also help detect unauthorized intrusions and monitor activity in public, private, or corporate facilities. The cameras use real-time video processing to recognize faces, detect motion, and classify objects, and they are typically low-power, durable devices.

These cameras often use YOLO (You Only Look Once) or SSD (Single Shot MultiBox Detector) for object detection. Both are deep learning object detectors whose inference has to run on capable embedded hardware. Embedded boards such as the NVIDIA Jetson Nano can process the video from these cameras efficiently.
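Detectors of this kind typically output many overlapping candidate boxes, which are then filtered with non-maximum suppression (NMS) based on intersection over union (IoU). The sketch below shows that post-processing step in plain Python; the box format `(x1, y1, x2, y2)`, the function names, and the 0.5 threshold are assumptions, not values from a specific YOLO or SSD implementation.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Greedy NMS over (box, score) pairs; returns kept detections, best first."""
    kept = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # keep a box only if it does not overlap a higher-scoring kept box too much
        if all(iou(box, k[0]) <= iou_threshold for k in kept):
            kept.append((box, score))
    return kept
```

On an embedded board this step runs once per frame after the neural network, so keeping it cheap helps the whole pipeline stay within its time budget.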

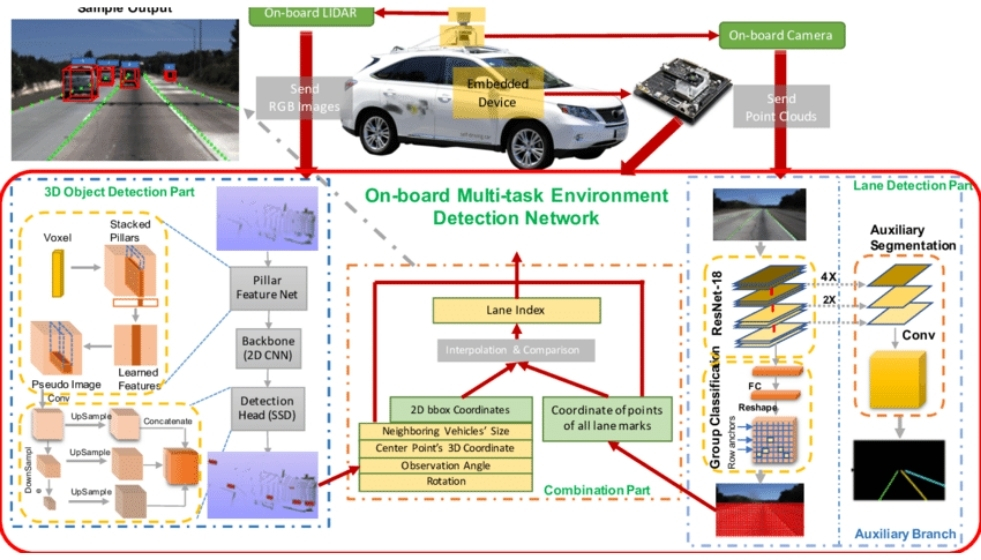

Autonomous vehicle navigation

An autonomous vehicle senses its environment and makes driving decisions without human intervention, and embedded systems are central to this. Self-driving cars carry AI-powered embedded software and hardware, such as processors, controllers, and sensors, for lane detection, obstacle avoidance, traffic sign recognition, and more. They are equipped with stereo cameras, LiDAR, and radar sensors for 3D mapping and object localization.

Advanced CV-based embedded platforms like NVIDIA Xavier or Intel Movidius Myriad X enable real-time processing of deep learning algorithms. Faster R-CNN and semantic segmentation models are commonly utilized.

Similarly, high-performance modules like NVIDIA Jetson or Google Coral power this hardware. These embedded processors enable the real-time data processing and decision-making that autonomous vehicles need for safe navigation.

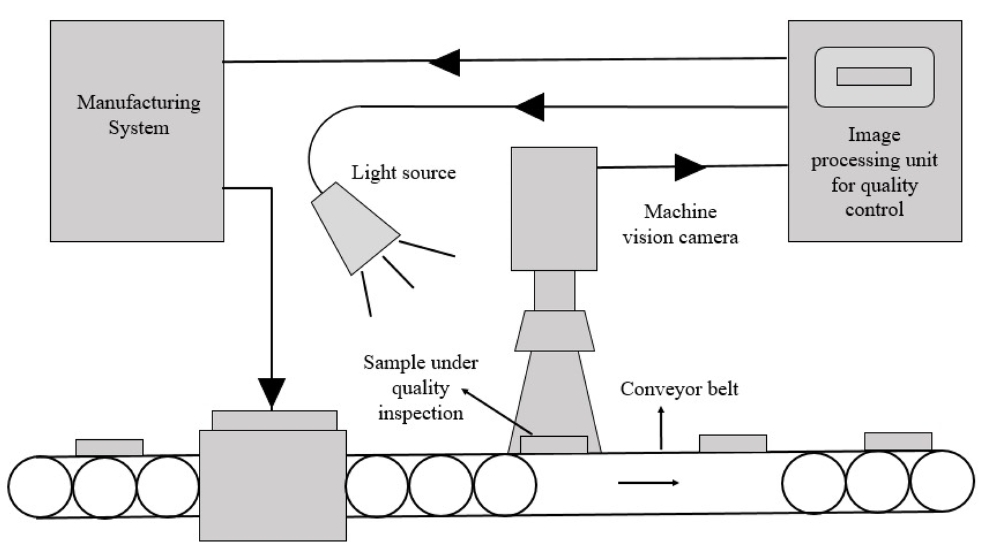

Industrial quality control

Quality control helps a company meet its customers’ demands, so it is paramount in every industrial facility. Embedded vision systems can inspect products for defects and inconsistencies: they capture high-resolution images on the assembly line and then analyze them for manufacturing defects and nonconformities. Industrial robots are a prime example. Such systems also reduce human error in manufacturing and increase efficiency and productivity.

High-speed cameras paired with industrial-grade FPGAs in industrial robots can process images at millisecond intervals. These systems can also integrate with PLCs (Programmable Logic Controllers) to automate most of their processes.
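One simple way to picture automated inspection is comparison against a known-good reference image. The sketch below is a deliberately basic illustration of that idea, not the method used by any particular inspection system; the function names, the tolerance, and the 1% pass threshold are hypothetical.

```python
def defect_ratio(image, reference, tolerance=10):
    """Fraction of pixels that differ from the reference by more than tolerance."""
    total = 0
    bad = 0
    for row, ref_row in zip(image, reference):
        for pixel, ref_pixel in zip(row, ref_row):
            total += 1
            if abs(pixel - ref_pixel) > tolerance:
                bad += 1
    return bad / total if total else 0.0


def passes_inspection(image, reference, tolerance=10, max_defect_ratio=0.01):
    """Accept the part if few enough pixels deviate from the reference."""
    return defect_ratio(image, reference, tolerance) <= max_defect_ratio
```

The pass/fail result is the kind of signal that could be handed to a PLC to divert a rejected part from the line.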

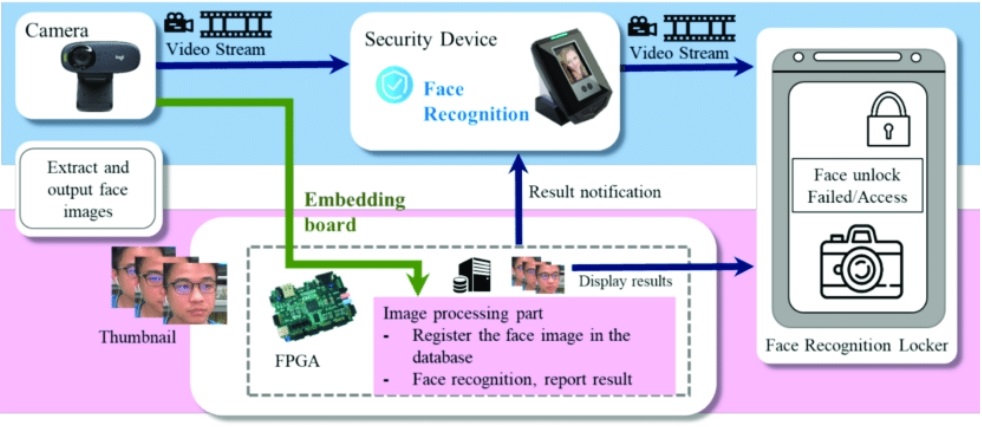

Facial recognition systems

Facial recognition systems in smartphones and access control systems are another important application of embedded systems. They use compact processors, such as the ARM Cortex-A53, to extract facial features using Haar cascades or neural network models. Variable lighting conditions make the task much harder, so many systems add intelligent sensors and depth cameras to improve accuracy. This robustness is equally crucial for biometric applications.
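Haar-like features are cheap enough for compact processors because they are computed from an integral image, where the sum of any rectangle takes only four lookups. The sketch below shows that building block in plain Python; the function names and the nested-list image format are assumptions, and a full cascade classifier involves many such features plus trained thresholds that are not shown here.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and column at the top and left."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of pixels in the rectangle with top-left corner (x, y) and size w x h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Left half minus right half of a window (w is assumed to be even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

Because each feature costs a constant number of additions regardless of window size, thousands of them can be evaluated per frame on modest hardware.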

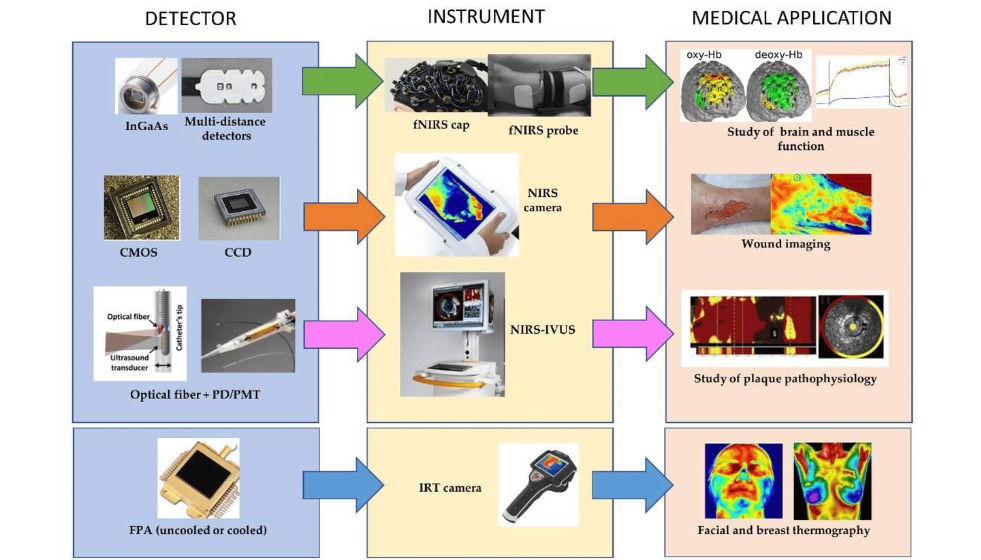

Medical imaging devices

Medical imaging devices are crucial tools in modern medicine, and intelligent medical equipment is transforming the industry. Embedded processors and controllers are what drive these devices, which support healthcare professionals in clinical analysis and medical intervention.

For example, X-ray and CT machines use embedded systems to control radiation emissions. MRI devices, which rely on magnetic fields and radio waves rather than ionizing radiation, leverage digital signal processors (DSPs) to turn high-frequency signals into detailed images. These devices capture precise and accurate images of internal body structures. Embedded systems drive innovation in the medical sector.

Medical imaging embedded devices

Value offered by embedded systems in computer vision

Embedded systems power computer vision models. When integrated with a computer vision model, they offer many benefits, from increased processing speed to improved accuracy and reliability.

Let’s discuss some of the key values.

Real-time processing

The number one benefit is real-time processing. Embedded systems process visual data with very low latency, which supports real-time decision-making in applications like autonomous vehicles and surveillance.

Compact and integrated design

Many embedded systems are built around a System-on-a-Chip (SoC), which places processors, memory, and interfaces on one chip. Together with sensors and software, this forms a compact unit that reduces space and hardware requirements. Compactness is especially important in drones and mobile devices, where space and weight are limited.

Energy efficiency

Devices that consume excessive energy are unattractive to both buyers and manufacturers. Embedded systems are designed to be low-power devices that need minimal energy to operate, which matters most where power sources are limited or absent. This also reduces the energy footprint of large-scale deployments.
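A rough battery-life estimate shows why low power draw matters in deployments without mains power. The sketch below uses the simple relation of capacity divided by average current and a duty-cycle model; the function names and all numbers are illustrative assumptions, and it ignores effects such as battery aging, temperature, and converter losses.

```python
def average_current_ma(active_ma: float, sleep_ma: float, duty_cycle: float) -> float:
    """Average draw when the device is active for a fraction duty_cycle of the time."""
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma


def battery_life_hours(capacity_mah: float, current_ma: float) -> float:
    """Idealized runtime: battery capacity divided by average current."""
    return capacity_mah / current_ma


if __name__ == "__main__":
    # Hypothetical camera node: 200 mA while processing, 2 mA asleep, active 10% of the time
    avg = average_current_ma(200.0, 2.0, 0.10)
    print(f"average draw: {avg:.1f} mA")
    print(f"runtime on 2000 mAh: {battery_life_hours(2000.0, avg):.0f} h")
```

Under these assumed numbers, duty cycling extends the runtime from about 10 hours of continuous operation to several days, which is the kind of trade-off designers make for remote vision nodes.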

Customizability

Designers can customize embedded systems to meet the precise requirements of particular applications. From sensor selection to custom software algorithms, all kinds of customization are possible. This customizability allows developers to optimize performance and functionality specific to the task.

Scalability

Embedded systems are scalable, thanks in part to their SoC-based design. Whether scaling up for industrial automation or scaling down for consumer electronics such as small Internet of Things (IoT) devices, these systems can adapt to a broad range of demands.

Real-world interaction

Embedded sensors and processors enhance real-world interactions. They enable smart devices to perceive, analyze, and respond intelligently to visual inputs from the physical world. This interaction is fundamental in applications such as autonomous driving and interactive retail displays.

FAQs

Embedded systems provide the computational platform that processes and analyzes image data. They integrate sensors, such as digital cameras, with microcontrollers or Systems on Chip (SoCs), which execute real-time computer vision tasks like object detection, face recognition, or edge detection.

Microcontrollers are low-cost and energy-efficient, used for basic tasks involving simple image processing.

Field Programmable Gate Arrays (FPGAs) are highly customizable, provide parallel processing, and are commonly used for very compute-intensive jobs, including deep learning inference.

Embedded systems make use of hardware acceleration, such as GPUs, TPUs, and FPGAs, along with lightweight algorithms to process high-dimensional data efficiently under strict limits on power and memory.

Some of the challenges in this context are resource constraints, including CPU, memory, and power; ensuring real-time performance; and maintaining the accuracy of vision models despite limited compute.