With the growing demand for real-time deep learning workloads, today’s standard cloud-based Artificial Intelligence approach cannot always stay within bandwidth limits, ensure data privacy, or support low-latency applications. Edge Computing helps by moving AI tasks to the edge. As a result, recent Edge AI trends drive the need for specialized AI hardware for on-device machine learning inference.

Machine Learning Inference at the Edge

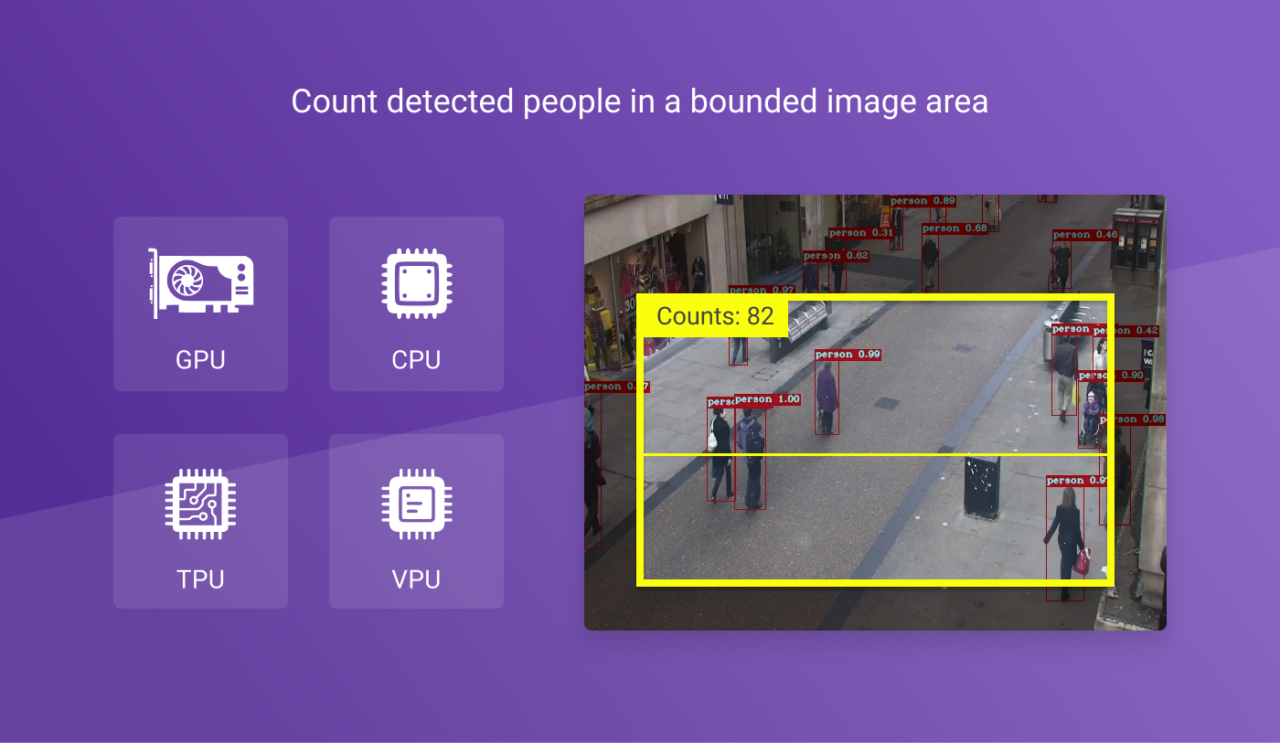

AI inference is the process of taking a Neural Network Model, generally made with deep learning, and then deploying it onto a computing device (Edge Intelligence). This device will then process incoming data (usually images or video) to look for and identify whatever pattern it has been trained to recognize.
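The inference step described above can be sketched without any framework. This is only an illustration: the labels, weights, and the `classify` function are hypothetical stand-ins for a trained network, not a real model.

```python
import math

# Hypothetical stand-in for a trained model: one linear layer, two classes.
LABELS = ["background", "person"]
WEIGHTS = [[-1.0, -0.5, -1.5], [1.0, 0.5, 1.5]]  # one row per class
BIASES = [0.5, -0.5]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(pixels):
    """Run one inference: preprocess, forward pass, pick the top label."""
    features = [p / 255.0 for p in pixels]  # scale 8-bit pixels to [0, 1]
    scores = [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(WEIGHTS, BIASES)
    ]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


label, confidence = classify([255, 255, 255])
print(label, round(confidence, 3))
```

A real deployment replaces the hand-written weights with a trained network and the three-pixel input with camera frames, but the preprocess, forward pass, and postprocess stages stay the same.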

While deep learning inference can run in the cloud, the need for Edge AI is growing rapidly due to bandwidth constraints, privacy concerns, and the need for real-time processing.

Installing a low-power computer with an integrated AI inference accelerator close to the source of data results in much faster response times and more efficient computation. In addition, it requires less internet bandwidth and graphics power. Compared to cloud inference, inference at the edge can potentially reduce the time for a result from a few seconds to a fraction of a second.
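The latency argument can be made concrete with a simple budget. The stage names and all millisecond values below are illustrative assumptions, not measurements; substitute figures from your own setup.

```python
def total_latency_ms(capture, network_round_trip, queueing, inference):
    """Sum the stages between capturing a frame and having a result."""
    return capture + network_round_trip + queueing + inference


# Illustrative numbers only (assumptions, not benchmarks).
cloud_ms = total_latency_ms(
    capture=30, network_round_trip=1500, queueing=450, inference=20
)
edge_ms = total_latency_ms(
    capture=30, network_round_trip=0, queueing=0, inference=60
)

print(f"cloud: {cloud_ms} ms, edge: {edge_ms} ms")
```

Even when the edge accelerator is assumed to be slower per inference than a data-center GPU, removing the network round trip and server-side queueing dominates the total in this sketch.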

The Need for Specialized AI Hardware

Today, enterprises extend analytics and business intelligence closer to the points where data generation occurs. Edge intelligence solutions place the computing infrastructure closer to the source of incoming data. This also places them closer to the systems and people who need to make data-driven decisions in real time. In short, the AI model is trained in the cloud and deployed on the edge device.

Computer vision workloads are computationally demanding, and the underlying tasks are highly data-intensive.
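To get a feel for how data-intensive video is, the following sketch computes the uncompressed data rate of a single camera stream. The resolution, frame rate, and 3 bytes per pixel (8-bit RGB) are assumptions chosen for illustration.

```python
def raw_video_mbit_per_s(width, height, fps, bytes_per_pixel=3):
    """Uncompressed data rate of a video stream in megabits per second."""
    bits_per_frame = width * height * bytes_per_pixel * 8
    return bits_per_frame * fps / 1_000_000


# One Full HD camera at 30 frames per second, 8-bit RGB (assumed values).
rate = raw_video_mbit_per_s(1920, 1080, 30)
print(f"{rate:.1f} Mbit/s")
```

Under these assumptions a single stream produces roughly 1.5 Gbit/s of raw pixels. Video compression reduces what travels over a network, but the inference hardware still has to process the decoded frames.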

Edge Device Advantages

- Speed and performance. By processing data closer to the source, edge computing greatly reduces latency. The result is higher speeds, enabling real-time use cases.

- Better security practices. Critical data does not need to be transmitted across different systems. User access to the edge device can be very restricted.

- Scalability. Edge devices are endpoints of an AI system that can grow without performance limitations. This allows starting small and with minimal costs.

- Reliability. Edge computing distributes processing, storage, and applications across various devices, making it difficult for any single disruption to take down the network (cyberattacks, DDoS attacks, power outages, etc.).

- Offline capabilities. An edge-based system can operate even with limited network connectivity, a crucial factor for mission-critical systems.

- Better data management. Fewer bottlenecks through distributed management of edge nodes. Only processed data of high quality is sent to the cloud.

- Privacy. Sensitive data sets can be processed locally and in real time without streaming them to the cloud.
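The data management and privacy points above can be sketched as a small filter that runs on the edge node: raw frames stay local, and only a compact summary is uploaded. The detection format, the `summarize_detections` helper, and the 0.6 threshold are hypothetical choices for this example.

```python
import json


def summarize_detections(detections, min_score=0.6):
    """Keep confident detections and reduce them to a compact event."""
    kept = [d for d in detections if d["score"] >= min_score]
    counts = {}
    for d in kept:
        counts[d["label"]] = counts.get(d["label"], 0) + 1
    top = max((d["score"] for d in kept), default=0.0)
    return {"counts": counts, "max_score": top}


# Hypothetical output of an on-device detector for one frame.
frame_detections = [
    {"label": "person", "score": 0.91},
    {"label": "person", "score": 0.42},  # below threshold, dropped locally
    {"label": "car", "score": 0.77},
]

event = summarize_detections(frame_detections)
payload = json.dumps(event).encode("utf-8")  # the only data that would be uploaded
print(event, len(payload), "bytes")
```

The uploaded payload is a few dozen bytes and contains no image data, which is the sense in which only processed data leaves the device.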

AI accelerators can greatly increase the on-device inference or execution speed of an AI model and can also be used to execute special AI-based tasks that cannot be conducted on a conventional CPU.

Most Popular Edge AI Hardware Accelerators

With AI becoming a key driver of edge computing, the combination of hardware accelerators and software platforms is becoming important for running inference models. NVIDIA Jetson, Intel Movidius Myriad X, and Google Coral Edge TPU are popular options for accelerating AI at the edge.

VPU: Vision Processing Unit

Vision Processing Units allow demanding computer vision and edge computing AI workloads to be conducted with high efficiency. VPUs achieve a balance of power efficiency and compute performance.

One of the most popular examples of a VPU is the Intel Neural Compute Stick 2 (NCS 2), which is based on the Intel Movidius Myriad X VPU. Movidius Myriad X creates an architectural environment that minimizes data movement by running programmable computation strategies in parallel with workload-specific AI hardware acceleration.

The Intel Movidius Myriad X VPU is Intel’s first VPU that features the Neural Compute Engine, a dedicated hardware accelerator for deep neural network inference.

The Myriad X VPU is programmable with the Intel Distribution of the OpenVINO Toolkit. Used in conjunction with the Myriad Development Kit (MDK), custom vision, imaging, and deep neural network workloads can be implemented using preloaded development tools, neural network frameworks, and APIs.
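As a rough sketch of what programming the VPU through OpenVINO can look like, the snippet below picks a device and compiles a model with the OpenVINO Python runtime. It assumes an OpenVINO release that exposes `openvino.runtime.Core` and still ships the Myriad plugin under the device name `MYRIAD`; newer releases may not include it. The `pick_device` helper and the model path are hypothetical.

```python
def pick_device(available, preferred=("MYRIAD", "GPU", "CPU")):
    """Return the first preferred device type present in `available`.

    Entries such as "GPU.0" count as a match for "GPU".
    """
    for name in preferred:
        if any(d == name or d.startswith(name + ".") for d in available):
            return name
    raise RuntimeError(f"none of {preferred} found in {available}")


def compile_for_edge(model_path):
    """Load a model and compile it for the best available device."""
    # Imported lazily so the helper above works without OpenVINO installed.
    from openvino.runtime import Core

    core = Core()
    device = pick_device(core.available_devices)
    model = core.read_model(model_path)
    return core.compile_model(model, device)


print(pick_device(["CPU", "MYRIAD"]))
```

On a machine with the toolkit installed and a Neural Compute Stick attached, `compile_for_edge("model.xml")` (a hypothetical path to a converted model) would be expected to target the VPU and fall back to GPU or CPU otherwise.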

GPU: Graphics Processing Unit

A GPU is a specialized chip built for fast, highly parallel processing, particularly of computer graphics and images. One example of devices bringing accelerated AI performance to the edge in a power-efficient and compact form factor is the NVIDIA Jetson device family.

The NVIDIA Jetson Nano development board, for example, allows neural networks to run using the NVIDIA JetPack SDK. In addition to a 128-core GPU and a quad-core ARM CPU, it comes with Keras and TensorFlow libraries optimized for the Nano, allowing most neural network backends and frameworks to run smoothly and with little setup.

With the release of its Xe GPUs, Intel is now also tapping into the market of discrete graphics processors. The Intel Xe GPU is optimized for AI workloads and machine learning tasks while focusing on efficiency. Hence, the different versions of the Intel Xe GPU family aim to deliver high performance at lower power consumption.

TPU: Tensor Processing Unit

A TPU is specialized AI hardware that implements all the necessary control and logic to execute machine learning algorithms, typically by operating on predictive models such as Artificial Neural Networks (ANN).

The Google Coral Edge TPU is Google’s purpose-built ASIC to run AI at the edge. Google Coral is a toolkit for the edge that enables production deployments with local AI. More specifically, the on-device inference capabilities of the Coral Edge TPU power a wide range of on-device AI applications. Core advantages are very low power consumption, cost-efficiency, and offline capabilities.

Google Coral devices run models in the TensorFlow Lite format. Object Detection models (for example, YOLO or R-CNN style architectures, where a compatible TensorFlow Lite version exists) detect objects in video streams from connected cameras, and their output can feed Object Tracking tasks.
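A minimal sketch of both steps follows, under stated assumptions. The first function creates a TensorFlow Lite interpreter with the Edge TPU delegate; it assumes the `tflite_runtime` package and the Edge TPU runtime are installed, and `libedgetpu.so.1` is the library name used on Linux. The tracking part is a simple greedy intersection-over-union (IoU) matcher chosen for illustration, not a method prescribed by Coral; `iou` and `match_tracks` are hypothetical helpers.

```python
def make_edgetpu_interpreter(model_path):
    """Create a TensorFlow Lite interpreter that delegates to the Edge TPU."""
    # Imported lazily: requires tflite_runtime and the Edge TPU runtime library.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    interpreter = Interpreter(
        model_path=model_path,
        experimental_delegates=[load_delegate("libedgetpu.so.1")],
    )
    interpreter.allocate_tensors()
    return interpreter


def iou(a, b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = overlap_w * overlap_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_tracks(previous, current, min_iou=0.3):
    """Greedily link each previous box to its best-overlapping unused current box."""
    links, used = {}, set()
    for i, prev_box in enumerate(previous):
        best_j, best_score = None, min_iou
        for j, cur_box in enumerate(current):
            if j in used:
                continue
            score = iou(prev_box, cur_box)
            if score >= best_score:
                best_j, best_score = j, score
        if best_j is not None:
            links[i] = best_j
            used.add(best_j)
    return links


# Boxes from two consecutive frames (made-up coordinates).
frame_a = [(0, 0, 10, 10), (50, 50, 60, 60)]
frame_b = [(51, 50, 61, 60), (1, 0, 11, 10)]
print(match_tracks(frame_a, frame_b))
```

Greedy matching is easy to follow but can mislink objects that cross paths; production trackers usually add motion models or a global assignment step.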
