Neuromorphic engineering is an interdisciplinary field that aims to design and develop artificial neural systems. It combines principles from neuroscience, mathematics, computer science, and electrical engineering to create brain-inspired hardware and software. Applications of neuromorphic systems range from advanced artificial intelligence to low-power computing devices.

Intro to Neuromorphic Engineering

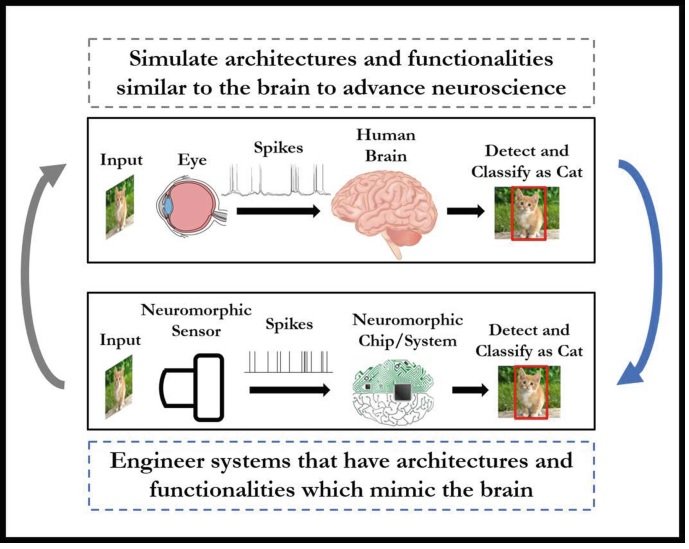

Neuromorphic engineering creates artificial neural systems that mimic biological nervous systems. It aims to design hardware and software that process information the way a human brain does. The goal is to build more efficient and adaptable computing systems that can learn from experience. Such systems could potentially solve problems that traditional computing platforms struggle with, such as pattern recognition, sensory processing, and decision-making in uncertain environments.

Many neuromorphic systems use spikes or pulses to communicate between neurons. This method is similar to how biological neurons communicate.

Core Principles of Neuromorphic Engineering

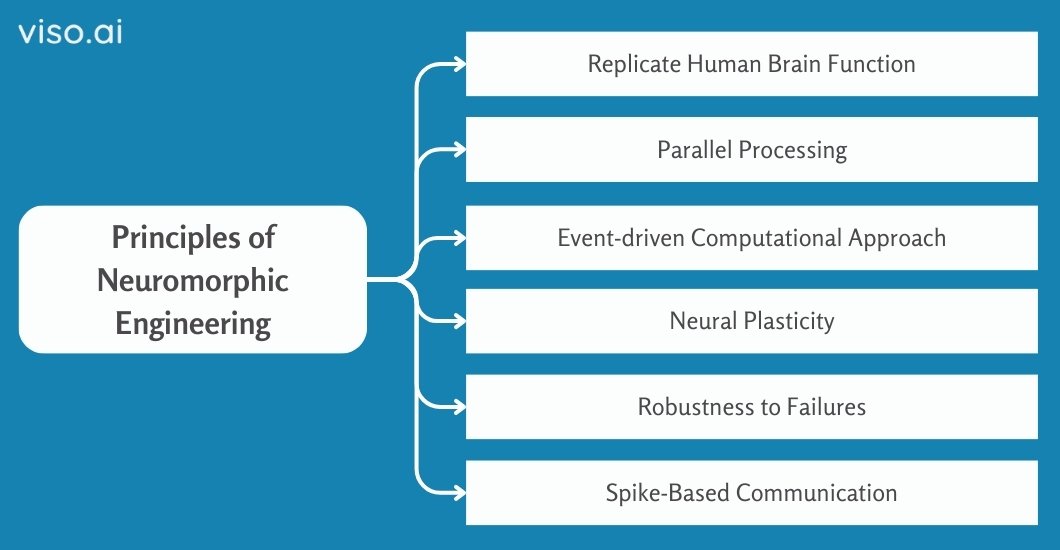

The core principle of neuromorphic engineering is to develop models that replicate the working mechanisms of biological neural networks and process information in a way similar to the human brain.

Here are some key aspects of this field:

- It creates systems that emulate neural networks and synaptic connections.

- Neuromorphic architectures perform parallel processing: they can handle many streams of information simultaneously, much as the brain does.

- They are also energy-efficient, performing computation at low power.

- Neuromorphic machines have a degree of built-in fault tolerance: they can continue operating even if some components fail.

History & Key Milestones of Development

1943

Neuroscientist Warren McCulloch and mathematician Walter Pitts introduced the first computational model of a neuron in 1943. This artificial neuron, now known as the McCulloch-Pitts neuron and often described as a “Threshold Logic Unit (TLU)” or “Linear Threshold Unit,” is the foundation of brain-inspired computing.

1948

In the 1948 report “Intelligent Machinery,” Alan Turing introduced the concept of artificial neural networks, which he called “unorganized machines,” capable of learning through a reward-and-punishment training process. The same report also anticipated ideas behind genetic algorithms.

1970s

The rise of artificial intelligence and machine learning theory helped advance the understanding of neural computation.

1989

Carver Mead coined the term “neuromorphic engineering” in the late 1980s and pioneered the use of analog VLSI techniques to emulate neural functionality.

In 1989, Mead published the book “Analog VLSI and Neural Systems,” which presented the theoretical and practical framework of neuromorphic engineering.

1991

Richard Lyon and Carver Mead created an early analog neuromorphic chip that mimics the cochlea, part of the auditory system. This electronic cochlea was built with CMOS VLSI technology using a micropower design approach.

1998

Misha Mahowald and Carver Mead constructed the Silicon Retina, an early vision chip that replicated functions of the human retina. The chip demonstrated practical uses for neuromorphic engineering at the time.

2005

The Blue Brain Project was launched at EPFL in Switzerland, in collaboration with IBM, to develop an electronic model of the brain by running detailed simulations on IBM Blue Gene supercomputers. Its goal was to build biologically detailed digital reconstructions and simulations of the mouse brain.

2006

Researchers at Georgia Tech presented a Field Programmable Neural Array. This chip features an array of programmable transistors that mimic the brain’s neurons. It was a pioneering step in silicon-based neural networks.

2014

IBM unveiled TrueNorth, one of the first large-scale neuromorphic chips. It has one million programmable neurons and 256 million synapses.

2017

Intel announced the Loihi research chip, with 130,000 neurons and 130 million synapses, focusing on energy efficiency and real-time, on-chip learning.

2019

DynapCNN: SynSense released a neuromorphic processor for spiking neural networks that supports ultra-low-power computing at sub-milliwatt power levels.

ODIN: Charlotte Frenkel presented the ODIN processor, which demonstrated high synaptic density and low energy per synaptic operation.

DYNAP-SE2: The Institute of Neuroinformatics developed a flexible and configurable mixed-signal neuromorphic chip for real-time emulation of spiking neural networks.

2021

Loihi 2: Intel launched its second-generation Loihi 2 neuromorphic research chip, with more flexible neuron dynamics and efficiency optimizations. Intel reported up to 10 times faster processing than the first-generation Loihi.

SpiNNaker 2: Technische Universität Dresden (TU Dresden) released SpiNNaker 2, a newer architecture with 153 ARM cores and improved neural simulation capabilities.

2022

Akida: BrainChip introduced this ultra-low-power neuromorphic processor for edge AI applications.

BrainScaleS 2: Heidelberg University presented an improved version of its multi-scale spiking neuromorphic system-on-chip, which uses accelerated emulation to model complex neural dynamics faster than biological real time.

ReckOn: Charlotte Frenkel demonstrated on-chip learning with the e-prop training algorithm, enabling real-time learning in an ultra-low-power architecture.

Speck: SynSense introduced a neuromorphic vision System-on-Chip for real-time, low-latency visual processing.

Xylo: SynSense launched a digital spiking neural network inference chip optimized for ultra-low power edge deployment.

2024

Spiking Neural Processor T1: Innatera unveiled a neuromorphic microcontroller System-on-Chip for real-time intelligence in power-limited and latency-critical devices.

Algorithms for Neuromorphic System Development

Spiking Neural Networks (SNNs)

Spiking Neural Networks (SNNs) are neural network models based on the function of the biological brain. Instead of passing continuous numerical values between neurons, as conventional artificial neural networks do, SNNs communicate through discrete spikes, much like real neurons. Information can be encoded in the frequency of spikes (rate coding) or in their precise timing, which makes these networks both efficient and biologically realistic.
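The idea of encoding information in spike frequency (rate coding) can be illustrated with a small Python sketch. It assumes input values normalized to [0, 1] and emits a spike at each time step with a probability proportional to the value. The helper names `rate_encode` and `decode_rate` and the `max_prob` cap are hypothetical; real SNN toolkits provide their own encoders, as well as other schemes such as timing-based codes.

```python
import random


def rate_encode(value, num_steps, max_prob=0.5, seed=None):
    """Encode a value in [0, 1] as a Bernoulli spike train.

    At each time step the neuron spikes with probability value * max_prob,
    so the expected spike count is proportional to the input value.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    rng = random.Random(seed)
    p = value * max_prob
    return [1 if rng.random() < p else 0 for _ in range(num_steps)]


def decode_rate(spikes, max_prob=0.5):
    """Estimate the encoded value from the observed firing rate."""
    return sum(spikes) / (len(spikes) * max_prob)


train = rate_encode(0.8, num_steps=10_000, seed=42)
print(sum(train), "spikes; decoded value ~", round(decode_rate(train), 2))
```

Longer spike trains give a more accurate estimate, which illustrates a trade-off of rate coding: precision costs time and spikes.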

Commonly used neuron models in Spiking Neural Networks include:

- Leaky Integrate-and-Fire (LIF) model

- Izhikevich model

- Hodgkin-Huxley model

Their event-driven design makes SNNs particularly suitable for neuromorphic computing and engineering applications. They are also well suited to tasks that involve temporal data processing or low-power requirements.
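As a concrete example, the Leaky Integrate-and-Fire model can be simulated with simple forward-Euler integration. This is a minimal sketch: the dimensionless units and the parameter values (`tau_m`, `v_thresh`, `v_reset`, `resistance`) are illustrative assumptions, not values from any particular chip or paper.

```python
def simulate_lif(input_current, dt=1.0, tau_m=20.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, resistance=1.0):
    """Simulate a leaky integrate-and-fire neuron with forward Euler.

    tau_m * dV/dt = -(V - v_rest) + resistance * I(t)
    When V reaches v_thresh, a spike is recorded and V is reset.
    Returns (voltage trace, list of time-step indices with spikes).
    """
    v = v_rest
    trace, spikes = [], []
    for step, current in enumerate(input_current):
        # Leak toward the resting potential plus drive from the input.
        v += (dt / tau_m) * (-(v - v_rest) + resistance * current)
        if v >= v_thresh:
            spikes.append(step)
            v = v_reset  # reset the membrane after the spike
        trace.append(v)
    return trace, spikes


# A strong constant input produces regular spiking; a weak one never fires.
_, strong = simulate_lif([1.5] * 200)
_, weak = simulate_lif([0.5] * 200)
print(len(strong), "spikes with strong input,", len(weak), "with weak input")
```

With these settings, an input of 1.5 repeatedly drives the membrane past the threshold, while an input of 0.5 lets the voltage settle at 0.5 without ever firing. The Izhikevich and Hodgkin-Huxley models replace this single leaky equation with richer dynamics at a higher computational cost.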

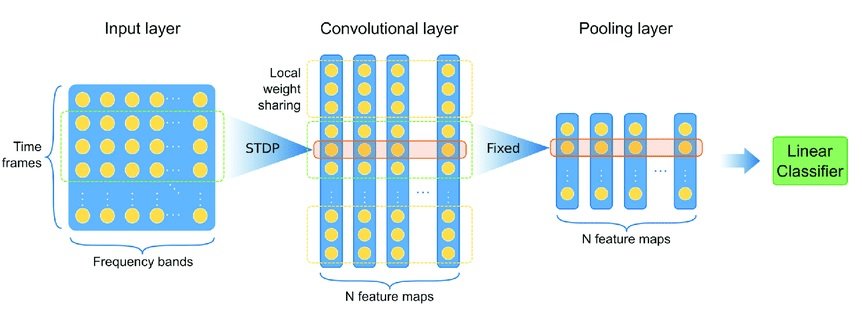

Spike-Timing-Dependent Plasticity (STDP)

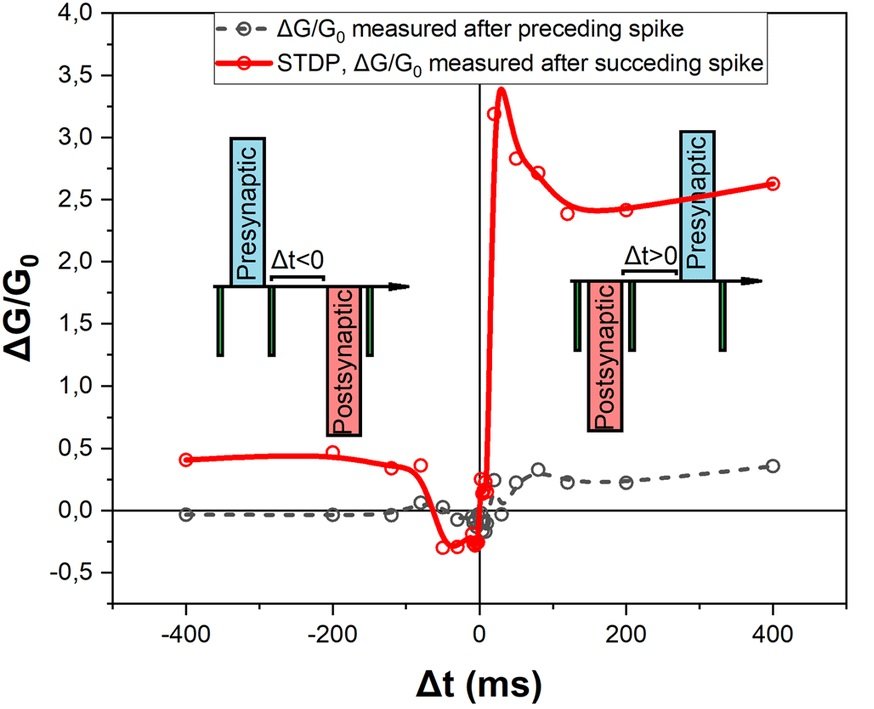

Spike-Timing-Dependent Plasticity (STDP) is a fundamental learning mechanism in biological neural systems. It describes how the strength of connections between neurons changes based on the precise timing of their activity.

In STDP, if a presynaptic (sender) neuron fires shortly before a postsynaptic (receiver) neuron, their connection strengthens. Conversely, the connection weakens if the postsynaptic neuron fires before the presynaptic one. This time-sensitive process occurs within a limited time window: the shorter the interval between the presynaptic and postsynaptic spikes, the larger the change in connection strength, whether it is a strengthening or a weakening.
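This timing dependence is often modeled with a pair-based exponential STDP rule, in which the weight change decays exponentially with the interval between the two spikes. The sketch below assumes illustrative amplitudes (`a_plus`, `a_minus`) and time constants (`tau_plus`, `tau_minus`, in milliseconds); published models and hardware implementations use many variants of this rule.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms).

    dt = t_post - t_pre
      dt > 0 (pre fires before post): potentiation, a_plus * exp(-dt / tau_plus)
      dt < 0 (post fires before pre): depression, -a_minus * exp(dt / tau_minus)
    The magnitude shrinks as |dt| grows, so only nearby spike pairs matter much.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Apply one STDP update and clip the weight to [w_min, w_max]."""
    return min(w_max, max(w_min, weight + stdp_delta_w(t_pre, t_post)))


for t_post in (12.0, 30.0, 8.0):
    print(f"pre=10 ms, post={t_post} ms -> dw={stdp_delta_w(10.0, t_post):+.5f}")
```

A post spike 2 ms after the pre spike yields a larger increase than one 20 ms after, and a post spike that precedes the pre spike yields a decrease.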

Reservoir Computing

Reservoir computing is a computational framework derived from recurrent neural network theory. It maps input signals into a higher-dimensional computational space using a reservoir: a fixed, nonlinear dynamical system, such as a randomly connected network of neurons.

When input signals pass through the reservoir, they create complex, high-dimensional states. A simple readout layer, typically the only trained part of the system, then maps these states to the desired output. This method handles time-dependent data effectively.
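A common software form of reservoir computing is the echo state network. The following pure-Python sketch assumes a small random tanh reservoir and a ridge-regression readout, and trains only the readout to predict the next sample of a sine wave. The reservoir size, scaling constants, and helper names (`make_reservoir`, `run_reservoir`, `train_readout`, `solve`) are illustrative choices, not a standard API.

```python
import math
import random


def make_reservoir(n, input_scale=0.2, target_norm=0.9, seed=0):
    """Create fixed random input and recurrent weights (never trained).

    The recurrent matrix is rescaled so its largest absolute row sum equals
    target_norm < 1. With tanh units this is a simple sufficient condition
    for the echo state property: the state forgets its initial condition.
    """
    rng = random.Random(seed)
    w_in = [rng.uniform(-input_scale, input_scale) for _ in range(n)]
    w = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
    max_row = max(sum(abs(v) for v in row) for row in w)
    w = [[v * target_norm / max_row for v in row] for row in w]
    return w_in, w


def run_reservoir(inputs, w_in, w):
    """Drive the reservoir with a scalar input sequence and record each state."""
    n = len(w_in)
    x = [0.0] * n
    states = []
    for u in inputs:
        x = [math.tanh(w_in[i] * u + sum(w[i][j] * x[j] for j in range(n)))
             for i in range(n)]
        states.append(x)
    return states


def solve(a, b):
    """Solve a @ z = b with Gaussian elimination and partial pivoting."""
    m = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, m):
            f = aug[r][col] / aug[col][col]
            for c in range(col, m + 1):
                aug[r][c] -= f * aug[col][c]
    z = [0.0] * m
    for r in range(m - 1, -1, -1):
        s = sum(aug[r][c] * z[c] for c in range(r + 1, m))
        z[r] = (aug[r][m] - s) / aug[r][r]
    return z


def train_readout(features, targets, ridge=1e-6):
    """Ridge regression for the linear readout, the only trained weights."""
    k = len(features[0])
    a = [[sum(f[i] * f[j] for f in features) + (ridge if i == j else 0.0)
          for j in range(k)] for i in range(k)]
    b = [sum(f[i] * y for f, y in zip(features, targets)) for i in range(k)]
    return solve(a, b)


# Task: predict the next sample of a sine wave from the reservoir state.
signal = [math.sin(0.2 * t) for t in range(401)]
w_in, w = make_reservoir(50)
states = run_reservoir(signal[:-1], w_in, w)
washout = 50  # discard the initial transient
features = [[1.0, u] + x for u, x in zip(signal[washout:-1], states[washout:])]
targets = signal[washout + 1:]
w_out = train_readout(features, targets)
preds = [sum(wi * fi for wi, fi in zip(w_out, f)) for f in features]
mse = sum((p - y) ** 2 for p, y in zip(preds, targets)) / len(targets)
print(f"training MSE: {mse:.2e}")
```

Capping the absolute row sums of the recurrent matrix at 0.9 is a conservative way to make the state depend on recent inputs rather than on its starting point; practical implementations usually tune the spectral radius instead.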

SpikeProp

SpikeProp is a learning algorithm for Spiking Neural Networks (SNNs) that adapts backpropagation to work with spikes instead of continuous signals. Neurons communicate via discrete spikes, and the algorithm adjusts synaptic weights so that the timing of output spikes moves toward target times, minimizing the error. SpikeProp is especially suited to temporal data and tasks, and it enables more biologically realistic neural network models.
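The core of SpikeProp is the gradient of an output spike time with respect to each weight. For a neuron whose potential u(t) first crosses the threshold at time t_out, dt_out/dw_i = -eps(t_out - t_i) / u'(t_out), where eps is the spike-response kernel and t_i is the input spike time. The sketch below applies that rule to a single output neuron with one synapse per input. This is a deliberate simplification: the original algorithm trains multilayer networks whose connections have several delayed synaptic terminals, and the kernel constant, threshold, learning rate, and spike times here are illustrative assumptions.

```python
import math

TAU = 7.0        # spike-response kernel time constant in ms (illustrative)
THRESHOLD = 1.0  # firing threshold (illustrative)


def eps(s):
    """Spike-response kernel (s / TAU) * exp(1 - s / TAU) for s > 0, else 0."""
    return (s / TAU) * math.exp(1 - s / TAU) if s > 0 else 0.0


def deps(s):
    """Time derivative of the spike-response kernel."""
    return (1 / TAU) * math.exp(1 - s / TAU) * (1 - s / TAU) if s > 0 else 0.0


def potential(t, weights, in_times):
    """Membrane potential: weighted sum of kernels triggered by input spikes."""
    return sum(w * eps(t - ti) for w, ti in zip(weights, in_times))


def first_spike(weights, in_times, t_max=30.0, step=0.05):
    """First threshold crossing: coarse scan, then bisection. None if silent."""
    t = 0.0
    while t < t_max:
        if potential(t + step, weights, in_times) >= THRESHOLD:
            lo, hi = t, t + step
            for _ in range(40):
                mid = (lo + hi) / 2
                if potential(mid, weights, in_times) >= THRESHOLD:
                    hi = mid
                else:
                    lo = mid
            return hi
        t += step
    return None


def spikeprop_step(weights, in_times, target, lr):
    """One gradient step on E = 0.5 * (t_out - target) ** 2."""
    t_out = first_spike(weights, in_times)
    if t_out is None:
        raise RuntimeError("output neuron did not fire")
    du_dt = sum(w * deps(t_out - ti) for w, ti in zip(weights, in_times))
    new_weights = []
    for w, ti in zip(weights, in_times):
        dt_dw = -eps(t_out - ti) / du_dt  # how the spike time shifts with w
        grad = (t_out - target) * dt_dw   # chain rule: dE/dw
        new_weights.append(w - lr * grad)
    return new_weights, t_out


in_times = [0.0, 1.0, 2.0, 3.0]  # input spike times (ms)
weights = [0.5, 0.5, 0.5, 0.5]
target = 5.0                     # desired output spike time (ms)
initial = first_spike(weights, in_times)
for _ in range(300):
    weights, _ = spikeprop_step(weights, in_times, target, lr=0.002)
final = first_spike(weights, in_times)
print(f"output spike moved from {initial:.2f} ms to {final:.2f} ms (target {target} ms)")
```

Because the output fires too early at first, the updates lower the weights, which delays the threshold crossing toward the target time.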

ANN-to-SNN Conversion Techniques

ANN-to-SNN conversion methods convert trained artificial neural networks (ANNs) into spiking neural networks (SNNs). They aim to preserve nearly the same accuracy as the original ANN while benefiting from the SNN's more efficient energy consumption. Conversion typically assumes ReLU activations, whose outputs can be approximated by neuron firing rates; weight rescaling, threshold adjustment, and spike encoding are frequently used techniques. Conversion also lets pre-trained ANNs be deployed on neuromorphic hardware.
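The rate-based idea behind ANN-to-SNN conversion can be sketched for a single layer. An integrate-and-fire neuron that subtracts the threshold after each spike fires at a rate that approximates a ReLU of its input, and dividing the weights by the largest observed activation (data-based weight normalization) keeps every rate within the one-spike-per-step limit. This is a minimal illustration: the weights and input are made up, the input values are applied directly as constant currents, and full conversion pipelines also handle biases, pooling, and per-layer normalization.

```python
def relu(v):
    return max(0.0, v)


def ann_layer(weights, inputs):
    """Reference ANN layer without bias: y_j = ReLU(sum_i w_ji * x_i)."""
    return [relu(sum(w * x for w, x in zip(row, inputs))) for row in weights]


def snn_layer_rates(weights, inputs, num_steps=1000, threshold=1.0):
    """Integrate-and-fire layer driven by constant input currents.

    Each step, neuron j adds its input current to its membrane potential.
    On reaching the threshold it spikes and subtracts the threshold
    (reset by subtraction), so its firing rate approximates
    ReLU(current) / threshold, capped at one spike per step.
    """
    currents = [sum(w * x for w, x in zip(row, inputs)) for row in weights]
    v = [0.0] * len(weights)
    counts = [0] * len(weights)
    for _ in range(num_steps):
        for j, current in enumerate(currents):
            v[j] += current
            if v[j] >= threshold:
                v[j] -= threshold
                counts[j] += 1
    return [c / num_steps for c in counts]


weights = [[0.8, -0.4, 0.6], [-1.0, 0.5, 0.2], [1.2, 0.9, 0.7]]
x = [0.5, 0.2, 0.9]
ann_out = ann_layer(weights, x)

# Data-based normalization: divide weights by the largest activation observed
# (here from a single input) so every activation fits in a [0, 1] spike rate.
scale = max(ann_out)
norm_weights = [[w / scale for w in row] for row in weights]
snn_out = snn_layer_rates(norm_weights, x)
for a, r in zip(ann_out, snn_out):
    print(f"normalized ANN activation {a / scale:.3f} vs SNN rate {r:.3f}")
```

Reset by subtraction is one common choice because it carries leftover charge into the next step, which keeps the rate close to the ReLU value; resetting to zero instead discards that charge.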

A Comparison Against Traditional Algorithms

Neuromorphic algorithms and traditional ML algorithms are both approaches to artificial intelligence. However, they differ in their:

- Inspiration

- Computational models

- Architecture

- Implementation

Neuromorphic algorithms draw inspiration directly from the brain’s structure and function. This contrasts with many traditional AI approaches that may be more abstractly inspired by cognition or focus purely on mathematical optimization.

Computationally, neuromorphic models often use spiking neurons and event-driven processing, as opposed to the continuous synchronous computations typical in traditional neural networks. This leads to fundamentally different architectures, such as Spiking Neural Networks (SNNs) and reservoir computing systems, which are designed to process temporal information more naturally and efficiently than conventional feed-forward or recurrent networks.
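The contrast between synchronous and event-driven processing can be made concrete by counting synaptic operations. In this toy sketch (an illustrative model, not a description of any specific chip), a dense layer multiplies every weight by its input at every step, while an event-driven layer accumulates only the weight rows of inputs that actually spiked. Both produce the same result, but the event-driven work scales with the number of spikes; the layer sizes and the roughly 2% spike probability are arbitrary assumptions.

```python
import random


def dense_update(weights, spikes):
    """Synchronous: touch every weight regardless of input activity."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i, s in enumerate(spikes):
        for j in range(n_out):
            out[j] += weights[i][j] * s
            ops += 1
    return out, ops


def event_driven_update(weights, spikes):
    """Event-driven: only process the inputs that emitted a spike."""
    n_out = len(weights[0])
    out = [0.0] * n_out
    ops = 0
    for i in (k for k, s in enumerate(spikes) if s):  # iterate over events only
        for j in range(n_out):
            out[j] += weights[i][j]
            ops += 1
    return out, ops


rng = random.Random(0)
n_in, n_out = 1000, 100
weights = [[rng.gauss(0.0, 1.0) for _ in range(n_out)] for _ in range(n_in)]
spikes = [1 if rng.random() < 0.02 else 0 for _ in range(n_in)]  # sparse input

dense_out, dense_ops = dense_update(weights, spikes)
event_out, event_ops = event_driven_update(weights, spikes)
print(dense_ops, "dense operations vs", event_ops, "event-driven operations")
```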

In terms of implementation, neuromorphic algorithms are often designed with specialized hardware in mind, such as neuromorphic chips. These chips can directly implement spiking neurons and plastic synapses. This hardware-software co-design approach differs from traditional AI algorithms, which typically run on general-purpose computing hardware. Additionally, learning mechanisms in neuromorphic systems, like Spike-Timing-Dependent Plasticity (STDP), are more closely tied to biological learning processes, contrasting with the gradient-based optimization methods common in traditional machine learning.

IBM’s TrueNorth

Researchers at IBM developed TrueNorth, a neuromorphic CMOS integrated circuit, in 2014. The chip emulates the synaptic structure of the brain and was a key breakthrough in neuromorphic engineering.

It marked significant progress toward more efficient, brain-like neuromorphic computing systems. The architecture includes 5.4 billion transistors grouped into 4,096 neurosynaptic cores. Each core contains 256 neurons, and the cores operate in parallel and independently of one another.

Key features of this chip include:

- 1 million programmable neurons

- 256 million programmable synapses

- 4,096 neurosynaptic cores

- Event-driven operation

- Low power consumption (70 mW under typical operating conditions)
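The headline counts follow from the core layout. Assuming each core connects its 256 neurons to 256 input axons through a 256 × 256 synaptic crossbar (a design detail not stated in the list above), the totals work out as:

```latex
\begin{aligned}
N_{\text{neurons}}  &= 4096 \times 256 = 1{,}048{,}576 = 2^{20} \approx 1 \text{ million} \\
N_{\text{synapses}} &= 4096 \times (256 \times 256) = 268{,}435{,}456 = 2^{28} = 256 \times 2^{20}
\end{aligned}
```

Because both totals are powers of two, the “1 million” and “256 million” figures are rounded in binary terms (2^20 and 256 × 2^20).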

TrueNorth has been used in the areas of object recognition, motion detection, and gesture recognition.

IBM has since discontinued commercial development of TrueNorth, as the company shifted its focus toward areas such as quantum computing and the hybrid cloud.

Intel’s Loihi

Intel Labs announced Loihi in 2017 as a neuromorphic research chip. Key features of this chip include:

- 130,000 neurons

- 130 million synapses

- Support for on-chip learning

- Asynchronous, event-driven operation

- Highly scalable architecture

Intel Labs researchers continue to extend Loihi's capabilities. Loihi 2, released in 2021, offers up to ten times faster processing than the first-generation Loihi.

Researchers use Loihi chips in robotics, optimization problems, and sparse data processing. These capabilities also make Loihi a promising candidate for improving the efficiency of neuromorphic edge computing devices.

Real-World Applications

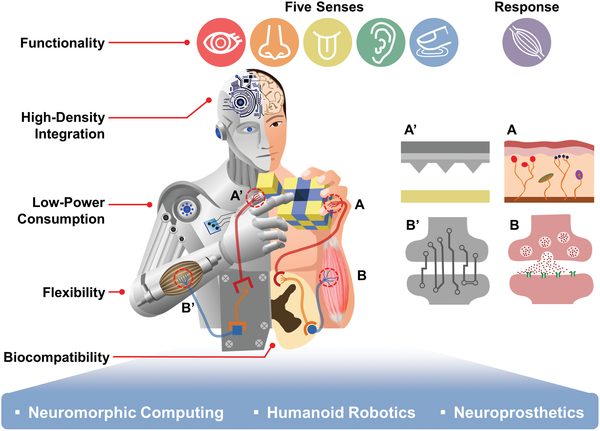

Neuromorphic engineering has potential applications in various fields discussed below.

Brain-computer interfaces

Neuromorphic systems can process neural signals faster than traditional computers, which makes them suitable for brain-computer interfaces. Such interfaces could allow a person with paralysis to control prosthetic limbs or communicate.

Robotics and autonomous systems

Neuromorphic systems can offer a better means of controlling robots than traditional computer control. They can analyze stimuli in real time and make decisions much as biological systems do when recognizing and modulating signals. This makes them especially beneficial for robots designed to operate in complex and unpredictable environments.

Optimization problems

Neuromorphic systems can solve some optimization problems faster than a standard CPU. Because they are built around parallel structures and can deal effectively with uncertainty, they are well suited to scheduling and resource allocation.

Pattern recognition

Neuromorphic systems are well suited to pattern recognition. They can analyze large amounts of sensory information in a short time, which makes them applicable to tasks such as image and speech recognition.

Future Research Directions

Neuromorphic engineering is an emerging field of research with several potential avenues. Some of them include:

- Scientists are actively developing ways to scale neuromorphic systems, building larger, more efficient, and more refined networks that more closely resemble biological neural circuits.

- Spiking Neural Networks (SNNs) are among the most important research areas in neuromorphic engineering. In the long run, we can anticipate faster and more accurate SNN learning algorithms.

- Material research for neuromorphic hardware is another area of development. We can expect to see novel materials and fabrication techniques for neuromorphic chips.

- Integrating neuromorphic models with traditional computing architectures is another promising direction, since it could leverage the strengths of both approaches. Combining neuromorphic systems with traditional AI and ML algorithms may also help them learn more complex tasks, adapt to new environments, and improve their performance over time.

- Neuromorphic systems’ low power consumption makes them promising for edge computing applications. Currently, there is active research in neuromorphic implementations for the IoT and other edge computing cases.

What’s Next for Neuromorphic Engineering?

The field of neuromorphic engineering is rapidly changing. Future developments may include more powerful and efficient neuromorphic technology, improved learning algorithms, and novel applications. As our understanding of the brain improves and technology advances, neuromorphic systems may play an increasingly important role in computing and artificial intelligence.
