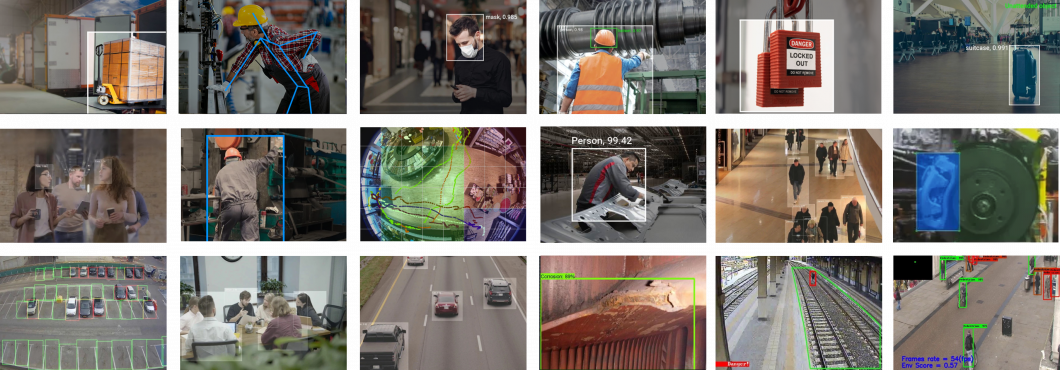

Viso Suite provides the capabilities and infrastructure for a broad range of computer vision tasks. The platform supports image recognition methods, traditional image processing, and deep neural networks. Because most computer vision systems combine several of these techniques, Viso Suite's no-code and low-code development environment enables model-driven development from modular building blocks.

The Viso computer vision platform provides a set of image processing and computer vision capabilities to rapidly develop complete AI vision applications:

Basic computer vision and recognition tasks

Object Detection

Real-time object detection recognizes objects in digital images and videos. Deep learning models are used to recognize predefined object classes (e.g., car, chair, tire, or custom parts). Object detection methods can also be used to detect specific anomalies or patterns (e.g., scratch detection in smart manufacturing).
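Detection models typically emit several overlapping candidate boxes for the same object. A common post-processing step is greedy non-maximum suppression (NMS) based on intersection over union (IoU). The Python sketch below is a minimal, class-agnostic illustration of that step, not Viso Suite's implementation. The `(x1, y1, x2, y2)` box format, the sample boxes, and the 0.5 threshold are assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Return indices of the boxes kept after greedy non-maximum suppression."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with a higher-scoring kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two near-duplicate boxes around one object plus one separate object.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.75, 0.8]
print(nms(boxes, scores))  # [0, 2]
```

In practice NMS is often run separately per class, so that overlapping objects of different classes are not suppressed against each other.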

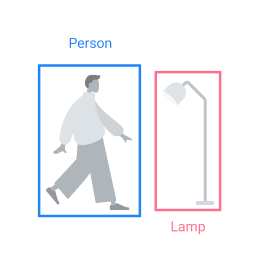

People Detection

Detect one or more people in images and real-time video feeds. Deep learning person detection can trigger an alert or send a message when a person is detected, which is useful across many use cases.
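A detection-triggered alert can be reduced to filtering the detector's output by class and confidence and then checking a region of interest. This sketch assumes a hypothetical `Detection` record, a rectangular restricted zone, and a 0.6 confidence threshold; none of these names come from Viso Suite.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    label: str                              # class name from the detector, e.g. "person"
    score: float                            # confidence in [0, 1]
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def people_in_zone(detections: List[Detection],
                   zone: Tuple[float, float, float, float],
                   min_score: float = 0.6) -> List[Detection]:
    """Return confident person detections whose bottom-center point lies inside the zone."""
    zx1, zy1, zx2, zy2 = zone
    hits = []
    for d in detections:
        if d.label != "person" or d.score < min_score:
            continue
        # Use the bottom-center of the box as an approximate ground-contact point.
        px = (d.box[0] + d.box[2]) / 2
        py = d.box[3]
        if zx1 <= px <= zx2 and zy1 <= py <= zy2:
            hits.append(d)
    return hits


frame_detections = [
    Detection("person", 0.91, (120, 80, 180, 300)),
    Detection("person", 0.40, (400, 90, 450, 310)),  # below the confidence threshold
    Detection("car", 0.88, (500, 200, 700, 320)),    # not a person
]
restricted_zone = (100, 200, 300, 400)
if people_in_zone(frame_detections, restricted_zone):
    print("ALERT: person in restricted zone")
```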

Animal Detection

Animal Detection

Deep neural networks can be used to automatically detect specific animals (e.g., dog, cat, cow, pig) in video feeds. Animal detection is used in security, road safety, and agriculture (smart farming) applications.

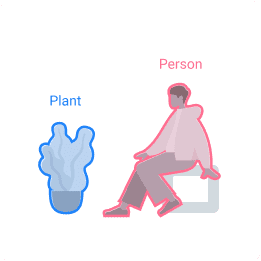

Image Segmentation

Video object segmentation uses deep learning models to separate multiple objects in the frames of a video stream at the pixel level, assigning each pixel to an object or to the background.

Pose Estimation

Human pose estimation uses deep learning to detect and track semantic keypoints, such as joints and limbs, in video frames.
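Keypoints from a pose model are usually consumed downstream as geometry, for example by measuring the angle at a joint. The sketch below computes the angle at keypoint `b` formed by three `(x, y)` keypoints. The function name `joint_angle` and the hip, knee, and ankle coordinates are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates of a keypoint


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at keypoint b between the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


# Hypothetical hip, knee, and ankle keypoints of a straight leg.
hip, knee, ankle = (100, 200), (100, 300), (100, 400)
print(round(joint_angle(hip, knee, ankle)))  # 180
```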

Image Classification

Deep learning image classification interprets an entire video frame as a whole and assigns it a single label. Classification is typically used to analyze images that contain one main object.
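Classification networks commonly output one raw score (logit) per class. A softmax converts these scores into probabilities, and the frame receives the label with the highest probability. The sketch below assumes a hypothetical two-class inspection model with the labels `defect` and `ok`.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Convert raw model scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: List[float], labels: List[str]) -> Tuple[str, float]:
    """Assign the whole frame the single most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Hypothetical model output for one frame.
label, confidence = classify([0.2, 2.5], ["defect", "ok"])
print(label, round(confidence, 2))
```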

Tracking, counting, and activity analysis

Object Tracking

Object tracking follows the movement of detected objects across the frames of a video feed. It can follow multiple objects or people at once as they move through the scene.
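A very simple way to link detections across frames is to match each new box to the previous-frame track with which it has the largest intersection over union (IoU). The sketch below is a hypothetical greedy IoU tracker for illustration only. Production trackers such as SORT add motion prediction and optimal assignment, and the 0.3 threshold here is an assumption.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Greedy tracker: each new box takes the unmatched track with the highest IoU."""

    def __init__(self, iou_threshold: float = 0.3) -> None:
        self.iou_threshold = iou_threshold
        self.tracks: Dict[int, Box] = {}  # track ID -> box from the previous frame
        self.next_id = 0

    def update(self, boxes: List[Box]) -> Dict[int, Box]:
        unmatched = set(self.tracks)
        assigned: Dict[int, Box] = {}
        for box in boxes:
            best_id: Optional[int] = None
            best_iou = self.iou_threshold
            for tid in unmatched:
                score = iou(box, self.tracks[tid])
                if score >= best_iou:
                    best_id, best_iou = tid, score
            if best_id is None:
                # No sufficiently overlapping track: start a new one.
                best_id = self.next_id
                self.next_id += 1
            else:
                unmatched.discard(best_id)
            assigned[best_id] = box
        # Tracks left unmatched in this frame are dropped (no re-identification).
        self.tracks = assigned
        return assigned


tracker = IouTracker()
frame1 = tracker.update([(10, 10, 50, 50), (100, 100, 140, 140)])
frame2 = tracker.update([(104, 102, 144, 142), (14, 12, 54, 52)])
print(frame1)
print(frame2)  # each box keeps the ID of the object it overlaps
```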

Object Counting

Object counting determines the number of detected objects in a video. Vision-based counting is used for traffic flow monitoring and product counting.
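Counting usually builds on tracking: an object is counted once when its tracked centroid crosses a virtual line. This minimal sketch assumes that track IDs and centroids come from an upstream tracker, uses a horizontal line at a hypothetical `line_y`, and follows image coordinates, where y grows downward.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, y) centroid in pixels


class LineCounter:
    """Count tracked objects whose centroid crosses the horizontal line y = line_y."""

    def __init__(self, line_y: float) -> None:
        self.line_y = line_y
        self.last_y: Dict[int, float] = {}  # track ID -> centroid y in the previous frame
        self.down = 0  # crossings from above the line to below it (y increasing)
        self.up = 0    # crossings from below the line to above it (y decreasing)

    def update(self, centroids: Dict[int, Point]) -> None:
        for tid, (_, y) in centroids.items():
            prev = self.last_y.get(tid)
            if prev is not None:
                if prev < self.line_y <= y:
                    self.down += 1
                elif prev >= self.line_y > y:
                    self.up += 1
            self.last_y[tid] = y


counter = LineCounter(line_y=200)
counter.update({1: (50, 180), 2: (80, 260)})
counter.update({1: (52, 210), 2: (82, 190)})  # track 1 moves down, track 2 moves up
counter.update({1: (54, 240)})                # no further crossing
print(counter.down, counter.up)  # 1 1
```

Counting direction separately is what allows in/out statistics, for example for footfall analytics.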

People Counting

People counting is used to count one or multiple people in video streams. A vision-based people counter is used in crowd counting and footfall analytics.

Dwell Time Tracking

Neural networks detect and track people and vehicles to calculate the average dwell time in specific areas. Dwell time analysis is used to measure waiting times, identify inefficiencies, and determine how long vehicles spend at stops.
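Given per-frame zone membership for each tracked object, the average dwell time is the mean time each track spends inside the zone. The sketch below assumes a hypothetical `(timestamp, track_id, inside_zone)` observation format and simplifies each track's stay to a single visit lasting from its first to its last in-zone sighting.

```python
from typing import Dict, Iterable, Tuple

# (timestamp in seconds, track ID, True if the object is inside the zone)
Observation = Tuple[float, int, bool]


def average_dwell_time(observations: Iterable[Observation]) -> float:
    """Mean in-zone time per track, treating each track's stay as one continuous visit."""
    first_seen: Dict[int, float] = {}
    last_seen: Dict[int, float] = {}
    for ts, track_id, inside in sorted(observations):
        if not inside:
            continue
        first_seen.setdefault(track_id, ts)
        last_seen[track_id] = ts
    if not first_seen:
        return 0.0
    durations = [last_seen[t] - first_seen[t] for t in first_seen]
    return sum(durations) / len(durations)


observations = [
    (0.0, 1, True), (5.0, 1, True), (12.0, 1, True),  # track 1: 12 s in zone
    (3.0, 2, True), (7.0, 2, True), (9.0, 2, False),  # track 2: 4 s in zone, then leaves
]
print(average_dwell_time(observations))  # 8.0
```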

Face analysis and facial recognition

Face Detection

Face detection determines whether human faces are present in images and videos and locates them. Deep learning models are used to achieve high accuracy.

Face Recognition

Use deep neural networks for real-time face recognition. Trained ML models analyze detected faces and compare them with database entries.
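Face recognition systems commonly map each detected face to an embedding vector and compare it with stored embeddings using a similarity measure. This sketch uses cosine similarity with a hypothetical 0.8 threshold and toy three-dimensional embeddings (real face embeddings typically have hundreds of dimensions). The database layout, the placeholder identities, and the `identify` function are illustrative assumptions.

```python
import math
from typing import Dict, List, Optional


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 means same direction)."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / norm


def identify(embedding: List[float], database: Dict[str, List[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Return the most similar enrolled identity, or None if nothing clears the threshold."""
    best_name: Optional[str] = None
    best_score = threshold
    for name, reference in database.items():
        score = cosine_similarity(embedding, reference)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Toy enrolled embeddings; a real system would compute them with a face embedding model.
database = {"alice": [0.9, 0.1, 0.0], "bob": [0.0, 0.2, 0.95]}
print(identify([0.85, 0.15, 0.05], database))  # alice
print(identify([-1.0, 0.0, 0.0], database))    # None
```

Returning `None` below the threshold is what lets such a system report an unknown face instead of forcing a match.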

Emotion Analysis

AI methods analyze human facial expressions. Camera-based emotion AI identifies a set of emotional states (e.g., happiness, sadness, anger).

Gender Analytics and Age Estimation

AI algorithms identify the gender of multiple people detected in real-time video feeds. Specific AI models can also estimate age from video streams.

Vision-based movement and motion analysis

Activity and Motion Heatmap

Object flow data from real-time object detection is aggregated into a motion heatmap. Motion heatmaps are used for traffic analysis and footfall analytics.
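A motion heatmap can be built by binning the positions of detected or tracked objects over time into a coarse grid. This minimal sketch assumes object centroids in pixel coordinates and a hypothetical 40-pixel cell size. Rendering the counts as a color overlay on the video is not shown.

```python
from typing import Iterable, List, Tuple


def motion_heatmap(points: Iterable[Tuple[float, float]], width: int, height: int,
                   cell: int = 40) -> List[List[int]]:
    """Accumulate object centroids into a grid of cell x cell pixel bins."""
    cols = (width + cell - 1) // cell   # ceiling division so the grid covers the frame
    rows = (height + cell - 1) // cell
    grid = [[0] * cols for _ in range(rows)]
    for x, y in points:
        if 0 <= x < width and 0 <= y < height:  # ignore points outside the frame
            grid[int(y) // cell][int(x) // cell] += 1
    return grid


# Centroids collected over several frames of a 120 x 80 pixel video.
centroids = [(10, 10), (20, 30), (100, 70), (130, 10)]
print(motion_heatmap(centroids, width=120, height=80))  # [[2, 0, 0], [0, 0, 1]]
```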

Fall Detection

Real-time human fall detection uses deep learning methods to detect and track human movement and analyze motion in video feeds.
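Deep learning models provide the tracked person boxes. One simple way to turn those boxes into a fall decision is a rule applied to each track's recent history. The sketch below is such a rule-based heuristic, not a deep learning method: it flags a fall when a tracked person's box center drops quickly and the latest box is wider than it is tall. The thresholds and the `(timestamp, box)` history format are assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels, y grows downward


def looks_like_fall(history: List[Tuple[float, Box]],
                    min_drop_speed: float = 150.0,
                    max_aspect: float = 1.0) -> bool:
    """Flag a fall when the box center drops fast and the final box is wide and low.

    history: (timestamp in seconds, box) pairs for one tracked person, oldest first.
    """
    if len(history) < 2:
        return False
    (t0, b0), (t1, b1) = history[0], history[-1]
    if t1 <= t0:
        return False
    cy0 = (b0[1] + b0[3]) / 2
    cy1 = (b1[1] + b1[3]) / 2
    drop_speed = (cy1 - cy0) / (t1 - t0)  # pixels per second, positive when moving down
    height, width = b1[3] - b1[1], b1[2] - b1[0]
    aspect = height / width if width > 0 else float("inf")  # height / width of latest box
    return drop_speed >= min_drop_speed and aspect <= max_aspect


standing_to_lying = [(0.0, (100, 100, 150, 300)), (0.5, (60, 260, 260, 320))]
walking = [(0.0, (100, 100, 150, 300)), (0.5, (110, 102, 160, 302))]
print(looks_like_fall(standing_to_lying), looks_like_fall(walking))  # True False
```

Pixel-based thresholds depend on camera distance and frame rate, so a heuristic like this would need calibration per camera.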

Posture Recognition

Detect human body posture in real time in video images. Posture recognition detects and tracks the movement of people to identify postures such as “sitting”, “lying”, and “standing”.
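Posture labels can be derived from pose keypoints with simple geometric rules. The sketch below is a hypothetical rule-based classifier that uses the shoulder, hip, and knee keypoints of one body side. A near-horizontal torso means lying, an upright torso with a near-horizontal thigh means sitting, and anything else means standing. The keypoint names and rules are assumptions, not Viso Suite's method.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]  # (x, y) keypoint in pixels


def is_more_horizontal(a: Point, b: Point) -> bool:
    """True if the segment a-b spans more horizontally than vertically."""
    return abs(b[0] - a[0]) > abs(b[1] - a[1])


def classify_posture(kp: Dict[str, Point]) -> str:
    """Rule-based posture label from one person's 2D keypoints (one body side)."""
    if is_more_horizontal(kp["shoulder"], kp["hip"]):
        return "lying"     # torso close to horizontal
    if is_more_horizontal(kp["hip"], kp["knee"]):
        return "sitting"   # upright torso, thigh close to horizontal
    return "standing"      # upright torso and upright thigh


standing = {"shoulder": (100, 100), "hip": (100, 200), "knee": (100, 300)}
sitting = {"shoulder": (100, 100), "hip": (100, 200), "knee": (180, 205)}
lying = {"shoulder": (100, 300), "hip": (220, 310), "knee": (320, 312)}
for pose in (standing, sitting, lying):
    print(classify_posture(pose))
```

Because it relies on 2D image geometry, this heuristic can misclassify a person seated facing the camera, whose thigh appears foreshortened.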