Critical decision-making is a key responsibility for any decision-maker, which is why it is essential to know when to trust artificial intelligence (AI) and when to trust our intuition. AI systems are being integrated into many aspects of our lives, including the decision-making processes of small and large companies. AI is certainly good at processing data and identifying patterns that might escape human perception, but it is not perfect.

Thus, understanding when to rely on AI versus human judgment is crucial for making sound decisions. This article explores how humans and AI make decisions, and how AI, especially computer vision, can be integrated into critical decision-making. We will also compare humans and AI across several criteria. Let’s get started.

Understanding Decision-Making Processes

In critical decision-making scenarios, humans and AI systems follow different processes to reach decisions. Correctness likelihood (CL) is a key metric that researchers use to study how often a decision-making process gets things right: it represents the probability of making the right decision in a specific situation. AI relies on algorithms and patterns learned from training data to reach conclusions, but human decision-making is more complex. We use intuition, past experience, and contextual understanding, which can be difficult to quantify.

This is why the CL metric is easier to apply to AI systems: they can output a confidence score for each decision, which serves as a statistical estimate of their CL (though, as we will see, not always an accurate one). However, we can still measure human CL by carefully studying how we make critical decisions.

How AI Makes Decisions

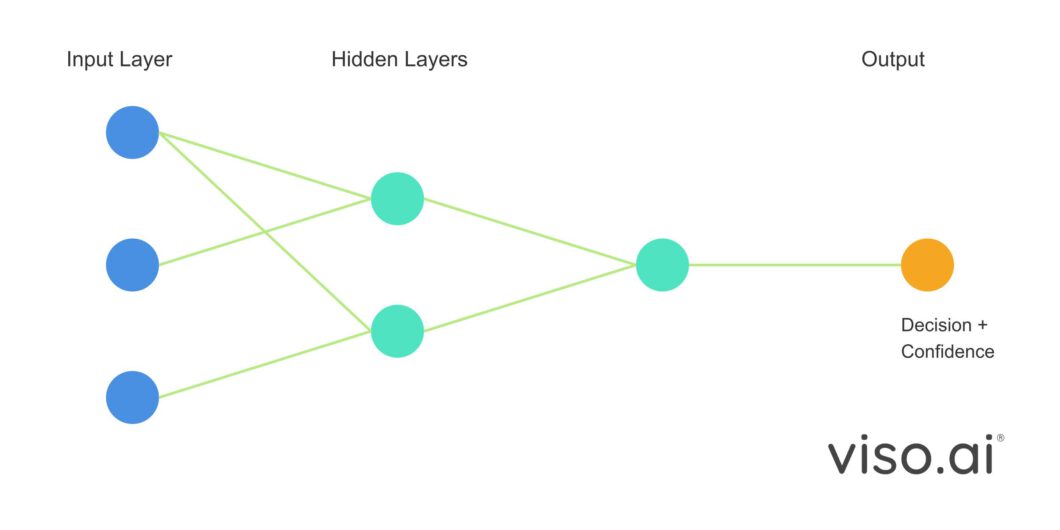

Most modern AI models are built from artificial neural networks (ANNs) and make decisions through pattern recognition and statistical analysis. These models are trained on large datasets to learn patterns and make predictions. AI reaches its predictions in a way that is completely different from human reasoning: it relies on mathematical algorithms and representations learned from data.

Modern AI systems, such as most computer vision (CV) models and large language models (LLMs), use variations of the artificial neural network to process information. They typically make decisions in four steps:

- Input Processing: The model receives data (like images or text) and converts it into a format it can process

- Feature Extraction: Neural networks identify relevant patterns and features in the input

- Pattern Matching: These features are compared against patterns learned during training

- Output Generation: The model produces a decision along with a confidence score
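The four steps can be made concrete with a toy example. The following is a minimal sketch, not how a real CV model is built. It stands in hand-picked features for learned convolutional layers, and the class names and weights in `CLASS_PATTERNS` are invented for illustration. The softmax at the end is the standard way a classifier turns raw scores into the confidence score described above.

```python
import math

# Hypothetical learned "patterns", one weight vector per class. A real
# network learns millions of weights; these three numbers are invented.
CLASS_PATTERNS = {
    "defect": [0.9, 0.1, 0.8],
    "no_defect": [0.1, 0.9, 0.2],
}


def preprocess(pixels):
    """Input processing: scale raw 0-255 pixel values into [0, 1]."""
    return [p / 255.0 for p in pixels]


def extract_features(x):
    """Feature extraction: a stand-in for convolutional layers that
    summarizes the input as [contrast, brightness, dark_fraction]."""
    contrast = max(x) - min(x)
    brightness = sum(x) / len(x)
    dark_fraction = sum(1 for v in x if v < 0.3) / len(x)
    return [contrast, brightness, dark_fraction]


def match_patterns(features):
    """Pattern matching: score each class by a dot product with its pattern."""
    return {
        label: sum(f * w for f, w in zip(features, weights))
        for label, weights in CLASS_PATTERNS.items()
    }


def softmax(scores):
    """Output generation: turn raw scores into probabilities summing to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - m) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}


def predict(pixels):
    """Run all four steps; return the top label, its confidence score,
    and the full probability distribution over classes."""
    probs = softmax(match_patterns(extract_features(preprocess(pixels))))
    label = max(probs, key=probs.get)
    return label, probs[label], probs


label, confidence, probs = predict([20, 240, 30, 250, 25, 235])
print(label, round(confidence, 3))
```

Note that the reported confidence is simply the largest softmax probability. It estimates the model's CL only if the model is well calibrated, which is not guaranteed.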

A key consideration, however, is AI explainability: the ability to understand why an AI system arrived at a certain decision. Neural networks are often considered black boxes because we can’t easily explain why they arrived at a particular prediction. For example, suppose a computer vision model detects pneumonia in an X-ray image of patient A. Without additional tools, the only way to know why it made that prediction would be to inspect the millions of parameters of the network and try to reason about which patterns or features it is detecting.

That said, the network’s mechanics are fully transparent: we know exactly which functions are applied and how they produce outputs. What is hard to explain is why a particular set of learned parameters leads to a particular decision. Researchers therefore add explainability techniques to modern AI systems to make the decision-making process of neural networks more transparent.
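One common explainability technique is occlusion sensitivity. It blanks out part of the input and measures how much the model's score drops, and regions whose removal hurts the score most are the ones the model relies on. The sketch below is a simplified version under stated assumptions. The "model" is a hypothetical weighted sum over a 3x3 image, not a real pneumonia detector, and real implementations slide a patch over full-size images fed to an actual network.

```python
# Hypothetical stand-in for a trained model: scores a tiny 3x3 grayscale
# "X-ray" for pneumonia. The weights are invented for illustration.
WEIGHTS = [
    [0.0, 0.1, 0.0],
    [0.2, 0.9, 0.1],
    [0.0, 0.3, 0.0],
]


def model_score(image):
    """Weighted sum of pixel intensities (a toy linear 'model')."""
    return sum(
        w * p
        for w_row, p_row in zip(WEIGHTS, image)
        for w, p in zip(w_row, p_row)
    )


def occlusion_map(image, score_fn, fill=0.0):
    """Occlusion sensitivity: blank out one pixel at a time and record how
    much the score drops. Large drops mark regions the model relies on."""
    base = score_fn(image)
    heatmap = []
    for r in range(len(image)):
        heat_row = []
        for c in range(len(image[r])):
            occluded = [line[:] for line in image]  # copy so input is untouched
            occluded[r][c] = fill
            heat_row.append(base - score_fn(occluded))
        heatmap.append(heat_row)
    return heatmap


image = [
    [0.5, 0.5, 0.5],
    [0.5, 1.0, 0.5],
    [0.5, 0.5, 0.5],
]
heat = occlusion_map(image, model_score)
for heat_row in heat:
    print([round(v, 2) for v in heat_row])
```

The technique treats the model as a black box and only needs its score function, which is why it can be applied to networks whose parameters are too numerous to inspect directly.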

Human Decision-Making Process

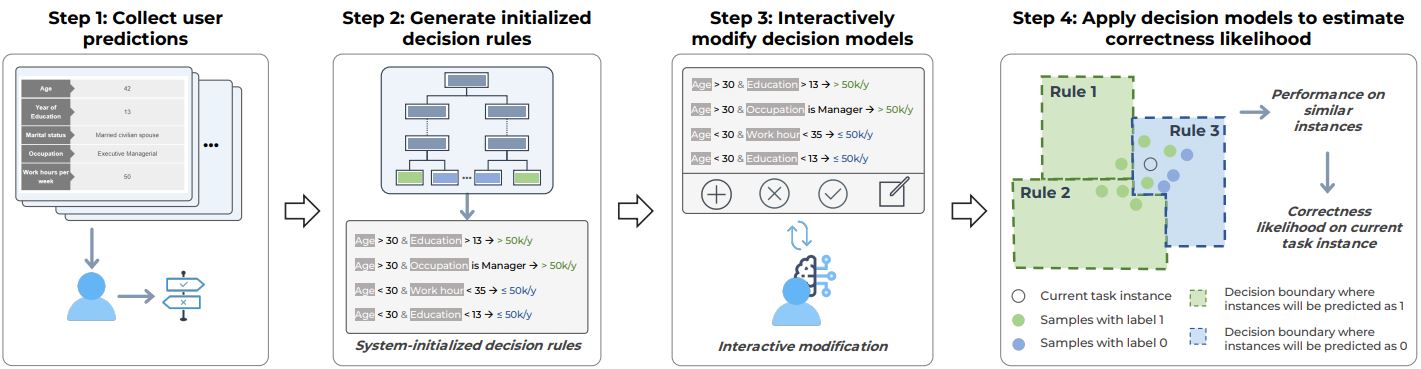

Unlike AI systems, humans make decisions through a complex mix of analytical thinking, intuition, and past experience. This makes measuring human correctness likelihood (CL) more difficult than measuring AI confidence, especially in computer vision tasks. When a computer vision model makes a prediction, it usually provides a confidence score, whereas humans cannot accurately measure their own confidence. However, by studying the human decision-making process, researchers can estimate the CL of our critical decisions. In visual tasks, that process typically looks like this:

- Past Experience: We rely on similar images we have seen before

- Pattern Recognition: We look for familiar visual patterns and features from past experiences

- Analysis: We combine our experience with what we see

- Final Decision: We make a prediction based on all the information

While computer vision models provide explicit confidence scores for their predictions (e.g., “90% confident this is a tumor”), measuring human accuracy is more complex. Research shows that humans often struggle to assess their own confidence in visual tasks: we might be very confident about detecting an object and be wrong, or be unsure about a detection that is actually correct. Researchers can estimate human CL with a well-defined modeling process, like the one shown in the image above. In general, human performance is modeled by:

- Comparing predictions to known ground truth data

- Analyzing performance on similar visual tasks

- Studying patterns in decision-making across multiple cases

- Calculating the Correctness Likelihood (CL) based on past performance
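The last step, calculating CL from past performance, can be sketched as a per-category success rate computed against ground truth. This is a minimal illustration, not a method from the cited research. The additive (Laplace) smoothing via `prior_correct` and `prior_total` is an assumption added so that categories with very few cases do not jump to a CL of 0 or 1. The task categories in the example (`"crack"`, `"hotspot"`) are hypothetical.

```python
from collections import defaultdict


def correctness_likelihood(history, prior_correct=1, prior_total=2):
    """Estimate CL per task category from past decisions.

    history: iterable of (category, prediction, ground_truth) tuples.
    prior_correct / prior_total: additive smoothing that pulls categories
    with few cases toward 0.5 instead of 0 or 1 (an assumption).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for category, prediction, truth in history:
        total[category] += 1
        correct[category] += int(prediction == truth)
    return {
        c: (correct[c] + prior_correct) / (total[c] + prior_total)
        for c in total
    }


# Hypothetical record of one human inspector's solar panel decisions.
history = (
    [("crack", "defect", "defect")] * 8
    + [("crack", "ok", "defect")] * 2
    + [("hotspot", "defect", "defect"), ("hotspot", "ok", "defect")]
)
cl = correctness_likelihood(history)
print(cl)
```

Here the inspector was right on 8 of 10 crack cases, giving a smoothed CL of 9/12 = 0.75. With only two hotspot cases, the estimate stays at 0.5 until more evidence accumulates.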

These measurements help us understand when to trust human visual analysis over computer vision models in critical decision-making. In the next section, we will compare AI and humans directly in visual decision-making.

Trusting AI vs. Humans: Key Criteria

Many factors matter when deciding whether to trust AI or human decisions, especially in critical vision tasks. Recent research shows that neither AI nor human capabilities are perfect. AI systems can make mistakes even when their confidence scores are high, due to factors like bias or overfitting. Humans, on the other hand, can be biased or overconfident in wrong decisions.

This is why it is important to understand the strengths of AI and humans in critical decision-making across criteria like speed, accuracy, adaptability, and accountability for mistakes. Understanding these aspects lets us decide which tasks are better suited to humans and which to AI. It also helps identify areas where a hybrid of AI and human intelligence might lead to the best results. Let’s analyze these factors to understand when to trust AI and when to trust human judgment in visual tasks.

Processing Speed and Efficiency

AI systems have an obvious advantage over humans in processing speed. Depending on model size and hardware, computer vision models can process hundreds or even thousands of images per second, while humans need much more time to examine visual information carefully. For example, a computer vision model can analyze hundreds of solar panel images in minutes to identify defects or damage, while a human expert may need several minutes per image to make a careful judgment.

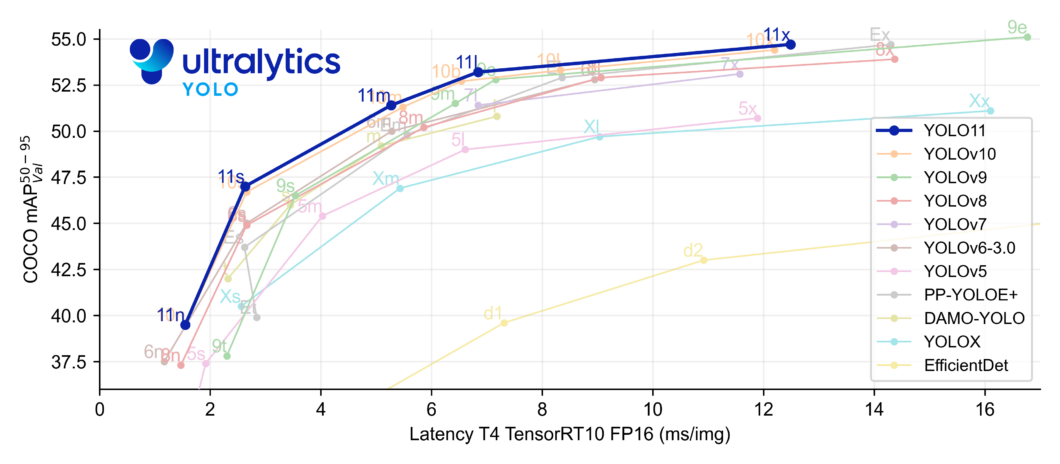

However, research shows that fast AI processing can lead to oversights, such as missed or misclassified objects. This is one reason many computer vision model families come in several variants that trade off speed, accuracy, and efficiency.

YOLO models are a popular family of computer vision models used in a wide range of critical decision-making scenarios. For instance, the blue line in the figure above shows the performance of YOLO11. Each point on the line is a different model size (n: nano, s: small, m: medium, l: large, x: extra large). The smaller models have lower latency but also lower accuracy on the benchmark dataset (COCO), while the bigger models have higher accuracy and higher latency.
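In practice, this trade-off often reduces to a simple rule: pick the most accurate variant that still meets your latency budget. The sketch below encodes that rule. The latency and accuracy numbers are placeholders invented for illustration, not published YOLO11 benchmark results. You would replace them with measurements taken on your own hardware and validation data. The `Variant` class and `pick_variant` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Variant:
    name: str
    latency_ms: float  # per-image latency, measured on your own hardware
    accuracy: float    # e.g. mAP, measured on your own validation set


def pick_variant(variants: List[Variant], latency_budget_ms: float) -> Optional[Variant]:
    """Return the most accurate variant whose latency fits the budget,
    or None if no variant is fast enough."""
    feasible = [v for v in variants if v.latency_ms <= latency_budget_ms]
    return max(feasible, key=lambda v: v.accuracy, default=None)


# Placeholder numbers for illustration only, NOT real benchmark results.
candidates = [
    Variant("n", 2.0, 0.40),
    Variant("s", 3.0, 0.47),
    Variant("m", 5.0, 0.51),
    Variant("l", 6.5, 0.53),
    Variant("x", 11.0, 0.55),
]

choice = pick_variant(candidates, latency_budget_ms=6.0)
print(choice.name if choice else "no variant fits the budget")
```

With these placeholder numbers and a 6 ms budget, the rule selects the medium variant. A tighter budget would push the choice toward nano or small at the cost of accuracy.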

In environments where AI assists in making critical decisions, such as deciding when to repair solar panels, human-in-the-loop integration is a must. When the AI’s confidence is low, humans should be given more time for their own analysis. This “cognitive forcing” approach reduces over-reliance on quick AI judgments and encourages humans to engage in deeper analytical thinking, which matters most in tasks like medical diagnosis.
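A confidence-based routing rule is one way to sketch this idea. The thresholds, review times, and handler names below are hypothetical and would need tuning for a real task. Besides allotting more time, the low-confidence tier also hides the AI's suggestion until the reviewer records an independent judgment. That is another commonly described cognitive-forcing design, added here as an assumption rather than taken from the research discussed above.

```python
def route(confidence, auto_threshold=0.95, low_threshold=0.6,
          base_review_min=2.0):
    """Decide who handles a prediction and how much review time to allot.

    All thresholds and times are hypothetical defaults.
    """
    if confidence >= auto_threshold:
        # High confidence: accept the AI decision, but sample cases for
        # human spot checks so oversight never disappears entirely.
        return {"handler": "human_spot_check", "show_ai_first": True,
                "review_minutes": 0.0}
    if confidence >= low_threshold:
        # Medium confidence: a human reviews with the AI suggestion visible.
        return {"handler": "human", "show_ai_first": True,
                "review_minutes": base_review_min}
    # Low confidence: cognitive forcing. Hide the AI suggestion until the
    # human records their own call, and double the allotted review time.
    return {"handler": "human", "show_ai_first": False,
            "review_minutes": 2 * base_review_min}


for conf in (0.97, 0.80, 0.30):
    print(conf, route(conf))
```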

Accuracy and Reliability

When it comes to accuracy in visual tasks, neither humans nor AI systems are perfect. Computer vision models can achieve high accuracy on benchmark datasets like COCO. However, benchmark test images come from the same distribution as the training images, so benchmark scores can overstate real-world performance. Performance often drops in real-world scenarios because of issues like overfitting, where the model fits the training data so closely that it fails to generalize to new images.

However, many state-of-the-art models generalize well across vision tasks and critical decision-making scenarios. Humans, similarly, can be highly accurate in familiar visual tasks but are prone to fatigue, bias, and inconsistency. Research also shows that AI confidence scores can be misleading. For example, a computer vision model might be 90% confident in a prediction and still be wrong, while being correct in other cases with lower confidence. Recent studies show that when AI gives incorrect predictions with high confidence, humans still tend to trust and follow them.
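Whether confidence scores can be trusted is usually checked by measuring calibration, that is, how closely stated confidence matches actual accuracy. A common summary is expected calibration error (ECE). Predictions are binned by confidence, and the gap between each bin's average confidence and its accuracy is averaged, weighted by bin size. The sketch below uses 10 equal-width bins, which is a conventional but arbitrary choice.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: 0.0 means stated confidence matches observed accuracy exactly.

    confidences: floats in [0, 1], one per prediction.
    correct: booleans, True where the prediction matched ground truth.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 goes into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(1 for _, ok in b if ok) / len(b)
        ece += (len(b) / n) * abs(avg_conf - accuracy)
    return ece


# A model that says "90% confident" four times but is right only twice.
print(expected_calibration_error([0.9] * 4, [True, True, False, False]))
```

In the example, the model claims 90% confidence but is right only half the time, so its ECE is about 0.4. The same function works for human experts who report a confidence alongside each decision, which is one way to quantify the poorly calibrated self-confidence described next.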

Human accuracy also varies with conditions such as time pressure and workload, and with a person’s state of mind. People often have poorly calibrated self-confidence that doesn’t reflect their actual accuracy, so we can be confident in critical decisions that are wrong and unsure about ones that are correct. The key to maximizing accuracy is therefore understanding when AI or humans are more likely to be correct. AI tends to be more accurate in:

- Repetitive visual tasks

- Well-defined pattern recognition

- High-volume inspection tasks

Humans, in contrast, tend to be more accurate in:

- Novel scenarios that require problem-solving

- Situations that depend on complex context

- Adapting to unexpected variations

Risk and Accountability

When computer vision systems are used for critical decision-making, it is important to consider risk and accountability. AI systems can process images faster than humans and often with high accuracy, but when they make mistakes, the consequences for a company can be severe. For instance, if a computer vision system fails to detect a defect in an important machine part or misdiagnoses a medical condition, who is responsible for the consequences?

This matters most when AI systems are highly confident in incorrect predictions, which is dangerous in high-risk scenarios. As explained earlier, unlike humans, AI systems cannot intuitively explain why they made a certain decision, which makes it harder to identify the root cause of mistakes. For critical computer vision tasks, a well-defined human-in-the-loop process is essential to manage these risks.

In high-risk scenarios, it is important to consider the role of computer vision models and the impact of their decisions, and to set clear rules that prevent critical mistakes. Example rules include:

- Regular validation of AI predictions by human experts

- Clear protocols for when AI confidence is low

- Defined responsibility chains for decision-making

- Documentation of the decision-making process
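Several of these rules can be supported by keeping a structured record of every decision. The sketch below is one possible shape for such a record, not a standard schema. All field names are hypothetical. The record captures who reviewed the case, who is accountable, and which model version was involved. The `needs_escalation` check encodes a simple low-confidence protocol, with a hypothetical 0.6 threshold.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One audit-log entry. All field names are hypothetical examples."""
    case_id: str
    model_version: str
    ai_prediction: str
    ai_confidence: float
    reviewer: Optional[str]   # human who validated the AI output, if any
    final_decision: str
    accountable_owner: str    # named person or role responsible for the outcome
    rationale: str = ""
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def needs_escalation(self, low_confidence=0.6):
        """Low-confidence protocol: flag cases where the AI was unsure
        and no human has reviewed the decision yet."""
        return self.ai_confidence < low_confidence and self.reviewer is None

    def to_json(self):
        """Serialize for an append-only decision log."""
        return json.dumps(asdict(self))


record = DecisionRecord("panel-0042", "cv-model-1.3", "defect", 0.55,
                        None, "defect", "qa-lead")
print(record.needs_escalation())
print(record.to_json())
```

Writing records like this to an append-only log makes it possible to trace a mistake back to the model version, the confidence at the time, and the person who signed off.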

The key is to balance AI’s capabilities with human oversight where the risks are highest. Next, let’s explore hybrid approaches in which computer vision models and humans work as a team.

Hybrid Human-AI Approaches in Critical Decision-Making

The future of critical decision-making should not be a choice between AI and human intelligence but a way to leverage the strengths of both. Hybrid approaches combine computer vision capabilities with human expertise. With them, organizations can build more reliable decision-making systems in which each side compensates for the other’s weaknesses.

Creating Effective Human-AI Teams

To create successful hybrid approaches, companies have to design workflows that use computer vision models and human experts to their full potential. Computer vision models can process large numbers of images at high speed and flag potential issues, while humans provide context and handle cases where the AI is uncertain. A common method is to use AI for initial screening or analysis, with humans acting as supervisors or reviewers. For example, in manufacturing quality control, CV models can monitor production lines and flag potential defects; human experts then review the flagged items and make final decisions based on their experience.

To build effective hybrid decision-making between CV models and humans, we can implement one or more of the following methods:

- AI-Assisted Detection: AI detects potential objects or anomalies, and humans validate them to make the final decision.

- Human-in-the-Loop: Humans provide contextual guidance that the AI uses to refine its output and learn from.

- Expertise Augmentation: AI provides additional analysis of human-made decisions, offering a second point of view on every decision.

- Collaborative Annotation: Humans label the training data, which allows the AI to improve its detection accuracy.
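AI-assisted detection and human-in-the-loop feedback can be sketched together in one function. Humans review items the model flagged, their verdict is final, and disagreements are collected as new labeled examples for retraining. This is a minimal illustration. The function name, the dictionary layout, and the `"pending_review"` status are hypothetical. Collecting only disagreements is one design choice; many teams also keep confirmed labels as training data.

```python
def review_flags(flagged, human_verdicts):
    """Combine AI flags with human validation.

    flagged: dict mapping case_id -> label the model assigned.
    human_verdicts: dict mapping case_id -> label the reviewer assigned.
    Returns (final_decisions, corrections), where corrections is a list of
    (case_id, human_label) pairs where the human overruled the model.
    """
    final, corrections = {}, []
    for case_id, ai_label in flagged.items():
        human_label = human_verdicts.get(case_id)
        if human_label is None:
            # Flags are never finalized without a human decision.
            final[case_id] = "pending_review"
            continue
        final[case_id] = human_label  # the human verdict always wins
        if human_label != ai_label:
            corrections.append((case_id, human_label))
    return final, corrections


flagged = {"part-a": "defect", "part-b": "defect", "part-c": "defect"}
verdicts = {"part-a": "defect", "part-b": "ok"}
final, corrections = review_flags(flagged, verdicts)
print(final)
print(corrections)
```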

Future of AI-Assisted Critical Decision Making

The future of critical decision-making is not just about knowing when to trust AI or humans, but about combining their capabilities effectively. As computer vision technology advances, the key to success will be upholding ethical standards while maximizing the combined benefits of human and machine intelligence. The most effective approaches will maintain human accountability while leveraging AI’s processing power.

Looking ahead, we can expect better hybrid systems tailored to different scenarios and levels of risk. However, ethics should always be a priority when implementing these systems. As research continues to bring computer vision into critical decision-making, transparency, fairness, and accountability should guide the design of every hybrid system.