The term ‘prompt’ has been used widely since large language models (LLMs) arrived in the world of generative artificial intelligence. For the end user, prompts are the interface through which they interact with the model. In short, prompts are instructions in the form of natural-language text, images, or other data that help the model perform a given task.

The quality of an LLM’s response depends heavily on the quality of the prompt it is given. Prompt engineering helps users construct better prompts to improve that response. This article will guide readers step by step through AI prompt engineering and discuss the following:

- What is a Prompt?

- What is Prompt Engineering?

- Prompt Engineering Techniques

- Benefits of Prompt Engineering

- Prompt Engineering in Computer Vision

What is a Prompt?

A prompt is what the user provides as input to the model. Every time someone asks ChatGPT a question or asks it to summarize some text, they are prompting it to generate a response according to the provided instructions. Prompts can be as simple as ‘What is 2+2?’, and the model will understand the question and try to give an accurate answer like a human being.

However, generative AI models will not always accurately understand the assignment and might start working in the wrong direction. To counter this, a good prompt must be detailed, leaving nothing to assumption, and provide all relevant information.

Prompt Structures in Modern LLMs

Modern chat-based LLMs typically work with three types of prompt, each tied to a role in the conversation.

- User Prompt: These are the instructions that the user provides directly to the language model. For example, ‘What was the result of World War 1?’

- System Prompt: Instructions set by the developer that govern the model’s overall behavior, such as response style, tone, level of detail, and structure. For example, ‘Provide a factual answer referencing historical text, and list the details in bullet points.’

- Assistant Prompt: Text attributed to the model itself. It usually holds the model’s earlier replies in the conversation, but developers can also pre-fill it with example answers to steer how the model responds.

The user prompts come from the end user, while developers and researchers write the System prompt, and optionally pre-filled Assistant messages, to guide the LLM.
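As a rough illustration, many chat-style interfaces represent a conversation as a list of role-tagged messages. The sketch below assumes that common convention; the `build_messages` helper and the `role`/`content` keys are illustrative and not tied to any particular vendor's API.

```python
def build_messages(system_prompt, user_prompt, examples=()):
    """Assemble a role-tagged conversation (hypothetical helper).

    `examples` is an optional sequence of (question, answer) pairs that are
    inserted as earlier user/assistant turns.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for question, answer in examples:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": user_prompt})
    return messages


messages = build_messages(
    system_prompt="Provide a factual answer in bullet points.",
    user_prompt="What was the result of World War 1?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the developer's instructions in a separate message means they can stay fixed while the user's question changes from one request to the next.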

What is Prompt Engineering?

The beauty of natural language is that the same concept or message can be relayed in several ways. We can use different expressions, choices of words, or sentence structures. This matters for language models too: they are sensitive to wording and respond differently depending on how the prompt is constructed.

Prompt Engineering refers to constructing and refining prompts to produce accurate results in the desired format. The entire prompt engineering premise revolves around your ability to describe what you require from the language model. Some prompt engineering examples include:

- “Write a short story about a young wizard named Harry.”

- “Write a short story about a young wizard named Harry. Harry should be 12 years old, and the story should be set in London during the early 1990s.”

- “Write me a short story. The story should be about a young boy named Harry who discovers he is a wizard on his 12th birthday… The story should be set in early 1990s London. The story should revolve around Harry exploring his new identity and making new friends on his journey. The tone of the story should be directed toward young audiences who feel attached to the character.”

The three examples above describe the same request, but each provides a different level of detail. The third prompt is the most likely to generate a relevant response, since it spells out the expected tone, setting, and plot progression.
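One way to picture the difference between these prompts is as a base request plus a growing list of details. The sketch below is only an illustration; `build_story_prompt` is a hypothetical helper, and the detail sentences are paraphrased from the examples above.

```python
def build_story_prompt(subject, details=()):
    """Join a base request with optional detail sentences (hypothetical helper)."""
    sentences = [f"Write a short story about {subject}."]
    # Normalize each detail so it ends with exactly one full stop.
    sentences.extend(f"{detail.rstrip('.')}." for detail in details)
    return " ".join(sentences)


basic = build_story_prompt("a young wizard named Harry")
detailed = build_story_prompt(
    "a young wizard named Harry",
    details=[
        "Harry should be 12 years old",
        "The story should be set in London during the early 1990s",
        "The tone should suit young audiences",
    ],
)
print(basic)
print(detailed)
```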

Editing Existing Text

Moreover, prompt engineering also involves asking the model to tweak its current response. This means that once a response has been generated, users can ask it to regenerate and make some amendments.

For example, continuing our examples from earlier, if the LLM has written a story based on prompt 3, you can further prompt it to:

“Write it again, and this time also build around the premise that Harry is an orphan and his parents died in a mysterious accident.”

This technique is called iterative prompting and is discussed in a later section.

LLMs’ capabilities extend far beyond story-writing; hence, different prompt engineering techniques suit different scenarios.

Prompt Engineering Techniques

Some popular prompt engineering techniques include:

Zero-Shot Prompting

This is the most basic type of prompt engineering technique. During Zero-Shot Prompting (ZSP), users query an LLM directly without prior examples. The LLM is expected to generate a response without guidance and with whatever knowledge it has.

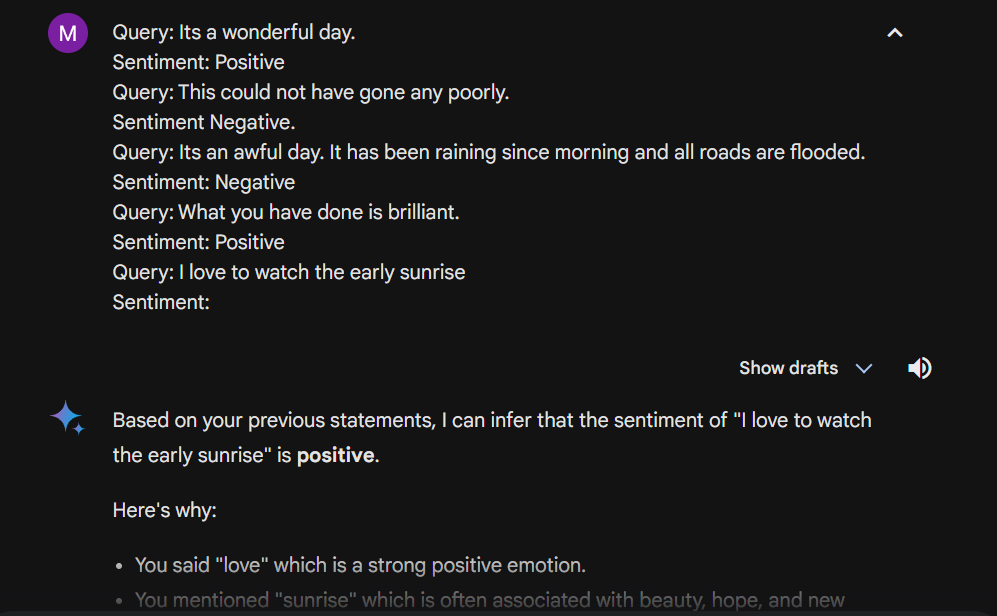

One-Shot Prompting

One-Shot improves upon ZSP by providing the LLM with a query-response pair as an example, along with the actual query. The LLM learns from the example and then tries to generate a similar response to the actual query in question.

Few-Shot Prompting

Few-Shot extends one-shot by providing a handful of examples (typically two to five) instead of just one. This helps keep the format and style of the responses consistent.

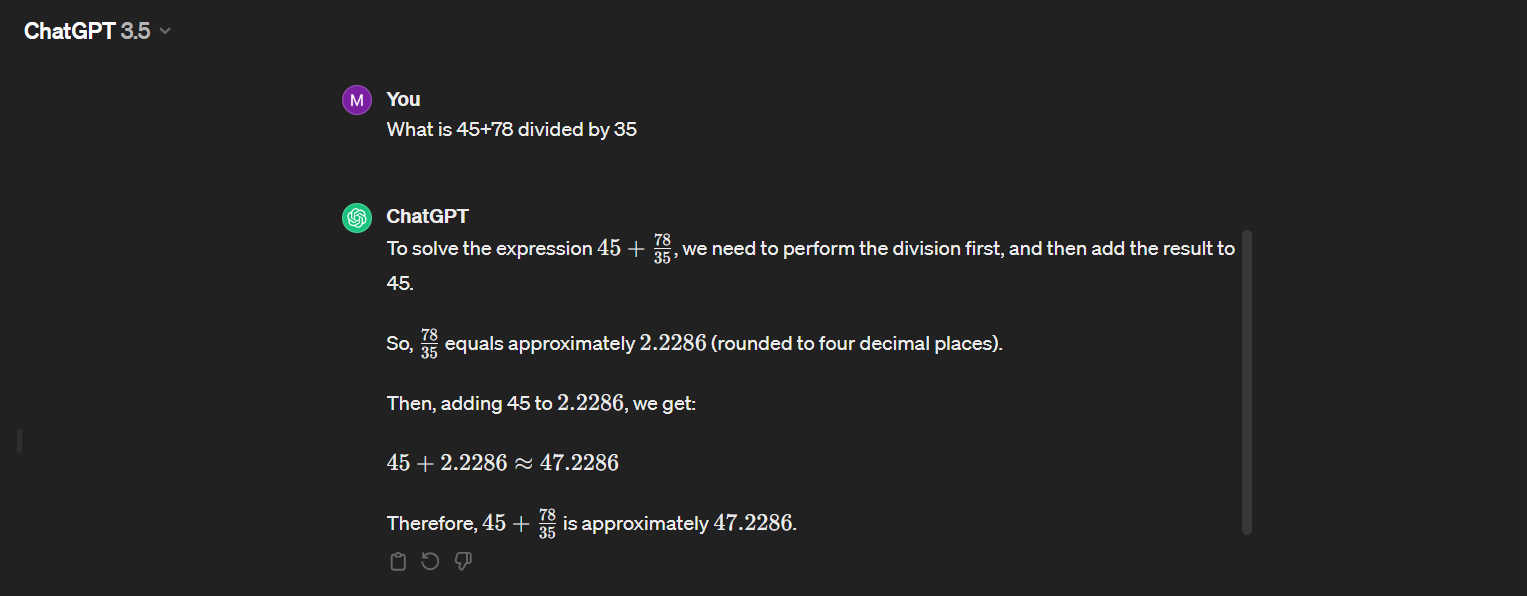

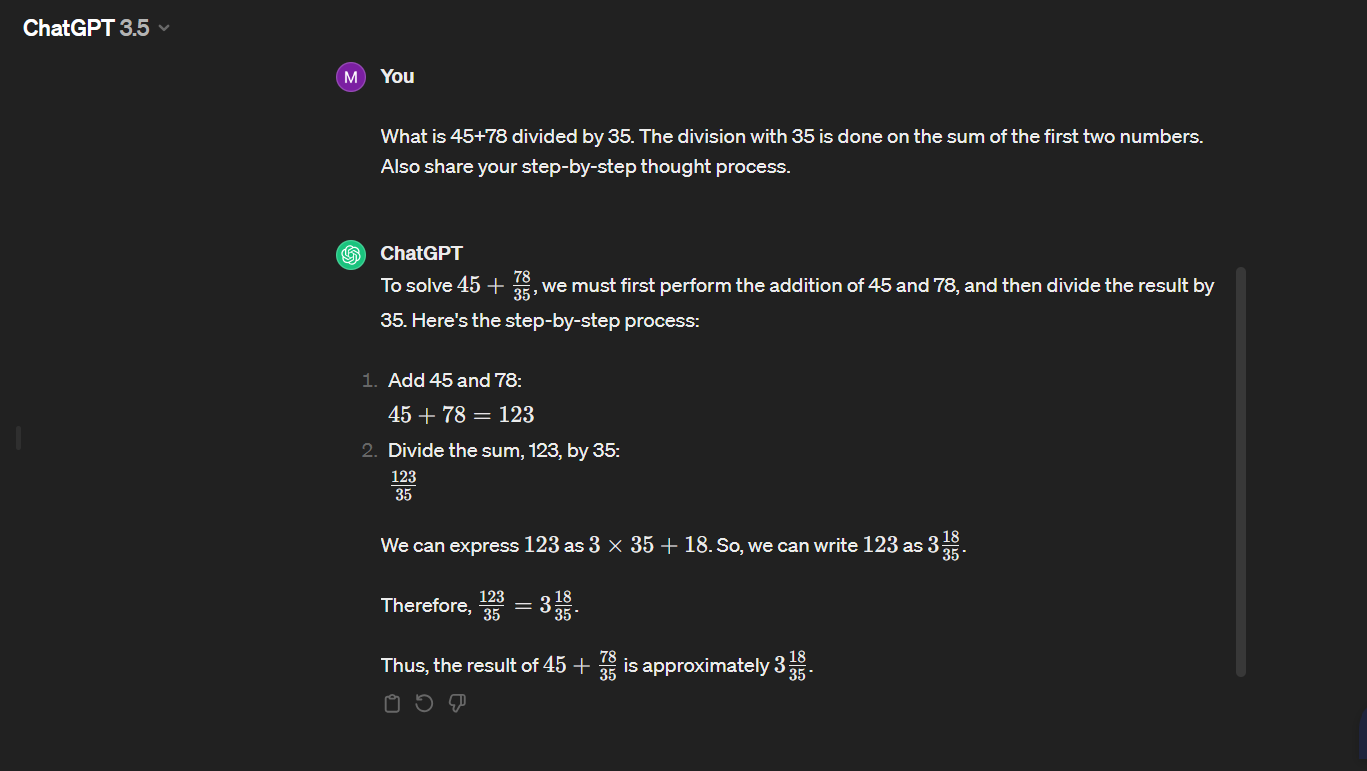

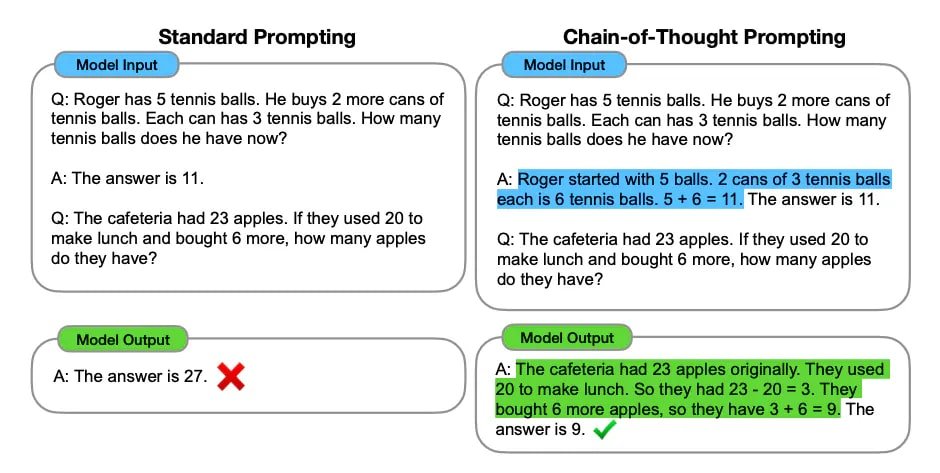

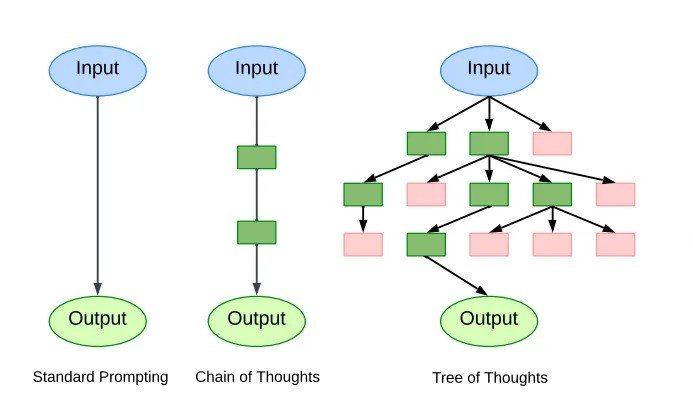
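The three techniques above differ only in how many worked examples precede the real query. The sketch below shows that with a sentiment-classification task; the `build_shot_prompt` helper, the `Review:`/`Sentiment:` labels, and the sample reviews are all made up for illustration.

```python
def build_shot_prompt(task, query, examples=()):
    """Build a zero-, one-, or few-shot prompt depending on len(examples)."""
    parts = [task]
    for review, label in examples:
        parts.append(f"Review: {review}\nSentiment: {label}")
    # The real query ends with an empty label for the model to complete.
    parts.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(parts)


TASK = "Classify the sentiment of each review as positive or negative."
EXAMPLES = [
    ("Great film, I loved it!", "positive"),
    ("A waste of two hours.", "negative"),
]

zero_shot = build_shot_prompt(TASK, "The plot was dull.")
one_shot = build_shot_prompt(TASK, "The plot was dull.", EXAMPLES[:1])
few_shot = build_shot_prompt(TASK, "The plot was dull.", EXAMPLES)
print(few_shot)
```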

Chain-of-Thought Prompting

CoT Prompting asks the LLM to work through a problem in intermediate steps before stating its conclusion. It is most useful for tasks that involve logical reasoning, such as math problems. CoT prompts tend to make LLMs more accurate on such tasks and add a layer of explainability to the process.
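A minimal sketch of this idea is shown below. It appends a step-by-step instruction to the question and then pulls the final answer out of the response. The helper names and the `Answer:` convention are assumptions made for this example, and `sample_response` is hand-written rather than produced by a model.

```python
import re

COT_SUFFIX = (
    "Let's think step by step, then give the final answer "
    "on a new line starting with 'Answer:'."
)


def build_cot_prompt(question):
    """Attach a chain-of-thought instruction to a question."""
    return f"{question}\n{COT_SUFFIX}"


def extract_answer(response):
    """Return the text after the last 'Answer:' line, or None if absent."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


prompt = build_cot_prompt(
    "A shop has 23 apples, uses 20, and buys 6 more. How many are left?"
)

# Hand-written stand-in for a model response that follows the instruction.
sample_response = (
    "The shop starts with 23 apples.\n"
    "It uses 20, leaving 23 - 20 = 3.\n"
    "It buys 6 more, so 3 + 6 = 9.\n"
    "Answer: 9"
)
print(extract_answer(sample_response))  # prints 9
```

Separating the reasoning from a clearly marked final line makes the answer easy to parse while keeping the intermediate steps available for inspection.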

Tree-of-Thought Prompting

ToT is similar to CoT, but it explores several reasoning paths instead of following a single linear chain. The ToT prompt method tells the model to evaluate multiple decision paths, and any path that does not seem to lead to a plausible conclusion is abandoned. This encourages the model to compare alternatives and can improve results on problems that require planning or search.
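The search pattern behind ToT can be sketched as a small beam search. In a real system, proposing and scoring the next thoughts would be done by model calls; here they are replaced by plain functions on a toy number puzzle so the example runs on its own. All names, the puzzle, and the scoring rule are assumptions made for illustration.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, ...]  # the path of numbers visited so far


def tree_of_thought(
    root: State,
    propose: Callable[[State], List[State]],
    score: Callable[[State], float],
    is_goal: Callable[[State], bool],
    beam_width: int = 2,
    max_depth: int = 5,
) -> Optional[State]:
    """Expand several paths per level, drop weak ones, keep the best few."""
    frontier = [root]
    for _ in range(max_depth):
        candidates = []
        for state in frontier:
            for nxt in propose(state):
                if is_goal(nxt):
                    return nxt
                if score(nxt) > 0:  # abandon paths that look implausible
                    candidates.append(nxt)
        if not candidates:
            return None
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    return None


TARGET = 10  # toy puzzle: reach 10 from 1 using "+3" or "*2" steps


def propose(path: State) -> List[State]:
    last = path[-1]
    return [path + (last + 3,), path + (last * 2,)]


def score(path: State) -> float:
    last = path[-1]
    if last > TARGET:
        return -1.0  # overshot the target, so this path is a dead end
    return 1.0 / (1 + TARGET - last)  # closer to the target scores higher


def is_goal(path: State) -> bool:
    return path[-1] == TARGET


result = tree_of_thought((1,), propose, score, is_goal)
print(result)  # prints (1, 4, 7, 10)
```

Note that the path through 8 scores higher at the second level but is abandoned once both of its continuations overshoot, which is the pruning behavior described above.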

Iterative Prompting

Modern LLM applications have conversational properties, i.e., they can understand follow-up prompts and generate responses by considering current and previous prompts. Users dissatisfied with a given response can further prompt the LLM with more details to refine its output. This is called iterative prompting. With every iteration, the LLM can tweak its last response depending on the new prompt from the user.

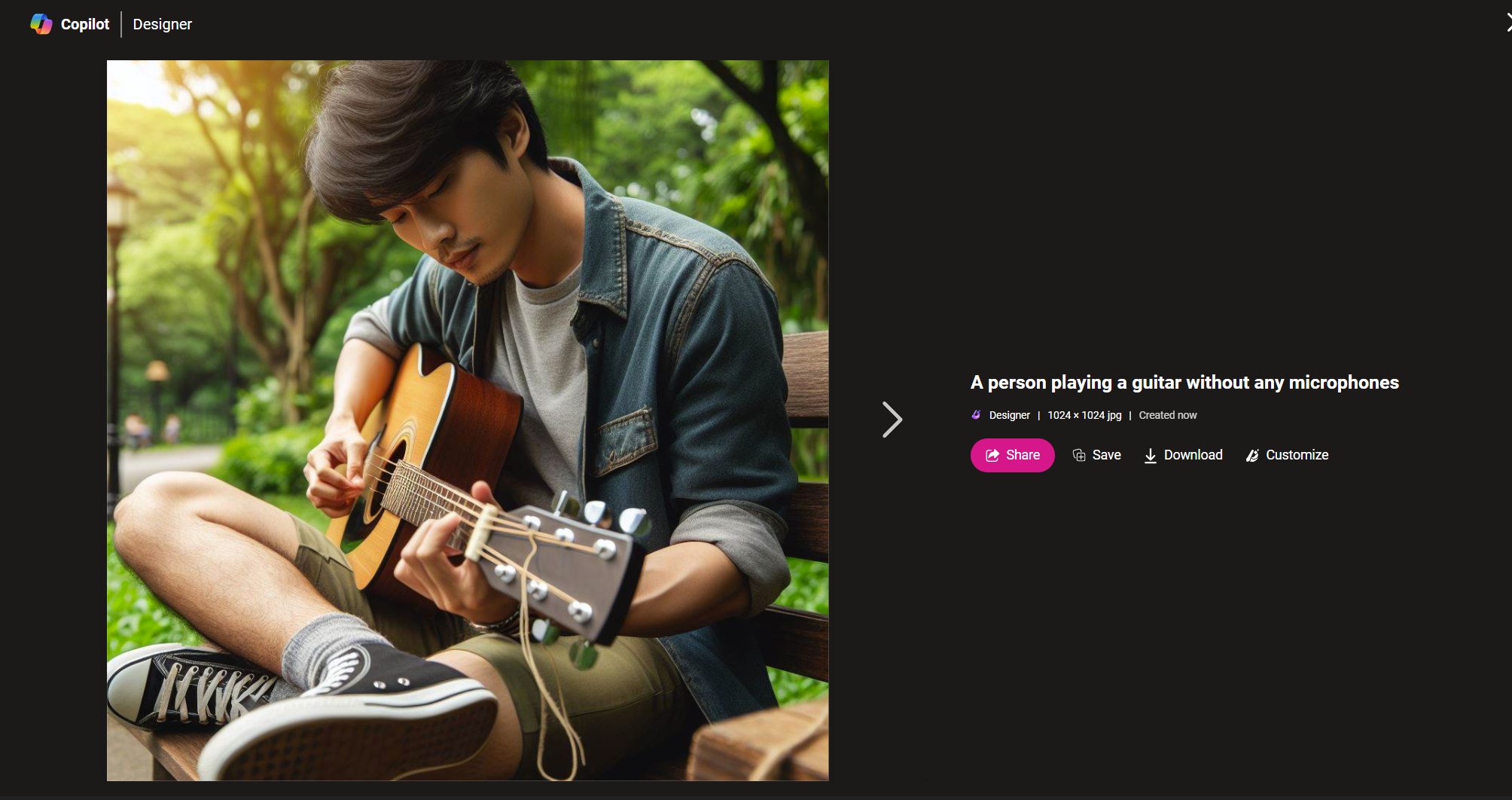
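Iterative prompting works because each new prompt is sent together with the earlier turns. The sketch below keeps that history in a small class; `Conversation` and `fake_model` are hypothetical, and `fake_model` merely stands in for a real LLM call so the example is runnable.

```python
def fake_model(messages):
    """Stand-in for a real LLM call: reports how many user turns it saw."""
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"Draft {len(user_turns)} (latest request: {user_turns[-1]})"


class Conversation:
    """Keeps the full message history so follow-ups can refine earlier output."""

    def __init__(self, model):
        self.model = model
        self.messages = []

    def ask(self, prompt):
        self.messages.append({"role": "user", "content": prompt})
        reply = self.model(self.messages)  # the model sees every prior turn
        self.messages.append({"role": "assistant", "content": reply})
        return reply


chat = Conversation(fake_model)
first = chat.ask("Write a short story about a young wizard named Harry.")
second = chat.ask("Write it again, and this time make Harry an orphan.")
print(second)
```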

Negative Prompting

While most prompting techniques convey the user’s requirements to the LLM, negative prompting specifies what the user does not want. It is more popular in text-to-image models, where users can specify certain elements they want the model to leave out. However, it can also be used with LLMs, e.g., to instruct the LLM not to use any contractions in its response.
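For an LLM, a negative prompt can be as simple as a list of "do not" rules appended to the request, optionally paired with a check on the output. The sketch below follows the contractions example; both helpers are hypothetical, and the regular expression is a rough heuristic that will also flag possessives such as "Harry's".

```python
import re


def add_negative_constraints(prompt, avoid):
    """Append one 'Do not use ...' rule per item in `avoid`."""
    rules = "\n".join(f"- Do not use {item}." for item in avoid)
    return f"{prompt}\n\nConstraints:\n{rules}"


# Rough heuristic: a word, an apostrophe, then a common contraction ending.
CONTRACTION = re.compile(r"\b\w+['’](?:t|s|re|ve|ll|d|m)\b", re.IGNORECASE)


def find_contractions(text):
    """Return the contractions found in `text`, in order of appearance."""
    return CONTRACTION.findall(text)


prompt = add_negative_constraints(
    "Summarize the article in two sentences.",
    avoid=["contractions", "bullet points"],
)
print(prompt)
print(find_contractions("I don't know, it's late."))
```

Checking the response afterwards is useful because a model may not follow a negative instruction every time.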

Prompt Engineering Benefits

Let’s discuss some key reasons why prompt engineering is essential for using Generative AI.

Better Response, Relevance, and Accuracy

Prompt engineering allows users to convey concrete requirements to the model. The model then has a clearer picture of what is required in the output and is more likely to generate an accurate response. Constraining the model’s responses can also help keep them within regulatory or organizational guidelines, making the application more reliable.

Improved Thought Process

Certain carefully crafted prompts lead the model to follow a chain of thought, resulting in a more logical response. Moreover, techniques like Few-shot prompting let the model pick up a pattern from the user’s examples. This way, the model generates outputs in the desired structure and stays consistent for as long as those examples remain in the conversation.

Improved Explainability

Using techniques like CoT or ToT prompting makes the model lay out its reasoning, i.e., how it came to a certain conclusion. The explanation helps users judge whether the response is accurate and gives an indication of how reliable the model is at logical reasoning.

Personalized Responses

Well-constructed system prompts ensure that the model’s responses are structured in a certain way. This allows users to build personalized chatbots that tackle specific queries and answer in set formats. For example, for an educational bot, the system prompt can ask the model to respond like a college professor. This way, the model’s responses will be technically detailed while using language that college students can understand.

Time-Saving

Having accurate and relevant responses means developers spend less time debugging the model’s output. Since the model’s instructions are pre-defined, they also spend less time trying different iterations of prompts to get the desired response.

Prompt Engineering in Computer Vision

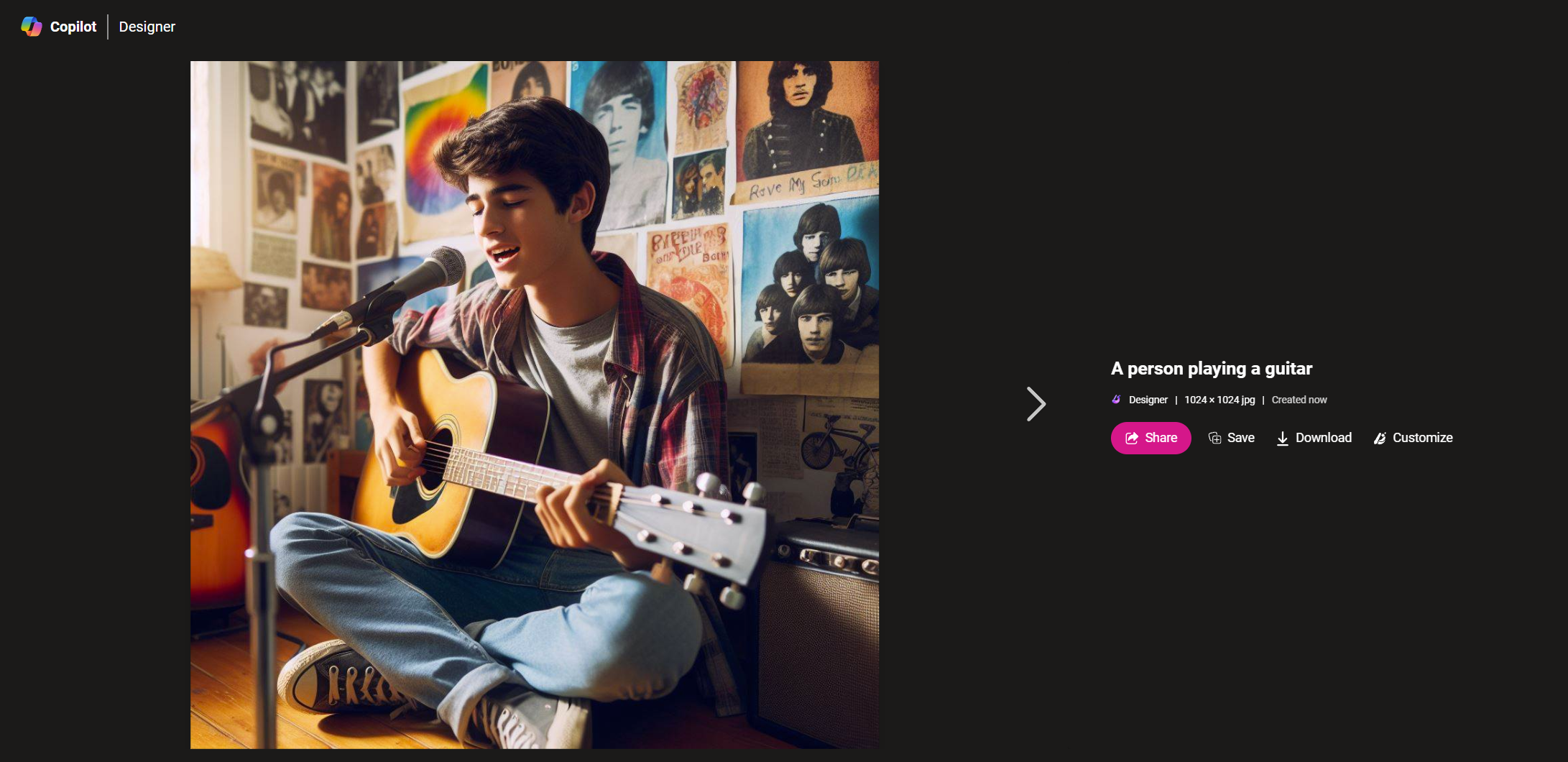

So far, we have discussed prompt engineering only in terms of LLMs, because the practice is most closely associated with language models. However, prompt engineering is also applied to modern text-to-image models such as DALL·E 3 and Stable Diffusion.

The text-to-image model accepts a text prompt describing the required image. The model can understand the various requirements from the prompt and correspondingly generate a visual response. In this scenario, prompt engineering helps the model understand the type of visual that is required by the user.

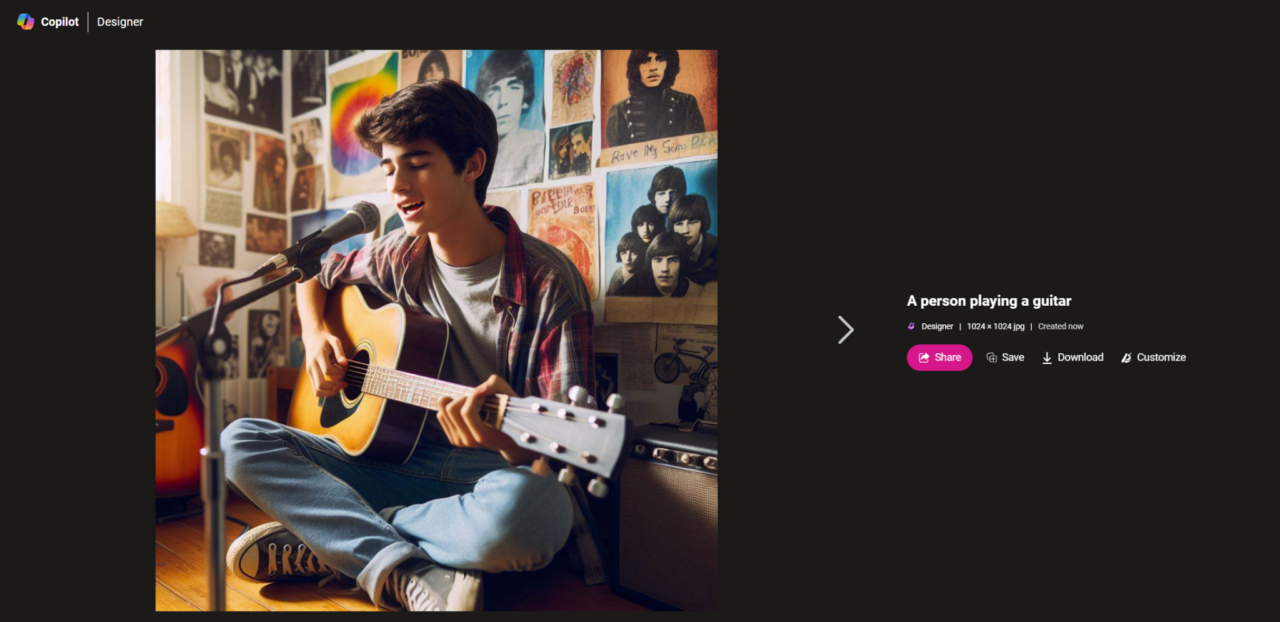

Prompt: Generate a person playing a guitar.

We can use prompt-engineering techniques to improve the results of the generated image. Techniques like iterative and negative prompting are popular in tweaking the results of text-to-image models.

Some generative AI tools, such as Midjourney, provide additional parameters to specify negative prompts. Users can append the parameter ‘--no’ to the prompt, followed by the elements to exclude.
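As a small illustration, the helper below appends such a parameter to an image prompt. It assumes the parameter is written as `--no` followed by a comma-separated list of elements; `with_negative_prompt` is a hypothetical function, not part of any tool.

```python
def with_negative_prompt(prompt, exclude):
    """Append a Midjourney-style --no parameter listing elements to exclude."""
    if not exclude:
        return prompt
    return f"{prompt} --no {', '.join(exclude)}"


image_prompt = with_negative_prompt(
    "a person playing a guitar", ["crowd", "microphone"]
)
print(image_prompt)  # prints: a person playing a guitar --no crowd, microphone
```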

Prompt Engineering: Key Takeaways

The rise of generative AI (GenAI) has made prompt engineering a skill in its own right and has even created dedicated prompt engineering jobs. Prompt engineering applies to real-world generative applications that take natural language input, such as chatbots and text-to-image tools. Here’s what we learned about effective prompting:

- A prompt is the input, usually text, that tells the model what kind of response it must generate.

- The initial prompt queries are tweaked with prompt engineering to generate an accurate and relevant response.

- Some popular prompt engineering techniques include:

  - Chain-of-Thought Prompting

  - Tree-of-Thought Prompting

  - Few-Shot Prompting

  - Negative Prompting

- Prompt engineering benefits developers by reducing the time required to fix errors, improving response relevancy to tasks, and allowing them to build a personalized application.

- Prompt engineering also benefits text-to-image models by allowing users to modify the visual output according to personal preference.
