In 2024, the Royal Swedish Academy of Sciences awarded the Nobel Prize in Physics to John J. Hopfield and Geoffrey E. Hinton “for foundational discoveries and inventions that enable machine learning with artificial neural networks,” recognizing them as pioneers of artificial intelligence (AI). Physics has long been a source of groundbreaking discoveries that change our understanding of the universe and advance our technology. John Hopfield is a physicist whose work extended into machine learning and AI. Geoffrey Hinton, a computer scientist often called the “godfather of AI,” is credited with many of the ideas behind today’s advances in AI.

Both John Hopfield and Geoffrey Hinton conducted foundational research on artificial neural networks (ANNs). The prize recognizes work that made machine learning with ANNs possible, allowing machines to learn in ways once thought exclusive to humans. In this overview, we will explore the key concepts in Hopfield’s and Hinton’s research that have shaped modern AI and earned them the Nobel Prize.

Review of Artificial Neural Networks (ANNs): The Foundation of Modern AI

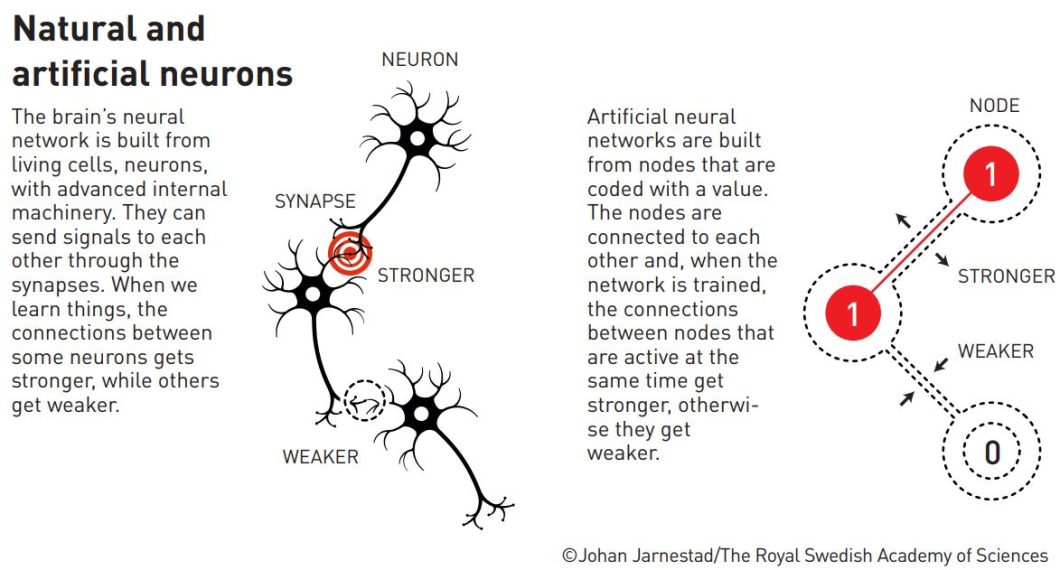

John Hopfield and Geoffrey Hinton made foundational discoveries and inventions that enabled machine learning with artificial neural networks (ANNs), the building blocks of modern AI. Machine learning and neural networks have roots in mathematics, computer science, biology, and physics. For example, ANNs are inspired by the biological neurons in the brain. Essentially, an ANN is a large collection of “neurons,” or nodes, connected by “synapses,” or weighted couplings. Rather than executing a predetermined set of instructions, these networks are trained to perform tasks. Mathematically, such networks resemble the spin models of statistical physics, which are used in theories of magnetism and alloys.

Research on neural networks and machine learning dates back to the early days of computing. An ANN is built from nodes organized into layers, with a weight attached to each connection between nodes. During training, data is fed into the network, and a learning rule, a mathematical procedure, adjusts the connection weights. Researchers have explored two main architectures for systems of interconnected nodes:

- Recurrent Neural Networks (RNNs)

- Feedforward neural networks

RNNs take in sequential data, such as a time series, to make sequential predictions, and they are known for their “memory”: loops in the network feed earlier information back in. RNNs are useful for a wide range of tasks, such as weather or stock price prediction and, more recently, deep learning tasks like language translation, natural language processing (NLP), sentiment analysis, and image captioning. Feedforward neural networks, on the other hand, are more traditional one-way networks in which data flows in a single direction (forward), with no loops. With these basics in place, let’s look at John Hopfield’s and Geoffrey Hinton’s research individually.
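To make the contrast concrete, here is a minimal sketch in plain Python (no libraries) of a feedforward pass and of a recurrent network stepping through a sequence. The function names, the tanh activation, and the weight layout are illustrative assumptions, not a reference implementation.

```python
import math

def dense(weights, bias, inputs):
    """One fully connected layer: weighted sum of the inputs plus a bias, then tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, bias)]

def feedforward(x, layers):
    """Data flows one way: each layer's output becomes the next layer's input."""
    for weights, bias in layers:
        x = dense(weights, bias, x)
    return x

def recurrent(sequence, w_in, w_rec, bias):
    """The hidden state h loops back into the network at every time step."""
    h = [0.0] * len(bias)
    for x in sequence:
        # the new state depends on the current input AND the previous state (the "memory")
        h = [math.tanh(sum(a * xi for a, xi in zip(row_in, x))
                       + sum(r * hj for r, hj in zip(row_rec, h)) + b)
             for row_in, row_rec, b in zip(w_in, w_rec, bias)]
    return h

# Usage with hypothetical weights
print(feedforward([1.0, -1.0], [([[0.5, 0.5]], [0.0])]))  # [0.0]
print(recurrent([[1.0], [0.0]], [[1.0]], [[1.0]], [0.0]))  # a positive value
print(recurrent([[0.0], [0.0]], [[1.0]], [[1.0]], [0.0]))  # [0.0]
```

Both sequences end with the same input, yet the recurrent network’s final state differs, because the earlier input is carried forward in its hidden state. A feedforward network has no such loop, so its output depends only on the current input.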

Hopfield’s Contribution: Recurrent Networks and Associative Memory

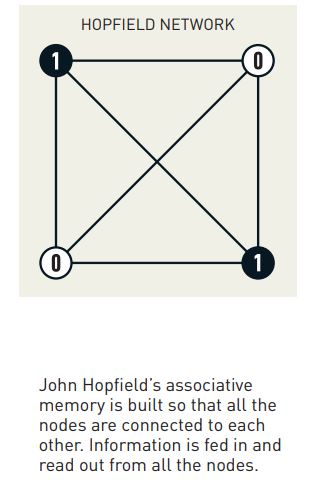

John J. Hopfield, a physicist working in biological physics, published a dynamical model for an associative memory based on a simple recurrent neural network in 1982. This memory-based recurrent structure was new, and it was influenced by his background in physics, such as domains in magnetic systems and vortices in fluid flow. Networks with loops allow information to persist and influence future computations, much like a chain of whispers in which each person’s whisper affects the next.

Hopfield’s most significant contribution was the development of the Hopfield Network model.

The Hopfield Network Model

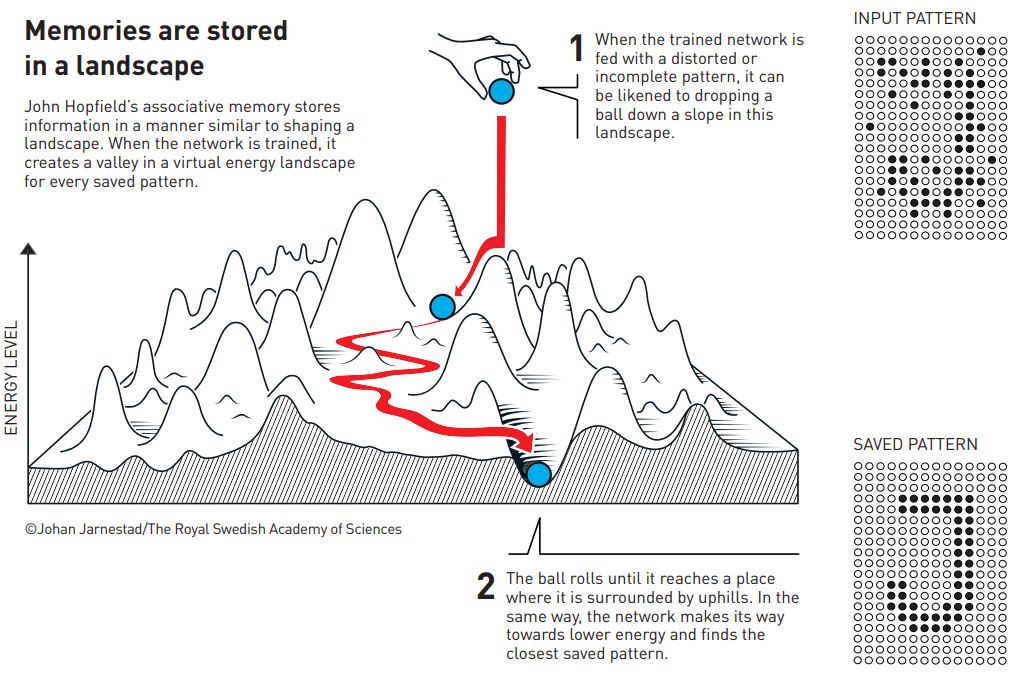

Hopfield’s network model is an associative memory based on a simple recurrent neural network. Like any RNN, it consists of connected nodes with feedback loops, but Hopfield’s model had a distinctive feature: an “energy function” that assigns a value to every possible state of the network. Imagine this energy function as a landscape with hills and valleys. The network’s state is like a ball rolling on this landscape, and it naturally settles in the lowest points, the valleys, which represent stable states. These stable states are the memories stored in the network.
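The landscape picture corresponds to a concrete formula. Assuming units s_i that take the values -1 or +1, symmetric weights w_ij with no self-connections, and thresholds set to zero for simplicity, Hopfield’s energy is E = -1/2 Σ w_ij s_i s_j, summed over all pairs i, j. The plain-Python sketch below computes this energy and updates one unit at a time by setting it to the sign of its weighted input. Under these assumptions each update lowers the energy or leaves it unchanged, which is the ball rolling downhill. The helper names (`energy`, `update_unit`, `settle`) are hypothetical.

```python
import random

def energy(w, s):
    """Hopfield energy E = -1/2 * sum_ij w_ij * s_i * s_j (thresholds taken as zero)."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def update_unit(w, s, i):
    """Set unit i to the sign of its weighted input; with symmetric w and
    w_ii = 0 this never increases the energy."""
    field = sum(w[i][j] * s[j] for j in range(len(s)))
    s[i] = 1 if field >= 0 else -1

def settle(w, s, sweeps=10, seed=0):
    """Let the state roll downhill: update units one at a time in random order,
    recording the energy after every update."""
    rng = random.Random(seed)
    s = list(s)
    history = [energy(w, s)]
    for _ in range(sweeps):
        order = list(range(len(s)))
        rng.shuffle(order)
        for i in order:
            update_unit(w, s, i)
            history.append(energy(w, s))
    return s, history

# Usage: random symmetric weights with a zero diagonal (illustrative only)
rng = random.Random(1)
n = 6
w = [[0.0] * n for _ in range(n)]
for i in range(n):
    for j in range(i + 1, n):
        w[i][j] = w[j][i] = rng.uniform(-1, 1)
state, energies = settle(w, [rng.choice([-1, 1]) for _ in range(n)])
print(energies[0], energies[-1])  # the final energy is never above the starting one
```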

The term “associative memory” means that the network can steer an input pattern, even a distorted one, to the correct stable state. It’s like recognizing a song from just a few notes. Even if you give the network a partial or noisy input, it can still retrieve the complete memory, like filling in the missing parts of a puzzle. This ability to recall complete patterns from incomplete information is what made the Hopfield network a significant contribution to machine learning.
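A minimal sketch of associative recall follows, assuming ±1 patterns, the common textbook Hebbian storage rule (w_ij proportional to the sum of x_i x_j over the stored patterns, with w_ii = 0), and unit-by-unit updates. It illustrates the idea rather than reproducing Hopfield’s 1982 formulation; the names `store` and `recall` are hypothetical.

```python
def store(patterns):
    """Hebbian storage: w_ij = (1/N) * sum over patterns of x_i * x_j, with w_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def recall(w, probe, max_sweeps=10):
    """Update units in a fixed order until a full sweep changes nothing (a stable state)."""
    s = list(probe)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            field = sum(w[i][j] * s[j] for j in range(len(s)))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i], changed = new, True
        if not changed:
            break
    return s

# Usage: store two 8-unit patterns, then recall one from a corrupted copy
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = store([p1, p2])
noisy = [-1] + p1[1:]           # p1 with its first unit flipped
print(recall(w, noisy) == p1)   # True
```

With only a few stored patterns relative to the number of units, a probe that differs from a stored pattern in one unit falls back into that pattern’s valley. Storing too many patterns makes the valleys interfere, and recall degrades.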

Applications of Hopfield Networks

The Hopfield network has influenced research across computer science to this day. Researchers found applications in many areas, particularly pattern recognition and optimization. Hopfield networks can recognize images even when they are distorted or incomplete, and their associative memory has been used for tasks like image reconstruction. They have also been applied to optimization problems, where the goal is to find the best solution among many possibilities, such as the shortest route; a classic example is finding approximate solutions to the traveling salesman problem.

Hopfield’s work laid the foundation for further advancements in neural networks, especially in deep learning. His research inspired many others to explore the potential of neural networks, including Geoffrey Hinton, who took these ideas to new heights with his work on deep learning and generative AI. Next, let’s dive into Hinton’s research and see why he is often called the godfather of AI.

Hinton’s Contribution: Deep Learning and Generative AI

Geoffrey Hinton is a pioneer in AI whose research underpins many of today’s advances in artificial neural networks. His work changed our view of how machines can learn and paved the way for modern AI applications that are transforming industries. Hinton explored several types of artificial neural networks and made significant contributions to the architectures and training techniques discussed in this section.

Hinton’s Work on Various ANN Architectures

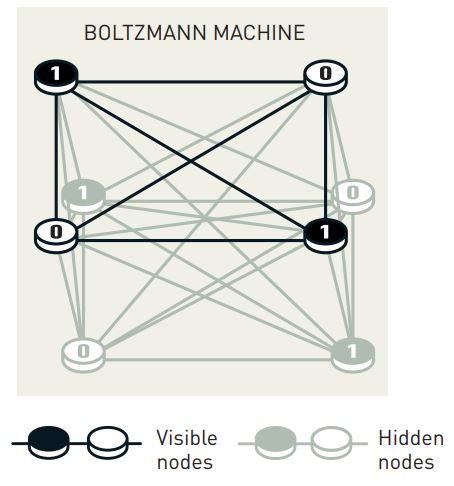

In 1983–1985, Geoffrey Hinton, together with Terrence Sejnowski and other coworkers, developed an extension of Hopfield’s model called the Boltzmann machine. It is a stochastic recurrent neural network, but unlike the Hopfield model, the Boltzmann machine is a generative model. The Boltzmann machine is one of the earliest approaches to deep learning. It uses a stochastic (random) approach to learn the underlying structure of data. Its nodes are either visible, representing the input data, or hidden, capturing internal representations. Imagine it as a network of connected switches, each randomly flipping between “on” and “off” states, with the odds of each flip set by its connections.
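The randomly flipping switches can be written down directly. In a Boltzmann machine with binary (0 or 1) units, a unit switches on with a probability given by the logistic function of its energy gap (its bias plus the weighted input from the other units) divided by a temperature T. The sketch below, with hypothetical helper names and illustrative weights, performs one sweep of this stochastic update (Gibbs sampling), optionally holding some units clamped, as is done with visible units during training. The learning rule itself is not shown.

```python
import math
import random

def p_on(w, b, s, i, temperature=1.0):
    """Probability that unit i is on: logistic function of its energy gap over T."""
    # energy gap E(s_i = 0) - E(s_i = 1) = bias + weighted input from the other units
    gap = b[i] + sum(w[i][j] * s[j] for j in range(len(s)) if j != i)
    return 1.0 / (1.0 + math.exp(-gap / temperature))

def gibbs_sweep(w, b, s, rng, clamped=(), temperature=1.0):
    """Visit each unclamped unit once and switch it on at random with probability p_on."""
    for i in range(len(s)):
        if i in clamped:  # e.g. visible units held at a data vector
            continue
        s[i] = 1 if rng.random() < p_on(w, b, s, i, temperature) else 0
    return s

# Usage: two coupled units (illustrative values), unit 0 clamped on
w = [[0.0, 2.0], [2.0, 0.0]]
b = [0.0, -1.0]
print(p_on(w, b, [1, 0], 1))  # logistic(2 - 1), about 0.731
rng = random.Random(0)
samples = [gibbs_sweep(w, b, [1, 0], rng, clamped={0})[1] for _ in range(1000)]
print(sum(samples) / len(samples))  # empirical estimate of the same probability
```

Raising the temperature pushes every probability toward 0.5 (more random flipping), while lowering it makes the units behave more like the deterministic Hopfield update.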

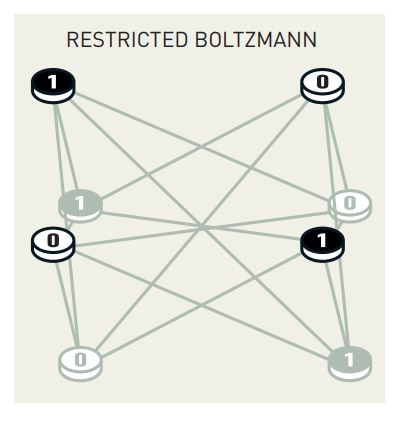

The Boltzmann machine keeps the energy concept of the Hopfield model: low-energy states are the most probable, and training shapes the energy landscape so that states resembling the training data have low energy. This architecture, unusual at the time, allowed the network to learn internal representations and even generate new samples resembling the data it was trained on. However, training Boltzmann machines is computationally expensive. So Hinton and his colleagues turned to a simplified version called the Restricted Boltzmann Machine (RBM). The RBM has fewer connections, and therefore fewer weights, making it easier to train while remaining a versatile tool.

In a restricted Boltzmann machine, there are no connections between nodes in the same layer. This proved particularly useful when Hinton later showed how to stack RBMs to build multilayered networks capable of learning complex patterns. The machines are often used in a chain, one after the other: after the first RBM is trained, the activity of its hidden nodes is used to train the next machine, and so on.
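Because an RBM has no connections within a layer, each hidden unit depends only on the visible layer (and vice versa), so a whole layer can be computed in one pass. The minimal sketch below assumes binary units, a visible-by-hidden weight matrix `w`, visible biases `b`, and hidden biases `c` (our notation). `stack_forward` shows how trained RBMs can be chained so that one machine’s hidden activity becomes the next machine’s input; training is omitted, and the all-zero weights in the usage lines are placeholders.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def hidden_probs(w, c, v):
    """P(h_j = 1 | v) for every hidden unit j; with no hidden-hidden links,
    each depends only on the visible vector v."""
    return [sigmoid(c[j] + sum(v[i] * w[i][j] for i in range(len(v))))
            for j in range(len(c))]

def visible_probs(w, b, h):
    """P(v_i = 1 | h) for every visible unit i; likewise no visible-visible links."""
    return [sigmoid(b[i] + sum(w[i][j] * h[j] for j in range(len(h))))
            for i in range(len(b))]

def stack_forward(rbms, v):
    """Pass data up a stack of trained RBMs given as (w, b, c) tuples:
    each machine's hidden probabilities become the next machine's input."""
    for w, b, c in rbms:
        v = hidden_probs(w, c, v)
    return v

# Usage with placeholder all-zero parameters: 4 visible -> 3 hidden -> 2 hidden
rbm1 = ([[0.0] * 3 for _ in range(4)], [0.0] * 4, [0.0] * 3)
rbm2 = ([[0.0] * 2 for _ in range(3)], [0.0] * 3, [0.0] * 2)
print(stack_forward([rbm1, rbm2], [1, 0, 1, 0]))  # [0.5, 0.5]
```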

Backpropagation: Training AI Effectively

In 1986, David Rumelhart, Hinton, and Ronald Williams demonstrated how architectures with one or more hidden layers could be trained for classification using the backpropagation algorithm. This algorithm acts as a feedback mechanism for neural networks. Its objective is to minimize the mean squared deviation between the network’s output and the training data by gradient descent.

In simple terms, backpropagation lets the network learn from its mistakes: it adjusts the weights of the connections according to the errors the network makes, which improves its performance over time. Backpropagation, which Hinton’s 1986 work helped establish, remains essential for training deep neural networks efficiently today.
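As an illustration, here is a plain-Python sketch of backpropagation for a tiny network with two inputs, two sigmoid hidden units, and one sigmoid output, trained by gradient descent on the mean squared error. The network size, the XOR-style toy data, the learning rate of 0.5, and the function names are our assumptions. The key step is the chain rule: the output error is sent backwards through the weights to give each hidden unit its share of the blame.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(params, x):
    """Return the hidden activations and the single output for input x."""
    w1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, y

def mse(params, data):
    """Mean squared deviation between network output and targets."""
    return sum((forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)

def train_step(params, data, lr=0.5):
    """One gradient-descent step; the gradients are computed by backpropagation."""
    w1, b1, w2, b2 = params
    n = len(data)
    gw1 = [[0.0] * len(row) for row in w1]
    gb1 = [0.0] * len(b1)
    gw2 = [0.0] * len(w2)
    gb2 = 0.0
    for x, t in data:
        h, y = forward(params, x)
        # error signal at the output: d(MSE)/d(output pre-activation)
        delta_out = 2.0 * (y - t) * y * (1.0 - y) / n
        gb2 += delta_out
        for j, hj in enumerate(h):
            gw2[j] += delta_out * hj
            # send the error backwards through weight w2[j] to hidden unit j
            delta_h = delta_out * w2[j] * hj * (1.0 - hj)
            gb1[j] += delta_h
            for k, xk in enumerate(x):
                gw1[j][k] += delta_h * xk
    # move every parameter a small step against its gradient
    w1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(w1, gw1)]
    b1 = [b - lr * g for b, g in zip(b1, gb1)]
    w2 = [w - lr * g for w, g in zip(w2, gw2)]
    return w1, b1, w2, b2 - lr * gb2

# Usage: random initial weights, XOR-style toy data
rng = random.Random(0)
params = ([[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
          [rng.uniform(-1, 1) for _ in range(2)],
          [rng.uniform(-1, 1) for _ in range(2)],
          rng.uniform(-1, 1))
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
before = mse(params, data)
trained = params
for _ in range(2000):
    trained = train_step(trained, data)
print(before, mse(trained, data))  # training lowers the error
```

Full-batch updates keep the sketch short; practical training typically uses mini-batches and automatic differentiation from a library.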

Towards Deep Learning and Generative AI

These breakthroughs by Hinton and his colleagues were soon followed by successful applications in AI, including pattern recognition in images, language, and clinical data. One example was the convolutional neural network (CNN), a multilayered network trained by backpropagation. Another success of that period was long short-term memory (LSTM), created by Sepp Hochreiter and Jürgen Schmidhuber: a recurrent network for processing sequential data, such as speech and language, that can be mapped to a multilayered network by unfolding it in time. However, training deep multilayered networks with many connections between consecutive layers remained a challenge.

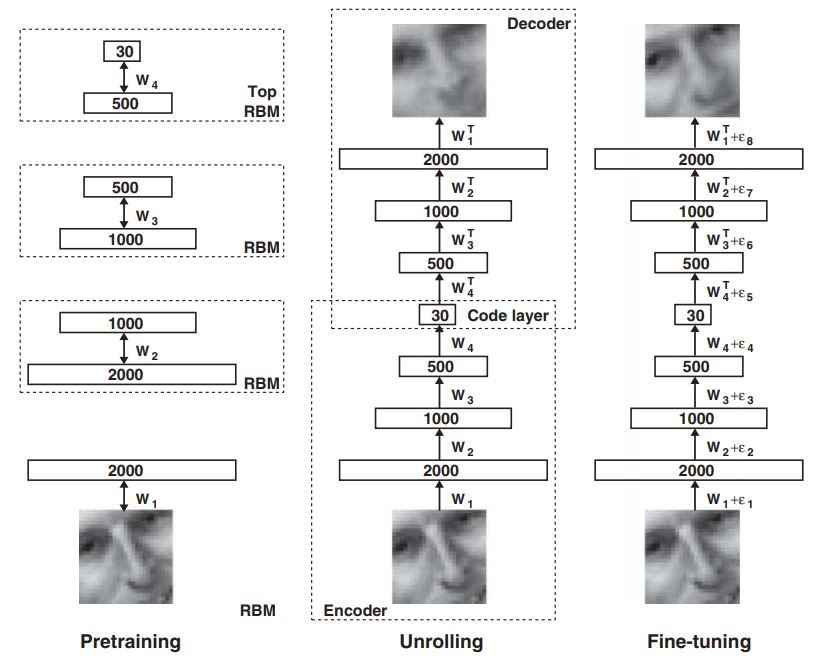

Hinton was a leading figure in overcoming this challenge, and an important tool was the restricted Boltzmann machine (RBM). For RBMs, Hinton created an efficient approximate learning algorithm, called contrastive divergence, which was much faster than the learning algorithm for the full Boltzmann machine. With Simon Osindero and Yee-Whye Teh, he then developed a pre-training procedure for multilayer networks in which the layers are trained one by one, each as an RBM. An early application of this approach was an autoencoder network for dimensionality reduction.
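Contrastive divergence replaces the expensive step of running a Boltzmann machine to equilibrium with a single reconstruction. The plain-Python sketch below performs one CD-1 update for a binary RBM: compute hidden probabilities from the data, sample hidden states, reconstruct the visible layer, recompute hidden probabilities, and nudge the weights toward the data statistics and away from the reconstruction statistics. Using probabilities for the reconstruction, a learning rate of 0.1, and the name `cd1_update` are common practical choices we have assumed, not necessarily Hinton’s exact recipe.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def cd1_update(w, b, c, v0, rng, lr=0.1):
    """One CD-1 step for a binary RBM; w (visible x hidden), b, c are updated in place.

    Positive statistics come from the data v0; negative statistics come from a
    single reconstruction instead of a long run to equilibrium.
    """
    n_v, n_h = len(b), len(c)
    # 1) hidden probabilities given the data, then a binary sample of them
    h0 = [sigmoid(c[j] + sum(v0[i] * w[i][j] for i in range(n_v))) for j in range(n_h)]
    h_sample = [1 if rng.random() < p else 0 for p in h0]
    # 2) reconstruct the visible layer (as probabilities) from the sampled hiddens
    v1 = [sigmoid(b[i] + sum(w[i][j] * h_sample[j] for j in range(n_h))) for i in range(n_v)]
    # 3) hidden probabilities given the reconstruction
    h1 = [sigmoid(c[j] + sum(v1[i] * w[i][j] for i in range(n_v))) for j in range(n_h)]
    # 4) move toward data statistics and away from reconstruction statistics
    for i in range(n_v):
        for j in range(n_h):
            w[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
        b[i] += lr * (v0[i] - v1[i])
    for j in range(n_h):
        c[j] += lr * (h0[j] - h1[j])
    return v1

# Usage: repeatedly train a 4-visible, 3-hidden RBM on one pattern
rng = random.Random(0)
w = [[rng.uniform(-0.01, 0.01) for _ in range(3)] for _ in range(4)]
b, c = [0.0] * 4, [0.0] * 3
for _ in range(1000):
    v1 = cd1_update(w, b, c, [1, 1, 0, 0], rng)
print([round(p, 2) for p in v1])  # reconstruction probabilities after training
```

Trained repeatedly on the single pattern [1, 1, 0, 0], the reconstruction moves toward that pattern. In layer-wise pre-training, the hidden activities of a trained RBM would then serve as the data for the next one.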

After pre-training, all of the network’s parameters could be fine-tuned globally with the backpropagation algorithm. Pre-training with RBMs identified structures in the data, like corners in images, without using labeled training data. Once these structures were found, learning to label them with backpropagation turned out to be relatively simple. By linking layers pre-trained in this way, Hinton successfully built deep and dense networks, a major achievement for deep learning. Now, let’s explore the impact of Hinton’s and Hopfield’s research and its future implications.

The Impact of Hopfield and Hinton’s Research

The groundbreaking research of Hopfield and Hinton has had a profound impact on AI. Their work advanced the theoretical foundations of neural networks and led to the capabilities AI has today. Image recognition, for example, has been greatly enhanced, enabling the detection of objects, faces, and even emotions. NLP is another area: building on their contributions, we now have models that can understand and generate human-like text, enabling popular applications such as GPT models.

The list of everyday applications based on ANNs is long; these networks are behind much of what we do with computers. Their research has also had a broader impact on scientific discovery. In fields like physics, chemistry, and biology, researchers use AI to simulate experiments and design new drugs and materials. In astrophysics and astronomy, ANNs have become a standard data analysis tool and were recently used to produce a neutrino image of the Milky Way.

Decision support in health care is also a well-established application of ANNs. A recent prospective randomized study of mammographic screening images showed a clear benefit of using machine learning to improve the detection of breast cancer. Another example is motion correction for magnetic resonance imaging (MRI) scans.

The Future Implications of the Nobel Prize in Physics 2024

The future implications of John J. Hopfield’s and Geoffrey E. Hinton’s research are vast. Hopfield’s research on recurrent networks and associative memory laid the groundwork, and Hinton’s further exploration of deep learning and generative AI led to the development of powerful AI systems. As AI continues to evolve, we can expect more groundbreaking research and transformative applications, and their work may help AI address some of the world’s most pressing challenges. The 2024 Nobel Prize in Physics is a testament to their remarkable achievements and lasting impact on AI. At the same time, as we continue to develop and deploy AI systems, we must use them ethically and responsibly, for the benefit of people and the planet.