Artificial intelligence (AI) is not a monolithic field, idea, or area of study. Instead, researchers are working on a spectrum of AI technologies with different capabilities, applications, and “levels of intelligence.” Today, we consider the following three broad categories when discussing the capability and scope of AI systems: Artificial Narrow Intelligence (ANI), Artificial General Intelligence (AGI), and Artificial Super Intelligence (ASI).

The Different Types of AI

Artificial Narrow Intelligence

What we’d consider the “least” intelligent in human terms is ANI, or artificial narrow intelligence. As the name suggests, narrow AI systems are designed and trained to execute specific tasks. While they can learn and improve their capabilities, their evolution is confined to a specific subset of tasks, and they do not possess general cognitive abilities.

Examples of ANI systems today include:

- Voice assistants: Siri, Alexa, Google Assistant, etc., which increasingly rely on large language models (LLMs)

- Autonomous vehicles: Tesla’s Autopilot, Waymo, etc.

- Cybersecurity tools and spam filters: CrowdStrike Falcon, Gmail and Microsoft Outlook spam filters, etc.

To learn more, check out our guide to Artificial Narrow Intelligence (ANI).

Artificial General Intelligence

AGI is a hypothetical AI system that can replicate human capabilities to understand new information and apply reason. We say it’s “hypothetical” because we have not yet achieved AGI, and whether it’s even theoretically possible is still up for debate.

As per current definitions, an AGI system would be able to understand, learn, and apply its knowledge across any field, similar to a human. A critical feature of AGI is the ability to generalize knowledge and reasoning from a limited subset of data to entirely new and unseen data sets. A person would, theoretically, be unable to distinguish between another human and an AGI if they did not know which they were interacting with.

While we’re not there yet, there are several systems that some consider to be on the cusp of achieving AGI:

- DeepMind’s AlphaGo

- OpenAI’s GPT-4

- Sophia by Hanson Robotics

Artificial Super Intelligence

While AGI would, at the very least, match human intelligence, artificial superintelligence (ASI) represents a system that surpasses human intelligence. Compared to us, an ASI would exhibit virtually unfathomable cognitive abilities in all areas, including creativity, problem-solving, and decision-making. Both AGI and ASI should have the potential for emergence, that is, the development of capabilities that have not been explicitly programmed.

However, super-intelligent AI may represent a higher form of thinking than the human mind is capable of. Because of this, it’s hard to even fully imagine what ultimate form this machine intelligence will take. Any speculation borders on science fiction, which is why the arrival of ASI is often called a technological “singularity,” a point where all our existing knowledge ceases to help us extrapolate into the future.

That being said, speculative examples of what ASI might be capable of are:

- Superintelligent Autonomous Systems

- Hyperintelligent Research AI

- Universal Problem Solver

- Autonomous swarm intelligence or “hive minds”

Understanding Artificial Super Intelligence: A Theoretical Deep Dive

Current research into ASI relies largely on the same theoretical foundations and conceptual frameworks as other AI systems. Since we are still nowhere close to ASI or even AGI, we may yet discover new models or computation techniques that completely change our approach to ASI.

Neural Networks

For now, most attempts to develop ASI are still grounded in well-known models, such as neural networks, machine learning/deep learning, and computational neuroscience.

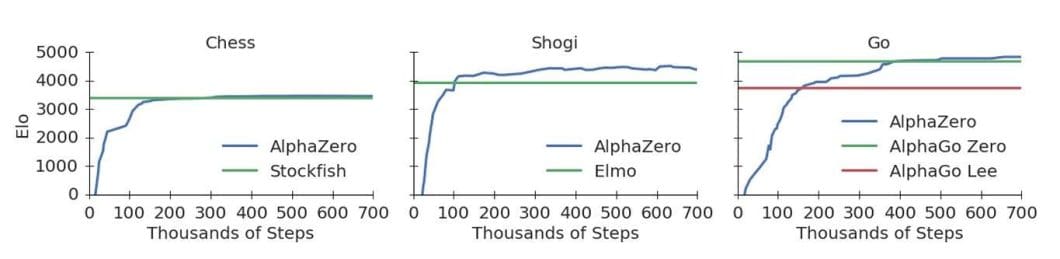

For example, DeepMind’s AlphaGo uses convolutional neural networks (CNNs) to evaluate game positions, mimicking high-level human cognition. Similarly, OpenAI continues to demonstrate the potential of transformers (a relatively simple architecture) to understand and generate complex human language.

Reinforcement Learning

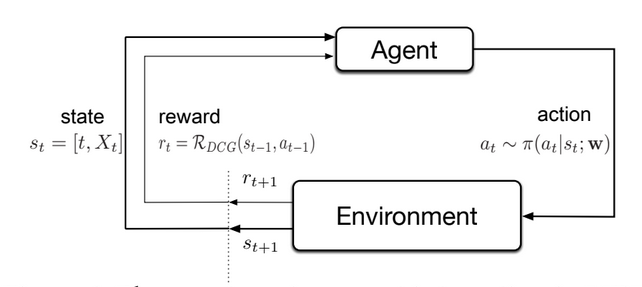

Many also strongly advocate for deep reinforcement learning as a potential key to first achieving and then exceeding human intelligence. This is because reinforcement learning most closely resembles how humans develop: by continuously interacting with and learning from our environment.

Through trial and error, machines can learn by receiving “penalties” for incorrect behaviors and “rewards” for correct actions. This approach is essential to develop self-improving AI systems that can generalize intelligence for a broad spectrum of tasks.
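To make the reward-and-penalty loop described above concrete, here is a minimal sketch of tabular Q-learning, one common reinforcement learning method. The corridor environment, the reward values, and every name and parameter in the code are assumptions chosen for illustration; the systems discussed in this article use far larger, deep-learning-based variants.

```python
import random


def train_corridor_agent(n_states=6, episodes=300, alpha=0.5, gamma=0.9,
                         epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor.

    The agent starts in cell 0 and the goal is the rightmost cell.
    """
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 = step left, action 1 = step right
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1

    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Trial and error: explore at random sometimes, otherwise exploit.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + moves[action], 0), goal)
            # "Reward" for reaching the goal, small "penalty" for any other step.
            reward = 1.0 if next_state == goal else -0.01
            best_next = 0.0 if next_state == goal else max(q[next_state])
            # Nudge the estimate toward reward + discounted future value.
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
            if state == goal:
                break
    return q


def greedy_policy(q):
    """Best known action for every non-goal cell."""
    return ["left" if left > right else "right" for left, right in q[:-1]]


q_table = train_corridor_agent()
policy = greedy_policy(q_table)
print(policy)
```

Nothing in the code tells the agent that moving right is correct; that preference is learned purely from the rewards and penalties it collects.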

Computational Neuroscience

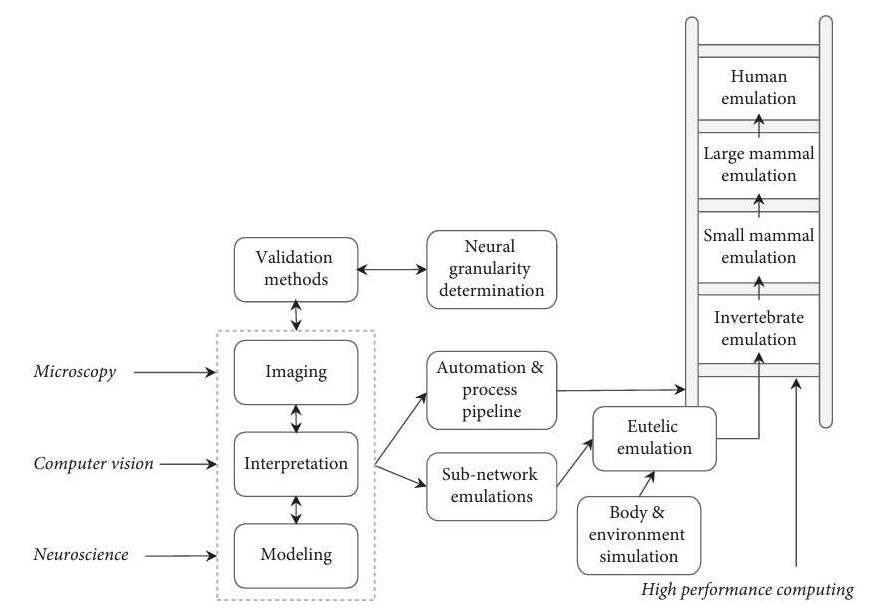

Lastly, computational neuroscience contributes to ASI by providing insights into human brain functions, which can be emulated in AI models. Techniques like neuroevolution, which involves evolving neural networks using genetic algorithms, are being explored to create more efficient AI systems capable of self-enhancement.
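As a rough illustration of the neuroevolution idea mentioned above, the sketch below evolves the weights of a tiny fixed-size network with a simple genetic algorithm instead of training it with gradients. The XOR task, the 2-2-1 network shape, the population settings, and all function names are assumptions made for this example, not a description of any particular research system.

```python
import math
import random

XOR_CASES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
GENOME_LENGTH = 9  # 4 hidden weights + 2 hidden biases + 2 output weights + 1 output bias


def forward(genome, inputs):
    """Run a 2-2-1 feed-forward network whose parameters are the genome."""
    g = genome
    h1 = math.tanh(g[0] * inputs[0] + g[1] * inputs[1] + g[2])
    h2 = math.tanh(g[3] * inputs[0] + g[4] * inputs[1] + g[5])
    z = g[6] * h1 + g[7] * h2 + g[8]
    z = max(-60.0, min(60.0, z))  # keep exp() in a safe range
    return 1.0 / (1.0 + math.exp(-z))


def fitness(genome):
    """Negative squared error over the XOR cases (higher is better)."""
    return -sum((forward(genome, x) - y) ** 2 for x, y in XOR_CASES)


def evolve(pop_size=40, generations=150, elite=4, sigma=0.4, seed=1):
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[:pop_size // 2]  # selection: the fitter half breeds
        children = [g[:] for g in population[:elite]]  # elitism: keep the best unchanged
        while len(children) < pop_size:
            mum, dad = rng.sample(parents, 2)
            # Uniform crossover followed by Gaussian mutation of every gene.
            children.append([rng.choice(pair) + rng.gauss(0, sigma)
                             for pair in zip(mum, dad)])
        population = children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history


best_genome, best_history = evolve()
print("best fitness at start:", best_history[0])
print("best fitness at end:  ", best_history[-1])
```

Because the fittest genomes are copied unchanged into each new generation, the best fitness can never get worse from one generation to the next; whether the network fully solves XOR depends on the random seed and settings.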

Estimates for when we will achieve AGI range from 5 to 30 years from now. As such, most papers on the subject are highly speculative and extrapolate from the current state of AI. Nick Bostrom’s book, “Superintelligence: Paths, Dangers, Strategies,” is one of the most influential on the subject. It explores the most likely pathways to achieving AGI as well as its potential risks, economic impact, and concerns regarding ethics and morality.

In particular, Bostrom highlights mastering whole-brain emulation as a likely milestone for developing human-level intelligence. As the human brain is the most efficient and powerful computing system we know, this method relies on accurately simulating it.

ASI Characteristics

While our current understanding of what constitutes ASI is likely to evolve, most AI researchers would agree that it should have the following key characteristics, which the integration of the technologies above is meant to facilitate:

- Self-improvement and recursive self-enhancement

- Autonomy and decision-making capabilities

- Broad and accurate generalization across various domains

Groundbreaking Developments Toward ASI

Alan Turing’s 1950 paper, “Computing Machinery and Intelligence,” is considered by many to be the seminal piece of work on AI and even AGI. This paper proposes the “Turing Test,” still taught to computer science students all over the world, as a measure of “true” machine intelligence.

We’ve come a long way since then in realizing these ideas, with many AI systems today exhibiting some of the traits of AGI, itself a stepping stone in the development of ASI. It will likely take the compounding effect of advancements across different disciplines of computer science to achieve ASI.

For example, the 2017 paper “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm” by Silver et al. describes DeepMind’s AlphaZero, which demonstrated remarkable generalization by mastering multiple games without human data.

Transformer Models

Transformer models, such as those detailed in the 2017 paper “Attention is All You Need” by Vaswani et al., have also revolutionized natural language processing to a state that’s almost unrecognizable from where it was just a few short years prior.
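The core operation of that paper is scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. The following is a minimal, dependency-free sketch of that single formula; the function names and the toy vectors are assumptions for illustration, and real transformer implementations add learned projections, multiple heads, masking, and batched tensor math.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "token" vectors attending to one another (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, attention_weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in attention_weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1, so every output is a blend of the value vectors, weighted by how closely the corresponding keys match the query.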

However, it will also take significant strides in the computational capabilities of our hardware to truly realize ASI. Many of the models we’ve just discussed have advanced the accuracy, performance, and computing efficiency achievable in their fields. Still, this will likely not be enough by itself to accommodate the sheer computational needs of a super-human intelligence.

Neuromorphic Computers

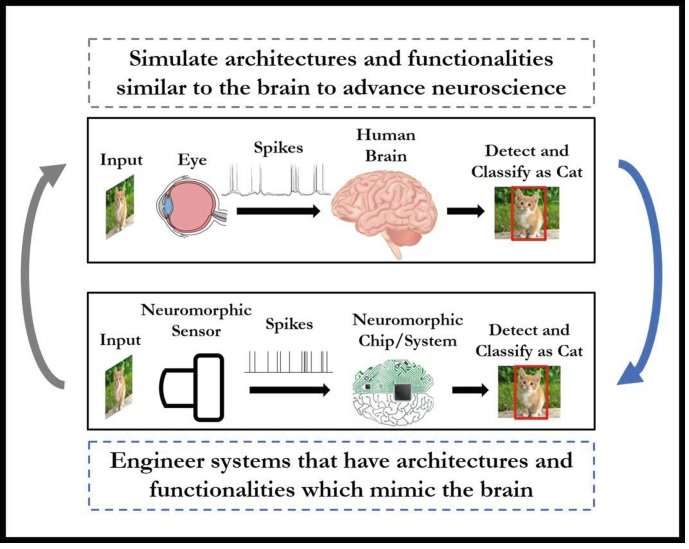

The average human brain consists of roughly 86 billion neurons and an estimated 100 trillion synapses. For now, our best bet to equal this level of performance seems to be the development of neuromorphic computers. These machines use artificial neurons to store and compute data, just like a human brain.

This differs from traditional computers, which store data in one set of components (e.g., RAM or hard drives) and carry out operations on it in another (e.g., the CPU). By eliminating the bottleneck that arises from constantly transporting data back and forth, neuromorphic computers can carry out operations much faster and more efficiently.
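To illustrate how a single artificial neuron can both hold state and compute on it, here is a minimal sketch of a leaky integrate-and-fire neuron, a simple spiking-neuron model of the kind neuromorphic hardware typically implements. The model choice, the parameter values, and the function name are assumptions for illustration and do not describe the circuitry of any specific chip.

```python
def simulate_lif(input_currents, decay=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron.

    Returns a list with 1 for every time step in which the neuron spikes.
    """
    potential = 0.0  # the neuron's own stored state ("memory")
    spikes = []
    for current in input_currents:
        # Leak a little of the stored potential, then integrate the new input.
        potential = potential * decay + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.3] * 8))
```

The stored value and the update rule live in the same unit, which is the co-location of memory and computation that the paragraph above contrasts with the RAM-to-CPU shuttle of conventional machines.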

Today, Hala Point by Intel is by far the most impressive computer we have in this regard. It contains 1.15 billion artificial neurons across 1,152 Loihi 2 chips, capable of 280 trillion synaptic operations per second.
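For a sense of scale, the figures quoted in this section can be compared directly. The short calculation below uses only the numbers given above; the variable names are illustrative, and raw neuron counts are at best a crude proxy for capability.

```python
HUMAN_BRAIN_NEURONS = 86e9   # roughly 86 billion neurons, as cited above
HALA_POINT_NEURONS = 1.15e9  # 1.15 billion artificial neurons
HALA_POINT_CHIPS = 1152      # Loihi 2 chips

neurons_per_chip = HALA_POINT_NEURONS / HALA_POINT_CHIPS
brain_to_machine_ratio = HUMAN_BRAIN_NEURONS / HALA_POINT_NEURONS

print(f"Artificial neurons per chip: about {neurons_per_chip:,.0f}")
print(f"Human neurons per Hala Point neuron: about {brain_to_machine_ratio:.0f}")
```

In other words, each chip hosts roughly a million artificial neurons, and it would take on the order of 75 such systems to match the neuron count of a single human brain.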

Another promising hardware avenue is the development of quantum computers. The 2019 Google AI paper “Quantum Supremacy Using a Programmable Superconducting Processor” reported that its Sycamore processor completed in 200 seconds a task that the authors estimated would take a state-of-the-art classical supercomputer 10,000 years.

The Future of Artificial Super Intelligence

Popular culture has given us plenty of reasons to both hope for and despair about the advent of artificial superintelligence (ASI). Films like 2001: A Space Odyssey, for example, explore the potential danger of relying on AI systems that exhibit human-level or superhuman intelligence and whose thought processes and incentives we can neither foresee nor understand.

Prominent voices in science and the tech industry have differing opinions on the matter. Some, like Elon Musk, Bill Gates, Max Tegmark, and Stephen Hawking, have advocated a cautious approach, with some going as far as calling for an outright pause on the development of advanced AI systems. Others, like Ray Kurzweil, see it as an inevitable stepping stone in human evolution that we should embrace and encourage.

As of now, AGI and ASI still represent a hypothetical future for humanity. For many, it signals a potential “singularity” or “point of no return” where AI will either become humanity’s most important technological advancement or a massive existential risk leading to its downfall.

The good news is that most estimates say that we still have decades to put the proper safeguards and incentives in place so that AGI or ASI is developed in a way that serves our best interests. The bad news is that the current pace of development has completely eclipsed our political and societal will to regulate the field of AI.