Chatbots are AI agents that simulate human conversation with a user. These programs handle a wide variety of tasks and are now a common sight on websites. The generative capabilities of Large Language Models (LLMs) have made chatbots more advanced and more capable than ever, so many businesses now want their own chatbot to answer FAQs or address customer concerns.

This increased interest in chatbots means developers need to learn how to create, use, and set them up. In this article, we focus on developing LangChain chatbots. LangChain is a popular framework for this type of development, and we will explore it in this hands-on guide. We’ll go through chatbots, LLMs, and LangChain, and create your first chatbot. Let’s get started.

Understanding Chatbots and Large Language Models (LLMs)

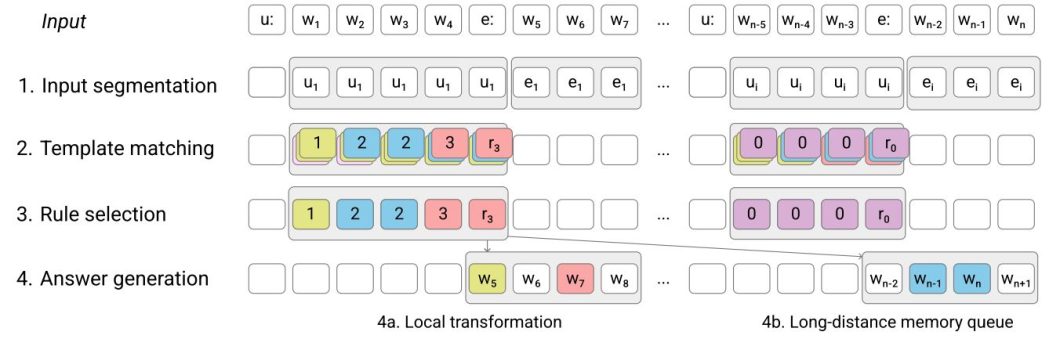

In recent years we have seen impressive development in the capabilities of Artificial Intelligence (AI). Chatbots are an AI concept that has existed for a long time. Early methods used rule-based pattern-recognition systems to simulate human conversation. Rule-based systems are premade scripts developed by a programmer, where the chatbot picks the most suitable answer from the script using if-else statements. To take this a step further, researchers also used pattern recognition to let the program insert words from the user’s message into a premade sentence template. However, this approach has many limitations, and as AI research deepened, chatbots evolved to use generative models like LLMs.

What are Large Language Models (LLMs)?

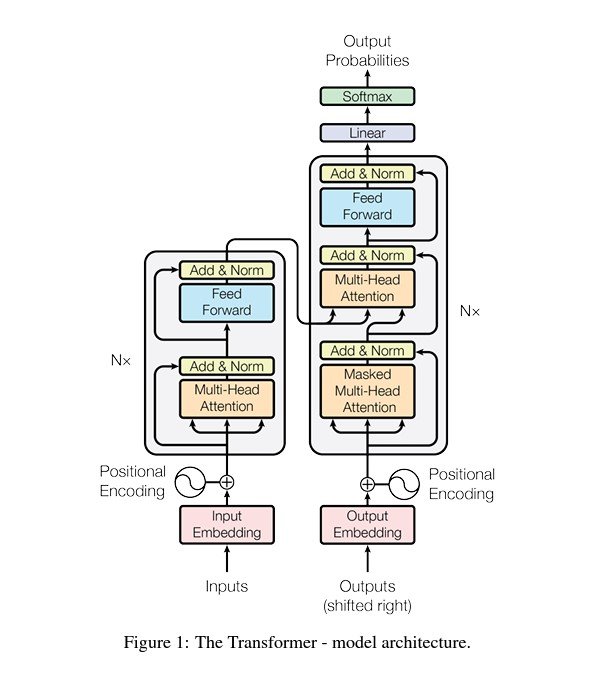

Large Language Models (LLMs) are a popular type of generative AI model that uses Natural Language Processing (NLP) to understand and produce human-like text. Language understanding and generation is a long-standing research topic. There were two major developments in this field. The first was predictive modeling, which relies on statistical models like Artificial Neural Networks (ANNs). The second was the Transformer architecture, which enabled popular LLMs like the GPT models behind ChatGPT and, in turn, better chatbots.

The Transformer architecture allows us to efficiently pre-train very large language models on large amounts of data using Graphics Processing Units (GPUs). This development makes chatbots and LLM systems capable of taking action, as with GPT-4 powering Microsoft’s Copilot systems. Those systems can perform multi-step reasoning, making decisions and taking actions. LLMs are thus becoming the basic building block for Large Action Models (LAMs). Building systems around those LLMs gives developers the ability to create all kinds of AI-powered chatbots. Let’s explore the different types of chatbots and how they work.

Types of Chatbots

While LLMs have expanded the capabilities of chatbots, not all chatbots are created equal. Developing a chatbot may mean fine-tuning an LLM to your own needs. Thus, there are multiple types of chatbots.

Rule-based Chatbots

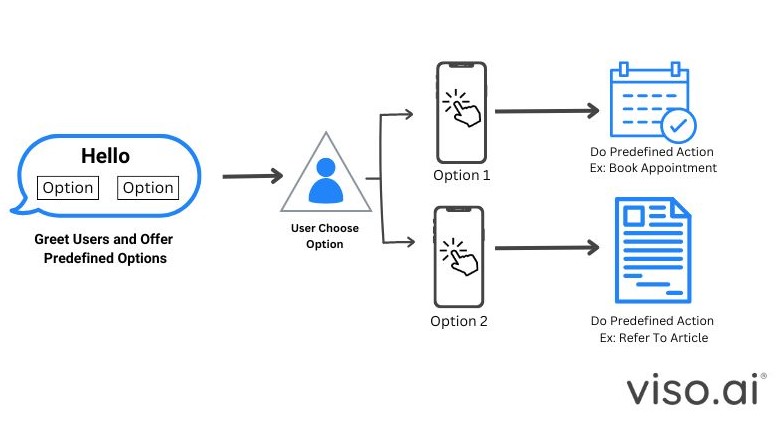

With rule-based chatbots, we have a predefined template of answers and a prompt template structured as a tree. The user message is matched to the right intent using if-else rules. The intent is then matched to the response. This usually requires a complicated rule-based system and regular expressions to match the user statements to intents.
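As a rough illustration of the intent matching described above, here is a minimal sketch in plain Python. The intents, patterns, and canned responses are hypothetical and invented for this example; a production rule set would be much larger.

```python
import re

# Hypothetical intents and patterns, invented for illustration only.
INTENT_PATTERNS = [
    ("greeting", re.compile(r"\b(hi|hello|hey)\b", re.IGNORECASE)),
    ("outage", re.compile(r"\b(down|offline|not loading)\b", re.IGNORECASE)),
    ("billing", re.compile(r"\b(invoice|refund|billing)\b", re.IGNORECASE)),
]

# Each intent maps to one predefined answer.
RESPONSES = {
    "greeting": "Hello! How can we help?",
    "outage": "Sorry about that. I will raise a ticket with support.",
    "billing": "I can help with billing. What is your invoice number?",
    "fallback": "Sorry, I did not understand. Could you rephrase?",
}


def match_intent(message: str) -> str:
    """Return the first intent whose pattern matches, else 'fallback'."""
    for intent, pattern in INTENT_PATTERNS:
        if pattern.search(message):
            return intent
    return "fallback"


def rule_based_reply(message: str) -> str:
    """Map the user message to an intent, then the intent to a response."""
    return RESPONSES[match_intent(message)]
```

Because the rules are checked in order, a message that matches several patterns gets the first matching intent, which is one reason real rule sets become complicated quickly.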

Rule-based and AI Chatbots

A more sophisticated design combines a rule-based system with a Transformer-based LLM, pairing the scripted pattern matching pioneered by early chatbots like ELIZA with a generative model.

When someone sends a message, the system does a few things. First, it checks if the message matches any predefined rules. At the same time, it takes the entire conversation history as context and generates a response that’s relevant and coherent with the overall conversation flow. This blend of rule-based efficiency and LLM flexibility allows for a more natural and dynamic conversational experience.
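One possible way to arrange this blend is to try the rules first and fall back to a generative model that sees the conversation history. The sketch below assumes that ordering; `RULES`, `generate_stub`, and `hybrid_reply` are hypothetical names, and the stub only stands in for a real LLM call.

```python
import re
from typing import Callable, List

# Hypothetical rule table, invented for illustration only.
RULES = [
    (
        re.compile(r"\b(opening hours|open)\b", re.IGNORECASE),
        "We are open 9am to 5pm, Monday to Friday.",
    ),
]


def generate_stub(history: List[str]) -> str:
    """Stand-in for an LLM call; a real system would send the history to a model."""
    return f"(model reply based on {len(history)} messages of context)"


def hybrid_reply(
    message: str,
    history: List[str],
    generate: Callable[[List[str]], str] = generate_stub,
) -> str:
    """Answer from a predefined rule if one matches, otherwise ask the generator."""
    history.append(f"USER: {message}")
    reply = None
    for pattern, answer in RULES:
        if pattern.search(message):
            reply = answer
            break
    if reply is None:
        # No rule matched, so the generator gets the whole conversation so far.
        reply = generate(history)
    history.append(f"ASSISTANT: {reply}")
    return reply
```

Keeping the history as a plain list makes it easy to swap the stub for a real model later without changing the rule logic.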

Alternative Categorization

- Goal-based: Based on goals to accomplish through a quick conversation with the customer. This conversation allows the bot to accomplish set goals. For example, answer questions, raise tickets, or solve problems.

- Knowledge-based: The chatbot will have a source of information to access and talk about. They are either already trained on this data or have open-domain data sources to rely on and answer from.

- Service-based: It would provide a certain service to a company or a client. Those chatbots are usually rule-based and provide a specific service rather than a conversation. For example, a client could order a meal from a restaurant.

Building a Chatbot with LangChain

Conversation and dialogue are a central focus of Natural Language Processing (NLP), and a key part of the broader aim of enhancing human-computer interaction. Conversational systems have gained increasing interest over the years, so development has evolved rapidly toward making them easier to build, integrate, and use. This means easier ways to build LLM-based chatbots and apps.

LangChain is a leading language model integration framework that allows us to create chatbots, virtual assistants, LLM-powered applications, and more. Let’s first get into some important LangChain concepts and components.

LangChain Explained

LangChain is an open-source Python library comprising tools and abstractions that simplify building LLM applications. This framework enables developers to create more interactive and complex chatbots by chaining together different components. We can incorporate memory, and employ agents and rule-based systems around chatbots. There are a few main components to understand in LangChain that will help us build chatbots efficiently.

- Chains: This is the way all the components are connected in LangChain. A chain is a sequence of components executed in order, for example a prompt template, a language model, and an output parser.

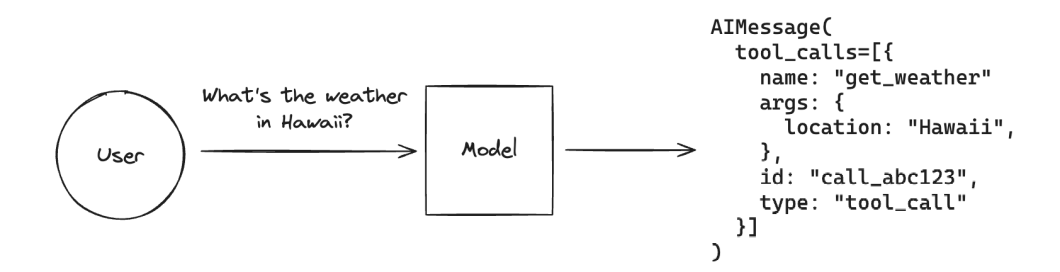

- Agents: This is how chatbots can make decisions and take actions based on the context of the conversation. The LLM determines the best course of action and executes tools or API calls accordingly. An agent can even run inference on other models and so act as a multimodal AI.

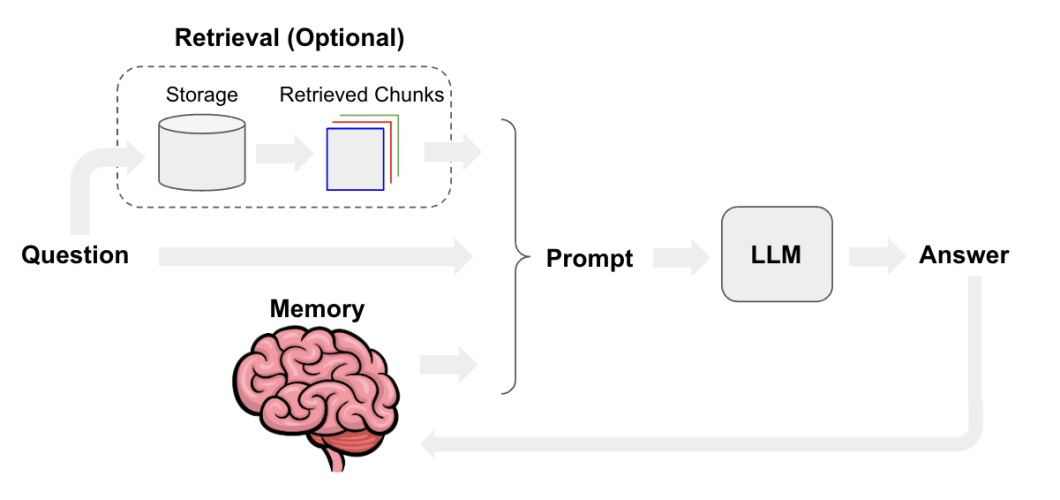

- Memory: Allows chatbots to retain information from previous interactions and user inputs, providing context and continuity to the conversation.

The combination of chains, agents, and memory makes LangChain a great framework for chatbot development. Chains provide reusable components, agents enable decision-making and the use of external tools, and memory brings context and personalization to a conversation. Next, let’s explore developing chatbots with LangChain.

Creating Your First LangChain Chatbot

LangChain’s components can facilitate the development of chatbots. Chatbots commonly use retrieval-augmented generation (RAG) to better answer domain-specific questions. For example, you can connect your inventory database or website as a source of information to the chatbot. More advanced techniques like chat history and memory can be implemented with LangChain as well. Let’s get started and see how to create a real-time chatbot application.

For this guide, we will create a basic chatbot with Llama 2 as the LLM. Llama is an open-source LLM by Meta that performs well, and we can download versions of it that run locally on modern CPUs/GPUs.

Step 1: Choosing the LLM

The first thing we need to do is to download an open-source LLM like Llama from a Hugging Face repository. These small models can be run locally on modern CPUs/GPUs, and the “.gguf” format makes the model easy to load. You could alternatively download the “.bin” model from the official Llama website or use a Hugging Face-hosted open-source LLM. For this guide, we will download the GGUF file and run it locally.

- Go to the Llama 2 7B Chat – GGUF repository

- Go to files and versions

- Download the GGUF file you want from the list, and make sure to read through the repository to understand the different variations offered.

Step 2: Installation and Setup

Once the model is downloaded, place it in the folder where we will build our Python LangChain code around it. Next, create a Python file in the same folder so we can install and import the needed libraries. Open your favorite code editor (we will be using Visual Studio Code). First, run this command in your terminal to install the needed packages.

pip install langchain llama-cpp-python langchain_community langchain_core

Next, we want to import the libraries we need as follows.

from langchain_community.llms import LlamaCpp
from langchain_core.prompts import PromptTemplate
from langchain_core.output_parsers import StrOutputParser

Note that the first import can be changed to “from langchain_community.llms import HuggingFaceHub” if you are using a model hosted on Hugging Face.

Now we are ready to start loading and preparing our components. First, let us load the LLM.

llm = LlamaCpp(
    model_path="/Local LLM/dolphin-llama2-7b.Q4_K_M.gguf",
    n_gpu_layers=40,  # layers offloaded to the GPU; lower values shift work to the CPU
    n_batch=512,      # number of tokens processed per batch
    max_tokens=100,   # upper limit on the number of generated tokens
    verbose=False,    # set to True for detailed logging when debugging
)

Here we load the model and give it some essential parameters. The number of GPU layers determines how many of the model’s layers are offloaded to the GPU; the lower the number, the more work falls on the CPU. The batch size determines how many tokens the model processes at one time. Max tokens caps the number of tokens the model generates, and therefore how long the output can be. Lastly, verbose determines whether we get detailed debugging information.
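A wrong path is an easy mistake at this step, so it can help to check the file before handing it to the loader. The helper below is a hypothetical convenience function written for this guide, not part of LangChain or llama-cpp-python; it only inspects the path.

```python
from pathlib import Path
from typing import List


def check_model_path(model_path: str) -> List[str]:
    """Return human-readable problems; an empty list means the path looks usable."""
    path = Path(model_path)
    problems = []
    if path.suffix.lower() != ".gguf":
        problems.append(f"expected a .gguf file, got '{path.suffix}'")
    if not path.is_file():
        problems.append(f"file not found: {path}")
    return problems
```

Calling `check_model_path(...)` with the same string you pass as `model_path` and printing any returned problems gives a clearer message than a failure deep inside the model loader.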

Step 3: Prompt Template and Chain

We will use two more components, a prompt template and a chain. The prompt template is the input we pass to the model, and it is also where techniques like RAG and memory plug in. In our case we will keep it simple: the prompt template gives the chatbot some context, passes the question to it, and tells it to answer. The chain then connects the model with the prompt template.

template = """SYSTEM:{system_message}

USER: {question}

ASSISTANT:

"""

prompt = PromptTemplate(template=template, input_variables=["question", "system_message"])

system_message = """You are a customer service representative. You work for a web hosting company. Your job is to understand the user query and respond.

If it's a technical problem, respond with something like I will raise a ticket to support. Make sure you only respond to the given question."""

Here we create our prompt template. This tells the model how to act, what to expect, and how to format its output. The way the template is structured depends on the model we are using. For example, here we are using the Dolphin Llama 2 7B model, whose template uses three roles: SYSTEM, USER, and ASSISTANT. The system prompt tells the chatbot what to do, the user prompt is what the user asks or says, and the assistant slot is where the chatbot puts its answer. We then wrap this in a LangChain prompt template using the “PromptTemplate” class. We give it two parameters: the template we defined and the variables to use.
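To see what the model actually receives, it helps to fill in a template by hand. The sketch below uses plain `str.format` on a compact single-string version of the template, which for simple placeholders like these should produce the same text a prompt template would; the example message and question are made up.

```python
# A compact version of the SYSTEM / USER / ASSISTANT template, for illustration.
template = "SYSTEM: {system_message}\nUSER: {question}\nASSISTANT:"


def fill_prompt(system_message: str, question: str) -> str:
    """Substitute both variables into the template and return the final prompt text."""
    return template.format(system_message=system_message, question=question)


filled = fill_prompt(
    "You are a customer service representative.",
    "My website is down",
)
print(filled)
```

The prompt ends with the empty ASSISTANT slot, so the model continues the text from there with its answer.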

Now, let’s connect everything through a chain.

output_parser = StrOutputParser()
chain = prompt | llm | output_parser

Here we did two things. We first defined an output parser, which returns the model output as a plain string. Then we defined a variable to hold the chain, whose components are connected with the “|” character; this operator works with any LangChain runnable component. Next, let’s run this chatbot and test it.
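If the “|” syntax looks unfamiliar, the toy class below shows how Python operator overloading can express this kind of pipeline. It is a simplified illustration of the idea, not LangChain's actual implementation, and `Step` and the three fake components are hypothetical stand-ins.

```python
class Step:
    """Wraps a function so steps can be composed with the | operator."""

    def __init__(self, func):
        self.func = func

    def __or__(self, other):
        # Build a new step that runs this step first, then feeds the result to `other`.
        return Step(lambda value: other.invoke(self.invoke(value)))

    def invoke(self, value):
        return self.func(value)


# Hypothetical stand-ins for the prompt template, the model, and the output parser.
fake_prompt = Step(lambda variables: f"USER: {variables['question']}\nASSISTANT:")
fake_llm = Step(lambda text: {"text": f"  echo of [{text}]  "})
fake_parser = Step(lambda output: output["text"].strip())

toy_chain = fake_prompt | fake_llm | fake_parser
result = toy_chain.invoke({"question": "Hi"})
```

Each step only needs to accept the previous step's output, which is what makes the components easy to swap and reuse.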

Step 4: Inference and Test

We can now start using the chain we just made. To do that, we will use a simple while loop that runs the chain on every input we give.

print("Hi, how can we help?")

while True:
    question = input("> ")
    answer = chain.invoke({"question": question, "system_message": system_message})
    print(answer, '\n')

This while loop will do the following.

- Get input from the user.

- Pass the input to the chain we made and run the chain using the invoke function. The invoke function takes a dictionary that maps each template variable name to its value.

- Print the answer to the user.
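The loop in this guide runs until the program is interrupted. One possible variant, sketched below, adds an exit command and skips empty input. The `chat_loop` function and its `respond`, `read`, and `write` parameters are hypothetical names chosen for this example; passing the input and output functions in makes the loop easy to test without a model.

```python
def chat_loop(respond, read=input, write=print):
    """Run a chat loop until the user types 'quit' or 'exit', or input ends."""
    write("Hi, how can we help?")
    while True:
        try:
            question = read("> ").strip()
        except (EOFError, KeyboardInterrupt):
            break
        if question.lower() in {"quit", "exit"}:
            break
        if not question:
            continue  # skip empty lines instead of calling the model
        write(respond(question))
    write("Goodbye!")
```

With the chain from this guide, `respond` could be a small function that calls `chain.invoke` with the question and the system message and returns the answer.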

We also added a simple print statement saying “Hi, how can we help?” before the loop as a welcome note to the user. Now let’s see some example input/output.

Hi, how can we help?

> Hi My website has been down all day

I am sorry to hear that your website has been experiencing issues. I understand that this is causing some inconvenience. To better assist you and resolve this problem, I will raise a ticket with our support team to look into the issue and work on getting your website back up and running as soon as possible. Please provide more information about the error or issue you are encountering, such as any error messages or steps that you have already taken, and I will make sure to include this in the

We see that the chatbot responds well to the query and follows the system instructions in the template. However, the output is cut off mid-sentence because of the maximum token limit we set. Further adjustments can be made to how the output is displayed, and we can use things like summarization, memory, and RAG to enhance the chatbot’s functionality.

> Thank you, I need this resolved asap please

I understand your concern and will make sure to prioritize your request. Please provide more details about the issue you are experiencing, so that I can better assist you in resolving it as soon as possible.
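As one example of adjusting how the output is displayed, a reply that hits the token limit can be trimmed back to its last complete sentence before printing. This is a minimal sketch with a hypothetical helper name; it treats ".", "!", and "?" followed by whitespace or the end of the text as sentence endings, which is only an approximation.

```python
import re


def trim_to_last_sentence(text: str) -> str:
    """Drop a trailing partial sentence; return the text unchanged if no sentence end is found."""
    matches = list(re.finditer(r"[.!?](?=\s|$)", text))
    if not matches:
        return text.strip()
    # Keep everything up to and including the last sentence-ending punctuation mark.
    return text[: matches[-1].end()].strip()
```

Raising `max_tokens` is the more direct fix, but trimming keeps the reply tidy when a hard limit is still wanted.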

The bot can continue the chat as long as the loop is running, but it will not have access to previous messages because we don’t have a memory component. Memory is a more advanced topic; if you’re interested, the LangChain tutorials are a good place to learn how to implement it.
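To give a feel for what a memory component does, the sketch below keeps the last few turns and places them in the prompt ahead of the new question. It is a plain-Python illustration under simplifying assumptions, not LangChain's memory API; `ChatHistory` and `build_prompt` are hypothetical names.

```python
from collections import deque


class ChatHistory:
    """Keeps the most recent turns and renders them as prompt text."""

    def __init__(self, max_turns: int = 3):
        # A bounded deque drops the oldest turn automatically when full.
        self.turns = deque(maxlen=max_turns)

    def add(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))

    def render(self) -> str:
        return "\n".join(f"USER: {q}\nASSISTANT: {a}" for q, a in self.turns)


def build_prompt(system_message: str, history: ChatHistory, question: str) -> str:
    """Assemble the system message, earlier turns, and the new question into one prompt."""
    parts = [f"SYSTEM: {system_message}"]
    if history.turns:
        parts.append(history.render())
    parts.append(f"USER: {question}\nASSISTANT:")
    return "\n".join(parts)
```

Limiting the number of stored turns keeps the prompt within the model's context window, at the cost of forgetting older messages.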

Chatbots: The Road Ahead

We have seen substantial development in the field of LLMs and chatbots in recent years. Every day, it feels like developers and researchers are pushing the boundaries, opening up new possibilities for us to use and interact with Artificial Intelligence. We have seen that building chatbot-based apps and websites has become easier than ever, which can enhance the user experience for businesses. However, we can still feel the difference between talking with a chatbot and a human. Researchers are therefore constantly working to make user interaction smoother by making these AI models feel as natural as possible.

Going forward, we can expect to find these AI chatbots everywhere, whether in customer support, restaurants, online businesses, real estate, banking, or almost any other field. In addition, these AI chatbots pave the way for better speaking agents: when researchers combine these models with advanced speech algorithms, we get smoother and more natural conversations.

While the future of chatbots seems limitless, there’s a serious side to all this progress: ethics. We should acknowledge issues like privacy breaches, the spread of misinformation, and AI biases that might sneak into conversations. Raising awareness among developers and users can help these AI chatbots play fair and keep things honest.

Further Reads for Chatbots

If you want to read more about the concepts related to AI models, we recommend the following articles:

- Microsoft’s Florence-2: The Ultimate Unified Model

- Generative AI: A Guide To Generative Models

- Scalable Pre-Training of Large Autoregressive Image Models

- Foundation Models in Modern AI Development

FAQs

Q1. What is LangChain?

A. A framework for building LLM-powered applications, like chatbots, by chaining together different components.

Q2. How do LLMs enhance chatbots?

A. LLMs enable chatbots to understand and generate human-like text, leading to more natural and engaging conversations.

Q3. What are the different types of chatbots?

A. The different types of chatbots are:

- Rule-based (predefined rules)

- AI-powered (using LLMs)

- Task-oriented (specific tasks)

- Conversational (open-ended)

Q4. Why use LangChain to develop chatbots?

A. LangChain offers a modular approach that empowers developers to build more sophisticated chatbot systems by connecting various components and using their preferred LLM. This flexibility is crucial for creating highly customized and interactive chatbot experiences. Additionally, LangChain offers other features that would otherwise be hard to build, like memory, agents, and retrieval.