Geoffrey Hinton et al. introduced deep belief networks (DBNs) in 2006. These deep learning models use layers of latent variables to learn the underlying patterns in data. The top two layers are joined by undirected connections, while each lower layer receives directed, top-down connections from the layer above. This hybrid structure makes DBNs generative models that can also be fine-tuned for discriminative tasks.

DBNs work similarly to traditional multi-layer perceptrons (MLPs) and offer certain benefits over them, including faster training and better weight initialization.

In this article, we will discuss:

- The working principle behind Deep Belief Networks

- Restricted Boltzmann Machines (RBMs)

- Deep Belief Network Architecture

- Training a Deep Belief Network

- Key Benefits and Applications of DBNs

Idea behind Deep Belief Networks

Deep belief networks consist of several layers of neurons, each connected to the neurons of the next layer. The overall architecture resembles an MLP: connections exist only between adjacent layers, and units within the same layer are not connected to each other.

Each layer can be considered a separate model because it is trained independently on the output of the layer below. In this way, a DBN is a stack of networks, each of which learns different, progressively more abstract features from the raw data.

This layer-wise architecture allows DBNs to tackle both supervised and unsupervised problems. Moreover, their ability to model complex underlying data patterns makes them well suited to generative applications. Let’s look at the architecture in detail.

Deep Belief Network (DBN) structure

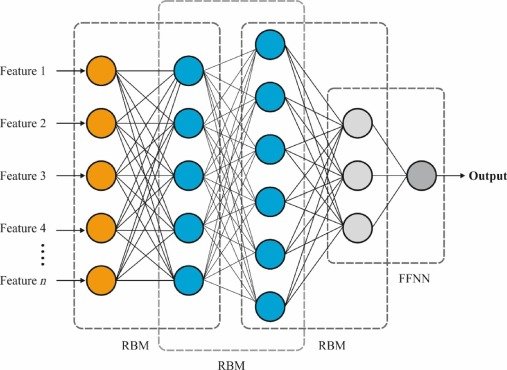

A deep belief network is a stack of Restricted Boltzmann Machines (RBMs), which serve as the building blocks of the architecture. Each RBM consists of a visible layer and a hidden layer. The visible layer receives the input (the raw data or the hidden activations of the previous RBM), while the hidden layer learns a representation of that input. To understand DBNs, we first need to understand the RBM.

Restricted Boltzmann Machines

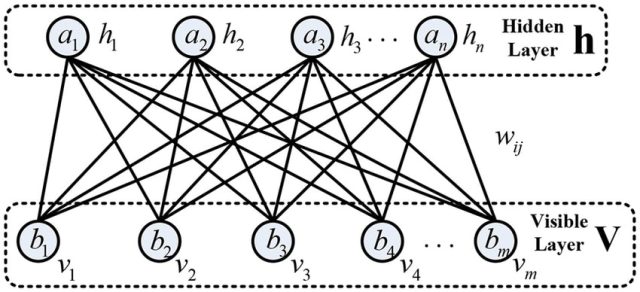

A Restricted Boltzmann Machine is a two-layer probabilistic neural network. The concept was first introduced in 1986 as the Harmonium model, but RBMs did not become widely known until the mid-2000s. The first layer (the visible layer) interacts with the raw data, and the second (the hidden layer) learns higher-level features from it.

They are called ‘restricted’ because connections exist only between the visible and hidden layers; units within the same layer are not connected. RBMs have performed well at learning data representations and gained popularity in tasks such as collaborative filtering.
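
Because the only connections run between the two layers, the activation probability of each hidden unit depends only on the visible units, and vice versa. The following minimal NumPy sketch computes these conditional probabilities for binary units, assuming the common logistic (sigmoid) formulation. The weight matrix W, the bias vectors a and b, the function names, and the numeric values are illustrative assumptions, not taken from the article:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def hidden_probs(v, W, b):
    """p(h_j = 1 | v) for every hidden unit j; v has shape (batch, n_visible)."""
    # No hidden-hidden connections, so each hidden unit depends only on v.
    return sigmoid(v @ W + b)

def visible_probs(h, W, a):
    """p(v_i = 1 | h) for every visible unit i; h has shape (batch, n_hidden)."""
    # No visible-visible connections, so each visible unit depends only on h.
    return sigmoid(h @ W.T + a)

# Tiny illustrative RBM: 3 visible units, 2 hidden units.
W = np.array([[ 1.0, -1.0],
              [ 0.5,  0.0],
              [-0.5,  1.0]])
a = np.zeros(3)  # visible biases
b = np.zeros(2)  # hidden biases

v = np.array([[1.0, 0.0, 0.0]])
print(hidden_probs(v, W, b))  # approximately [[0.731, 0.269]]
```

Since there are no intra-layer connections, an entire layer is computed with a single matrix product, which keeps sampling in an RBM cheap.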

Structure of DBNs

A Deep Belief Network extends the RBM by stacking several of them so that they overlap: the hidden layer of one RBM serves as the visible layer of the next. These RBMs are trained one at a time, and together they form the deep belief network.
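
The overlap can be expressed as a simple data structure. In this hypothetical sketch, a list of layer sizes defines the whole stack, and consecutive RBMs share a layer. The sizes 784-500-500-2000 are only illustrative, and `RBMShape` and `dbn_layout` are made-up names:

```python
from dataclasses import dataclass

@dataclass
class RBMShape:
    n_visible: int
    n_hidden: int

def dbn_layout(layer_sizes):
    """Turn per-layer unit counts into the RBMs of the stack.
    Consecutive RBMs share a layer: RBM k's hidden layer is RBM k+1's visible layer."""
    return [RBMShape(v, h) for v, h in zip(layer_sizes[:-1], layer_sizes[1:])]

for rbm in dbn_layout([784, 500, 500, 2000]):
    print(rbm)
```

Four layers of units yield three RBMs, because every interior layer is counted once as a hidden layer and once as a visible layer.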

Each RBM within the DBN is an energy-based model: it assigns a scalar energy to every joint configuration of its visible and hidden units. The energy is computed from a bias term for each unit plus a weighted pairwise interaction between every visible unit and every hidden unit.
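
For binary units, this energy is commonly written as follows, where v and h are the visible and hidden vectors, a_i and b_j their biases, and W_ij the weight between visible unit i and hidden unit j. This notation is an assumption, since the article gives no formula. The second expression shows how energy maps to probability through the normalizing constant (partition function) Z:

```latex
E(\mathbf{v}, \mathbf{h}) = -\sum_i a_i v_i \;-\; \sum_j b_j h_j \;-\; \sum_{i,j} v_i W_{ij} h_j,
\qquad
P(\mathbf{v}, \mathbf{h}) = \frac{e^{-E(\mathbf{v}, \mathbf{h})}}{Z},
\qquad
Z = \sum_{\mathbf{v}', \mathbf{h}'} e^{-E(\mathbf{v}', \mathbf{h}')}
```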

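A lower energy means a higher probability for that configuration of visible and hidden units. Training adjusts the weights and biases so that configurations resembling the training data receive low energy, and therefore high probability, which lets the RBM build a plausible model of the original data.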
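
To make the link between energy and probability concrete, here is a brute-force sketch for a tiny binary RBM with two visible and two hidden units and made-up parameters. It enumerates all 16 joint configurations, computes each energy, and normalizes exp(-E) into probabilities. Exact enumeration like this works only for toy models; in realistic RBMs the partition function is intractable, which is why approximate training methods are used:

```python
import itertools
import numpy as np

def energy(v, h, W, a, b):
    """E(v, h) = -a.v - b.h - v.W.h for binary vectors v and h."""
    return -(a @ v) - (b @ h) - (v @ W @ h)

def joint_distribution(W, a, b):
    """Enumerate every (v, h) of a tiny RBM; return configs, energies, probabilities.
    Feasible only for a few units: there are 2**(n_visible + n_hidden) configurations."""
    n_v, n_h = W.shape
    configs, energies = [], []
    for v in itertools.product([0.0, 1.0], repeat=n_v):
        for h in itertools.product([0.0, 1.0], repeat=n_h):
            configs.append((v, h))
            energies.append(energy(np.array(v), np.array(h), W, a, b))
    energies = np.array(energies)
    unnormalized = np.exp(-energies)
    probs = unnormalized / unnormalized.sum()  # dividing by Z
    return configs, energies, probs

# Illustrative parameters, not taken from any trained model.
W = np.array([[2.0, -1.0],
              [0.0,  1.0]])
a = np.array([0.0, 0.0])
b = np.array([-0.5, 0.0])

configs, energies, probs = joint_distribution(W, a, b)
best = int(np.argmax(probs))
print("most probable (v, h):", configs[best], "energy:", energies[best])
```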

DBN training

Training a deep belief network consists of an unsupervised pre-training phase followed by task-specific, supervised fine-tuning, so the overall approach is a hybrid of the two learning paradigms.

Pre-training

The pre-training phase initializes the DBN so that its weights already capture the structure of the input data. This phase uses unsupervised learning and trains each RBM module independently as a feature detector.

The stack’s first RBM, whose visible layer is often called the input or bottom layer, interacts directly with the raw data, learning its features and producing a latent representation in the process. Each subsequent RBM is then trained on the hidden activations of the RBM below it, so one layer’s output becomes the next layer’s input. This greedy layer-wise procedure makes feature learning efficient.
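
The greedy procedure can be sketched in NumPy as follows. This is a minimal illustration that assumes binary RBMs, simple full-batch CD-1 updates, and the common choice of passing hidden probabilities, not binary samples, up to the next RBM. `train_rbm`, `pretrain_dbn`, the random toy data, and the layer sizes are all hypothetical:

```python
import numpy as np

rng = np.random.default_rng(42)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_rbm(data, n_hidden, epochs=50, lr=0.1):
    """Train one binary RBM with full-batch CD-1 (kept deliberately simple).
    Returns only W and the hidden biases b, which the upward pass needs."""
    n_visible = data.shape[1]
    W = 0.01 * rng.standard_normal((n_visible, n_hidden))
    a, b = np.zeros(n_visible), np.zeros(n_hidden)
    for _ in range(epochs):
        ph0 = sigmoid(data @ W + b)                       # positive phase
        h0 = (rng.random(ph0.shape) < ph0).astype(float)
        pv1 = sigmoid(h0 @ W.T + a)                       # reconstruction
        ph1 = sigmoid(pv1 @ W + b)                        # negative phase
        W += lr * (data.T @ ph0 - pv1.T @ ph1) / len(data)
        a += lr * (data - pv1).mean(axis=0)
        b += lr * (ph0 - ph1).mean(axis=0)
    return W, b

def pretrain_dbn(data, layer_sizes):
    """Greedy layer-wise pre-training: each RBM is trained on the hidden
    activations of the already-trained (and now frozen) RBM below it."""
    layers, x = [], data
    for n_hidden in layer_sizes:
        W, b = train_rbm(x, n_hidden)
        layers.append((W, b))
        x = sigmoid(x @ W + b)  # hidden probabilities become the next input
    return layers

data = (rng.random((200, 16)) < 0.3).astype(float)  # random binary toy data
dbn = pretrain_dbn(data, [12, 8, 4])
for i, (W, b) in enumerate(dbn):
    print(f"RBM {i}: weights {W.shape}")
```

Each RBM is trained once and never revisited during pre-training, which is what makes the procedure greedy.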

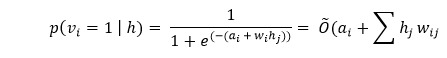

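Each RBM is trained with the Contrastive Divergence (CD) algorithm, which alternates a positive phase and a negative phase. In the positive phase, the visible units are set to a training example and the activation probabilities of the hidden units are computed from them. In the negative phase, sampled hidden states are used to reconstruct the visible layer, and the hidden probabilities are computed again from that reconstruction. The weights are then updated in proportion to the difference between the data-driven and the reconstruction-driven correlations.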
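
A CD-1 update for a binary RBM might look like this in NumPy. It is a sketch under stated assumptions: a single Gibbs step (k = 1), mini-batches of 20 rows, a fixed learning rate, and hidden probabilities rather than samples in the weight statistics, a common practical choice. `cd1_update` and the toy data are illustrative:

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def cd1_update(v0, W, a, b, lr=0.1):
    """One CD-1 step on a mini-batch v0 of shape (batch, n_visible).
    Updates W, a, b in place and returns the mean squared reconstruction error."""
    # Positive phase: hidden probabilities driven by the data, then a binary sample.
    ph0 = sigmoid(v0 @ W + b)
    h0 = (rng.random(ph0.shape) < ph0).astype(float)
    # Negative phase: reconstruct the visible layer from the sampled hidden units,
    # then recompute the hidden probabilities from that reconstruction.
    pv1 = sigmoid(h0 @ W.T + a)
    ph1 = sigmoid(pv1 @ W + b)
    # Approximate gradient: data correlations minus reconstruction correlations.
    n = v0.shape[0]
    W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
    a += lr * (v0 - pv1).mean(axis=0)
    b += lr * (ph0 - ph1).mean(axis=0)
    return float(((v0 - pv1) ** 2).mean())

# Toy binary data: two repeated patterns over 6 visible units.
data = np.array([[1, 1, 1, 0, 0, 0],
                 [0, 0, 0, 1, 1, 1]] * 50, dtype=float)
W = 0.01 * rng.standard_normal((6, 2))
a = np.zeros(6)
b = np.zeros(2)

errors = []
for epoch in range(200):                    # many passes over the data
    rng.shuffle(data)                       # shuffle rows each pass
    for start in range(0, len(data), 20):   # mini-batches of 20 rows
        err = cd1_update(data[start:start + 20], W, a, b)
    errors.append(err)
print("reconstruction error, first vs last epoch:", errors[0], errors[-1])
```

Reconstruction error is tracked here only as a convenient sanity check; it is not the quantity that contrastive divergence actually optimizes.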

We repeat this process many times over the training data, updating the weights after each pass. Once all RBMs have been trained, the top layer holds the network’s most abstract representation of the input, which an output layer can then use to make predictions.

Fine-tuning

Once pre-training has initialized the model weights, the network is fine-tuned for downstream tasks using labeled data and a supervised learning algorithm such as backpropagation. This way, the model can be adapted to tasks such as classification or regression, and the pre-trained initialization often leads to faster training and better results.
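
Fine-tuning can be sketched as ordinary backpropagation through a pre-trained layer plus a newly added output layer. In this minimal NumPy example, the "pre-trained" weights `W1` and `b1` are random placeholders standing in for the result of RBM pre-training. The binary task on toy data, the layer sizes, and the learning rate are assumptions chosen for illustration:

```python
import numpy as np

rng = np.random.default_rng(1)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Placeholder for pre-trained parameters: in practice these would come from
# greedy RBM pre-training; random values keep this sketch self-contained.
W1 = 0.1 * rng.standard_normal((8, 6))
b1 = np.zeros(6)

# New output layer added on top for a binary classification task.
W2 = 0.1 * rng.standard_normal((6, 1))
b2 = np.zeros(1)

# Toy labeled data: label is 1 when the first half of the input has more ones.
X = (rng.random((300, 8)) < 0.5).astype(float)
y = (X[:, :4].sum(axis=1) > X[:, 4:].sum(axis=1)).astype(float).reshape(-1, 1)

def forward(X):
    h = sigmoid(X @ W1 + b1)
    p = sigmoid(h @ W2 + b2)
    return h, p

def bce(p, y):
    eps = 1e-12  # avoids log(0)
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))

lr = 0.5
losses = []
for step in range(500):
    h, p = forward(X)
    losses.append(bce(p, y))
    # Backpropagation: sigmoid output + cross-entropy gives this output error.
    d_out = (p - y) / len(X)
    d_hidden = (d_out @ W2.T) * h * (1 - h)
    # All layers are updated, including the pre-trained one: that is fine-tuning.
    W2 -= lr * h.T @ d_out
    b2 -= lr * d_out.sum(axis=0)
    W1 -= lr * X.T @ d_hidden
    b1 -= lr * d_hidden.sum(axis=0)

print("loss before/after fine-tuning:", losses[0], losses[-1])
```

Swapping the random placeholders for weights produced by actual RBM pre-training is the only change needed to turn this into the DBN workflow described above.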

Benefits of DBNs

Deep belief networks combine probabilistic modeling with unsupervised pre-training, which can offer benefits over conventional neural networks trained from random initialization. These include:

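- Ability to handle large datasets, using hidden units to extract underlying correlations.

- Faster training and, often, better results.

- Better starting points for gradient-based optimization thanks to improved weight initialization, which can help avoid poor local minima (although reaching a global minimum is not guaranteed).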

DBN applications

Deep Belief Networks work similarly to deep neural networks and can handle large datasets and various data types. Therefore, they are great for tasks like:

- Image classification

- Text classification

- Image generation

- Speech recognition

Present state of Deep Belief Networks

Deep belief networks did not gain lasting popularity despite their hybrid generative and discriminative capabilities. Much of this was because their probabilistic models needed large amounts of data to learn the underlying patterns properly, and their sampling-based training was slower than the plain gradient-descent training of conventional networks.

Meanwhile, conventional deep networks such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have produced ground-breaking results. These architectures have become more efficient over time and form the foundation of most modern computer vision (CV) and natural language processing (NLP) applications.

Deep belief networks played a vital role in the growth and evolution of deep learning, but are not so common today.

Deep Belief Networks: key takeaways

DBNs have been a crucial part of the deep learning ecosystem. Here’s what you need to know about them:

- Deep Belief Networks are constructed by stacking multiple Restricted Boltzmann Machines.

- We train each RBM in the stack independently with greedy learning.

- Training DBNs consists of an unsupervised pre-training phase followed by supervised fine-tuning.

- DBNs learn latent data representations and can generate new data samples.

- DBNs are also employed in classification, motion capture, speech recognition, etc.

- The DBN architecture is rarely used today and has largely been replaced by other deep learning architectures such as CNNs and RNNs.
