Augmented reality (AR) and virtual reality (VR) are transforming how we interact with the world around us. Behind the engaging, immersive narratives and interactive experiences, the magic comes from the intricate coordination of cutting-edge technologies.

Computer vision is a main driver, quietly but forcefully directing the smooth transition between the virtual and real worlds. In this article, we walk you through the details of computer vision in mixed reality:

- Basics of AR/VR and essential techniques

- Challenges you should know

- Important real-world applications

- The best open-source projects

- Top AI vision trends for AR and VR

Basics of Computer Vision in AR and VR

Computer vision is the subfield of artificial intelligence (AI) focused on understanding, analyzing, and automatically extracting information from digital images and videos. Augmented reality (AR) and virtual reality (VR) are profoundly changing how we interact with our environment, and both of these immersive technologies rely heavily on computer vision.

Computer vision (CV) is a fundamental building block that can transform industries and enhance everyday experiences. In AR and VR, it creates seamless, immersive experiences by bridging the gap between the digital and physical worlds.

In Augmented Reality (AR), computer vision is used for:

- Object detection is used to locate and recognize objects in visual data

- Object tracking is used to follow objects across frames, understand movement, and count people and objects

- Simultaneous localization and mapping (SLAM) enables devices and robots to build a map of their surroundings while localizing themselves within it
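
The object tracking bullet can be made concrete with a greedy intersection-over-union (IoU) tracker. The Python sketch below is minimal. It assumes a detector already returns axis-aligned boxes as `(x_min, y_min, x_max, y_max)` tuples for each frame. The `IouTracker` class and its `min_iou` threshold of 0.3 are illustrative choices and are not part of any specific library. Production trackers usually add motion prediction, for example a Kalman filter, and keep unmatched tracks alive for a few frames.

```python
from typing import Dict, List, Tuple

# Hypothetical box format: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Greedy IoU tracker that keeps a stable ID for each object across frames."""

    def __init__(self, min_iou: float = 0.3) -> None:
        self.min_iou = min_iou
        self.tracks: Dict[int, Box] = {}
        self.next_id = 0  # also the number of distinct objects seen so far

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        # Score every (track, detection) pair and accept the best matches first.
        pairs = sorted(
            ((iou(box, det), tid, di)
             for tid, box in self.tracks.items()
             for di, det in enumerate(detections)),
            reverse=True,
        )
        used_tracks, used_dets = set(), set()
        updated: Dict[int, Box] = {}
        for score, tid, di in pairs:
            if score < self.min_iou:
                break  # remaining pairs overlap too little to be the same object
            if tid in used_tracks or di in used_dets:
                continue
            updated[tid] = detections[di]
            used_tracks.add(tid)
            used_dets.add(di)
        # Unmatched detections start new tracks; unmatched tracks are dropped.
        for di, det in enumerate(detections):
            if di not in used_dets:
                updated[self.next_id] = det
                self.next_id += 1
        self.tracks = updated
        return dict(updated)
```

With this design, counting objects only requires reading `next_id`, because every unmatched detection receives a fresh ID.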

In Virtual Reality (VR), Computer Vision is used for:

- Hand pose estimation and gesture tracking

- Eye-tracking and gaze recognition

- Room mapping and point-cloud techniques

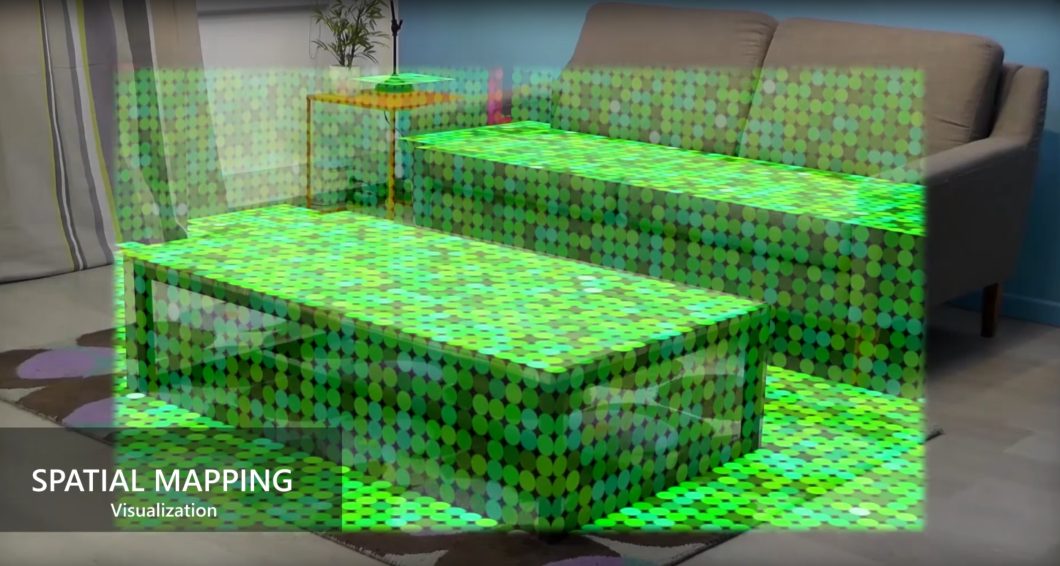

Advanced Tracking and Spatial Mapping

For smooth and immersive AR/VR experiences, precise tracking and spatial mapping are essential. These technologies make it possible to recognize objects’ shape, location, and orientation in 3D space. This information is used to create a wide range of augmented and virtual reality applications. Examples include:

- Precise Object Placement. Virtual objects can be accurately positioned and anchored in the real world, enabling realistic interactions and occlusion effects.

- Natural Navigation. Users can move through virtual environments or manipulate objects as the system tracks their movements and gestures.

- Augmented Reality Overlays. Information and graphics can be seamlessly overlaid onto the real world, aligned with physical objects and surfaces.
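
Precise object placement usually works by expressing a virtual object's geometry relative to a tracked anchor, such as a detected tabletop. When tracking refines the anchor's pose, the object's world position is recomputed. The sketch below assumes a Y-up coordinate system and an anchor that rotates only about the vertical axis. `anchor_to_world` is a hypothetical helper. Real AR frameworks expose full 6-DoF poses instead, usually as 4x4 matrices or quaternions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def anchor_to_world(point_local: Vec3, anchor_position: Vec3, anchor_yaw_rad: float) -> Vec3:
    """Map a point from an anchor's local frame into world coordinates.

    Assumes a Y-up frame and an anchor rotated only about the vertical (Y) axis,
    as for a horizontal surface such as a floor or tabletop.
    """
    x, y, z = point_local
    c, s = math.cos(anchor_yaw_rad), math.sin(anchor_yaw_rad)
    # Rotate about Y with the matrix [[c, 0, s], [0, 1, 0], [-s, 0, c]] ...
    xr = c * x + s * z
    zr = -s * x + c * z
    # ... then translate by the anchor's world position.
    ax, ay, az = anchor_position
    return (xr + ax, y + ay, zr + az)
```

Take a virtual mug stored at local `(0.1, 0.0, 0.0)`. If tracking later reports that the anchor has turned by 90 degrees, calling `anchor_to_world` again moves the mug with the surface. The object's local coordinates never change.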

Immersive Object Recognition and Interaction

Creating fully immersive augmented reality and virtual reality experiences requires reliable object detection and interaction. These technologies let users interact with virtual objects as if they were physically present, providing a new level of engagement and realism.

Below, we look at some of the most popular AR/VR techniques that push the limits of immersive object recognition and interaction.

Occlusion-Aware Rendering

For an augmented reality experience to be credible, virtual objects must interact convincingly with real-world objects. They should hide real objects that are behind them and be hidden by real objects in front of them. This requires precise depth estimates and scene understanding to determine which objects are in front of others and to adjust the rendered image accordingly.

The required depth information can come from depth-sensing cameras, stereo vision, or learning-based depth estimation approaches.
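
Once per-pixel depth is available for both the real scene and the rendered virtual content, occlusion reduces to a per-pixel depth test. The minimal sketch below uses plain nested lists for readability. It assumes both depth maps are in meters and aligned to the same camera view. Pixels where no virtual content was drawn are marked with `math.inf`. A real renderer would run this test on the GPU, typically by writing the sensed depth into the depth buffer.

```python
import math
from typing import List, Tuple

RGB = Tuple[int, int, int]


def composite_with_occlusion(
    camera_rgb: List[List[RGB]],
    real_depth_m: List[List[float]],
    virtual_rgb: List[List[RGB]],
    virtual_depth_m: List[List[float]],
) -> List[List[RGB]]:
    """Show a virtual pixel only where it is closer to the camera than the real surface."""
    out = []
    for r, camera_row in enumerate(camera_rgb):
        row = []
        for c, real_pixel in enumerate(camera_row):
            if virtual_depth_m[r][c] < real_depth_m[r][c]:
                row.append(virtual_rgb[r][c])  # virtual object is in front
            else:
                row.append(real_pixel)  # real object occludes it, or nothing was drawn
        out.append(row)
    return out
```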

Real-time Object Manipulation

Enabling users to pick up, move, and interact with virtual objects as if they were physically present is key to engaging AR/VR experiences. This requires accurate object recognition, pose estimation, and real-time physics simulation. It also relies on techniques such as collision detection and response, grasping and manipulation, and haptic feedback.
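
Collision detection and response often start with simple bounding volumes before any finer checks run. The sketch below uses axis-aligned bounding boxes (AABBs), which are one common choice among several; others include spheres, convex hulls, and meshes. The `AABB` class and the `push_out` helper are hypothetical names. `push_out` returns the smallest translation that separates a virtual object from something it collides with. This is a basic form of collision response, and it can stop a grabbed object from passing through a table.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AABB:
    """Axis-aligned bounding box given by its minimum and maximum corners."""
    lo: Vec3
    hi: Vec3


def overlaps(a: AABB, b: AABB) -> bool:
    """Boxes collide if their intervals overlap on all three axes."""
    return all(a.lo[i] <= b.hi[i] and b.lo[i] <= a.hi[i] for i in range(3))


def push_out(a: AABB, b: AABB) -> Optional[Vec3]:
    """Smallest translation that moves box `a` out of box `b`, or None if they don't collide."""
    if not overlaps(a, b):
        return None
    best_axis, best_shift = 0, float("inf")
    for i in range(3):
        forward = b.hi[i] - a.lo[i]   # move a along +axis until a.lo == b.hi
        backward = a.hi[i] - b.lo[i]  # move a along -axis until a.hi == b.lo
        shift = forward if forward < backward else -backward
        if abs(shift) < abs(best_shift):
            best_axis, best_shift = i, shift
    return tuple(best_shift if i == best_axis else 0.0 for i in range(3))
```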

Surface Detection and Tracking

Accurately detecting and tracking real-world surfaces, such as floors, tables, and walls, allows virtual elements to be anchored to them and interacted with. This creates natural and intuitive interactions in AR.
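
A common way to detect surfaces is to fit planes to the 3D feature points that tracking produces. Below is a minimal RANSAC plane fit in pure Python. It assumes the points are `(x, y, z)` tuples in meters. The iteration count and the 1 cm inlier threshold are illustrative defaults. Production systems typically refine the winning plane with a least-squares fit and track it across frames.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a: Point, b: Point) -> Point:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _dot(a: Point, b: Point) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def fit_plane_ransac(
    points: List[Point], iterations: int = 200, threshold: float = 0.01, seed: int = 0
) -> Optional[Tuple[Point, float, List[Point]]]:
    """Return (unit normal n, offset d, inliers) for the plane n . p + d = 0 with most inliers."""
    rng = random.Random(seed)
    best: Optional[Tuple[Point, float]] = None
    best_inliers: List[Point] = []
    for _ in range(iterations):
        p1, p2, p3 = rng.sample(points, 3)  # minimal sample that defines a plane
        n = _cross(_sub(p2, p1), _sub(p3, p1))
        norm = _dot(n, n) ** 0.5
        if norm < 1e-9:
            continue  # the three points are (nearly) collinear
        n = (n[0] / norm, n[1] / norm, n[2] / norm)
        d = -_dot(n, p1)
        # Points within `threshold` meters of the candidate plane count as support.
        inliers = [p for p in points if abs(_dot(n, p) + d) < threshold]
        if len(inliers) > len(best_inliers):
            best, best_inliers = (n, d), inliers
    if best is None:
        return None
    return best[0], best[1], best_inliers
```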

Multimodal Object Recognition and Interaction

Combining information from multiple sensors (cameras, LiDAR, IMU) can lead to more robust and accurate object recognition and interaction, especially in challenging environments. For example, LiDAR data can provide accurate depth information, while cameras offer rich texture and color details.
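
One simple way to combine a camera-based depth estimate with a LiDAR measurement of the same point is inverse-variance weighting. This method assumes the two errors are independent and roughly Gaussian. The variances used with this sketch are placeholder values for illustration, not sensor specifications. The more certain sensor dominates the result, and the fused estimate is more certain than either input.

```python
from typing import Optional, Tuple


def fuse_depth(
    camera_depth_m: float,
    camera_var: float,
    lidar_depth_m: Optional[float],
    lidar_var: float,
) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of two independent depth estimates.

    Returns (fused_depth_m, fused_variance). LiDAR returns are often sparse,
    so a missing LiDAR value (None) falls back to the camera estimate.
    """
    if lidar_depth_m is None:
        return camera_depth_m, camera_var
    w_cam = 1.0 / camera_var
    w_lidar = 1.0 / lidar_var
    fused = (w_cam * camera_depth_m + w_lidar * lidar_depth_m) / (w_cam + w_lidar)
    return fused, 1.0 / (w_cam + w_lidar)
```

Consider a camera estimate of 2.2 m with variance 0.04 and a LiDAR reading of 2.0 m with variance 0.0004. The fused depth lands just above 2.0 m, with a variance below that of the LiDAR alone.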

Object Properties and Behavior Recognition

Recognizing the properties and behavior of objects (e.g., rigidity, weight, fragility) can further enhance interaction realism. This can be achieved by analyzing object shapes, materials, and past interactions through machine learning techniques.

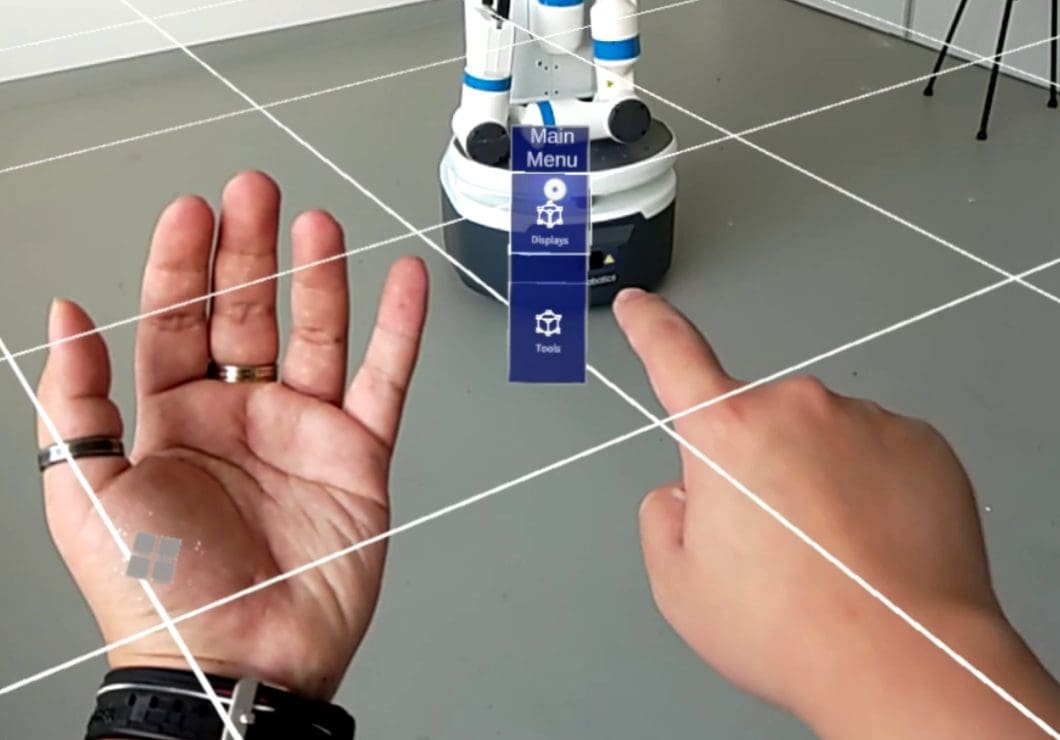

Real-time Gesture Recognition

Real-time gesture recognition sits at the heart of intuitive and natural interactions in AR/VR. By interpreting hand and body movements, these systems allow users to control virtual objects, navigate environments, and express themselves within immersive worlds. Below, we take a closer look at the technologies shaping this field:

Hand Pose Estimation

The foundation of gesture recognition lies in accurately understanding the pose and configuration of the hand. This is achieved through various techniques:

- Marker-Based Tracking. Small physical markers are attached to gloves or fingers, and their positions are tracked to measure hand movements. While simple and reliable, this approach can be cumbersome and limit natural hand gestures.

- Markerless Tracking. Leverages computer vision algorithms to analyze hand poses directly from camera images. Deep learning models trained on vast datasets of hand images achieve impressive accuracy but require significant computational resources.

- Hybrid Approaches. Marker-based and markerless techniques are combined. Markers are often used for initial calibration and coarse tracking, while markerless methods provide finer-grained details of finger movements.

Gesture Recognition and Classification

Once hand poses are estimated, gestures need to be identified and classified based on their meaning. This involves:

- Gesture Libraries. Predefined sets of common gestures with associated hand poses are used for simple recognition tasks.

- Machine Learning Models. Deep learning algorithms trained on large datasets of labeled hand gestures can accurately recognize complex and dynamic gestures. Other methods track key points to understand movement.

- Context-Aware Recognition. Considers the surrounding environment and user intent to improve gesture recognition accuracy, especially when multiple interpretations are possible.
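
A gesture library can be as simple as geometric rules applied to estimated hand keypoints. The sketch below assumes a 21-keypoint hand model, the layout used by MediaPipe Hands. In that layout, the wrist is at index 0, the thumb tip at 4, the index fingertip at 8, and the middle-finger base (MCP joint) at 9. The 0.25 threshold is a hypothetical value you would tune. Dividing by the wrist-to-knuckle distance makes the rule independent of how far the hand is from the camera.

```python
import math
from typing import Sequence

# Assumed 21-keypoint hand layout (the indexing used by MediaPipe Hands).
WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9


def classify_pinch(keypoints: Sequence[Sequence[float]], threshold: float = 0.25) -> str:
    """Rule-based gesture library entry: 'pinch' if thumb and index tips nearly touch."""
    if len(keypoints) < 21:
        raise ValueError("expected 21 hand keypoints")
    # Normalize by palm size so the rule works at any distance from the camera.
    hand_size = math.dist(keypoints[WRIST], keypoints[MIDDLE_MCP])
    if hand_size == 0:
        return "unknown"
    gap = math.dist(keypoints[THUMB_TIP], keypoints[INDEX_TIP]) / hand_size
    return "pinch" if gap < threshold else "open"
```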

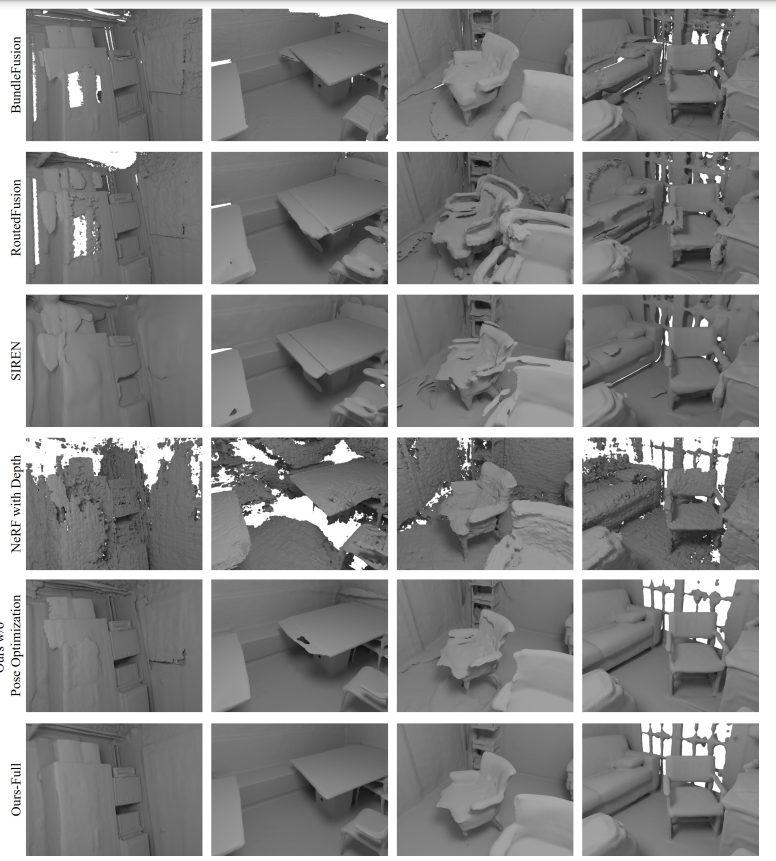

Simultaneous Localization and Mapping (SLAM)

SLAM (Simultaneous Localization and Mapping) is a key component of AR/VR. It enables robots and intelligent devices to track their location within an environment while building a map of it at the same time. SLAM techniques are necessary for navigating complex environments and maintaining spatial awareness in dynamic conditions.

- Visual SLAM. Leverages cameras to capture visual data and extract features like edges and corners. Algorithms then use these features to estimate the device’s pose (position and orientation) and update the map accordingly.

- LiDAR SLAM. Employs LiDAR sensors to measure distances to objects and generate 3D point clouds of the environment. This enables more accurate and resilient mapping, particularly in low-texture or poorly lit environments.

- Fusion-based SLAM. Combines data from multiple sensors (cameras, LiDAR, IMUs) to achieve more robust and accurate tracking and mapping, particularly in challenging conditions where individual sensors might struggle.
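
A full fusion-based SLAM system is far too large to sketch here. Its core idea is to blend a fast sensor that drifts with a slower sensor that does not. A complementary filter that fuses gyroscope and accelerometer readings into a pitch estimate illustrates this idea. The axis convention, with z pointing up when the device lies flat, and the `alpha` value of 0.98 are assumptions. The accelerometer term is only a valid tilt reference while the device is not otherwise accelerating.

```python
import math
from typing import Tuple


def complementary_filter(
    pitch_prev: float,
    gyro_rate: float,
    accel: Tuple[float, float, float],
    dt: float,
    alpha: float = 0.98,
) -> float:
    """One update step of a complementary filter for pitch (radians).

    gyro_rate: angular velocity about the pitch axis in rad/s (smooth but drifts).
    accel: (ax, ay, az) in m/s^2; gravity gives a noisy but drift-free pitch.
    Assumes z points up when the device lies flat; conventions vary by device.
    """
    ax, ay, az = accel
    pitch_gyro = pitch_prev + gyro_rate * dt           # integrate the gyroscope
    pitch_accel = math.atan2(-ax, math.hypot(ay, az))  # tilt from the gravity vector
    # Trust the gyro over short time scales and the accelerometer over long ones.
    return alpha * pitch_gyro + (1.0 - alpha) * pitch_accel
```

Suppose the gyroscope has a constant bias of 0.01 rad/s. Integrating it alone would drift by 0.1 rad over 10 seconds. The filtered estimate instead settles at a small, bounded offset.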

Enhanced User Interfaces with Computer Vision

In addition to helping AR and VR systems understand users' surroundings, computer vision is also transforming how users interact with digital components in these immersive experiences. By using insights from visual data, developers can design more intuitive, natural, and context-aware user interfaces (UIs).

Here’s a list of some of the most important techniques:

Eye Tracking

Eye tracking goes beyond simple gaze detection by measuring where users are looking and for how long. This information can be used to:

- Focus Attention. VR systems can concentrate rendering resources on the areas where users are fixating, a technique known as foveated rendering. This improves visual fidelity and reduces computational load.

- Adapt Content. Systems can adjust the content, level of detail, or narrative based on where the user is looking, creating a more personalized and engaging experience.
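
Foveated rendering can be sketched as choosing a coarser shading rate the farther a screen region lies from the gaze direction. Both direction vectors are assumed to be in the same eye-space frame. The 5° and 15° thresholds and the 1/2/4 rates are hypothetical values chosen for illustration. Real headsets and graphics APIs, such as those that support variable-rate shading, define their own tiers.

```python
import math
from typing import Sequence


def eccentricity_deg(gaze_dir: Sequence[float], region_dir: Sequence[float]) -> float:
    """Angle in degrees between the gaze ray and the ray through a screen region."""
    dot = sum(g * r for g, r in zip(gaze_dir, region_dir))
    norms = math.hypot(*gaze_dir) * math.hypot(*region_dir)
    cos_angle = max(-1.0, min(1.0, dot / norms))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))


def shading_rate(ecc_deg: float, fovea_deg: float = 5.0, mid_deg: float = 15.0) -> int:
    """Hypothetical tiers: return N, meaning 'shade once per N x N pixel block'."""
    if ecc_deg <= fovea_deg:
        return 1  # full resolution where the user is looking
    if ecc_deg <= mid_deg:
        return 2
    return 4  # coarsest shading in the far periphery
```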

Gaze-based Interaction

Building on eye tracking, gaze-based interaction can eliminate the need for physical controllers or traditional UI elements. Users can interact with virtual objects or menus directly by looking at them. They then trigger predefined techniques such as dwell-time selection (holding their gaze on a target for a set time) or gaze gestures, both of which depend on precise eye and iris tracking. This creates a more immersive, hands-free interaction experience.
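
Dwell-time selection can be implemented as a small per-frame state machine. The object under the user's gaze must stay the same for a set duration before it is selected. The sketch assumes an upstream gaze ray cast reports which object, if any, is being looked at in each frame. `DwellSelector` and its default dwell time are hypothetical. Resetting the timer whenever the gaze moves helps avoid the "Midas touch" problem, where everything the user glances at gets selected.

```python
from typing import Hashable, Optional


class DwellSelector:
    """Selects a gazed-at target after the gaze has rested on it for `dwell_s` seconds."""

    def __init__(self, dwell_s: float = 1.0) -> None:
        self.dwell_s = dwell_s
        self.target: Optional[Hashable] = None
        self.elapsed = 0.0
        self.fired = False

    def update(self, gazed_target: Optional[Hashable], dt: float) -> Optional[Hashable]:
        """Call once per frame; returns the target on the frame it gets selected."""
        if gazed_target != self.target:
            # Gaze moved to a new object (or to nothing): restart the timer.
            self.target = gazed_target
            self.elapsed = 0.0
            self.fired = False
            return None
        if gazed_target is None or self.fired:
            return None  # nothing to select, or already selected during this dwell
        self.elapsed += dt
        if self.elapsed >= self.dwell_s:
            self.fired = True
            return gazed_target
        return None
```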

Dynamic UI Overlays

Static UI overlays in AR can disrupt the natural view of the real world. Computer vision enables dynamic overlays that:

- Adapt to the Environment. Overlays can adjust their size, position, and appearance based on surrounding objects and scene context, reducing visual clutter and maintaining user focus.

- Perform Occlusion-Aware Rendering. Virtual elements can be selectively hidden or rendered transparently when occluded by real-world objects, ensuring a seamless blending of the physical and digital worlds.
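
Environment-adaptive placement can be sketched as a search over a few candidate label positions. The search picks the position that stays on screen and covers the least area of detected real-world objects. The sketch assumes screen-space boxes `(x, y, width, height)` in pixels with the origin at the top-left, and takes the obstacles from an object detector. The helper names, the four candidate positions, and the 8-pixel margin are illustrative choices.

```python
from typing import List, Optional, Tuple

# Screen-space rectangle: (x, y, width, height) in pixels, origin at the top-left.
Rect = Tuple[float, float, float, float]


def overlap_area(a: Rect, b: Rect) -> float:
    """Area shared by two screen-space rectangles."""
    w = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    return w * h


def place_label(
    target: Rect,
    label_size: Tuple[float, float],
    obstacles: List[Rect],
    screen_size: Tuple[float, float],
    margin: float = 8.0,
) -> Optional[Rect]:
    """Pick the on-screen candidate position that covers the least area of detected objects."""
    tx, ty, tw, th = target
    lw, lh = label_size
    sw, sh = screen_size
    candidates = [
        (tx + (tw - lw) / 2, ty - lh - margin),  # above the target
        (tx + tw + margin, ty + (th - lh) / 2),  # to the right
        (tx + (tw - lw) / 2, ty + th + margin),  # below
        (tx - lw - margin, ty + (th - lh) / 2),  # to the left
    ]
    best: Optional[Rect] = None
    best_cost = float("inf")
    for x, y in candidates:
        if x < 0 or y < 0 or x + lw > sw or y + lh > sh:
            continue  # skip positions that would fall off screen
        rect = (x, y, lw, lh)
        cost = sum(overlap_area(rect, o) for o in obstacles)
        if cost < best_cost:
            best, best_cost = rect, cost
    return best
```

Ties keep the earlier candidate. When nothing is in the way, the label therefore defaults to sitting above its target.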

Facial Expression Recognition

Understanding user emotions through facial expressions can enhance AR/VR interfaces in several ways:

- Adaptive Interactions. Virtual avatars or systems can respond empathetically to user emotions, providing personalized feedback or adjusting the experience accordingly.

- Accessibility for Individuals With Disabilities. Facial emotion recognition can be used to develop alternative communication methods for individuals with speech or motor impairments.

Challenges in Computer Vision for Virtual and Augmented Reality

While computer vision opens a world of exciting possibilities in AR/VR, significant challenges remain:

- Computational Limitations. Real-time processing of visual data, especially for complex scenarios with high-resolution images and multiple sensors, requires significant computational resources. Battery life and device overheating can become limitations in mobile AR/VR applications.

- Lighting and Environmental Variations. Model performance can degrade significantly under varying lighting conditions, shadows, and occlusions. Accurate object recognition and tracking become challenging in poorly lit or cluttered environments.

- Occlusion Handling. Accurately handling occluded objects and ensuring seamless transitions when real-world objects partially obscure virtual elements remains a technical hurdle.

- Data and Privacy Concerns. Training robust computer vision models requires vast amounts of labeled data, which raises concerns about data privacy and potential biases in the datasets.

Innovations Pushing Forward

Despite these challenges, researchers and developers continue to push the boundaries of computer vision for real-world AR/VR:

- Edge Computing. Offloading computationally intensive tasks from devices to the cloud or edge networks reduces the processing burden on AR/VR devices, improving performance and battery life.

- Lightweight Deep Learning Models. Smaller and more efficient deep learning architectures improve performance on resource-constrained devices with little or no loss of accuracy.

- Sensor Fusion. Combining data from multiple sensors (cameras, LiDAR, IMU) provides richer environmental information, leading to more robust and accurate tracking, mapping, and object recognition.

- Synthetic Data Generation. Generating realistic synthetic data with controlled variations in lighting, backgrounds, and occlusions can augment real-world datasets and improve algorithm robustness.

- Privacy-Preserving Techniques. Secure enclaves and differential privacy methods can protect user data during collection, processing, and storage, addressing privacy concerns in computer vision applications.
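
Post-training quantization is one widely used way to make models lighter. It stores weights as 8-bit integers instead of 32-bit floats, which cuts their memory footprint by roughly 4x. The pure-Python sketch below shows symmetric per-tensor int8 quantization of a weight list. It is a simplified illustration. Frameworks such as TensorFlow Lite and PyTorch implement more elaborate schemes, including per-channel scales, zero points, and calibration of activations.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: each w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from their int8 codes."""
    return [v * scale for v in q]
```

Each restored weight differs from the original by at most half of `scale`. This is why the largest-magnitude weight in a tensor determines the precision available to all the others.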

Practical Applications of AR/VR Across Industries

Video Games

In AR gaming, the real world is enhanced with digital overlays, allowing gamers to interact with the virtual environment. This technology introduces elements like geolocation-based challenges, bringing gameplay into the streets and public spaces. On the other hand, VR gaming transports players into entirely virtual worlds, offering a level of immersion where users feel present in the game environment.

The use of motion controllers, haptic feedback, and realistic simulations enhances the gaming experience, making it more engaging and lifelike.

Education and Training

Augmented Reality (AR) uses computer vision to precisely map and overlay digital information onto real-world educational content, enabling students to interact with augmented content. In Virtual Reality (VR), intricate computer vision systems create immersive, synthetic environments by tracking user movements, gestures, and interactions. These technologies use complex CV models for real-time object recognition, spatial mapping, and precise alignment of digital elements.

For example, virtual environments allow architecture students to explore and manipulate three-dimensional architectural models, providing a realistic sense of scale and proportion. Students can virtually walk through buildings, visualize different design elements, and experience how spaces come together.

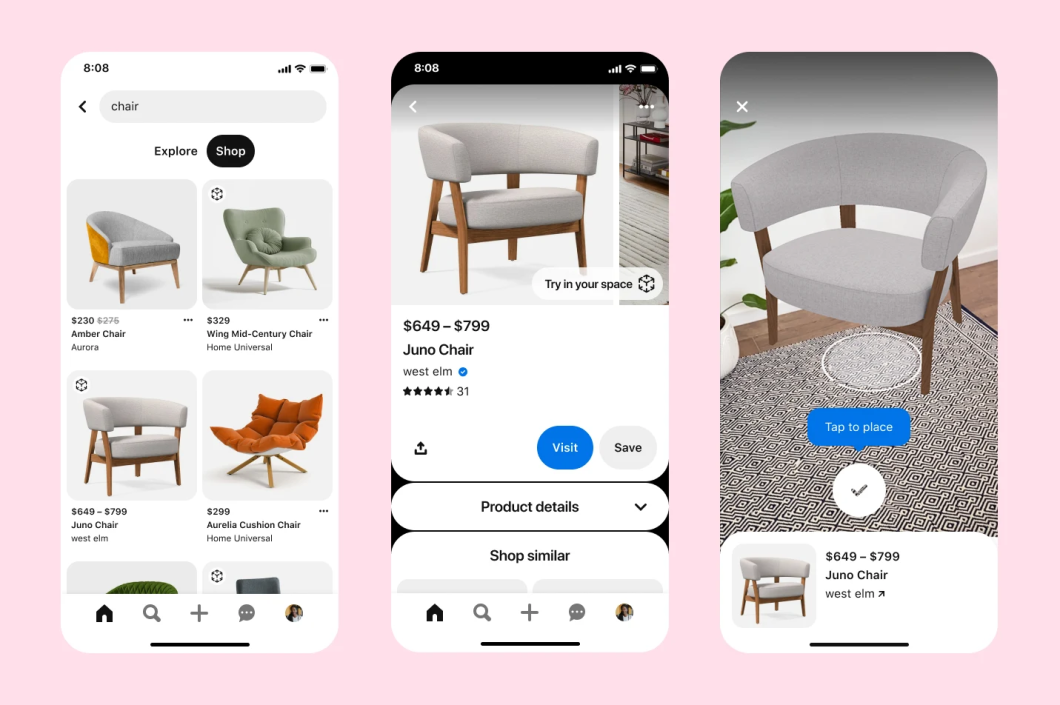

Retail and Product Visualization

In retail applications, the implementation of AR/VR technologies goes beyond virtual try-on experiences, playing a crucial role in transforming the overall shopping journey. These technologies offer immersive and interactive features like augmented product displays and virtual showrooms. Customers can explore detailed product information, compare options, and experience a virtual walk-through of the store.

Additionally, AR applications provide real-time information about products, promotions, and personalized recommendations, creating a dynamic and engaging shopping environment. This not only enhances the customer experience but also provides retailers with valuable insights into consumer preferences and behavior.

Manufacturing and Design

In manufacturing, AR overlays offer real-time guidance and information for tasks such as assembly, maintenance, and design validation. Workers can access crucial data and instructions overlaid in their physical environment, improving efficiency and accuracy. These technologies facilitate enhanced training programs by allowing workers to visualize complex processes and machinery virtually.

Moreover, AR/VR applications contribute to design validation, enabling engineers to assess and refine prototypes in a simulated environment before physical production. This integration enhances overall productivity, reduces errors, and ensures a more streamlined and effective manufacturing workflow.

Healthcare

In the healthcare sector, the integration of AR/VR solutions plays a pivotal role in remote surgery assistance, providing surgeons with immersive and precise visualizations that enhance their ability to perform procedures from a distance. Additionally, AR/VR is instrumental in rehabilitation exercises, offering interactive and personalized simulations that aid patients in their recovery.

Medical training also benefits, as these technologies enable realistic and immersive simulations for training healthcare professionals. The precise tracking and object recognition capabilities contribute to the accuracy and effectiveness of these applications. These advancements are seen in patient care, surgical procedures, and medical education.

Collaborations and Integration with AI

The synergy between computer vision and artificial intelligence (AI) is propelling advancements in AR/VR at an unprecedented pace. By combining their respective strengths, these technologies are unlocking new levels of perception, understanding, and interaction within immersive experiences.

Machine learning sits at the heart of this collaboration, empowering computer vision algorithms to:

- Learn From Vast Datasets. Models trained on large collections of labeled visual data can recognize objects, track movements, and interpret gestures with increasing accuracy.

- Adapt to Diverse Environments. By learning from different lighting conditions, backgrounds, and object variations, algorithms become more robust and generalize well to unseen scenarios.

- Reason and Make Decisions. AI-powered CV can identify objects and reason about their relationships, interactions, and implications within the AR/VR environment.

Examples of Collaborative Innovation:

- Real-Time Scene Understanding. AI can analyze visual data in real-time to understand the spatial layout, objects, and activities occurring within the AR/VR scene. This enables dynamic adaptation of virtual elements and content based on the context.

- Personalized AR Experiences. AI algorithms can personalize AR experiences by learning user preferences and tailoring content, interactions, and information delivery to individual needs and interests.

- Emotionally Intelligent VR Avatars. AI can analyze user facial expressions and voice patterns to create virtual avatars that respond empathetically and adapt their behavior to user emotions.

- Predictive Maintenance in AR Applications. By analyzing visual data from industrial equipment, AI-powered computer vision can predict potential failures and guide technicians through AR-assisted repair processes.

The Best Virtual Reality and Augmented Reality Open-Source Projects

The open-source community plays a pivotal role in advancing computer vision for AR/VR. By offering freely available resources like platforms, libraries, and datasets, open-source empowers developers and researchers to create groundbreaking applications.

Here are some noteworthy tools and datasets for AR/VR development. ARKit and ARCore are freely available platform SDKs from Apple and Google rather than open-source projects:

- OpenCV. A flexible library for real-time computer vision applications. OpenCV is frequently used for image processing, object tracking, and AR/VR applications.

- ARKit. Apple’s framework for building AR experiences on iOS devices provides access to camera, LiDAR, and motion tracking capabilities.

- ARCore. Google’s framework for building AR experiences on Android devices offers similar functionalities to ARKit.

- SUN3D. A large-scale RGB-D video dataset of indoor spaces with 3D reconstructions and object annotations, valuable for training object recognition and scene understanding algorithms.

- Matterport3D. A comprehensive collection of 3D scans of indoor environments, useful for developing and testing spatial mapping and navigation algorithms in AR/VR.

- ReplicaNet. A dataset of synthetically generated images and 3D models, offering a controlled environment for training and evaluating computer vision algorithms under various conditions.

Trends in Computer Vision for AR and VR

The future of computer vision in AR/VR is brimming with exciting possibilities:

- Hyper-Realistic Experiences. Advancements in rendering, object recognition, and scene understanding will create blends of the physical and digital worlds that are virtually indistinguishable.

- Affective Computing. VR/AR systems will recognize and respond to users’ emotions through facial expressions, voice analysis, and physiological data, leading to more personalized and engaging experiences.

- Mixed Reality (MR). The lines between AR and VR will continue to blur, creating environments that merge real and virtual elements with increasingly sophisticated interactions.

- Ubiquitous AR. As AR devices become smaller and more integrated into everyday wearables, CV will enable continuous, everyday interactions with the digital world.

To wrap up, computer vision is the foundation for realistic and captivating AR/VR experiences. Its capabilities range from gesture control and object identification to real-time interaction and spatial mapping, and they are changing how we engage with our environment. By addressing enduring challenges, promoting open-source collaboration, and embracing continuous innovation, computer vision will continue to push the boundaries of the virtual world. In doing so, it will reshape real-life human-computer interaction and upend a multitude of industries.

Real-World Computer Vision For Businesses

Our computer vision platform, Viso Suite, is the end-to-end solution for enterprises to build and scale real-world computer vision. Viso Suite covers the entire AI lifecycle, from data collection to security, in a state-of-the-art platform. To learn more, book a demo with us.