In 2016, a Stanford University study panel that included P. Stone, R. Brooks, et al. published the report “One Hundred Year Study on Artificial Intelligence (AI100)”. The panel summarized current progress and anticipated future advances in AI and home robots as follows:

- Advances in AI and computer vision helped the gaming industry surpass Hollywood as the biggest entertainment industry.

- Deep learning and Convolutional Neural Networks (CNNs) have enabled speech understanding and computer vision on our phones and in our cars and homes.

- Natural Language Processing (NLP) and knowledge representation and reasoning have enabled machines to perform meaningful web searches. They can also answer a wide range of questions and communicate more naturally.

- Home Robots: Over the next 15 years, advances in mechanical and AI technologies are expected to increase the reliable use of home robots in a typical household. Special-purpose robots will also deliver packages, clean offices, and improve security.

Home Robots 2030 Roadmap

In the Home Robots Roadmap paper, the panel researchers stated that technical constraints and the high price of mechanical components still limit robot applications. The complexity of market-ready hardware may also slow the development of self-driving cars and household robots.

Chip producers (Nvidia, Qualcomm, etc.) have already created System in Module (SiM) products that combine many System-on-Chip (SoC) components. These offer more computing power than the supercomputers of 10 years ago, with eight or more 64-bit cores, specialized silicon for camera drivers, additional DSPs, and processors for AI algorithms. As a result, low-cost devices will be able to support far more embedded AI than was possible over the past 20 years.

Cloud systems provide a platform and IoT services for new home robots. Data collected in many different homes can feed cloud-based (SaaS) machine learning, which in turn improves the robots that are already deployed.

The huge progress in speech recognition and image classification, enabled by deep learning, will enhance robots’ interactions with people in their homes. Low-cost 3D sensors, driven by gaming platforms, have enabled the development of 3D perception algorithms. This will enhance the design and adoption of home and service robots.

In the past few years, many research labs worldwide have worked on low-cost, safe robot arms. This work has made robots that are practical for the home achievable within a reasonable time frame. Many AI startups around the world are developing AI-based robots for homes, with a focus on human-computer interaction (HCI). As these robots enter homes, ethics and privacy issues may arise.

Practical Challenges in Home Robotics

Researchers N.M. Shafiullah et al. (NYU, 2023) published the paper “On Bringing Robots Home”. They introduced the concept of a “general home machine”, a domestic assistant that will adapt and learn from users’ needs.

The researchers aimed for a cost-effective home robot, an important goal in robotics that has remained elusive for decades. To that end, they launched a large-scale effort by introducing Dobb·E, an affordable general-purpose system for learning robotic manipulation within a household environment.

Dobb·E is a behavior cloning framework. Behavior cloning is a subclass of imitation learning, a machine learning approach in which a model learns to perform a task by imitating humans. Below we summarize the practical challenges the authors overcame in developing their robot.
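To make the idea of behavior cloning concrete, here is a minimal sketch that treats it as ordinary supervised learning on expert (state, action) pairs. It is a toy under stated assumptions, not Dobb·E's method: the one-dimensional state, the linear policy, the made-up "expert", and the names `fit_linear_policy` and `make_policy` are all illustrative.

```python
# Behavior cloning in miniature: fit a policy to expert (state, action) pairs
# with plain supervised learning. All names and data here are illustrative.

def fit_linear_policy(demos):
    """Least-squares fit of action = w * state + b to demonstration pairs."""
    n = len(demos)
    mean_s = sum(s for s, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in demos)
    var = sum((s - mean_s) ** 2 for s, _ in demos)
    w = cov / var
    b = mean_a - w * mean_s
    return w, b


def make_policy(w, b):
    """Return a policy that maps a state to the cloned action."""
    return lambda state: w * state + b


# Toy "expert": drives a gripper toward position 0.5 with gain 2.0.
# state = gripper position, action = commanded velocity.
expert_demos = [(s / 10, 2.0 * (0.5 - s / 10)) for s in range(11)]

w, b = fit_linear_policy(expert_demos)
policy = make_policy(w, b)
print(policy(0.25))  # the cloned policy reproduces the expert's command
```

The policy never sees the expert's rule, only its recorded behavior. A real system would replace the linear fit with a neural network and the scalar state with camera images, but the training signal is the same kind of demonstration data.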

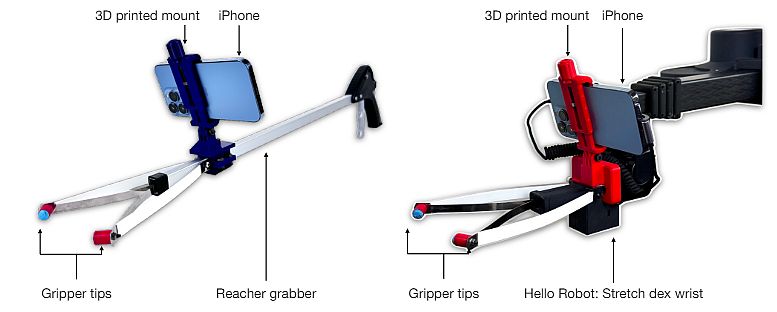

Hardware Design

The basis of their robot is a single-arm mobile manipulator that is available for purchase on the open market. They replaced the robot’s standard suction-cup-style gripper tips with small, cylindrical tips. This replacement helps the robot manipulate finer objects, such as door and drawer handles, without getting stuck.

- For experiments, they used the Stretch RE1 with the dexterous wrist attachment, which gives the robot’s end-effector 6D movement.

- The robot hardware is inexpensive, weighs just 23 kilograms, and runs on a battery for up to two hours.

- Additionally, the Stretch RE1 has an onboard Intel NUC computer that can run a learned policy at 30 Hz.

In their experiments, the authors found that the cylindrical tips are better at such manipulations, although they make pick-and-place tasks slightly slower.
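Running a policy at 30 Hz leaves roughly 33 ms per step for reading the camera, running inference, and sending a command. The sketch below shows one common way to structure such a fixed-rate loop. It is an assumption-laden illustration, not the authors' code: `run_policy_loop`, `get_observation`, and `send_action` are hypothetical names, and the clock and sleep functions are injectable only so the loop can be exercised without real hardware.

```python
import time


def run_policy_loop(policy, get_observation, send_action, steps,
                    rate_hz=30.0, clock=time.monotonic, sleep=time.sleep):
    """Call the policy at a fixed rate and return the number of missed deadlines.

    All arguments are hypothetical stand-ins: `policy` maps an observation to
    an action, `get_observation` reads the camera, `send_action` commands the
    robot. `clock` and `sleep` are injectable so the loop can be tested.
    """
    period = 1.0 / rate_hz          # about 33.3 ms per step at 30 Hz
    next_deadline = clock() + period
    missed = 0
    for _ in range(steps):
        action = policy(get_observation())
        send_action(action)
        remaining = next_deadline - clock()
        if remaining > 0:
            sleep(remaining)        # wait out the rest of this period
        else:
            missed += 1             # this step overran its time budget
        next_deadline += period
    return missed


# Dry run with dummy components and no real waiting.
sent = []
run_policy_loop(
    policy=lambda obs: obs * 2,
    get_observation=lambda: 1,
    send_action=sent.append,
    steps=3,
    sleep=lambda seconds: None,
)
print(sent)
```

Counting missed deadlines is a simple way to see whether a given model is small enough for the onboard computer: if inference regularly takes longer than one period, the effective control rate drops below the target.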

Moreover, their setup operates with only one robot-mounted camera, so they did not need to install and calibrate an environment-mounted camera. This makes the setup robust to camera calibration issues and mounting-related environmental changes.

Preparing the Dataset

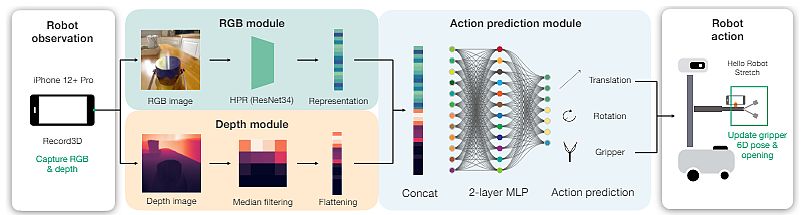

With this hardware setup, collecting data from multiple households became fairly simple. The researchers attached an iPhone to a handheld control stick (the Stick). A demonstrator then performed different actions with the Stick while recording with the Record3D app.

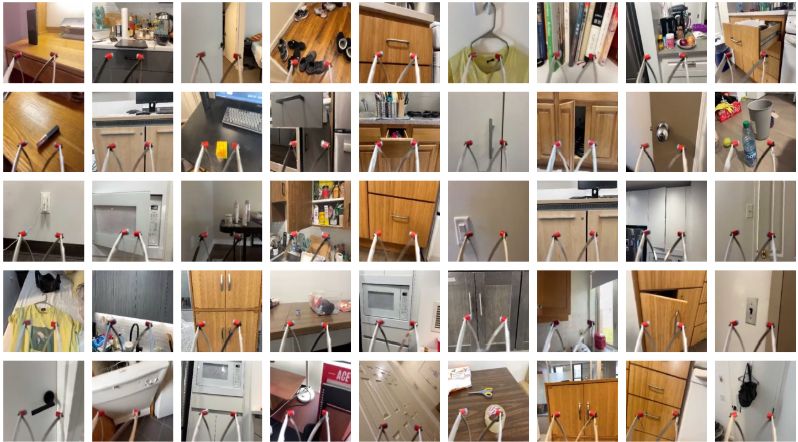

With the help of volunteers, the authors collected a dataset of household tasks that they called Homes of New York (HoNY). In doing so, they demonstrated the effectiveness of the Stick as a data collection tool, making it a launch pad for their large-scale learning approach.

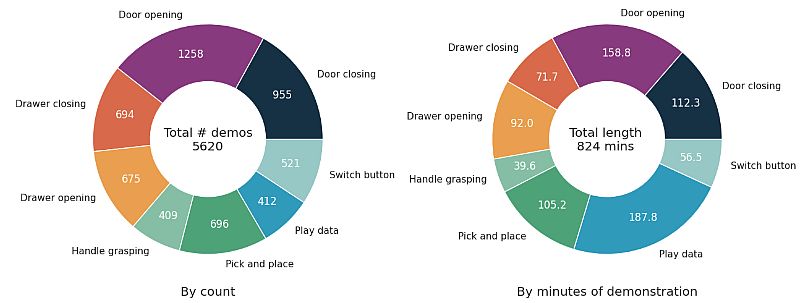

- They collected the HoNY dataset across 22 different homes. It contains 5620 demonstrations in 13 hours of total recording time, almost 1.5 million frames.

- The authors asked the volunteers to focus on 8 classes of tasks: button switching, picking and placing, handle grasping, door opening, door closing, drawer opening, drawer closing, and play data.

- For the play data, they asked the volunteers to record themselves doing anything they liked with the Stick around their home.
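A quick back-of-the-envelope check helps put the HoNY figures above in perspective. The demonstration count, recording time, and number of homes come from the text; the 30 frames-per-second rate is an assumption made here for illustration, so the frame estimate is only approximate.

```python
# Sanity-check the HoNY numbers quoted above.
DEMONSTRATIONS = 5620
RECORDING_HOURS = 13
HOMES = 22
ASSUMED_FPS = 30  # assumption: the frame rate is not stated in the text above

total_seconds = RECORDING_HOURS * 3600
avg_demo_seconds = total_seconds / DEMONSTRATIONS
estimated_frames = total_seconds * ASSUMED_FPS
demos_per_home = DEMONSTRATIONS / HOMES

print(f"average demonstration length: {avg_demo_seconds:.1f} s")
print(f"estimated frames at {ASSUMED_FPS} fps: {estimated_frames:,}")
print(f"average demonstrations per home: {demos_per_home:.0f}")
```

Under that assumption, the average demonstration lasts about 8.3 seconds and the total comes to roughly 1.4 million frames, which is in the same range as the reported figure of almost 1.5 million (the 13 hours is presumably rounded).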

Deployment in Homes

With the Stick for collecting data, the prepared dataset, and an algorithm for fine-tuning the pre-trained model in place, the last step was to combine them and deploy them on a real robot in a home environment.

In their work, the researchers focused on solving tasks that mostly involve acting on the environment. Thus, they assumed that the robot had already navigated to the task space and was facing the task target. In a new home, to solve a new task, they begin by simply collecting a set of demonstrations of that task.

- As a rule of thumb, they collected 24 new demonstrations, which is enough for simple, five-second tasks. In practice, collecting these demos took them about five minutes.

- However, some environments took longer to reset, so collecting demonstrations there took longer.

- To give the robot some ability to generalize spatially, they gathered the demonstrations from several starting positions, generally arranged in a small 5×5 grid. The figure above summarizes the task types.
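The collection recipe above (24 demonstrations, positions on a 5×5 grid, about five minutes in total) can be sketched in a few lines. This is only an illustration under assumptions: the function name `start_grid` and the 5 cm spacing are made up, and the reset-time figure is simply what remains if each demonstration lasts five seconds.

```python
from itertools import product


def start_grid(rows=5, cols=5, spacing_m=0.05):
    """Return (x, y) start offsets in meters, centered on the task setup.

    The 5x5 shape follows the text; the 5 cm spacing is a made-up value.
    """
    row_mid = (rows - 1) / 2
    col_mid = (cols - 1) / 2
    return [((c - col_mid) * spacing_m, (r - row_mid) * spacing_m)
            for r, c in product(range(rows), range(cols))]


positions = start_grid()
demo_starts = positions[:24]   # 24 demonstrations, one start position each

# Time budget: 24 five-second demos inside a roughly five-minute session.
recording_s = 24 * 5
reset_s_per_demo = (5 * 60 - recording_s) / 24
print(len(positions), len(demo_starts), reset_s_per_demo)
```

With these numbers, only two of the five minutes are actual recording; the remaining time works out to 7.5 seconds per demonstration for resetting the scene and repositioning, which is consistent with the note that slow-to-reset environments take longer.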

State-of-the-Art Home Robots (2024)

Dong et al. (March 2024) published their review “The Rise of AI Home Robots in Consumer Electronics”. They explored existing home robots with great potential to become mainstream household appliances.

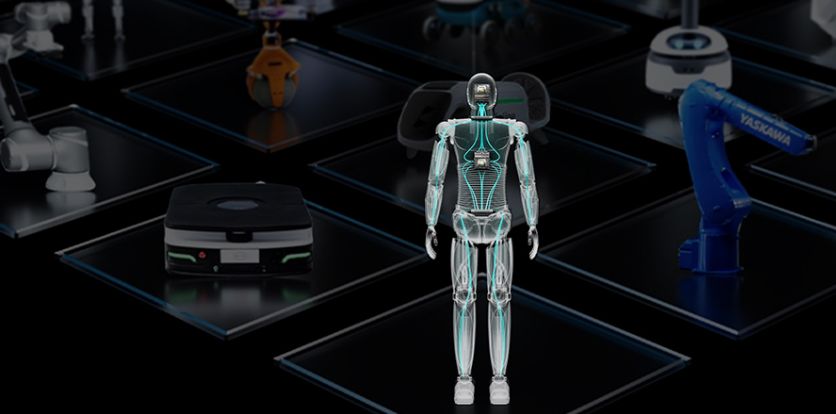

NVIDIA GR00T

In March 2024, NVIDIA announced Project GR00T, a general-purpose foundation model for humanoid robots, as part of its expanding work in robotics and embodied AI.

As part of the initiative, the company also announced Jetson Thor, a new computer for humanoid robots based on the NVIDIA Thor system-on-a-chip (SoC). It also upgraded the NVIDIA Isaac robotics platform with generative AI models and tools for simulation and AI workflow infrastructure.

NVIDIA trained the GR00T model with GPU-accelerated simulation, which enables humanoid robots to learn from a set of human demonstrations through imitation and reinforcement learning. The GR00T model takes multimodal instructions and past interactions as input and produces the actions for the robot to execute.
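The input/output contract described above (multimodal instructions and past interactions in, robot actions out) can be pictured as a small interface. The sketch below is purely hypothetical and is not NVIDIA's API: `PolicyInput`, `Step`, `HumanoidPolicy`, and `ZeroPolicy` are names invented here to show the shape of such a model's inputs and outputs.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass
class Step:
    """One past interaction: what the robot observed and what it did."""
    observation: str
    action: list[float]


@dataclass
class PolicyInput:
    """Multimodal instruction plus interaction history (hypothetical layout)."""
    text_instruction: str
    video_frames: list[bytes] = field(default_factory=list)
    history: list[Step] = field(default_factory=list)


class HumanoidPolicy(Protocol):
    def act(self, inputs: PolicyInput) -> list[float]:
        """Return the next action, e.g. target joint positions."""
        ...


class ZeroPolicy:
    """Placeholder policy that always returns a zero action."""

    def __init__(self, num_joints: int):
        self.num_joints = num_joints

    def act(self, inputs: PolicyInput) -> list[float]:
        return [0.0] * self.num_joints


policy: HumanoidPolicy = ZeroPolicy(num_joints=4)
request = PolicyInput(text_instruction="pick up the cup")
action = policy.act(request)
# Each executed step is appended to the history fed into the next call.
request.history.append(Step(observation="cup on table", action=action))
```

Keeping the history as part of the input is what lets a model of this kind condition on past interactions rather than on the latest instruction alone.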

Tesla Bot – Optimus

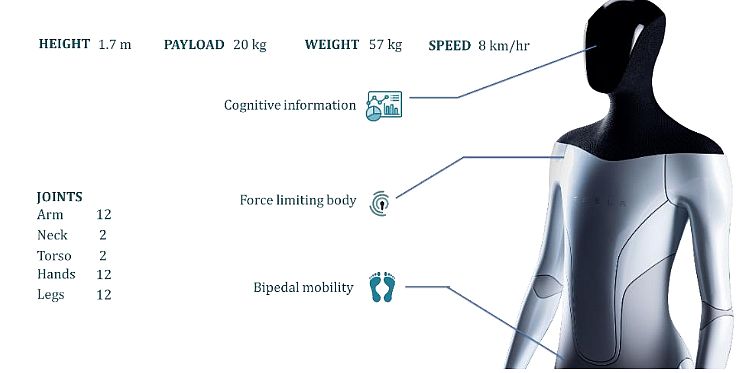

In 2022, Tesla unveiled the latest prototype of the Optimus Tesla Bot. The robot walked forward onto the stage and performed a few dance moves. Optimus matters precisely because it is not revolutionary: it is ordinary technology development aimed at solving business problems.

The team then brought out a second prototype mounted on a stand, and it waved to the audience, showing its wrist and hand motion. Musk said this prototype contained battery packs, actuators, and other parts, but wasn’t ready to walk.

Optimus is around the same size and weight as a person, 160 cm and 70 kg. The home robot can function for several hours without recharging. In addition, Optimus can follow verbal instructions to perform various tasks, including complex jobs such as doing the laundry and bringing items indoors.

Moley Robotic Kitchen

In 2021, we witnessed the development of Moley, a robotic chef with two smoothly moving arms and a stack of pre-programmed recipes. Moley is a home robot with an affordable price and quite an elegant look. It is mounted above the kitchen appliances, so it can grasp and hold items.

The Moley kitchen is an automated kitchen unit consisting of cabinets and robotic arms. It provides a GUI screen with access to a library of recipes, along with a full set of kitchen appliances and equipment suitable for both robot and human use. Moreover, the Moley robot can imitate the skills of human chefs and learn new recipes.

Moley’s best qualities include its consistency, timing, and patience. The robot has two highly complex robotic hands and several types of sensors, all held by a highly precise frame structure. The hands can replicate the movements of a human hand.

Robear: A Care-giving Robot

Japan remains a center for robotic research and applications in healthcare and home robots. In 2015, the RIKEN-SRK Collaboration Center for Human-Interactive Robot Research introduced Robear, an experimental nursing-care robot. It can lift patients from beds into wheelchairs or help them stand up, offering much-needed help for the elderly. The household robot can also bring medicine and other supplies to a patient’s bed.

Robear weighs 140 kg and is the successor to the heavier robot RIBA-II. However, Robear remains a research project, as RIKEN and its partners continue to improve the robot’s technology. They aim to reduce its weight and ensure that it is safe, for example by preventing it from falling over when it lifts a patient.

Amazon Astro

Amazon’s research team adapted navigation technology used for robot vacuums to develop its home robot, Astro. It efficiently maps the house and then navigates smoothly, following simple voice commands, like “Go to the bedroom” or “Take this drink to Maya in the bedroom.”

In September 2022, Amazon announced that the robot can monitor pets and check your windows and doors, alerting you if any are left open. Moreover, users can steer the robot around the space in several ways using the app: they can tap the forward or back buttons on the screen to move Astro, or choose rooms for it to visit.

Astro costs around $1,500 and has the Alexa assistant on board. The smart device can play music, stream TV shows, answer questions, report the weather, tell jokes, and more. Because Astro is only 44 cm tall, it needs a way to change its perspective: its head has a periscope that carries a 12-megapixel camera and an additional 5-megapixel camera.

What’s Next?

Although there are current pitfalls, such as high cost and limited functionality, home robots are on the way to becoming ubiquitous domestic devices. Ongoing advances in AI strongly influence their development.

These machines will perform home tasks by learning on their own and interacting with human users. Progress in autonomous robots will produce intelligent home assistants that understand the individual needs of their users and, in turn, improve the human-robot relationship.