AI is revolutionizing the music industry through a wide range of applications. Music-generative AI is changing how we understand, create, and interact with music, and Large Language Models (LLMs) are at the forefront of this transformation. These models have moved beyond their traditional linguistic boundaries to influence music generation.

AI software for music spans a variety of capabilities and techniques. Different AI tools tackle challenges ranging from comprehending complex musical structures to composing melodies and lyrics.

Other facets of this dynamic field include:

- Music Theory Analysis

- Audio Signal Processing

- Mood and Emotion Detection

- Style Transfer

- Natural Language Processing (NLP)

- Machine Learning Algorithms

- Generative Models

- Sound Synthesis

- Interactive Music Systems

- Data Mining and Pattern Recognition

AI tools for music, like Microsoft’s Muzic, leverage LLMs to analyze, understand, and generate music. They dissect song elements such as rhythm, harmony, and lyrics, offering insights that were previously hard to obtain. By lowering the barrier to music creation, these models are reshaping the industry: seasoned artists and novices alike can now produce music at scale.

Microsoft’s Muzic: AI Music Understanding and Generation

We’ll explore the intersection of AI and music through the lens of Microsoft’s Muzic.

Muzic is a “research project on AI music that empowers music understanding and generation with deep learning and artificial intelligence.”

This pioneering project brings leading AI music tools together into a comprehensive framework for music understanding and generation. Microsoft Research Asia launched the project in 2019, and to date it has produced at least 14 research papers. Muzic’s source code is available on GitHub, and the project follows the Microsoft Open Source Code of Conduct.

Apple’s DAWs (digital audio workstations), GarageBand and Logic Pro, are widely used in music production. Because Microsoft has no workstation of its own, some view it as a laggard in the AI music space. Muzic is an ambitious step toward leapfrogging its competitors.

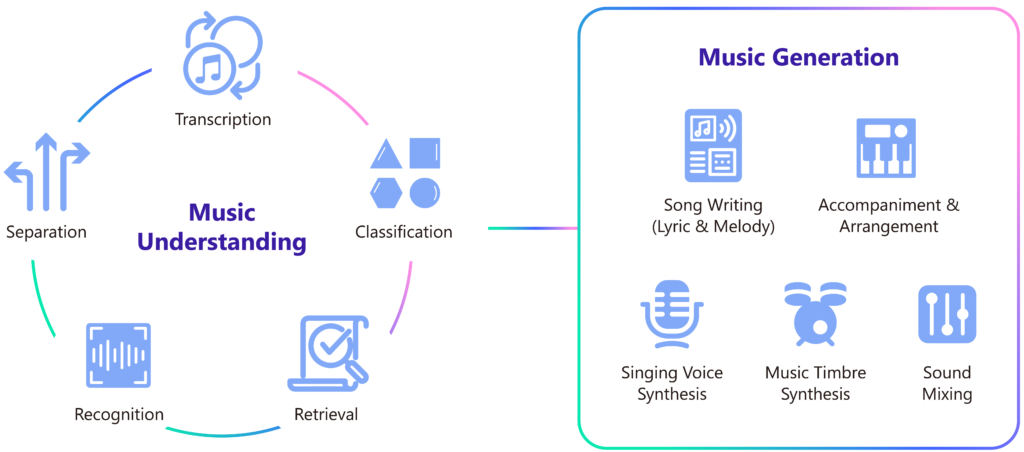

Muzic’s multifaceted approach aims to combine AI’s computational power with the artistry of music. It includes symbolic music understanding, automatic lyrics transcription, and various facets of music generation.

Muzic reflects a commitment to enhancing AI’s ability to comprehend music and contribute creatively to it. It serves as a platform for experimentation and innovation, pushing the boundaries of what AI can achieve in music.

The Core Components of AI Music

As shown above, Microsoft follows a two-pronged approach: music understanding and music generation. The two processes must work in harmony to create music that is intelligible and mimics human creativity.

Instead of reinventing the wheel, Microsoft leans on existing models and tools with mature capabilities in a particular area. Where gaps existed, researchers developed and proposed their own tools, such as PDAugment.

Curating and combining these tools into a single framework provides aspiring music producers with a comprehensive production pipeline.

AI Music Understanding

Music understanding involves interpreting and analyzing musical elements and structures. This component is crucial because it enables AI to comprehend music in a way closer to human understanding, which paves the way for more nuanced and sophisticated AI-assisted music creation.

- Symbolic Music Understanding (MusicBERT): MusicBERT is based on the BERT (Bidirectional Encoder Representations from Transformers) NLP model. Muzic uses it to interpret and process symbolic music (music represented as discrete notes and events, as in MIDI, rather than as audio) at a granular level. This is integral to Muzic’s ability to analyze complex compositions and recognize patterns and structures within music.

- Automatic Lyrics Transcription (PDAugment): Automatic lyrics transcription converts sung vocals into written text. PDAugment improves it through data augmentation, adjusting the pitch and duration of speech recordings so that speech data better resembles singing and can be used to train deep learning models. It also draws on techniques like Automatic Speech Recognition (ASR), melody modeling, language modeling, noise reduction, and signal enhancement to improve its output.
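
The masked-prediction idea behind BERT, which MusicBERT builds on, can be illustrated on symbolic music. The sketch below is a simplified, hypothetical example rather than MusicBERT’s code: it assumes each note is a `(bar, position, pitch, duration)` tuple, hides a random subset of notes behind a mask token, and keeps the originals as labels. MusicBERT’s actual note encoding and masking strategy are more elaborate; `mask_notes`, `MASK_TOKEN`, and the 480-ticks-per-beat resolution are illustrative assumptions.

```python
import random
from typing import List, Optional, Tuple, Union

# Hypothetical simplified note token: (bar, position_in_bar, midi_pitch, duration_ticks).
Note = Tuple[int, int, int, int]
MASK_TOKEN = "[MASK]"


def mask_notes(
    notes: List[Note], mask_rate: float = 0.15, seed: int = 0
) -> Tuple[List[Union[Note, str]], List[Optional[Note]]]:
    """Prepare one BERT-style masked-prediction example from a note sequence.

    A random subset of note tokens is replaced by MASK_TOKEN in the model input.
    The labels keep the original note at masked positions and None elsewhere,
    so a training loss would only be computed where something was hidden.
    (Simplified: BERT also sometimes keeps or randomly swaps a chosen token.)
    """
    if not notes:
        return [], []
    rng = random.Random(seed)
    n_mask = min(len(notes), max(1, round(len(notes) * mask_rate)))
    masked = set(rng.sample(range(len(notes)), n_mask))
    inputs = [MASK_TOKEN if i in masked else note for i, note in enumerate(notes)]
    labels = [note if i in masked else None for i, note in enumerate(notes)]
    return inputs, labels


# A short C-major phrase over two bars, assuming 480 ticks per beat.
melody = [
    (0, 0, 60, 480), (0, 480, 62, 480), (0, 960, 64, 480), (0, 1440, 65, 480),
    (1, 0, 67, 960), (1, 960, 65, 480), (1, 1440, 64, 480),
]
inputs, labels = mask_notes(melody, mask_rate=0.3, seed=1)
print(inputs)
```

A model pre-trained this way must infer hidden notes from their musical context, which is what lets it learn patterns and structure without labeled data.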

AI Music Generation

Music generation deals with creating new music using AI. It produces unique compositions and song elements based on the information provided by the music understanding process.

- Song Writing (SongMASS): SongMASS is an AI songwriting tool built on MASS (masked sequence-to-sequence pre-training). MASS masks a contiguous portion of the input sequence and trains the model to predict it, which pre-trains the model to understand and generate sequences effectively. SongMASS applies this to generate cohesive, creative song content, covering both lyric-to-melody and melody-to-lyric generation.

- Lyric Generation (DeepRapper): DeepRapper is an AI-based lyric generation tool focused on hip-hop rap lyrics. It uses NLP and machine learning techniques to produce rhythmically and thematically coherent verses; given a single-line text prompt, it generates entire verses. What sets it apart from its predecessors is that it models rhythm, predicting beats aligned with the lyrics, in addition to rhyme.

- Lyric-to-Melody Generation: This is a class of tools that use AI to convert written lyrics into melodies. They tackle one of the most complex tasks in automatic songwriting: turning written words into singable tunes.

- TeleMelody: TeleMelody is a two-stage lyric-to-melody generation system that utilizes music templates for better control and efficiency in automatic songwriting. It addresses issues in traditional end-to-end models, like data scarcity and lack of melody control, by separating lyric-to-template and template-to-melody processes. This approach enables high-quality, controllable melody generation with minimal lyric-melody paired data.

- Relationships Between Lyrics and Melodies (ReLyMe): ReLyMe is another tool focused on lyric-to-melody generation. It aims to generate melodies that are both technically sound and emotionally resonant with the lyrics, relying on neural network architectures to account for pitch, tone, style, rhythm, and other important musical elements.

- Re-Creation of Creations (ROC): ROC takes existing pieces of music and reinterprets or modifies them, creating new versions while retaining the essence of the original. It operates in two stages. First, it generates and stores a large library of music fragments indexed by key features such as chords and tonality. Second, it re-creates melodies by aligning these fragments with lyrical features, which removes the need for paired lyric-melody data. This adds a layer of creative reinterpretation, similar to how text-to-text or image-to-image generators create unique variations of the same original content.

- Accompaniment Generation (PopMAG): PopMAG focuses on creating harmonious backdrops for songs, using AI to compose accompaniment that complements the main melody. It combines sequence-to-sequence and generative AI models to do this. In the Muzic ecosystem, PopMAG plays a crucial role in adding fullness and depth to AI-generated music. The online demo illustrates how it takes a basic tune and turns it into a full-sounding composition.

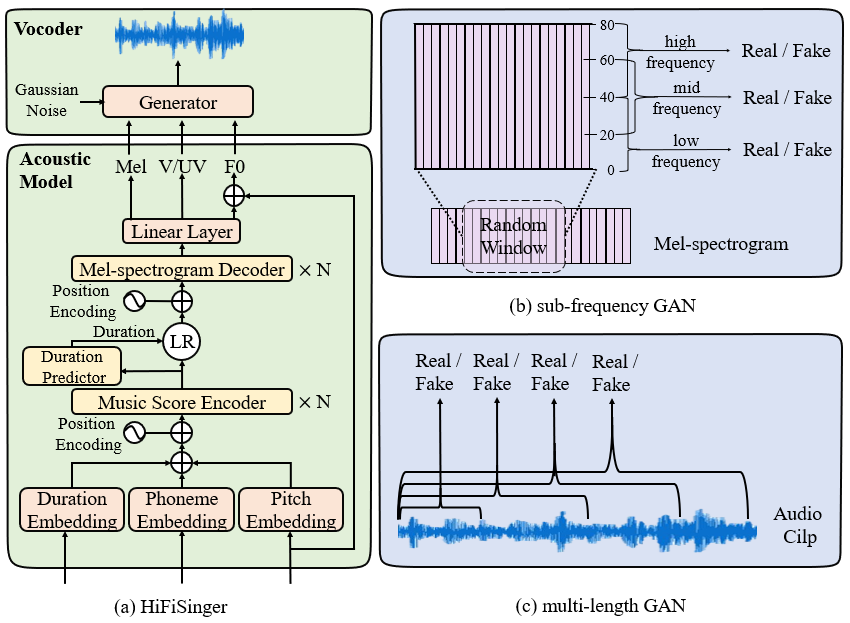

- Singing Voice Synthesis (HiFiSinger): HiFiSinger is a high-fidelity Singing Voice Synthesis (SVS) system designed for the higher sampling rate (48 kHz) needed for expressive, emotive singing voices. It uses a FastSpeech-based acoustic model and a Parallel WaveGAN vocoder, enhanced with multi-scale adversarial training that includes novel sub-frequency and multi-length GANs. These additions let HiFiSinger handle the wider frequency band and longer waveforms of high-sampling-rate singing, significantly improving voice quality.
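
The MASS pre-training objective described for SongMASS can be sketched as a data-preparation step. This is a simplified, hypothetical illustration, not SongMASS’s code: one contiguous span (assumed here to be about half the sequence) is masked on the encoder side, and the decoder is trained to regenerate exactly that span. The function name `mass_example`, the `[BOS]` token, and the 50% span ratio are assumptions, and the original MASS decoder input is slightly richer than the shifted span used here.

```python
import random
from typing import List, Tuple

MASK = "[MASK]"
BOS = "[BOS]"  # hypothetical start token fed to the decoder


def mass_example(
    tokens: List[str], span_ratio: float = 0.5, seed: int = 0
) -> Tuple[List[str], List[str], List[str], int]:
    """Build one MASS-style pre-training example.

    A single contiguous span (about span_ratio of the sequence) is masked on the
    encoder side; the decoder must regenerate exactly that span. Returns
    (encoder_input, decoder_input, target, span_start).
    """
    if not tokens:
        raise ValueError("need at least one token")
    n = len(tokens)
    span_len = min(n, max(1, int(n * span_ratio)))
    rng = random.Random(seed)
    start = rng.randint(0, n - span_len)  # randint is inclusive on both ends
    end = start + span_len
    encoder_input = tokens[:start] + [MASK] * span_len + tokens[end:]
    target = tokens[start:end]
    # Teacher forcing: the decoder sees the target shifted right by one position.
    decoder_input = [BOS] + target[:-1]
    return encoder_input, decoder_input, target, start


lyric = "when the night falls i will sing for you".split()
enc, dec, tgt, start = mass_example(lyric, seed=3)
print(enc)
print(tgt)
```

Masking a contiguous span, rather than scattered tokens, forces the encoder to understand the unmasked context and the decoder to generate fluent sequences, which suits generation tasks such as songwriting.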

MuseCoco – Microsoft’s Text-to-MIDI AI Music Generator

As part of its continued innovation, Microsoft’s Muzic team released MuseCoco, a text-to-MIDI generator, in May 2023.

A MIDI file is like a digital music sheet: it tells electronic instruments which notes to play and how to play them. It doesn’t produce sound on its own; an instrument or software must turn those instructions into actual audio.
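
To show what these "instructions" look like, the sketch below builds the raw MIDI channel messages for a single note using only the Python standard library: a Note On message (status byte 0x90 combined with the channel number, followed by the note number and velocity) and a matching Note Off (status byte 0x80). The helper names are illustrative. A complete .mid file additionally wraps such messages in a header chunk and track chunks with delta times, which this sketch omits.

```python
def _check_range(channel: int, note: int, velocity: int) -> None:
    """MIDI channels are 0-15 on the wire; note and velocity are 7-bit (0-127)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Note On: status byte 0x90 combined with the channel, then note and velocity."""
    _check_range(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Note Off: status byte 0x80 combined with the channel, then note and release velocity."""
    _check_range(channel, note, velocity)
    return bytes([0x80 | channel, note, velocity])


# Middle C (MIDI note 60) on the first channel, played moderately loud.
print(note_on(0, 60, 100).hex())  # prints 903c64
print(note_off(0, 60).hex())      # prints 803c00
```

Nothing in these three bytes describes the sound itself; the receiving instrument or software decides the timbre, which is why MIDI output still has to be rendered before it can be heard.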

The MuseCoco project is available on GitHub, but there’s no consumer application or video demo yet. Until recently, Microsoft was seen as lagging behind competitors like Google’s MusicLM and Meta’s MusicGen, both of which generate audio rather than MIDI. However, MuseCoco’s basic online demo shows promise and isn’t far off in quality.

The tool stands out for its two-stage framework. First, it interprets a text description and extracts musical attributes from it. Second, it uses these attributes to generate symbolic music. This approach, reflected in the name MuseCoco (short for “Music Composition Copilot”), makes music creation considerably more efficient.
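
The two-stage split can be illustrated with a toy pipeline. This is a hypothetical sketch, not MuseCoco’s code: stage 1 uses simple keyword rules where MuseCoco uses a learned text-to-attribute model, and stage 2 walks up a scale where MuseCoco uses a large trained attribute-to-music model. The `Attributes` fields, keyword lists, and tempo values are all assumptions chosen for illustration. What the sketch does show is the key design point: the two stages communicate only through a structured attribute set.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Attributes:
    """Hypothetical, much-reduced attribute set (MuseCoco defines its own)."""
    tempo_bpm: int = 100
    minor: bool = False
    num_bars: int = 2
    tonic: int = 60  # MIDI pitch of the key's tonic; 60 is middle C
    instruments: List[str] = field(default_factory=lambda: ["piano"])


def text_to_attributes(text: str) -> Attributes:
    """Stage 1 stand-in: keyword rules where MuseCoco uses a learned model."""
    t = text.lower()
    attrs = Attributes()
    if "slow" in t:
        attrs.tempo_bpm = 70
    elif "fast" in t:
        attrs.tempo_bpm = 140
    attrs.minor = "minor" in t or "sad" in t
    found = [name for name in ("piano", "guitar", "violin") if name in t]
    attrs.instruments = found or ["piano"]
    return attrs


MAJOR = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets from the tonic
MINOR = [0, 2, 3, 5, 7, 8, 10]  # natural minor


def attributes_to_notes(attrs: Attributes) -> List[Tuple[float, int, float]]:
    """Stage 2 stand-in: emit (start_beat, pitch, duration_beats) quarter notes in 4/4,
    walking up the scale. A real system would use a trained generator here, and the
    tempo and instruments would be written into the MIDI file as metadata."""
    scale = MINOR if attrs.minor else MAJOR
    return [
        (float(beat), attrs.tonic + scale[beat % len(scale)], 1.0)
        for beat in range(attrs.num_bars * 4)
    ]


attrs = text_to_attributes("A slow, sad piano piece")
print(attrs)
print(attributes_to_notes(attrs))
```

Because the interface is an explicit attribute set, each stage can be trained and improved separately, and a user can inspect or edit the attributes before any music is generated.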

MuseCoco’s efficiency comes from self-supervised training. Attributes are extracted directly from music sequences, so no manual labels are needed, and ChatGPT is then used to synthesize and refine text descriptions that follow predefined attribute templates. Because generation is conditioned on explicit attributes, users get precise control over the generated music.
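
The data-construction idea can be sketched in a few lines: attribute values are read directly off a note sequence, then slotted into predefined templates to produce a matching text description. This is a minimal, hypothetical sketch under stated assumptions. The attribute names, tempo thresholds, the one-octave "narrow range" rule, and the template sentences are illustrative, and MuseCoco’s ChatGPT refinement step is omitted entirely.

```python
import math
from typing import Dict, List, Tuple

Note = Tuple[float, int, float]  # (start_beat, midi_pitch, duration_beats)

# Hypothetical predefined templates, one sentence per attribute.
TEMPLATES = {
    "tempo": "The tempo is {}.",
    "bars": "The piece is {} bars long.",
    "range": "The melody has a {} pitch range.",
}


def extract_attributes(notes: List[Note], tempo_bpm: float, beats_per_bar: int = 4) -> Dict[str, str]:
    """Read attribute values straight off the music, so no human labels are needed."""
    end_beat = max(start + dur for start, _, dur in notes)
    if tempo_bpm < 80:
        tempo = "slow"
    elif tempo_bpm < 120:
        tempo = "moderate"
    else:
        tempo = "fast"
    pitches = [pitch for _, pitch, _ in notes]
    span = max(pitches) - min(pitches)
    return {
        "tempo": tempo,
        "bars": str(math.ceil(end_beat / beats_per_bar)),
        "range": "narrow" if span <= 12 else "wide",  # within one octave counts as narrow
    }


def attributes_to_text(attrs: Dict[str, str]) -> str:
    """Fill the templates to get a description paired with the same attributes.
    MuseCoco additionally refines such text with ChatGPT; that step is omitted."""
    return " ".join(TEMPLATES[key].format(value) for key, value in attrs.items())


notes = [(0.0, 60, 1.0), (1.0, 64, 1.0), (2.0, 67, 2.0), (4.0, 72, 4.0)]
attrs = extract_attributes(notes, tempo_bpm=90)
print(attributes_to_text(attrs))
# prints: The tempo is moderate. The piece is 2 bars long. The melody has a narrow pitch range.
```

Each run yields an (attributes, music) pair and an (attributes, text) pair from unlabeled music alone, which is the kind of supervision a two-stage text-to-attribute and attribute-to-music system needs.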

The tool uses a large-scale model with 1.2 billion parameters, which contributes to strong controllability and musicality. Because MuseCoco focuses on symbolic music, it does not handle timbre information. In practice, tools such as MuseScore can import the generated .mid files and export them with richer timbre.

MuseCoco is particularly useful for people who want to create music but may lack the technical skills: you describe what you want, and MuseCoco creates it. Because the output is symbolic, the generated music is also easy to edit and refine further.

Advancements of AI in Music

Advancements in AI are transforming the music industry. AI enables personalized music creation, automates composition, and facilitates music production. AI algorithms can also analyze trends, predict hits, and tailor music to individual tastes. Music generation tools are likely to change how music is marketed and consumed.

Tools like MuseCoco democratize music creation, allowing anyone to compose without formal training. AI is also enhancing music recommendations and refining the user experience on streaming platforms.

These advancements raise questions about copyright, authenticity, and the role of human content creators. Together, they signal a paradigm shift in the industry’s future landscape.

As with all generative AI tools, AI music walks a fine line between fair use and copyright infringement. While you can use it to create original works, questions arise over how “original” its creations really are, especially when the training data includes real, copyrighted music. Even text-to-music tools can generate copies or derivatives of others’ work.

AI music also raises other legal and ethical considerations. For example, there is a palpable fear of job displacement among musicians, and there are still no clear copyright laws governing AI-generated songs.

Copyright law still needs to catch up with AI’s capabilities, which creates grey areas in ownership and rights over AI-created works. Within the music world, there is also debate over the value of AI-generated music compared to music made by humans.

Despite these concerns, AI in music is a significant step forward. It can foster creativity and expand musical possibilities, working alongside traditional composition as a digital collaborator.

Applied AI for Vision

At viso.ai, we work in computer vision, a related discipline that involves real-time AI video analytics. Viso enables companies to build and scale computer vision applications with one end-to-end platform, Viso Suite, which provides intuitive no-code and low-code tools.

Organizations within the entertainment industry can leverage AI vision for a wide range of applications, such as enhancing event security, large crowd counting and analytics, waiting time and queue length detection, and more.