AI tools have evolved, and today they can generate entirely new text, code, images, and videos. Within a short period, ChatGPT has emerged as a leading example of generative artificial intelligence.

The results are quite convincing: it is often hard to tell whether content was created by a human or a machine. Generative AI is especially useful in three major areas: text, image, and video generation.

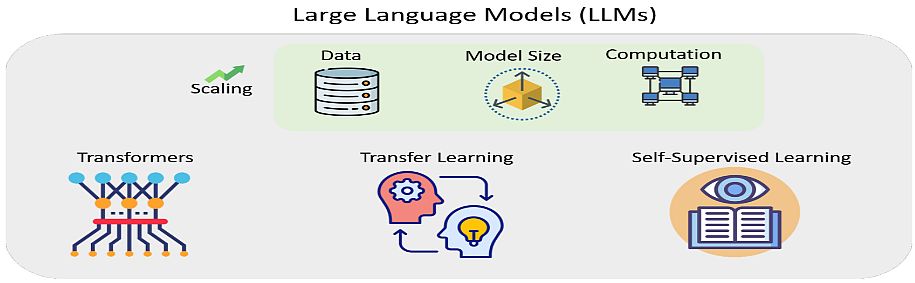

Large Language Models

Text generation as a tool is already being applied in journalism (news production), education (production and misuse of materials), law (drafting contracts), medicine (diagnostics), science (search and generation of scientific papers), etc.

In 2018, OpenAI researchers and engineers published an original paper on generative large language models. They pre-trained the models on a large and diverse corpus of text, in a process they called Generative Pre-Training (GPT).

The authors described how GPT improves language understanding performance in NLP. They applied generative pre-training of a language model on a diverse corpus of unlabeled text, followed by discriminative fine-tuning on each specific task. Because most of what the model learns comes from unlabeled text, this greatly reduces the need for human supervision and for time-intensive hand-labeling.
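For readers who want the underlying math, the original GPT paper (as we read it) writes the two stages as log-likelihood objectives to be maximized: $L_1$ over an unlabeled token corpus $\mathcal{U} = \{u_1, \ldots\}$ with context window $k$ and model parameters $\Theta$; $L_2$ over a labeled dataset $\mathcal{C}$ of input token sequences $x^1, \ldots, x^m$ with labels $y$; and $L_3$, which optionally keeps language modeling as an auxiliary objective during fine-tuning, weighted by $\lambda$.

```latex
\begin{aligned}
L_1(\mathcal{U}) &= \sum_i \log P(u_i \mid u_{i-k}, \ldots, u_{i-1}; \Theta) \\
L_2(\mathcal{C}) &= \sum_{(x, y)} \log P(y \mid x^1, \ldots, x^m) \\
L_3(\mathcal{C}) &= L_2(\mathcal{C}) + \lambda \, L_1(\mathcal{C})
\end{aligned}
```

Only $L_2$ needs labels, which is why the expensive hand-labeled data can be kept small.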

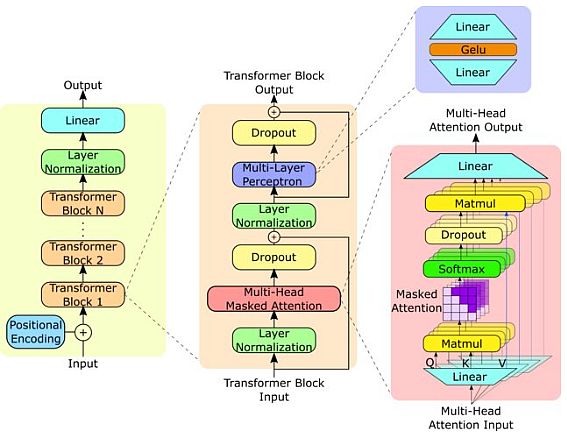

GPT models use a transformer-based deep neural network architecture. They can be applied to various Natural Language Processing (NLP) tasks, such as question answering, text summarization, and sentiment analysis, with little or no task-specific supervised training.

Previous GPT Models

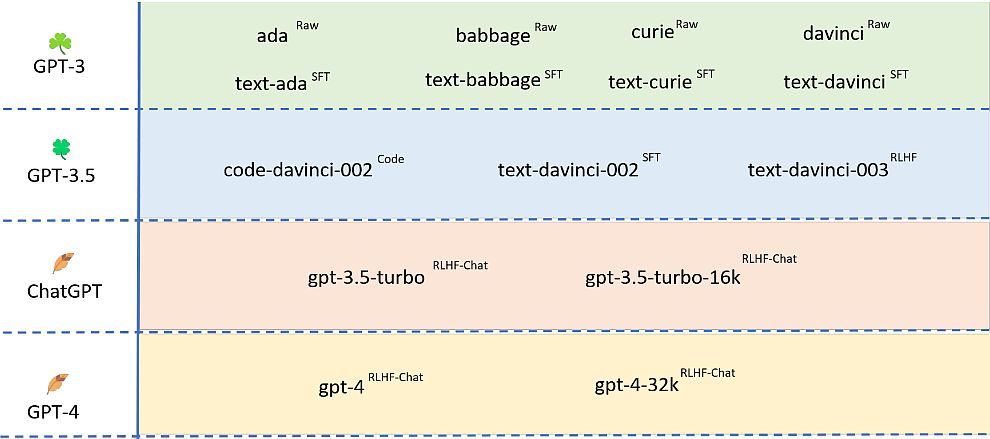

The GPT-1 model was introduced in June 2018 as a method for language understanding based on generative pre-training. Building on its success, OpenAI scaled up the approach and released GPT-2 in February 2019.

The researchers trained GPT-2 to predict the next word on 40 GB of text. Departing from common practice, OpenAI initially withheld the full model over concerns about misuse and released only smaller versions at first. In May 2020, they introduced GPT-3, one of the most advanced language models of its time, with 175 billion parameters.

GPT-2 Model

The GPT-2 paper describes language models as unsupervised multitask learners. Compared with GPT-1, GPT-2 used a larger dataset and many more parameters, producing a stronger language model. The basic language-modeling objective is framed as estimating P(output | input); because a general system should perform many different tasks, GPT-2 conditions on the task as well, P(output | input, task).
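The GPT-2 paper makes this concrete: a text $x$ made of symbols $s_1, \ldots, s_n$ is modeled by factorizing its joint probability into a product of next-symbol conditionals, and a general-purpose system conditions on the task as well as the input. Since the task can itself be written as text (an instruction or examples in the prompt), the same next-word model covers both cases.

```latex
\begin{aligned}
p(x) &= \prod_{i=1}^{n} p(s_i \mid s_1, \ldots, s_{i-1}) \\
\text{single task:} &\quad p(\text{output} \mid \text{input}) \\
\text{multitask:} &\quad p(\text{output} \mid \text{input}, \text{task})
\end{aligned}
```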

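GPT-2 is a large transformer-based language model trained to predict the next word in a sentence. The original Transformer used an encoder-decoder design to capture dependencies between input and output; GPT models keep only the decoder stack, whose masked self-attention lets each position look only at earlier words. Transformers are now the dominant approach to text generation.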
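GPT-2 predicts each next word from only the words before it, and in a transformer this is enforced by masking the self-attention. Below is a minimal pure-Python sketch of single-head causal (masked) self-attention. It is a simplification, not GPT-2's actual implementation: it uses the input vectors directly as queries, keys, and values (a real layer applies learned projections first), and the function names are our own.

```python
import math

def softmax(scores):
    """Numerically stable softmax; entries equal to -inf receive weight 0."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(x):
    """Single-head scaled dot-product attention with a causal mask.

    x is a list of token vectors (lists of floats). For simplicity the inputs
    serve as queries, keys and values; real GPT layers first apply learned
    linear projections.
    """
    n, d = len(x), len(x[0])
    out = []
    for i in range(n):
        scores = []
        for j in range(n):
            if j > i:
                # Mask: position i is not allowed to see future tokens.
                scores.append(float("-inf"))
            else:
                dot = sum(a * b for a, b in zip(x[i], x[j]))
                scores.append(dot / math.sqrt(d))
        weights = softmax(scores)
        # Output for position i: attention-weighted average of the value vectors.
        out.append([sum(w * x[j][k] for j, w in enumerate(weights)) for k in range(d)])
    return out

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2-dimensional token vectors
for row in causal_self_attention(tokens):
    print([round(v, 3) for v in row])
```

Because of the mask, changing a later token never changes the outputs at earlier positions, which is what allows the model to be trained to predict every next word from only its left context.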

You don’t need to train GPT-2 yourself: it is already pre-trained. Unlike BERT, a masked language model designed mainly for understanding tasks, GPT-2 can generate text. Just give it the beginning of a phrase, and it will complete the text word by word.
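Word-by-word completion is autoregressive decoding: predict a distribution over the next word, pick one, append it, and repeat. The sketch below substitutes a hypothetical toy bigram table for GPT-2 and uses greedy decoding (always picking the most likely word). GPT-2 itself conditions on the whole prefix, and practical generators often sample from the distribution instead.

```python
# Hypothetical toy "language model": next-word probabilities given the last word.
TOY_NEXT_WORD_PROBS = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"on": 0.8, "still": 0.2},
    "on": {"a": 0.6, "the": 0.4},
    "a": {"mat": 0.9, "cat": 0.1},
    "mat": {"<eos>": 1.0},
}

def next_word_distribution(prefix):
    """Stand-in for a language model: returns P(next word | prefix).

    This toy only looks at the last word; GPT-2 uses the entire prefix.
    Unknown words end the text.
    """
    return TOY_NEXT_WORD_PROBS.get(prefix[-1], {"<eos>": 1.0})

def generate_greedy(prompt, max_new_words=20):
    """Extend the prompt one word at a time, always taking the most likely word."""
    words = prompt.split()
    for _ in range(max_new_words):
        probs = next_word_distribution(words)
        next_word = max(probs, key=probs.get)
        if next_word == "<eos>":  # end-of-text marker stops generation
            break
        words.append(next_word)
    return " ".join(words)

print(generate_greedy("the"))
```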

At first, recurrent neural networks (RNNs), in particular LSTMs, were mainstream in this area. But after researchers at Google introduced the Transformer architecture in 2017, transformer-based models such as GPT-2 gradually came to dominate language generation tasks.

GPT-2 Model Features

To improve performance, in February 2019 OpenAI scaled up GPT by more than 10 times. They trained it on an even larger volume of text: 8 million web pages (a total of 40 GB of text).

The resulting GPT-2 network was one of the largest language models of its time, with 1.5 billion parameters. Other features of GPT-2 include:

- GPT-2 had 48 layers and used 1600-dimensional vectors for word embedding.

- Large vocabulary of 50,257 tokens.

- Larger batch size of 512 and a larger context window of 1024 tokens.

- Researchers moved layer normalization to the input of each sub-block and added an extra layer normalization after the final self-attention block.
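As a rough arithmetic check, the figures in the list above reproduce the 1.5 billion parameter count. This sketch assumes the standard GPT-2 block layout (a feed-forward layer four times wider than the embedding, and an output layer that shares weights with the token embedding), which the article does not spell out, and it ignores biases and LayerNorm weights. The function name is hypothetical.

```python
def approx_gpt2_params(n_layer, d_model, vocab_size, n_ctx):
    """Approximate parameter count of a GPT-2-style decoder.

    Per layer: attention projections Q, K, V and output (4 * d^2) plus a
    feed-forward block with hidden size 4d (8 * d^2), i.e. 12 * d^2.
    Adds token and position embeddings; ignores biases and LayerNorm weights,
    and assumes the output layer reuses the token embedding matrix.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model + n_ctx * d_model
    return n_layer * per_layer + embeddings

n = approx_gpt2_params(n_layer=48, d_model=1600, vocab_size=50_257, n_ctx=1024)
print(f"{n:,}")  # 1,556,609,600
```

The result of roughly 1.56 billion is consistent with the quoted 1.5 billion, and about 95% of it sits in the 48 transformer blocks rather than in the embeddings.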

As a result, GPT-2 was able to generate entire pages of coherent text. It also kept track of character names over the course of a story, along with quotes, references to related events, and so on.

Generating coherent text of this quality is impressive by itself, but there is something more interesting here: without any task-specific training, GPT-2 achieved results at or near the state of the art on many language modeling benchmarks.

GPT-3

OpenAI published GPT-3 in May 2020 and opened access to it through a private API beta shortly afterward. All three GPT generations use transformer neural networks trained on large amounts of raw text.

At the heart of the Transformer is the attention mechanism, which weighs how relevant each word in the context is to the current position. A final softmax layer then converts the model's output into a probability for each possible next word. The algorithm learns contextual relationships between words in its training texts and then uses them to generate new text.
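Turning a model's raw output scores into a probability for each possible next word is typically done with a softmax. A minimal sketch with hypothetical scores (logits) for three candidate words; a real model scores every token in its vocabulary.

```python
import math

def next_word_probabilities(logits):
    """Convert raw output scores (logits) into a probability distribution over words."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {word: math.exp(score - m) for word, score in logits.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical logits a model might output after the context "The cat sat on the"
logits = {"mat": 3.0, "sofa": 2.0, "moon": 0.5}
probs = next_word_probabilities(logits)
for word, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {p:.3f}")
```

Higher scores always map to higher probabilities, and the probabilities always sum to 1, so the output can be sampled from or searched over directly.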

- GPT-3 uses essentially the same architecture as GPT-2. The main difference is that the number of parameters was increased to 175 billion. OpenAI trained GPT-3 on 570 gigabytes of text, or 1.5 trillion words.

- The training data included a filtered version of Common Crawl, an expanded version of the WebText dataset (WebText2), two book datasets, and English Wikipedia.

- GPT-3 can create texts of different forms, styles, and purposes: articles and stories (including ones imitating a particular author's style), songs and poems, press releases, and technical manuals.

- GPT-3 has also been tested in practice: for example, it wrote an opinion essay published by the UK newspaper The Guardian. The model can also solve anagrams and simple arithmetic problems, and generate guitar tablature and computer code.
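GPT-3 handles tasks like anagrams and arithmetic through in-context (few-shot) learning: the GPT-3 paper places a task description and a few solved examples in the prompt, without updating the model's weights, and lets the model continue the text. The helper below is a hypothetical sketch of such a prompt; the Q/A layout is our assumption, not OpenAI's exact format.

```python
def build_few_shot_prompt(task_description, examples, query):
    """Assemble a few-shot prompt: task description, solved examples, then the query.

    The model is expected to continue the text after the final "A:".
    """
    lines = [task_description, ""]
    for question, answer in examples:
        lines.append(f"Q: {question}")
        lines.append(f"A: {answer}")
    lines.append(f"Q: {query}")
    lines.append("A:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Unscramble the letters to form an English word.",
    [("tac", "cat"), ("odg", "dog")],
    "sueom",
)
print(prompt)
```

Swapping in arithmetic examples such as ("2 + 3", "5") turns the same helper into an arithmetic prompt; nothing about the model changes between tasks.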

ChatGPT – The Latest GPT-4 Model

OpenAI released GPT-4 on March 14, 2023, making it available to ChatGPT Plus subscribers. ChatGPT itself had launched in November 2022 and quickly sparked an AI boom.

GPT-4 New Features

Compared with GPT-3.5, GPT-4 accepts both images and text as input, whereas previous versions accepted only text.

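The new version also has a much larger context window: up to 32,768 tokens in the largest GPT-4 variant, compared with 4,096 tokens for GPT-3.5. This is a major improvement, as it enables longer, more complex, and more specialized texts and conversations. GPT-4 is also reported to have been trained on more data than GPT-3; some sources put the figure at up to 45 TB, although OpenAI has not published the size of its training set.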
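A context window limits the prompt and the reply together, so a chat application has to drop (or summarize) older turns once a conversation outgrows it. The sketch below shows one simple, hypothetical strategy: keep the newest messages that fit. It assumes token counts were already computed with the model's tokenizer, and the numbers are made up for illustration.

```python
def trim_history(message_token_counts, context_window, reserved_for_reply):
    """Keep the most recent messages whose token counts fit in the context window.

    message_token_counts: token count of each message, oldest first.
    Returns the indices (oldest first) of the messages that can be sent.
    """
    budget = context_window - reserved_for_reply  # leave room for the model's answer
    kept = []
    used = 0
    for i in range(len(message_token_counts) - 1, -1, -1):  # newest to oldest
        if used + message_token_counts[i] > budget:
            break
        used += message_token_counts[i]
        kept.append(i)
    return sorted(kept)

history = [1500, 900, 1200, 700]  # hypothetical token counts, oldest first
print(trim_history(history, context_window=4096, reserved_for_reply=1000))
print(trim_history(history, context_window=32_768, reserved_for_reply=1000))
```

With a 4,096-token window the oldest message no longer fits, while a 32,768-token window holds the whole history.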

OpenAI trained the model on a large amount of data from multiple domains and sources, using both publicly available data (such as internet data) and data licensed from third-party providers. The objective is to predict the next token in a document, given a sequence of previous tokens and images.

- In addition, GPT-4 has stronger problem-solving capabilities and generates text that better imitates the style and tone of the context.

- Newer knowledge cutoff: the original GPT-4's training data ended in September 2021, while the updated GPT-4 Turbo model includes information up to April 2023, providing more current context for queries.

- Better instruction following: the model performs better than previous models on tasks that require carefully following instructions, such as generating output in specific formats.

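- Multiple tools in one chat: the updated GPT-4 chatbot picks the appropriate tool (such as browsing, image generation, or data analysis) automatically, so users no longer have to choose one from a drop-down menu.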

ChatGPT Performance

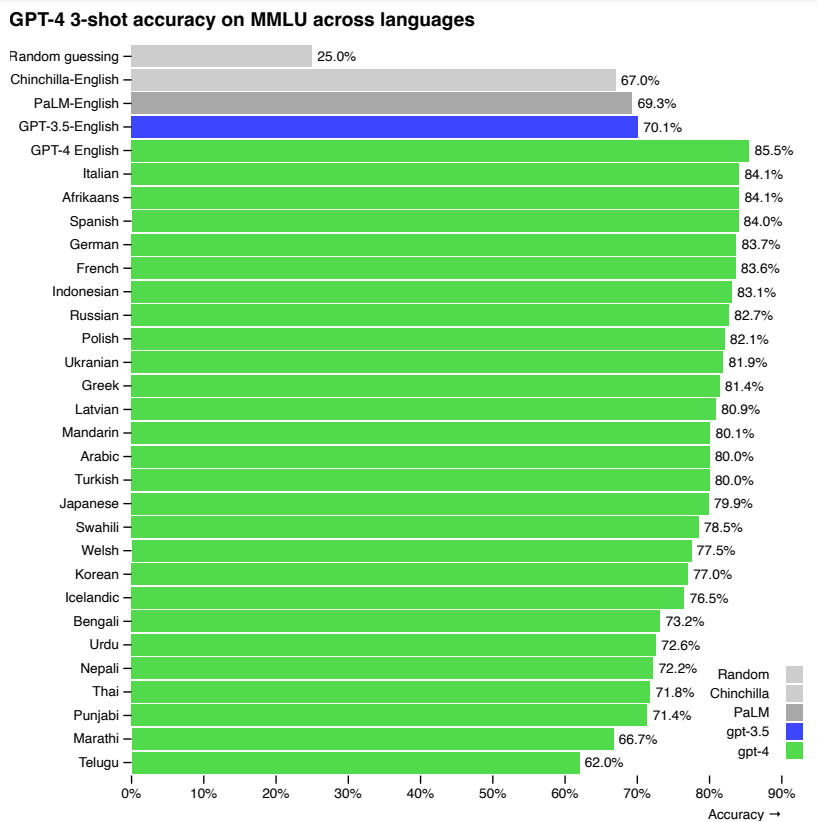

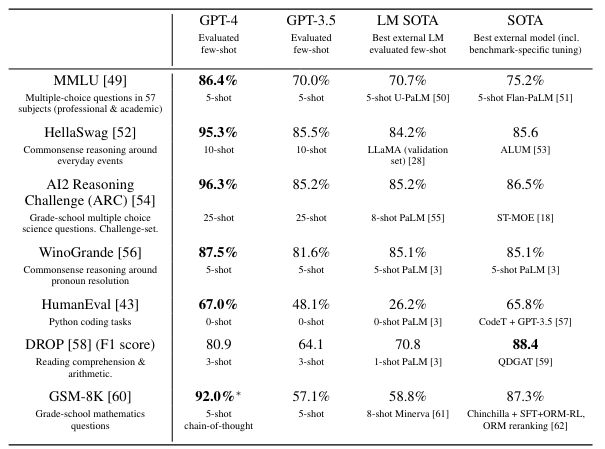

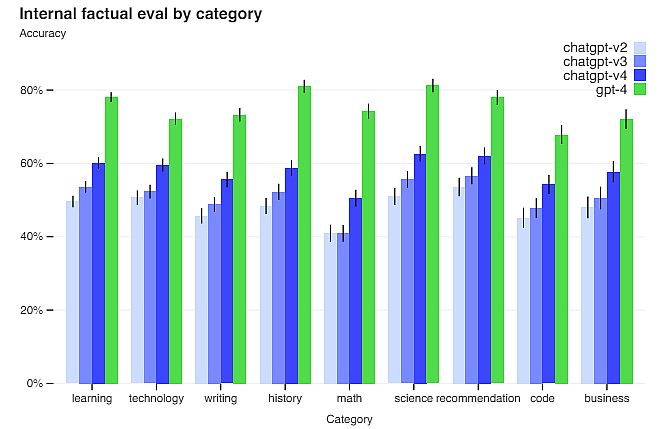

GPT-4 (the model behind ChatGPT) exhibits human-level performance on most of the professional and academic exams OpenAI tested. Notably, it passes a simulated version of the Uniform Bar Examination with a score in the top 10% of test takers.

The model’s capabilities on these exams appear to stem primarily from the pre-training process and are not significantly affected by reinforcement learning from human feedback (RLHF). On multiple-choice questions, the base GPT-4 model and the RLHF model perform equally well on average.

On a dataset of 5,214 prompts submitted to ChatGPT and the OpenAI API, human raters preferred the responses generated by GPT-4 over those generated by GPT-3.5 on 70.2% of prompts.

GPT-4 accepts prompts consisting of both images and text, which lets the user specify any vision or language task. The model generates text outputs given inputs consisting of arbitrarily interlaced text and images. Across a range of domains, including documents with text and photographs, diagrams, or screenshots, GPT-4 shows capabilities similar to those it shows on text-only inputs.
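In OpenAI's API, interleaved text and image input is expressed as a list of content parts inside a single chat message. The sketch below only builds such a request body, modeled on the Chat Completions message format as we understand it; the model name and image URL are placeholders, the helper name is hypothetical, and the current API reference should be checked before sending anything.

```python
import json

def build_multimodal_message(parts):
    """Build one user message whose content interleaves text and image parts.

    parts: list of ("text", str) or ("image", url) tuples, in display order.
    """
    content = []
    for kind, value in parts:
        if kind == "text":
            content.append({"type": "text", "text": value})
        elif kind == "image":
            content.append({"type": "image_url", "image_url": {"url": value}})
        else:
            raise ValueError(f"unknown part type: {kind}")
    return {"role": "user", "content": content}

request_body = {
    "model": "gpt-4-turbo",  # placeholder: use a vision-capable model available to you
    "messages": [
        build_multimodal_message([
            ("text", "What is unusual about this image?"),
            ("image", "https://example.com/photo.jpg"),  # hypothetical URL
            ("text", "Answer in one sentence."),
        ])
    ],
}
print(json.dumps(request_body, indent=2))
```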

How to Use ChatGPT 4

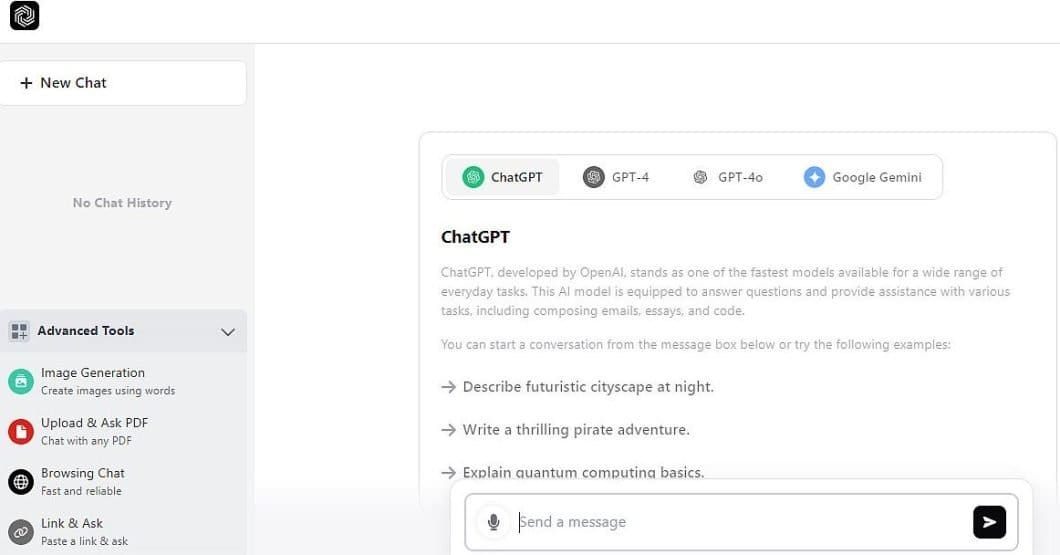

You can access ChatGPT through OpenAI's website, and its interface is simple and clear. Basic usage is free, while the Plus plan costs $20 per month. There are also Team and Enterprise plans. For all of them, you need to create an account.

Here are the main ChatGPT 4 options, using the screenshot above as an example:

- Chat bar and sidebar: The “Send a message” chat bar sits at the bottom of the screen. Within a conversation, ChatGPT remembers your earlier messages and responds with that context; when you register and log in, your past conversations are also saved.

- Account (if registered): Clicking on your name in the upper right corner gives you access to your account information, settings, help, ChatGPT customization, and the option to log out.

- Chat history: In Advanced tools (left sidebar), you can access your past GPT-4 conversations. You can also share chats with others, turn off chat history, delete individual chats, or delete your entire chat history.

- Your Prompts: The questions or prompts you send the AI chatbot appear at the bottom of the chat window, with your account details on the top right.

- ChatGPT’s responses: ChatGPT responds to your queries and the responses appear on the main screen. Also, you can copy the text to your clipboard to paste it elsewhere and provide feedback on whether the response was accurate.

Limitations of ChatGPT

Despite its capabilities, GPT-4 has similar limitations to earlier GPT models. Most importantly, it is still not fully reliable (it “hallucinates” facts and makes reasoning errors). Use ChatGPT outputs with care, particularly in high-stakes contexts, with safeguards (such as human review) that match the needs of the specific application.

GPT-4 significantly reduces hallucinations relative to previous GPT-3.5 models (which have themselves been improving with continued iteration). On OpenAI's internal, adversarially designed factuality evaluations, GPT-4 scores 19 percentage points higher than the latest GPT-3.5.

The original GPT-4 model generally lacks knowledge of events that occurred after the vast majority of its pre-training data cuts off (September 2021), and it does not learn from its experience. It can sometimes make simple reasoning errors that seem at odds with its competence across so many domains.

Also, GPT-4 can be confidently wrong in its predictions, not taking care to double-check its work when it is likely to make a mistake. Its outputs also contain various biases that OpenAI is still working to characterize and manage.

OpenAI aims to give GPT-4 reasonable default behaviors that reflect a wide swath of users’ values, to allow the system to be customized within some broad bounds, and to get public input on what those bounds should be.

What’s Next?

In its GPT-4 version, ChatGPT is built on a large multimodal model that accepts image and text inputs and produces text outputs. It can be used in a wide range of applications, such as dialogue systems, text summarization, and machine translation, and it is likely to remain the subject of substantial interest and progress in the coming years.
