Human brain activity has spurred countless investigations into the fundamental principles that govern our thoughts, emotions, and actions. At the heart of this exploration lies the concept of neuron activation. This process is fundamental to the transmission of information throughout our extensive neural network.

Artificial intelligence and machine learning often mimic this process. In an artificial neural network, signals pass from one layer of units to the next, loosely resembling how information travels along neural pathways between brain regions.

In this article, we’ll discuss the role that neuron activation plays in modern technology:

- What neuron activation is

- How the biological concept in the human brain compares with its technical counterpart

- Functions and real-world applications of neuron activation

- Current research trends and challenges

Neuron Activation: Neuronal Firing in the Brain

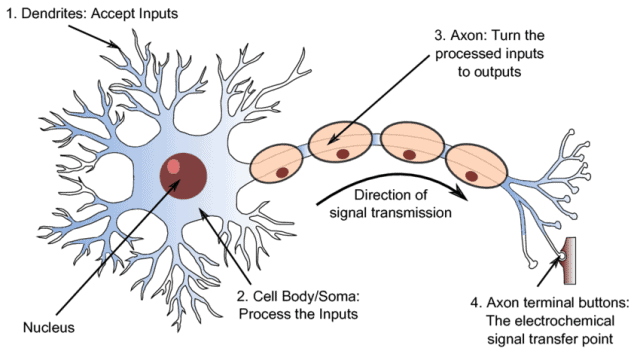

The human brain has roughly 86 billion neurons (a figure often rounded up to 100 billion), each connected to thousands of other neurons through trillions of synapses. This complex network forms the basis for cognition, sensory perception, and motor control. At the core of neuron firing is the action potential, an electrochemical signal that travels along the length of a neuron’s axon.

The process begins when a neuron receives excitatory or inhibitory signals from its synaptic connections. If the sum of these signals surpasses a certain threshold, an action potential is initiated. This electrical impulse travels rapidly down the axon, facilitated by the opening and closing of voltage-gated ion channels.
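As a rough illustration of the threshold idea, the sketch below sums excitatory and inhibitory inputs and checks the result against a threshold. It is a deliberately simplified toy model: the function name `fires`, the input values, and the threshold of 1.0 are illustrative assumptions, and real neurons also depend on timing, membrane leak, and refractory periods.

```python
def fires(excitatory, inhibitory, threshold=1.0):
    """Toy threshold model: return True if the net input exceeds the threshold.

    All values are in arbitrary units chosen for illustration only.
    """
    net_input = sum(excitatory) - sum(inhibitory)
    return net_input > threshold


# Net input is about 1.1, which is above the threshold of 1.0.
print(fires([0.6, 0.7], [0.2]))

# Stronger inhibition brings the net input down to about 0.8, below threshold.
print(fires([0.6, 0.7], [0.5]))
```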

Upon reaching the axon terminals, the action potential triggers the release of neurotransmitters into the synapse. Neurotransmitters are chemical messengers that travel across the synaptic gap and bind to receptors on the dendrites of neighboring neurons. This binding can either excite or inhibit the receiving neuron, influencing whether it will fire an action potential. The resulting interplay of excitatory and inhibitory signals forms the basis of information processing and transmission within the neural network.

Neuron firing is not a uniform process but a nuanced orchestration of electrical and chemical events. Both the frequency of action potentials (the firing rate) and their timing contribute to how information is coded across brain regions. This firing and communication is the foundation of our ability to process sensory input, form memories, and make decisions.

Neural Networks Replicate Biological Activation

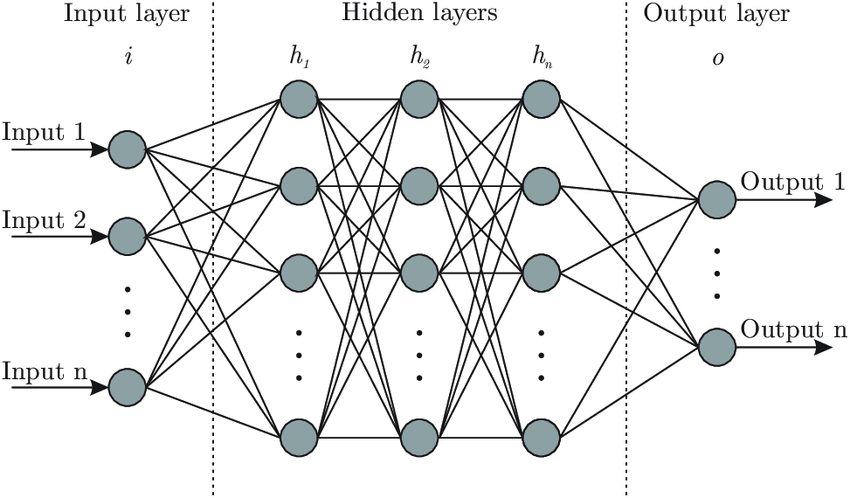

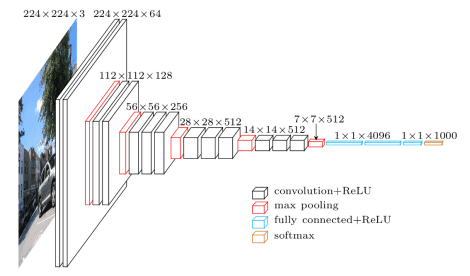

Artificial Neural Networks (ANNs) can learn from data and adapt to new neural activation patterns. By adjusting the weights of connections between neurons, ANNs can refine their responses to inputs. This gradually improves their ability to perform tasks such as image recognition, natural language processing (NLP), and speech recognition.
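To make "adjusting the weights" concrete, here is a minimal sketch of one common approach, gradient descent, applied to a single linear neuron. The article does not say which update rule is meant, so the rule, the function name `train_neuron`, the learning rate, and the toy data are all assumptions for illustration.

```python
def train_neuron(samples, lr=0.1, epochs=200):
    """Fit a single linear neuron (pred = w * x + b) with per-sample gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            pred = w * x + b
            error = pred - target
            # Gradients of the squared error 0.5 * error**2 with respect to w and b.
            w -= lr * error * x
            b -= lr * error
    return w, b


# Toy data generated from y = 2x + 1 (an assumption made for this example).
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
w, b = train_neuron(samples)
print(round(w, 2), round(b, 2))
```

With each pass over the data, the weight and bias move closer to the values that generated the samples (w = 2, b = 1), which is the sense in which the neuron "refines its response".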

Inspired by the functioning of the human brain, ANNs leverage neuron activation to process information, make decisions, and learn from data. Activation functions, mathematical operations within neurons, introduce non-linearities to the network, enabling it to capture intricate patterns and relationships in complex datasets. This non-linearity plays a critical role in the network’s ability to learn and adapt.
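A small sketch can show why the non-linearity matters. Without an activation function, two stacked linear layers are equivalent to a single linear layer; placing a ReLU between them breaks that equivalence. The scalar "layers" and their weights below are hypothetical values chosen to keep the arithmetic easy to follow.

```python
def linear(w, b):
    """Return a one-input linear layer x -> w * x + b."""
    return lambda x: w * x + b


def relu(x):
    return max(0.0, x)


layer1 = linear(2.0, -1.0)
layer2 = linear(-3.0, 0.5)


def stacked(x):
    # No activation: -3 * (2x - 1) + 0.5 simplifies to -6x + 3.5.
    return layer2(layer1(x))


collapsed = linear(-6.0, 3.5)


def with_relu(x):
    # ReLU in between: constant at 0.5 below x = 0.5, then -6x + 3.5 above it.
    return layer2(relu(layer1(x)))


for x in (-1.0, 0.0, 0.5, 2.0):
    print(x, stacked(x), collapsed(x), with_relu(x))
```

The first two columns of output always match, while the third differs for inputs below 0.5, where ReLU clips the first layer's output to zero.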

In a nutshell, neuron activation in machine learning is the fundamental mechanism that allows Artificial Neural Networks to emulate the adaptive and intelligent features observed in human brains.

Activation Synthesis Theory

According to the Activation-Synthesis Theory, introduced by Allan Hobson and Robert McCarley in 1977, activation refers to the spontaneous firing of neurons in the brainstem during REM sleep. The theory proposes that this spontaneous firing produces random neural activity in various brain regions, which the brain then synthesizes into dream content.

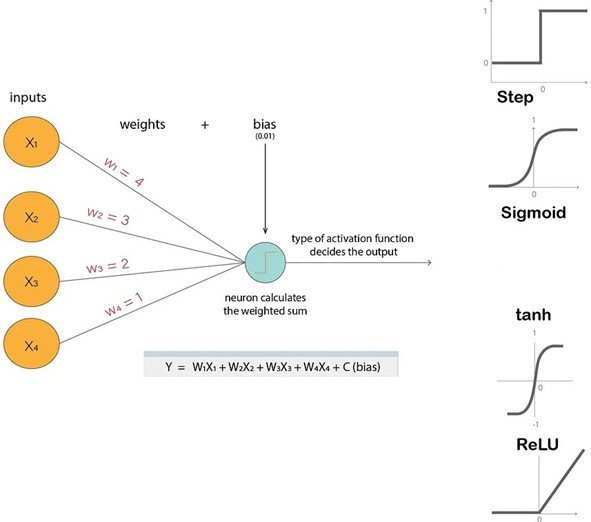

In machine learning, particularly in ANNs, activation functions play an essential role. They determine a neuron’s output (loosely, whether and how strongly it “fires”), and that output then passes to the next layer of neurons.
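The flow described above can be sketched in a few lines: each artificial neuron computes a weighted sum of its inputs, applies an activation function, and hands the result to the next layer. The sigmoid activation, the function names, and all weights here are assumptions picked for illustration, not values from any particular model.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer_output(inputs, weight_rows, biases):
    """Apply one neuron per row of weights; the outputs feed the next layer."""
    return [neuron_output(inputs, w, b) for w, b in zip(weight_rows, biases)]


# A hidden layer with two neurons, followed by a single output neuron.
hidden = layer_output([0.5, -1.0], [[0.8, 0.2], [-0.4, 0.9]], [0.0, 0.1])
output = neuron_output(hidden, [1.0, -1.0], 0.0)
print(hidden, output)
```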

What connects the two contexts is the idea that neural activations are signals to be interpreted. In ANNs, the weights that feed each activation function are trained to extract patterns and information from input data. Unlike the random firing in the brain during dreaming, the activations in ANNs are purposeful and directed toward specific tasks.

While the Activation-Synthesis Theory itself doesn’t directly inform machine learning practices, the analogy highlights the concept of interpreting neural activations or signals in different contexts. One applies to neuroscience to explain dreaming, and the other to the field of AI and ML.

Types of Neural Activation Functions

Neural activation functions determine whether, and how strongly, a neuron is activated. These functions introduce non-linearity to the network, enabling it to learn and model complex relationships in data. Common activation functions include:

- Sigmoid Function. A smooth, S-shaped function that outputs values between 0 and 1. It is commonly used in the output layer for binary classification, where the output can be read as a probability.

- Hyperbolic Tangent (tanh) Function. Similar to the sigmoid function, but outputs values between -1 and 1, often used in recurrent neural networks.

- ReLU (Rectified Linear Unit) Function. A more recent activation function that outputs the input directly if it is positive, and zero otherwise. Because its gradient does not shrink for positive inputs, it helps mitigate the vanishing gradient problem.

- Leaky ReLU Function. A variant of ReLU that applies a small non-zero slope to negative inputs, so they produce a small negative output instead of zero. This addresses the problem of dead neurons.
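The four functions in the list above can be written directly from their standard definitions. This is a minimal sketch in plain Python; the leaky slope of 0.01 is a commonly used default and is an assumption here, not a value given in the article.

```python
import math


def sigmoid(x):
    """S-shaped curve with outputs strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Like sigmoid in shape, but with outputs between -1 and 1."""
    return math.tanh(x)


def relu(x):
    """Pass positive inputs through unchanged; output zero otherwise."""
    return max(0.0, x)


def leaky_relu(x, slope=0.01):
    """Like ReLU, but negative inputs are scaled by a small slope instead of zeroed."""
    return x if x > 0 else slope * x


for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), tanh(x), relu(x), leaky_relu(x))
```

Note that `leaky_relu(-2.0)` returns a small negative value rather than zero, which keeps a non-zero gradient flowing for negative inputs.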

Challenges of Neuron Activation

Overfitting Problem

Overfitting occurs when a model learns the training data too well: it captures noise and details specific to that dataset but fails to generalize effectively to new, unseen data. For ANNs, this undermines both performance and reliability.

When activation functions and the network’s parameters are fine-tuned to fit the training data too closely, the risk of overfitting increases. This is because the network may become overly specialized in the details of the training dataset. In turn, it loses the ability to generalize well to different data distributions.

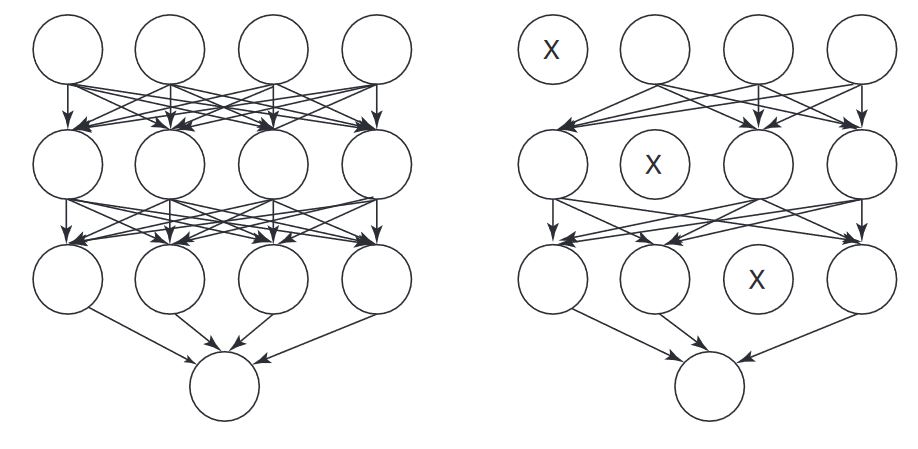

To reduce overfitting, researchers employ techniques such as regularization and dropout. Regularization introduces penalties for overly complex models, discouraging the network from fitting the noise in the training data. Dropout involves randomly “dropping out” neurons during training, temporarily preventing them from contributing to the learning process. These strategies encourage the network to capture essential patterns in the data while avoiding the memorization of noise.
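Both techniques can be sketched briefly. The example below uses an L2 weight penalty as one common form of regularization and the "inverted" variant of dropout, which rescales the surviving activations so their expected value is unchanged. The article does not specify either variant, so these choices, along with the function names and default values, are assumptions.

```python
import random


def l2_penalty(weights, strength=0.01):
    """Regularization term added to the loss: grows with the size of the weights."""
    return strength * sum(w * w for w in weights)


def dropout(activations, drop_prob=0.5, training=True, rng=random):
    """Inverted dropout: zero each activation with probability drop_prob during training."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        # At inference time every neuron contributes.
        return list(activations)
    keep_prob = 1.0 - drop_prob
    # Survivors are scaled by 1 / keep_prob to keep the expected activation the same.
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


rng = random.Random(0)  # seeded only so the example is repeatable
print(dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, rng=rng))
print(dropout([1.0, 2.0, 3.0, 4.0], training=False))
print(l2_penalty([1.0, -2.0], strength=0.1))
```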

Growing Complexity

As ANNs grow in size and depth to handle increasingly complex tasks, the choice and design of activation functions become crucial. Complexity in neuron activation arises from the need to model highly nonlinear relationships present in real-world data. Traditional activation functions like sigmoid and tanh have limitations in capturing complex patterns. This is because of their saturation behavior, which can lead to the vanishing gradient problem in deep networks.
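The saturation problem can be seen with a little arithmetic. The derivative of the sigmoid never exceeds 0.25, so when backpropagation multiplies one such factor per layer, the gradient shrinks quickly with depth. The sketch below is a simplification that assumes every layer contributes the same local derivative and ignores the weights, which also enter the product in a real network.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid; its maximum is 0.25, reached at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def relu_grad(x):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if x > 0 else 0.0


def chained_gradient(grad_fn, pre_activation, depth):
    """Product of `depth` identical local derivatives (weights assumed to be 1)."""
    return grad_fn(pre_activation) ** depth


for depth in (1, 5, 20):
    print(
        depth,
        chained_gradient(sigmoid_grad, 0.0, depth),
        chained_gradient(relu_grad, 1.0, depth),
    )
```

Even in the sigmoid's best case (input of 0), twenty layers reduce the gradient to below one trillionth of its original size, while the ReLU factor stays at 1 for positive inputs.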

This limitation has driven the development of more sophisticated activation functions like ReLU and its variants. These can better handle complex, nonlinear mappings.

However, as networks become more complex, the challenge shifts to choosing activation functions that strike a balance between expressiveness and avoiding issues like dead neurons or exploding gradients. Deep neural networks with numerous layers and intricate activation functions increase computational demands and can be difficult to train, so they require careful optimization and architectural design.

Real-World Applications of Neuron Activation

The impact of neuron activation in ANNs extends well beyond the research lab. It has been applied across various industries, including:

Finance Use Cases

- Fraud Detection. Activation functions can help identify anomalous patterns in financial transactions. By applying activation functions in neural networks, models can learn to discern subtle irregularities that might indicate fraudulent activities.

- Credit Scoring Models. Neuron activation contributes to credit scoring models by processing financial data inputs to assess one’s creditworthiness. It contributes to the complex decision-making process that determines credit scores, impacting lending decisions.

- Market Forecasting. In market forecasting tools, activation functions aid in analyzing historical financial data and identifying trends. Neural networks with appropriate activation functions can capture intricate patterns in market behavior, which can support more informed investment decisions.

Healthcare Examples

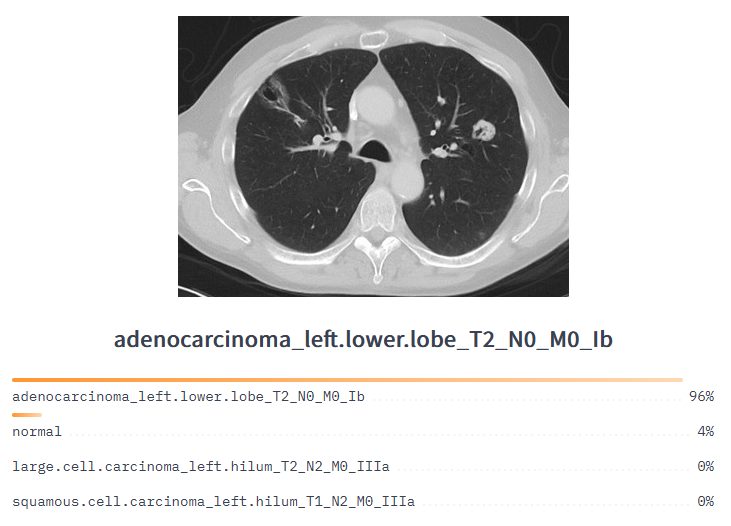

- Medical Imaging Analysis. Neural networks apply neuron activation in medical imaging tasks such as detecting abnormalities in X-rays or MRIs. Activation functions contribute to the model’s ability to recognize patterns associated with different medical conditions.

- Drug Discovery. Neural networks in drug discovery utilize activation functions to predict the potential efficacy of new compounds. By processing molecular data, these networks can assist researchers in identifying promising candidates for further exploration.

- Personalized Medicine. In personalized medicine, activation functions help tailor treatments based on one’s genetic and molecular profile. Neural networks can analyze diverse data sources to recommend therapeutic approaches.

Robotics

- Decision-Making. Activation functions enable robots to make decisions based on sensory input. By processing data from sensors, robots can react to their environment and make decisions in real time.

- Navigation. Neural networks with activation functions help the robot understand its surroundings and move safely by learning from sensory data.

- Human Interaction. Activation functions allow robots to respond to human gestures, expressions, or commands. The robot processes these inputs through neural networks.

Autonomous Vehicles

- Perception. Neuron activation is fundamental to the perception capabilities of self-driving vehicles. Neural networks use activation functions to process data from sensors such as cameras and LiDAR in order to recognize objects, pedestrians, and obstacles in the vehicle’s environment.

- Decision-Making. Activation functions contribute to the decision-making process in self-driving cars. They help interpret the perceived environment, assess potential risks, and make vehicle control and navigation decisions.

- Control. Activation functions assist in controlling the vehicle’s actions, like steering, acceleration, and braking. They contribute to the system’s overall ability to respond to changing road conditions.

Personalized Recommendations

- Product Suggestions. Recommender systems can process user behavior data and generate personalized product suggestions. By understanding user preferences, these systems enhance the accuracy of product recommendations.

- Movie Recommendations. In entertainment, activation functions contribute to recommender systems that suggest movies based on individual viewing history and preferences. They help tailor recommendations to match users’ tastes.

- Content Personalization. Activation functions work in various content recommendation engines, providing personalized suggestions for articles, music, or other forms of content. This enhances user engagement and satisfaction by delivering content aligned with individual interests.

Research Trends in Neuron Activation

We’ve seen an emphasis on developing more expressive activation functions that can capture complex relationships between inputs and outputs, thereby enhancing the overall capabilities of ANNs. Exploring new non-linear activation functions, and addressing challenges related to overfitting and model complexity, remains a focal point.

Furthermore, researchers are delving into adaptive activation functions, contributing to the flexibility and generalizability of ANNs. These trends underscore the continuous evolution of neuron activation research, with a focus on advancing the capabilities and understanding of artificial neural networks.

- Integrating Biological Insights. Using neuroscientific knowledge in the design of activation functions, researchers aim to develop models that more closely resemble the brain’s neural circuitry.

- Developing More Expressive Activation Functions. Researchers are investigating activation functions that can capture more complex relationships between inputs and outputs, enhancing the capabilities of ANNs in tasks such as image generation and natural language understanding.

- Exploring Non-Linear Activation Functions. Traditional activation functions such as sigmoid, tanh, and ReLU are already non-linear, but each has a fixed, relatively simple shape. Researchers are exploring activation functions with richer non-linear behavior. These could potentially enable ANNs to learn more complex patterns and solve more challenging problems.

- Adaptive Activation Functions. Some activation functions are being developed to adapt their behavior based on the input data, further improving the flexibility and generalizability of ANNs.
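One well-known example of an adaptive activation function is the Parametric ReLU (PReLU), where the slope for negative inputs is a parameter learned from data rather than a fixed constant. The sketch below is offered only as an illustration of the idea and may not be the specific approach the article has in mind; the function names, learning rate, and sample values are assumptions.

```python
def prelu(x, alpha):
    """Parametric ReLU: identity for positive inputs, slope `alpha` for the rest."""
    return x if x > 0 else alpha * x


def prelu_grad_alpha(x):
    """Derivative of prelu with respect to alpha: x for non-positive inputs, else 0."""
    return x if x <= 0 else 0.0


def update_alpha(alpha, inputs, upstream_grads, lr=0.01):
    """One gradient-descent step on alpha, given the loss gradient at each output."""
    grad = sum(g * prelu_grad_alpha(x) for x, g in zip(inputs, upstream_grads))
    return alpha - lr * grad


alpha = 0.25
print(prelu(2.0, alpha), prelu(-2.0, alpha))

# Only the negative input (-1.0) influences the update to alpha.
alpha = update_alpha(alpha, inputs=[-1.0, 2.0], upstream_grads=[1.0, 1.0], lr=0.1)
print(alpha)
```

Because `alpha` is updated alongside the network's weights, the shape of the activation itself adapts to the training data.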

Ethical Considerations and Challenges

The use of ANNs raises concerns related to data privacy, algorithmic bias, and the societal impacts of intelligent systems. Privacy issues arise because ANNs often require vast amounts of data, leading to concerns about the confidentiality of sensitive information. Additionally, algorithmic bias can perpetuate and amplify societal inequalities if training data reflects existing biases.

Deploying ANNs in critical applications, such as medicine or finance, poses challenges in accountability, transparency, and ensuring fair and unbiased decision-making. Striking a balance between technological innovation and ethical responsibility is essential to navigate these challenges and ensure responsible development and deployment.

- Privacy Concerns. Neural networks often process sensitive data. Ensuring robust data security measures is crucial to prevent unauthorized access and potential misuse.

- Bias and Fairness. Neural networks trained on biased datasets can amplify existing social biases. Ethical considerations involve addressing bias in training data and algorithms to ensure fair and equitable outcomes.

- Transparency and Explainability. The complexity of neural networks makes their decision-making processes hard to understand. Ethical considerations call for efforts to make models more transparent and interpretable to build trust among users.

- Informed Consent. In applications with personal data, receiving informed consent from individuals becomes a critical ethical consideration. Users should understand how their data is used, particularly when it comes to areas like personalized medicine.

- Accountability and Responsibility. Determining responsibility for the actions of neural networks poses challenges. Ethical considerations involve establishing accountability frameworks and making sure that developers, organizations, and users understand their roles and responsibilities.

- Regulatory Frameworks. Establishing comprehensive legal and ethical frameworks for neural network technologies is vital. Ethical considerations include advocating for regulations that balance innovation with protection against potential harm.

The Tech in the Real World

As research advances, we can expect to see more powerful ANNs tackling real-world challenges. A deeper understanding of neuron activation will help unlock the long-term potential of both human and artificial intelligence.

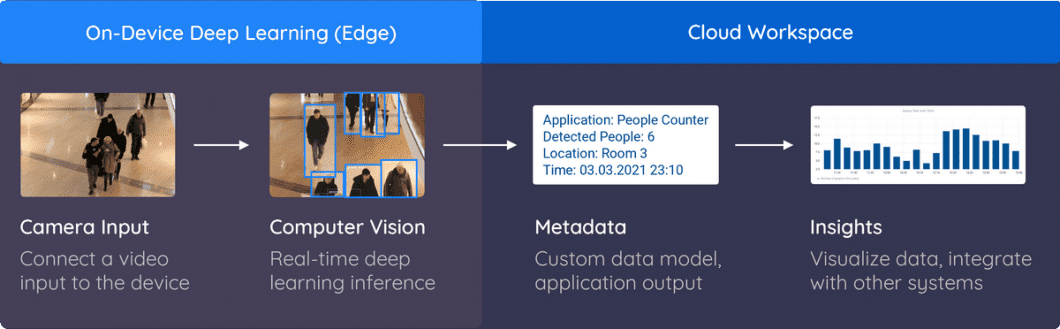

To get started with computer vision and machine learning, check out Viso Suite. Viso Suite is our end-to-end enterprise platform. Book a demo to learn more.