AI art generation involves using artificial intelligence systems to create or assist in creating visual art. This technology leverages machine learning algorithms to understand and replicate artistic styles, generate novel images, or even collaborate with human artists.

It’s a giant leap forward in democratizing art creation, making it accessible to individuals without formal training. It also opens up new avenues for digital communication. Today, we use artificial intelligence (AI) generators in a wide range of applications to create artwork for personal or commercial purposes.

The journey of AI in art traces back to the development of neural networks and deep learning. Notable breakthroughs include Convolutional Neural Networks (CNNs), which dramatically improved machines' ability to analyze and understand visual content, and Generative Adversarial Networks (GANs), which opened new doors for generating high-quality, realistic images.

Advances in natural language processing (NLP) also make it easy to prompt text-to-image models with plain-language descriptions.

AI models like Google’s DeepDream may have set the tone for modern AI image generators. However, Midjourney AI and Stable Diffusion arguably represent the peak of what’s possible today. These models leverage intricate algorithms and vast training data to produce diverse, complex, and artistically pliable artworks.

How Do AI Art Generators Like Midjourney and Stable Diffusion Work?

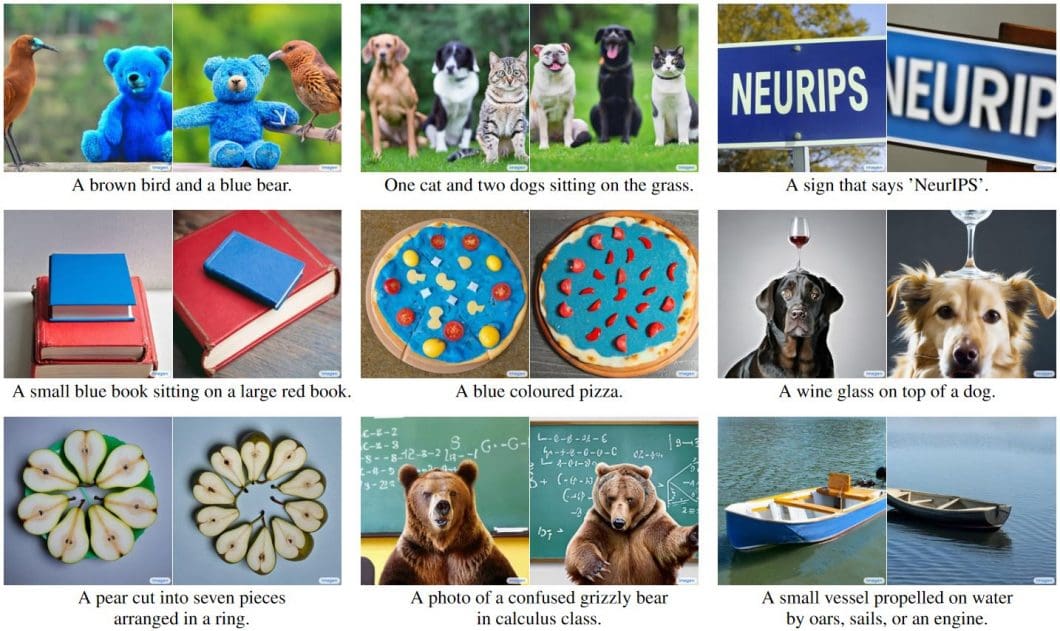

AI art generators like Midjourney and Stable Diffusion transform textual prompts into visual art using various underlying processes. Here’s a brief overview of the process:

- Prompt Interpretation: The user inputs a descriptive text prompt. The system uses natural language processing to analyze and understand the prompt’s intent and details.

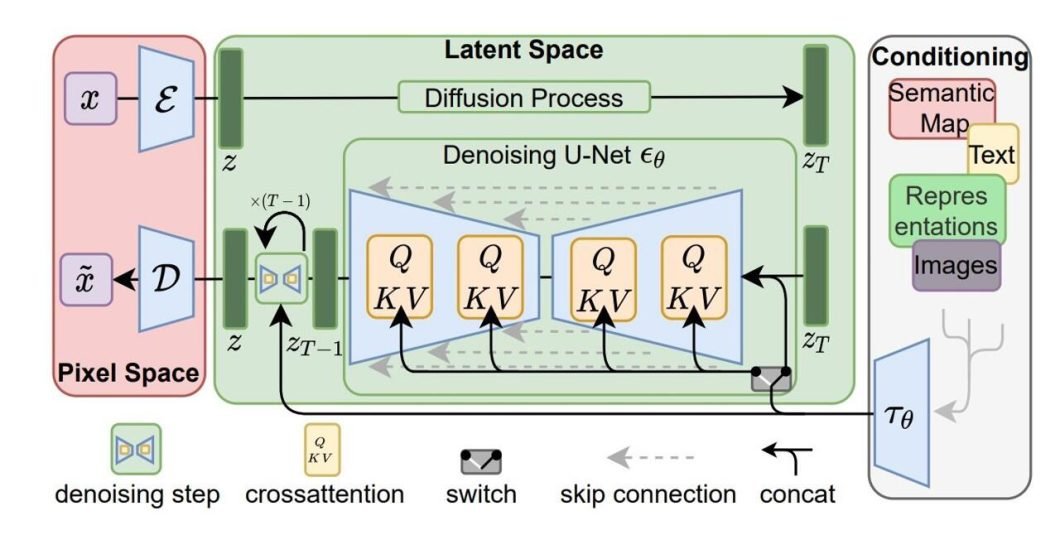

- Model Selection: The prompt is passed to a pre-trained model, selected by the platform or the user. Midjourney might use custom models optimized for certain styles, while Stable Diffusion typically relies on the versatility of the Latent Diffusion Model (LDM).

- Image Synthesis: In the sampling step, the image generator draws specific outputs from the model's learned probability distribution. For Stable Diffusion, this means iteratively refining random noise into a detailed image, a process known as “diffusion.” Midjourney uses a form of generative modeling that may involve proprietary enhancements for creativity and fidelity.

- Refinement and Output: The engine refines the AI-generated images through additional layers of processing. This may include style adjustments and resolution enhancements. It then outputs the final image(s), providing a visual representation of the initial prompt.
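The “iterative refinement of noise” in the Image Synthesis step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Stable Diffusion's actual code: a short list of numbers stands in for an image, the noise schedule is assumed to be the common DDPM linear schedule, the update is a deterministic DDIM-style step, and the trained noise-prediction network is replaced by a hypothetical `oracle_noise` function that already knows the target. Because the oracle is exact, each step simply strips away a further share of the starting noise; a trained network only estimates the noise, re-estimating it at every step.

```python
import math
import random


def cumulative_alphas(num_train_steps: int = 1000) -> list[float]:
    """Signal fraction (alpha-bar) per timestep for a linear beta schedule (assumed)."""
    alphas_bar, prod = [], 1.0
    for t in range(num_train_steps):
        beta = 1e-4 + (0.02 - 1e-4) * t / (num_train_steps - 1)
        prod *= 1.0 - beta
        alphas_bar.append(prod)
    return alphas_bar


def oracle_noise(x_t: list[float], a_bar: float, target: list[float]) -> list[float]:
    """Hypothetical stand-in for the trained network: returns the exact noise
    in x_t because it knows the target. A real model only estimates this."""
    return [(x - math.sqrt(a_bar) * x0) / math.sqrt(1.0 - a_bar) for x, x0 in zip(x_t, target)]


def ddim_sample(target: list[float], num_sampling_steps: int = 50, seed: int = 0):
    """Deterministic DDIM-style sampling. Returns the final sample and its
    distance to `target` after every step."""
    a_bar = cumulative_alphas()
    # Evenly spaced subset of the training timesteps, noisiest first
    # (assumes num_sampling_steps divides 1000).
    stride = len(a_bar) // num_sampling_steps
    timesteps = list(range(len(a_bar) - 1, -1, -stride))

    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure Gaussian noise
    distances = []
    for i, t in enumerate(timesteps):
        eps = oracle_noise(x, a_bar[t], target)
        # Clean-image estimate implied by the current noise estimate.
        x0_hat = [(xi - math.sqrt(1.0 - a_bar[t]) * e) / math.sqrt(a_bar[t])
                  for xi, e in zip(x, eps)]
        # Alpha-bar of the next, less noisy timestep (1.0 means fully denoised).
        a_next = a_bar[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        # Move the sample to that lower noise level.
        x = [math.sqrt(a_next) * x0 + math.sqrt(1.0 - a_next) * e
             for x0, e in zip(x0_hat, eps)]
        distances.append(math.dist(x, target))
    return x, distances


sample, dists = ddim_sample([0.5, -0.25, 1.0, 0.0])
print(len(dists))  # 50 sampling steps
```

The returned `distances` shrink at every step, which is the numeric version of noise being refined into an image. Real samplers run the same loop over a latent tensor, with a U-Net predicting the noise from the text-conditioned input.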

Introduction to Midjourney AI

Midjourney AI was developed by Midjourney, Inc., an independent research lab based in San Francisco. The platform launched in open beta on 12 July 2022. As of 21 December 2023, Midjourney is on v6, which, like each major version since v4 (launched in November 2022), was first released as an alpha.

Although Midjourney is not primarily known for photorealism, it can produce photorealistic images. For example, its lifelike depiction of the Pope in a puffer jacket went viral and caused confusion online.

Currently, you can only prompt the Midjourney AI art generator through Discord, although a more accessible interface is in the works. Clear guides on how to use the Midjourney AI generator are available.

It also requires a subscription to use, with no free trial or plan available. Pricing ranges from $10/month to $120/month.

Each prompt produces four image variations. You can immediately download an upscaled version of one of them or select it for further editing. You can also upload your own images and blend them into the output.

Midjourney is also not open source, and the company is fairly secretive about its underlying technologies and models. What is known is that it relies on deep learning and multi-layered neural networks.

Key Features

- High-Quality Art Generation: Excels at generating high-resolution images with an incredible amount of detail.

- Stylistic Qualities: The Midjourney model generates images primarily with a somewhat surreal and dreamlike quality. It’s not always the best for hyper-realistic images but excels at artistic interpretations.

- Prompt Flexibility: Supports a broad range of text prompts, turning abstract concepts into digital art. While some engines are better at handling simpler, more generic prompts, Midjourney excels at detailed instructions.

- Style Adaptability: Capable of mimicking various artistic styles, from classical to contemporary to futuristic.

Technical Deep Dive

The power behind Midjourney’s prompt interpretation and art generation lies in its sophisticated algorithms and deep learning models. It employs:

- Advanced Natural Language Processing (NLP): It shows a strong grasp of context and nuance in prompts. It can also process negative prompts to leave out undesired elements.

- Generative Adversarial Networks (GANs): Although the specifics of Midjourney’s technology are proprietary, it likely uses GANs or similar generative models. This is likely what gives it its ability to create diverse and aesthetically pleasing images.

- Custom Algorithms: These balance the engine's artistic freedom against adherence to the user's vision, helping ensure that outputs match the prompt while introducing an element of originality.
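Because Midjourney is driven through Discord rather than a public API, these controls surface as parameters appended to the prompt text. The sketch below is a hypothetical helper, `build_midjourney_prompt`, that only composes the string you would paste after `/imagine`. It assumes Midjourney's `--ar` (aspect ratio), `--v` (version), `--stylize` (how strongly its own aesthetic is applied versus the literal prompt, assumed to range from 0 to 1000), and `--no` (negative prompt) parameters as documented at the time of writing; check the current parameter list before relying on it.

```python
from typing import Optional, Sequence


def build_midjourney_prompt(description: str,
                            exclude: Sequence[str] = (),
                            aspect_ratio: Optional[str] = None,
                            version: Optional[str] = None,
                            stylize: Optional[int] = None) -> str:
    """Hypothetical helper: composes the text you would paste after /imagine."""
    parts = [description.strip()]
    if aspect_ratio:
        parts.append(f"--ar {aspect_ratio}")  # e.g. "16:9"
    if version:
        parts.append(f"--v {version}")  # model version
    if stylize is not None:
        # Assumed range 0-1000: lower values follow the prompt more literally,
        # higher values apply more of Midjourney's own aesthetic.
        if not 0 <= stylize <= 1000:
            raise ValueError("stylize must be between 0 and 1000")
        parts.append(f"--stylize {stylize}")
    if exclude:
        # --no acts as a negative prompt: elements to leave out.
        parts.append("--no " + ", ".join(exclude))
    return " ".join(parts)


print(build_midjourney_prompt("a lighthouse at dusk, oil painting",
                              exclude=["people", "boats"],
                              aspect_ratio="16:9", version="6", stylize=250))
# a lighthouse at dusk, oil painting --ar 16:9 --v 6 --stylize 250 --no people, boats
```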

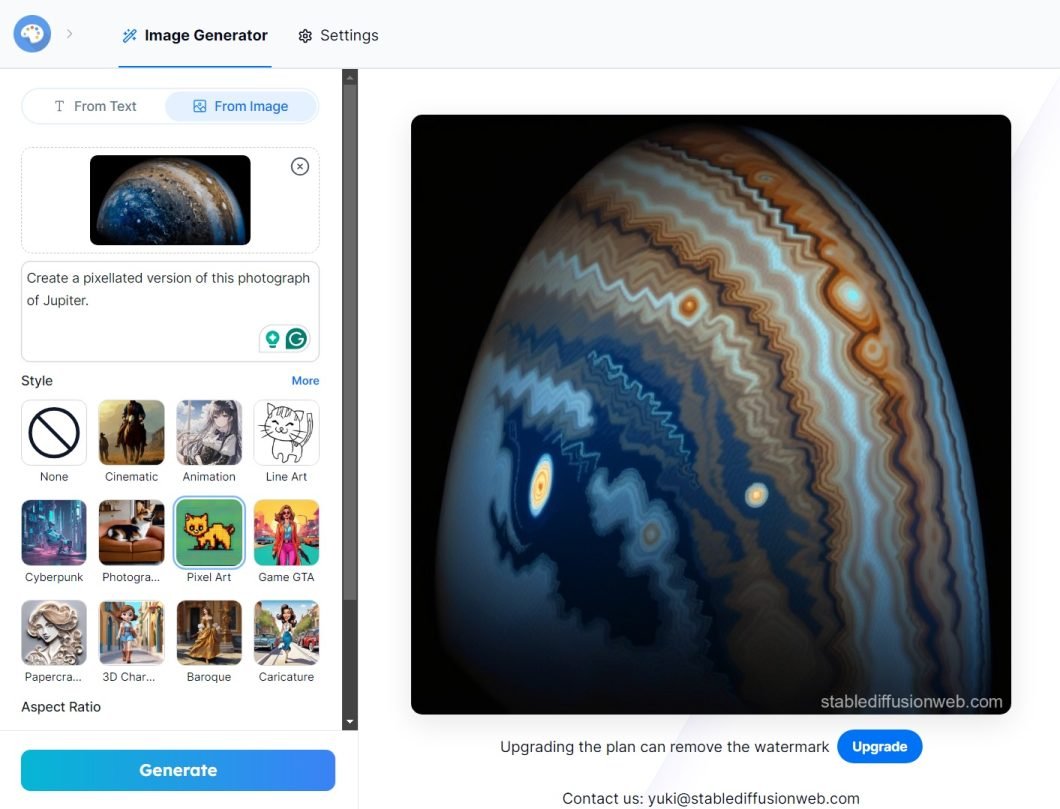

Introduction to Stable Diffusion

Stable Diffusion was developed by Stability AI in collaboration with researchers from EleutherAI and LAION. It was first released in August 2022, and its current flagship model, SDXL 1.0, was released in July 2023. Its code is written primarily in Python. Its accessibility and open-source nature have made Stable Diffusion one of the most popular AI image generators.

You can find the Stable Diffusion source code on GitHub or try it out on Hugging Face Spaces.

On top of the official SDXL, there are many other models built for compatibility with Stable Diffusion. This allows you to find the best Stable Diffusion model for your exact needs. Realistic Vision, DreamShaper, and Anything v3 are just some of the options.

Unlike some counterparts, Stable Diffusion is known for its ability to produce both photorealistic images and stylized art. This makes it a viable option not just for art but also for practical use cases, like concept visualization.

Stable Diffusion runs on a variety of platforms, including local machines, cloud services, and community-developed web portals. Hosted versions also offer a free plan that lets you generate up to 10 images per day with watermarks, while paid plans also give you commercial rights over the images you create. Beyond text prompts, you can upload an image and describe modifications to it.

ControlNet, an add-on network for Stable Diffusion, allows more precise spatial and semantic control by conditioning generation on extra inputs. Stable Diffusion itself also offers fine-grained controls, like selecting the exact model version, adjusting the number of sampling steps, or fixing or randomizing seeds. With ControlNet, you can even feed in OpenPose skeletons to generate subjects in specific poses.

You can also use ControlNet inputs, such as segmentation maps, to define specific areas in which to position subjects, and set the aspect ratio through the output dimensions.
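Seeds matter because sampling starts from random noise: with the same seed, prompt, model, and settings, a sampler typically reproduces the same image, while a new seed yields a new variation. The sketch below uses Python's standard `random` module to show that idea; real Stable Diffusion front ends use their framework's own random number generators, and the function names here are hypothetical. The 4-channel layout mirrors Stable Diffusion's latent space, but the tiny 8×8 size is purely illustrative.

```python
import random
import secrets


def initial_noise(seed: int, channels: int = 4, height: int = 8, width: int = 8):
    """Starting noise for a sampler, drawn from a seeded RNG.
    The 4-channel layout mirrors Stable Diffusion's latents; the size is illustrative."""
    rng = random.Random(seed)
    return [[[rng.gauss(0.0, 1.0) for _ in range(width)]
             for _ in range(height)]
            for _ in range(channels)]


def new_random_seed() -> int:
    """What a 'random seed' option amounts to: pick a fresh 32-bit seed."""
    return secrets.randbelow(2**32)


fixed = initial_noise(seed=1234)
print(fixed == initial_noise(seed=1234))  # True: same seed, identical starting noise
print(fixed == initial_noise(seed=1235))  # False: different seed, different variation
```

Recording the seed alongside the prompt and step count is what makes a result repeatable later.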

Key Features

- High-Resolution Image Generation: Capable of producing detailed images up to 1024×1024 pixels.

- Photorealistic Images: Stable Diffusion tends to perform better at generating realistic-looking images. However, its stylized outputs are not always as impressive or high-quality.

- Prompt Customization: Stable Diffusion excels at interpreting simpler, more direct prompts. However, you can gain more control over the output through its various settings or through ControlNet.

- Community-Driven Development: As an open-source project, Stable Diffusion benefits from a global community of developers and artists.
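The 1024×1024 figure is SDXL's native resolution, and other aspect ratios are usually generated at a similar total pixel count. The hypothetical helper below picks a width and height for a given aspect ratio under two assumptions: a budget of about 1024×1024 pixels, and dimensions rounded to multiples of 64, a common convention (Stable Diffusion's autoencoder strictly needs only multiples of 8).

```python
import math


def dimensions_for_aspect_ratio(aspect_w: int, aspect_h: int,
                                pixel_budget: int = 1024 * 1024,
                                multiple: int = 64) -> tuple[int, int]:
    """Hypothetical helper: width and height with roughly `pixel_budget` pixels
    for the given aspect ratio, each rounded to a multiple of `multiple`."""
    ratio = aspect_w / aspect_h
    height = math.sqrt(pixel_budget / ratio)
    width = height * ratio

    def snap(value: float) -> int:
        # Round to the nearest allowed size, never below one multiple.
        return max(multiple, round(value / multiple) * multiple)

    return snap(width), snap(height)


print(dimensions_for_aspect_ratio(1, 1))   # (1024, 1024)
print(dimensions_for_aspect_ratio(16, 9))  # (1344, 768)
```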

Technical Overview

Stable Diffusion operates on the cutting edge of AI and machine learning technologies, such as:

- Latent Diffusion Models (LDMs): These let Stable Diffusion refine images gradually in a compressed latent space rather than directly in pixel space. This produces high-quality outputs that are both coherent and detailed, at a lower computational cost.

- CLIP Guidance: Uses a CLIP text encoder (OpenAI's CLIP in the original release; SDXL adds a second, OpenCLIP encoder) to interpret text prompts and condition image generation on them. This improves the accuracy and relevance of depictions.

- Open-Source Ecosystem: The model's open-source nature encourages experimentation and modification, letting developers tweak the model and contribute to its evolution.

- SDXL Turbo: If you need faster generation, SDXL Turbo uses Adversarial Diffusion Distillation (ADD) for real-time text-to-image generation, cutting the required number of sampling steps from around 50 to as few as one. At its release in November 2023, it was licensed for non-commercial use only.
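Working in latent space is what keeps each denoising step affordable. Stable Diffusion's autoencoder shrinks each side of the image by a factor of 8 and stores 4 channels per latent position, so the denoiser handles far fewer numbers than the RGB pixels it eventually produces. The sketch below does that arithmetic; the function names are hypothetical, and the factor-8, 4-channel layout is the one used by Stable Diffusion 1.x, 2.x, and SDXL.

```python
def latent_shape(width: int, height: int,
                 downsample: int = 8, channels: int = 4) -> tuple[int, int, int]:
    """Shape (channels, height, width) of the latent that Stable Diffusion's
    autoencoder produces for an RGB image of the given size."""
    if width % downsample or height % downsample:
        raise ValueError(f"width and height must be multiples of {downsample}")
    return channels, height // downsample, width // downsample


def values_saved(width: int, height: int) -> float:
    """How many times fewer numbers the denoiser works on in latent space."""
    c, h, w = latent_shape(width, height)
    return (width * height * 3) / (c * h * w)


print(latent_shape(1024, 1024))  # (4, 128, 128)
print(values_saved(1024, 1024))  # 48.0
```

The ratio is 48 at any valid size (3 × 8 × 8 / 4). Each sampling step still runs the full denoiser on that latent, which is why cutting 50 steps to one, as SDXL Turbo does, speeds generation so dramatically.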

Comparative Analysis of Midjourney vs Stable Diffusion

Pricing Advantage: Stable Diffusion

Stable Diffusion is more affordable, as it offers a free tier and lower-priced plans. It's also easier to estimate costs upfront, since you pay for credits to generate individual images rather than for GPU time, as with Midjourney. That said, Midjourney may work out more cost-efficient depending on the scale you operate at.
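Whether pay-per-image credits or a flat subscription metered in GPU time is cheaper depends on monthly volume. The sketch below makes that comparison explicit. Every number in it is hypothetical (4 cents per image, a $30 plan, 12 GPU seconds per image) and is not a real Midjourney or Stability AI rate; prices are kept in integer cents and seconds to avoid floating-point rounding.

```python
def images_in_allowance(gpu_seconds: int, gpu_seconds_per_image: int) -> int:
    """Images a GPU-time allowance covers, assuming a fixed time per image."""
    return gpu_seconds // gpu_seconds_per_image


def cheaper_option(images: int, cents_per_image: int,
                   monthly_cents: int, images_included: int) -> str:
    """Compare pay-per-image credits with a flat subscription for one month.
    All prices are hypothetical inputs."""
    if images > images_included:
        raise ValueError("usage exceeds the subscription's GPU-time allowance")
    credit_cost = images * cents_per_image
    return "credits" if credit_cost <= monthly_cents else "subscription"


# Hypothetical plan: 12,000 GPU seconds (200 minutes) at 12 seconds per image.
included = images_in_allowance(gpu_seconds=12_000, gpu_seconds_per_image=12)
for n in (100, 750, 900):
    print(n, cheaper_option(n, cents_per_image=4, monthly_cents=3_000,
                            images_included=included))
# 100 credits
# 750 credits
# 900 subscription
```

Under these assumed prices, credits win up to 750 images a month and the subscription wins beyond that, which is the scale effect described above.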

Core Features: A Tie with Different Strengths

Midjourney excels at creating art that is rich in detail and texture. Its outputs typically have artistic, nuanced qualities, and it's best at creating stylized content. Meanwhile, Stable Diffusion specializes in highly realistic imagery. While its style presets are useful, they don't always produce results that are up to par.

Image Output Quality: Midjourney

Midjourney generally outperforms Stable Diffusion with bold, artistic renditions that are highly detailed. While Stable Diffusion produces more realistic images, Midjourney’s abstract and artistic interpretations offer a distinct aesthetic.

Ease of Implementation: Stable Diffusion Wins

Stable Diffusion is more accessible, offering various user-friendly interfaces, including DreamStudio and Clipdrop. Midjourney’s current limitation to Discord may deter users unfamiliar with the platform.

Community Support: Midjourney’s Unique Advantage

Midjourney benefits from its Discord-based community, where users actively share, learn, and collaborate. This direct interaction within a dedicated platform offers a cohesive and dynamic community experience. In contrast, Stable Diffusion’s community is dispersed across multiple platforms. While there’s arguably more information out there owing to its open-source nature, it’s not a closed-loop experience.

User Suitability: Niche Preferences

Each platform has its niche, which makes it less suitable for certain users. Midjourney emphasizes artistic quality over rapid production, and its artistic focus and Discord-based operation may limit its appeal to users seeking technical customization.

Conversely, Stable Diffusion is highly accessible with various beginner-friendly experiences. It also offers sophisticated prompting tools and third-party model integrations for more advanced users.
