TensorFlow Lite (TFLite) is a collection of tools to convert and optimize TensorFlow models to run on mobile and edge devices. Google developed TensorFlow for internal use but later chose to open-source it. Today, TFLite is running on more than 4 billion devices!

As an Edge AI framework, TensorFlow Lite greatly lowers the barriers to deploying large-scale computer vision with on-device machine learning, making it possible to run machine learning almost anywhere.

Deploying high-performing deep learning models on embedded devices to solve real-world problems remains difficult with today’s AI technology. Privacy, data limitations, network connectivity issues, and the need for more resource-efficient models are among the key challenges that edge applications face in making real-time deep learning scalable.

What is TensorFlow Lite?

TensorFlow Lite is an open-source deep learning framework designed for on-device inference (Edge Computing). It provides a set of tools that enable on-device machine learning by allowing developers to run their trained models on mobile, embedded, and IoT devices and computers. It supports platforms such as embedded Linux, Android, iOS, and microcontrollers (MCUs).

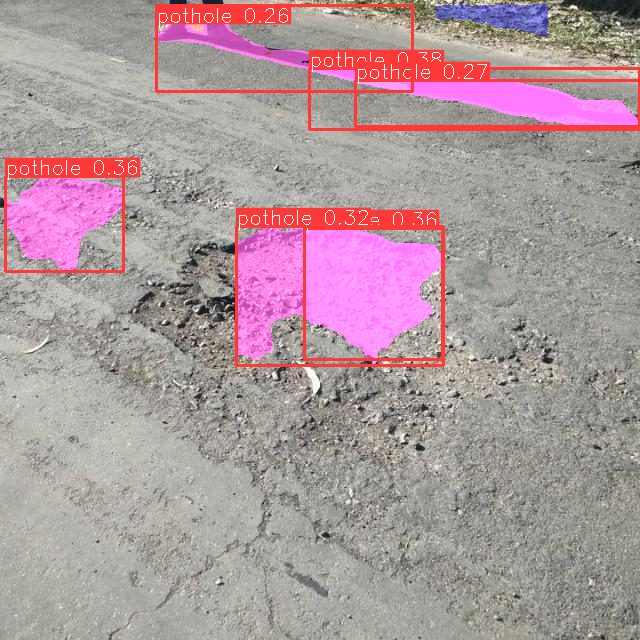

TensorFlow Lite is specially optimized for on-device machine learning (Edge ML), which makes it suitable for deployment to resource-constrained edge devices. Edge intelligence, the ability to move deep learning tasks (object detection, image recognition, etc.) from the cloud to the data source, is necessary to scale computer vision in real-world use cases.

What is TensorFlow?

TensorFlow is an open-source software library for AI and machine learning with deep neural networks. It was developed by the Google Brain team for internal use at Google and open-sourced in 2015. Today, it is used for both research and production at Google.

What is Edge Machine Learning?

Edge Machine Learning (Edge ML), or on-device machine learning, is essential to overcome the limitations of purely cloud-based solutions. The key benefits of Edge AI are low, real-time latency (no data offloading), privacy, robustness, independence from network connectivity, smaller model size, and efficiency (computation and energy costs, watts/FPS).

To learn more about how Edge AI combines Cloud with Edge Computing for local machine learning, I recommend reading our article Edge AI – Driving Next-Gen AI Applications.

Computer Vision on Edge Devices

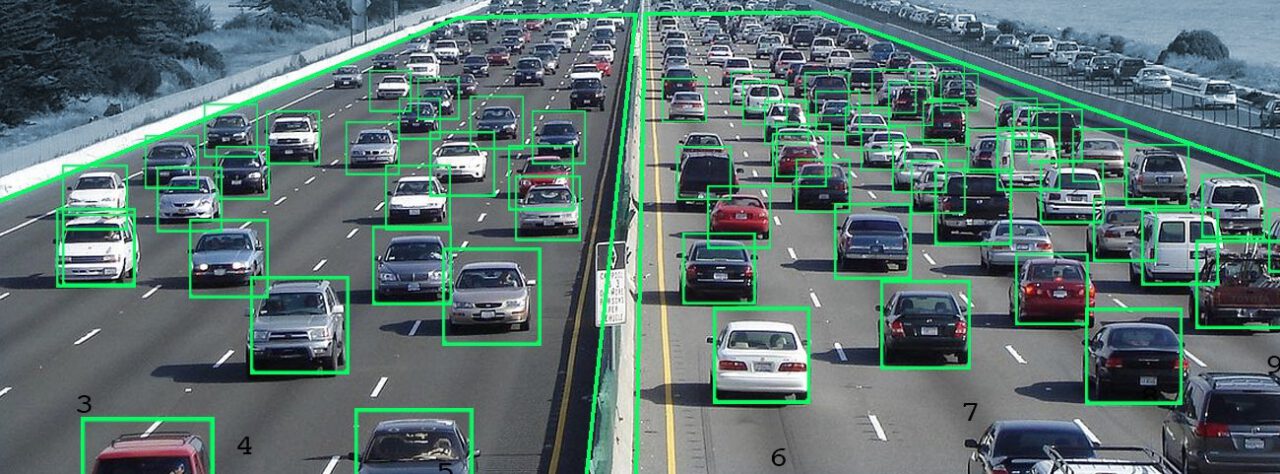

Among other tasks, object detection is of great importance to most computer vision applications. Many existing object detection implementations can hardly run on resource-constrained edge devices. To address this, Edge ML-optimized models and lightweight variants have been developed that achieve accurate real-time object detection on edge devices.

Difference between TensorFlow Lite and TensorFlow

TensorFlow Lite is a lighter version of the original TensorFlow (TF). It is specifically designed for mobile computing platforms, embedded devices, edge computers, video game consoles, and digital cameras. TensorFlow Lite runs predictions (inference) on a model that has already been trained.

TensorFlow, on the other hand, is used to build and train ML models. In other words, TensorFlow is meant for training models, while TensorFlow Lite is meant for inference on edge devices. TensorFlow Lite can also optimize the trained model using quantization techniques (discussed later in this article), which reduces both the memory usage and the computational cost of running neural networks.
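To make this division of labor concrete, here is a minimal sketch, assuming TensorFlow 2.x is installed: full TensorFlow trains a toy model, the TFLite converter serializes it, and `tf.lite.Interpreter` runs inference on the result. The `TinyClassifier` model and its synthetic data are hypothetical placeholders; a `tf.Module` with a fixed input signature is used instead of Keras only to keep the conversion step simple.

```python
import numpy as np
import tensorflow as tf


class TinyClassifier(tf.Module):
    """Hypothetical toy model: logistic regression over 4 input features."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.zeros([4, 1]))
        self.b = tf.Variable(tf.zeros([1]))

    @tf.function(input_signature=[tf.TensorSpec([None, 4], tf.float32)])
    def __call__(self, x):
        return tf.sigmoid(tf.matmul(x, self.w) + self.b)


# 1) Training with full TensorFlow: plain gradient descent on synthetic data.
rng = np.random.default_rng(0)
x_train = rng.normal(size=(256, 4)).astype(np.float32)
y_train = tf.constant((x_train.sum(axis=1, keepdims=True) > 0).astype(np.float32))

model = TinyClassifier()
losses = []
for step in range(200):
    with tf.GradientTape() as tape:
        p = tf.clip_by_value(model(x_train), 1e-7, 1 - 1e-7)
        # Binary cross-entropy written out explicitly.
        loss = -tf.reduce_mean(y_train * tf.math.log(p) + (1 - y_train) * tf.math.log(1 - p))
    grads = tape.gradient(loss, [model.w, model.b])
    for var, grad in zip([model.w, model.b], grads):
        var.assign_sub(0.5 * grad)
    losses.append(float(loss))

# 2) Conversion: freeze the trained variables into a .tflite FlatBuffer.
concrete_fn = model.__call__.get_concrete_function()
converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn], model)
tflite_model = converter.convert()

# 3) Inference with TensorFlow Lite: load the FlatBuffer into the interpreter.
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()
input_details = interpreter.get_input_details()[0]
output_details = interpreter.get_output_details()[0]

sample = x_train[:1]  # one example, shape (1, 4)
interpreter.set_tensor(input_details["index"], sample)
interpreter.invoke()
tflite_prob = interpreter.get_tensor(output_details["index"])
tf_prob = model(sample).numpy()
print("TensorFlow:", tf_prob, "TensorFlow Lite:", tflite_prob)
```

On the target device, only the third step would typically run; training and conversion usually happen on a workstation or in the cloud.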

TensorFlow Lite Advantages

- Model Conversion: TensorFlow models can be efficiently converted into TensorFlow Lite models for mobile-friendly deployment. TF Lite can optimize existing models to use less memory and compute, which is ideal for running machine learning models on mobile devices.

- Minimal Latency: TensorFlow Lite reduces inference time, which makes it well suited to applications that depend on real-time performance.

- User-friendly: TensorFlow Lite offers a relatively simple way for mobile developers to build applications on iOS and Android devices using TensorFlow machine learning models.

- Offline inference: Edge inference does not rely on an internet connection, which means that TFLite allows developers to deploy machine learning models in remote locations or in places where an internet connection is expensive or scarce. For example, smart cameras can be trained to identify wildlife in remote locations and transmit only the relevant parts of the video feed.

Machine learning tasks can be executed in areas far from wireless infrastructure. The offline inference capabilities of Edge ML are an integral part of most mission-critical computer vision applications, which must keep running during a temporary loss of internet connection (for example, in autonomous driving, animal monitoring, or security systems).
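The smart-camera pattern described above can be sketched as follows: every frame is scored locally by a TFLite model, and only frames above a confidence threshold are kept for transmission. `ToyFrameScorer` is a hypothetical stand-in that scores frames by brightness, and the threshold policy in `frames_to_transmit` is an assumption made for illustration, not part of any TFLite API.

```python
import numpy as np
import tensorflow as tf


class ToyFrameScorer(tf.Module):
    """Hypothetical stand-in for a wildlife detector: scores a 32x32 grayscale
    frame by its mean brightness. A real camera would run a trained model."""

    @tf.function(input_signature=[tf.TensorSpec([1, 32, 32, 1], tf.float32)])
    def __call__(self, frame):
        return tf.sigmoid(10.0 * (tf.reduce_mean(frame, axis=[1, 2, 3]) - 0.5))


scorer = ToyFrameScorer()
tflite_model = tf.lite.TFLiteConverter.from_concrete_functions(
    [scorer.__call__.get_concrete_function()], scorer
).convert()

interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()
in_index = interpreter.get_input_details()[0]["index"]
out_index = interpreter.get_output_details()[0]["index"]


def frames_to_transmit(frames, threshold=0.5):
    """Scores every frame locally (no network access) and returns the indices
    of frames whose score exceeds `threshold`; only those would be uploaded."""
    selected = []
    for i, frame in enumerate(frames):
        interpreter.set_tensor(in_index, frame[np.newaxis].astype(np.float32))
        interpreter.invoke()
        if float(interpreter.get_tensor(out_index)[0]) > threshold:
            selected.append(i)
    return selected


# Synthetic feed: eight dark frames, of which frames 2 and 5 are made bright.
feed = np.full((8, 32, 32, 1), 0.1, dtype=np.float32)
feed[[2, 5]] = 0.9
print(frames_to_transmit(feed))  # [2, 5]
```

Because inference never leaves the device, the camera keeps filtering frames even while the uplink is down; only the selected indices (or frames) would need to be queued for upload.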

Selecting the Best TensorFlow Lite Model

Here is how to select suitable models for TensorFlow Lite deployment. For common applications like image classification or object detection, you might face choices among several TensorFlow Lite models varying in size, data input requirements, inference speed, and accuracy.

To make an informed decision, identify your primary constraint: model size, data size, inference speed, or accuracy. As a general rule, prefer the smallest model that meets your requirements.

- If you’re uncertain about your main constraint, default to model size as the deciding factor. A smaller model offers greater deployment flexibility across devices and typically results in faster inference, which improves the user experience.

- However, remember that smaller models might compromise on accuracy. If accuracy is critical, consider larger models.
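One way to apply this rule of thumb is to measure each candidate on the target hardware. The sketch below assumes two hypothetical candidate models (`MLP(32)` and `MLP(1024)`) with random weights; it reports the serialized size and mean `invoke()` latency of each and then picks the smallest. Accuracy still has to be evaluated separately on your own validation data.

```python
import time

import numpy as np
import tensorflow as tf


class MLP(tf.Module):
    """Hypothetical candidate model: one hidden layer with random weights."""

    def __init__(self, hidden_units):
        super().__init__()
        rng = np.random.default_rng(0)
        self.w1 = tf.Variable(rng.normal(size=(64, hidden_units)).astype(np.float32))
        self.w2 = tf.Variable(rng.normal(size=(hidden_units, 10)).astype(np.float32))

    @tf.function(input_signature=[tf.TensorSpec([1, 64], tf.float32)])
    def __call__(self, x):
        hidden = tf.nn.relu(tf.matmul(x, self.w1))
        return tf.nn.softmax(tf.matmul(hidden, self.w2))


def to_tflite(module):
    fn = module.__call__.get_concrete_function()
    return tf.lite.TFLiteConverter.from_concrete_functions([fn], module).convert()


def profile(tflite_model, runs=50):
    """Returns (model size in bytes, mean latency in ms) on the current machine."""
    interpreter = tf.lite.Interpreter(model_content=tflite_model)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    interpreter.set_tensor(inp["index"], np.zeros(inp["shape"], dtype=inp["dtype"]))
    interpreter.invoke()  # warm-up run, not timed
    start = time.perf_counter()
    for _ in range(runs):
        interpreter.invoke()
    latency_ms = (time.perf_counter() - start) * 1000 / runs
    return len(tflite_model), latency_ms


candidates = {"small": to_tflite(MLP(32)), "large": to_tflite(MLP(1024))}
results = {name: profile(model) for name, model in candidates.items()}
for name, (size, ms) in results.items():
    print(f"{name}: {size / 1024:.1f} KiB, {ms:.3f} ms per inference")

# Default rule from the text: choose the smallest model unless accuracy requires more.
chosen = min(results, key=lambda name: results[name][0])
print("chosen:", chosen)
```

Latency numbers measured on a development machine only approximate what an edge device will show, so it is worth rerunning the same measurement on the deployment hardware.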

Pre-trained Models for TensorFlow Lite

Utilize pre-trained, open-source TensorFlow Lite models to quickly integrate machine learning capabilities into real-time mobile and edge device applications.

TF Lite provides a wide range of example apps with pre-trained models for various tasks:

- Autocomplete: Generate text suggestions using a Keras language model.

- Image Classification: Identify objects, people, activities, and more across various platforms.

- Object Detection: Detect objects with bounding boxes, including animals, on different devices.

- Pose Estimation: Estimate single or multiple human poses, applicable in diverse scenarios.

- Speech Recognition: Recognize spoken keywords on various platforms.

- Gesture Recognition: Use your USB webcam to recognize gestures on Android/iOS.

- Image Segmentation: Accurately localize and label objects, people, and animals on multiple devices.

- Text Classification: Categorize text into predefined groups for content moderation and tone detection.

- On-device Recommendation: Provide personalized recommendations based on user-selected events.

- Natural Language Question Answering: Use BERT to answer questions based on text passages.

- Super Resolution: Enhance low-resolution images to higher quality.

- Audio Classification: Classify audio samples, including live microphone input, on various devices.

- Video Understanding: Identify human actions in videos.

- Reinforcement Learning: Train game agents and build games using TensorFlow Lite.

- Optical Character Recognition (OCR): Extract text from images on Android.

How to Use TensorFlow Lite

As discussed above, TensorFlow models can be compressed and deployed to an edge device or embedded application using TF Lite. There are two main steps to using TFLite: generating the TensorFlow Lite model and running inference. The full development workflow is described in the official TensorFlow Lite documentation; the key steps are explained below.

The TensorFlow Lite Model Format

TensorFlow Lite models are stored in files with the .tflite extension, which use an efficient, portable format called FlatBuffers. FlatBuffers is an efficient cross-platform serialization library available for many programming languages that allows serialized data to be accessed without parsing or unpacking it first. This gives it a few key advantages over the protocol buffer format used for TensorFlow models.

Advantages of using FlatBuffers include reduced size and faster inference, which let TensorFlow Lite execute efficiently on edge devices with minimal compute and memory resources. In addition, you can attach metadata containing both human-readable model descriptions and machine-readable data. This is typically used to automatically generate pre-processing and post-processing pipelines for on-device inference.
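A quick way to see the FlatBuffer format in practice is to inspect the bytes the converter returns. This minimal sketch uses a hypothetical one-operation `Doubler` model; it checks for the `TFL3` file identifier declared in the TFLite FlatBuffer schema and then passes the raw bytes directly to the interpreter.

```python
import numpy as np
import tensorflow as tf


class Doubler(tf.Module):
    """Hypothetical minimal model, used only to produce a .tflite buffer."""

    @tf.function(input_signature=[tf.TensorSpec([1, 3], tf.float32)])
    def __call__(self, x):
        return 2.0 * x


module = Doubler()
tflite_model = tf.lite.TFLiteConverter.from_concrete_functions(
    [module.__call__.get_concrete_function()], module
).convert()

# A FlatBuffer that declares a file identifier stores a 4-byte root offset
# followed by the 4-byte identifier; the TFLite schema declares "TFL3".
print(type(tflite_model).__name__, len(tflite_model), tflite_model[4:8])

# The serialized bytes are handed straight to the interpreter; there is no
# separate step that unpacks them into an intermediate object tree first.
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()
interpreter.set_tensor(interpreter.get_input_details()[0]["index"],
                       np.array([[1.0, 2.0, 3.0]], dtype=np.float32))
interpreter.invoke()
result = interpreter.get_tensor(interpreter.get_output_details()[0]["index"])
print(result)  # [[2. 4. 6.]]
```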

Ways to Generate a TensorFlow Lite Model

There are three common ways to generate a TensorFlow Lite model: using an existing model, creating one with Model Maker, or converting a TensorFlow model. Each is covered in the following sections.

How to Use an Existing TensorFlow Lite Model

TensorFlow offers a wide range of pre-built models for specific tasks. Models for common tasks such as segmentation, pose estimation, object detection, reinforcement learning, and natural language question answering are publicly available on the TensorFlow Lite example apps website.

These pre-built models can be deployed as-is with little to no modification. The TFLite example applications are a good starting point for new projects, or for getting started with TensorFlow Lite without spending time building models from scratch.
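Once a pre-trained .tflite file has been downloaded, running it typically comes down to a few interpreter calls. The helper below is a sketch: `run_tflite_file` and `pretrained_model.tflite` are hypothetical names, and a tiny `AddOne` model is generated locally as a stand-in for a real downloaded model. Real models such as image classifiers also need task-specific pre-processing (resizing, normalization) that is not shown here.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def run_tflite_file(model_path, input_array):
    """Loads a .tflite file and runs one inference on `input_array`.

    Pre-trained models expect a fixed input format (for example, a specific
    image size and dtype), so the input is checked against the model first."""
    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]
    expected_shape = tuple(int(d) for d in inp["shape"])
    if input_array.shape != expected_shape:
        raise ValueError(f"model expects input shape {expected_shape}, got {input_array.shape}")
    if input_array.dtype != inp["dtype"]:
        raise ValueError(f"model expects dtype {inp['dtype']}, got {input_array.dtype}")
    interpreter.set_tensor(inp["index"], input_array)
    interpreter.invoke()
    return interpreter.get_tensor(out["index"])


class AddOne(tf.Module):
    """Hypothetical stand-in for a downloaded pre-trained model."""

    @tf.function(input_signature=[tf.TensorSpec([1, 3], tf.float32)])
    def __call__(self, x):
        return x + 1.0


module = AddOne()
model_path = os.path.join(tempfile.mkdtemp(), "pretrained_model.tflite")
with open(model_path, "wb") as f:
    f.write(tf.lite.TFLiteConverter.from_concrete_functions(
        [module.__call__.get_concrete_function()], module).convert())

result = run_tflite_file(model_path, np.array([[1.0, 2.0, 3.0]], dtype=np.float32))
print(result)  # [[2. 3. 4.]]
```

Rejecting mismatched inputs instead of silently casting them is a deliberate choice: a quantized model, for instance, would need its inputs scaled with the model's quantization parameters, not just converted to a different dtype.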

How to Create a TensorFlow Lite Model

You can also create your own TensorFlow Lite model for one of these tasks, trained on your own data. For this, TensorFlow provides the TensorFlow Lite Model Maker library, which supports tasks such as image classification, object detection, text classification, BERT question answering, audio classification, and recommendation (items are recommended using context information).

With Model Maker, training a TensorFlow Lite model on a custom dataset is straightforward. The library uses transfer learning to reduce the amount of training data required and to shorten overall training time.

Here is an example, taken from the TF Lite documentation, of training an image classification model in fewer than 10 lines of code. It can be run once the Model Maker package (tflite-model-maker) is installed:

```python
from tflite_model_maker import image_classifier
from tflite_model_maker.image_classifier import DataLoader

# Load input data specific to an on-device ML application
# (one subfolder per class) and hold out 10% for testing.
data = DataLoader.from_folder('flower_photos/')
train_data, test_data = data.split(0.9)

# Customize the TensorFlow model.
model = image_classifier.create(train_data)

# Evaluate the model.
loss, accuracy = model.evaluate(test_data)

# Export to TensorFlow Lite model and label file in `export_dir`.
model.export(export_dir='/tmp/')
```

In this example, the training images are stored in a local folder named flower_photos/, with one subfolder per class. The pre-made image classifier task uses them to train the TensorFlow Lite model, which is then exported to /tmp/.

Convert a TensorFlow Model into a TensorFlow Lite Model

You can create a model in TensorFlow and then convert it into a TensorFlow Lite model using the TensorFlow Lite Converter. The converter can apply optimizations such as quantization to decrease model size and latency, typically with little or no loss in accuracy.
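The sketch below illustrates post-training dynamic range quantization, enabled via `converter.optimizations = [tf.lite.Optimize.DEFAULT]`, on a hypothetical single-layer model with random weights. It compares file size and output drift against the unquantized float model; actual size savings and accuracy loss depend on the model and should be measured on real data.

```python
import numpy as np
import tensorflow as tf


class DenseLayer(tf.Module):
    """Hypothetical stand-in model: one 256x256 fully connected layer."""

    def __init__(self):
        super().__init__()
        rng = np.random.default_rng(0)
        self.w = tf.Variable(rng.normal(size=(256, 256)).astype(np.float32))

    @tf.function(input_signature=[tf.TensorSpec([1, 256], tf.float32)])
    def __call__(self, x):
        return tf.matmul(x, self.w)


def convert(module, quantize):
    converter = tf.lite.TFLiteConverter.from_concrete_functions(
        [module.__call__.get_concrete_function()], module)
    if quantize:
        # Post-training dynamic range quantization: weights are stored as int8.
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    return converter.convert()


def run(tflite_model, x):
    interpreter = tf.lite.Interpreter(model_content=tflite_model)
    interpreter.allocate_tensors()
    interpreter.set_tensor(interpreter.get_input_details()[0]["index"], x)
    interpreter.invoke()
    return interpreter.get_tensor(interpreter.get_output_details()[0]["index"])


module = DenseLayer()
float_model = convert(module, quantize=False)
quant_model = convert(module, quantize=True)

x = np.random.default_rng(1).normal(size=(1, 256)).astype(np.float32)
y_float, y_quant = run(float_model, x), run(quant_model, x)
rel_error = np.abs(y_float - y_quant).max() / np.abs(y_float).max()
print(f"float: {len(float_model)} bytes, quantized: {len(quant_model)} bytes, "
      f"max relative error: {rel_error:.4f}")
```

Storing 8-bit instead of 32-bit weights is what shrinks the file by roughly a factor of four here; the small output drift is the price paid for that compression.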

The converter takes the original TensorFlow model and produces an optimized FlatBuffer file with the .tflite extension. Conversion is done through the converter's Python API, which is documented on the TensorFlow Lite Converter landing page.
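Here is a minimal sketch of the Python API path from a TensorFlow SavedModel to a .tflite file, assuming TensorFlow 2.x. The toy `Scale` model and the temporary paths are hypothetical placeholders for your own trained model and output location.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


class Scale(tf.Module):
    """Hypothetical toy model standing in for a trained TensorFlow model."""

    def __init__(self):
        super().__init__()
        self.factor = tf.Variable(3.0)

    @tf.function(input_signature=[tf.TensorSpec([1, 2], tf.float32)])
    def __call__(self, x):
        return self.factor * x


workdir = tempfile.mkdtemp()
saved_model_dir = os.path.join(workdir, "saved_model")
module = Scale()
# Export a SavedModel with an explicit serving signature.
tf.saved_model.save(module, saved_model_dir,
                    signatures=module.__call__.get_concrete_function())

# Convert the SavedModel into a TFLite FlatBuffer and write it to disk.
converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
tflite_model = converter.convert()
tflite_path = os.path.join(workdir, "model.tflite")
with open(tflite_path, "wb") as f:
    f.write(tflite_model)

# Sanity check: the .tflite file reproduces the original model's output (3 * x).
interpreter = tf.lite.Interpreter(model_path=tflite_path)
interpreter.allocate_tensors()
x = np.array([[1.0, -2.0]], dtype=np.float32)
interpreter.set_tensor(interpreter.get_input_details()[0]["index"], x)
interpreter.invoke()
result = interpreter.get_tensor(interpreter.get_output_details()[0]["index"])
print(result)
```

The resulting model.tflite file is what gets copied to the edge device; the SavedModel itself stays on the development machine.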

The Fastest Way to Use TensorFlow Lite

To avoid developing everything around the Edge ML model from scratch, you can use a computer vision platform. Viso Suite is an end-to-end solution that uses TensorFlow Lite to build, deploy, and scale real-world applications.

The Viso Platform is optimized for Edge Computer Vision and provides full edge device management, an application builder, and fully integrated deployment tools. The enterprise-grade solution helps teams move faster from prototype to production, without the need to integrate and update separate computer vision tools manually.


What’s Next With TensorFlow Lite?

Overall, lightweight versions of popular machine learning libraries will greatly facilitate scalable computer vision solutions by moving image recognition capabilities from the cloud to edge devices connected to cameras. TFLite’s streamlined deployment capabilities let developers deploy models across a wide range of devices and platforms with good performance and user experience.

Because Google develops TensorFlow and uses it internally, TensorFlow Lite, its lightweight Edge ML variant, is likely to remain a popular choice for on-device inference.


To stay updated on the latest releases, news, and articles about TensorFlow Lite, follow the TensorFlow blog.