Automated object tracking is an important task in computer vision. Object trackers are an integral part of many computer vision applications that process the video stream of cameras. In this article, we will discuss state-of-the-art algorithms, different methods, applications, and software.

What is object tracking?

Object tracking is an application of deep learning where the program takes an initial set of object detections develops a unique identification for each of the initial detections and then tracks the detected objects as they move around frames in a video.

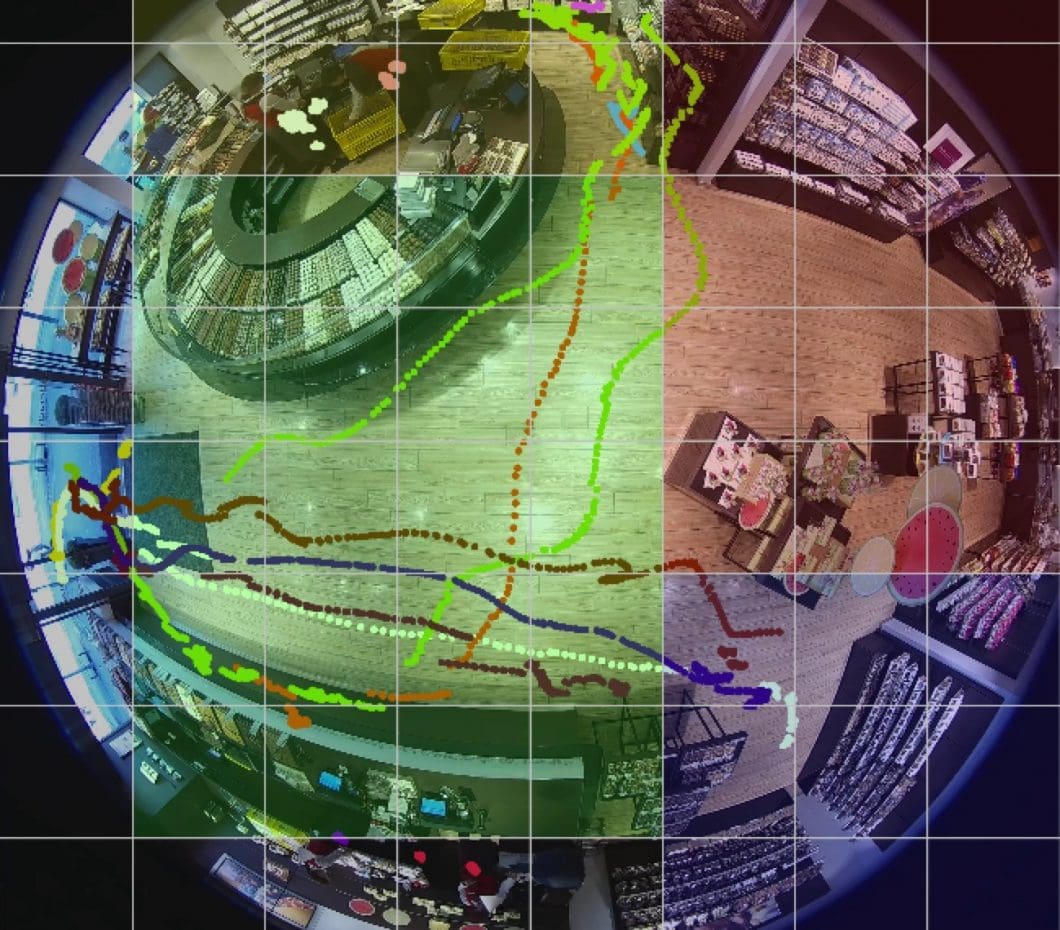

In other words, object tracking is the task of automatically identifying objects in a video and interpreting them as a set of trajectories with high accuracy.

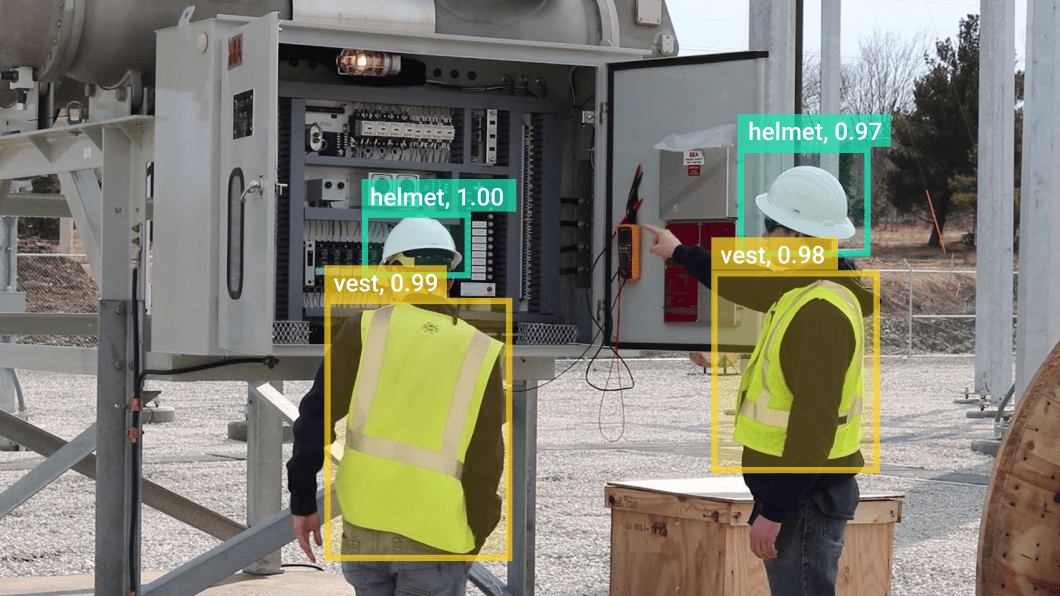

Often, there’s an indication around the object being tracked, for example, a surrounding square that follows the object, showing the user where the object is on the screen.

Uses and types of object tracking

Object tracking is used for a variety of use cases involving different types of input footage. Whether or not the anticipated input will be an image, video, or real-time video vs. a prerecorded video, impacts the algorithms used for creating applications.

The kind of input also impacts the category, use cases, and applications. Here, we will briefly describe a few popular uses and types of object tracking, such as video tracking, visual tracking, and image tracking.

Video tracking

Video tracking is an application of object tracking where moving objects are located within video information. Hence, video tracking systems can process live, real-time footage and also recorded video files.

The processes used to execute video tracking tasks differ based on which type of video input is targeted. This will be discussed more in-depth when we compare batch and online tracking methods later in this article.

Different video tracking applications play an important role in video analytics, in scene understanding for security and surveillance, military, transportation, and other industries. Today, a wide range of real-time computer vision and deep learning applications use video tracking methods. I recommend you to check out our extensive list of the most popular Computer Vision Applications.

Visual tracking

Visual tracking or visual target-tracking is a research topic in computer vision that is applied in a large range of everyday scenarios. The goal of visual tracking is to estimate the future position of a visual target that was initialized without the availability of the rest of the video.

Image tracking

Image tracking is meant for detecting two-dimensional images of interest in a given input. That image is then continuously tracked as they move in the setting. Hence, Image tracking is ideal for datasets with highly contrasting images (ex., black and white), asymmetry, few patterns, and multiple identifiable differences between the image of interest and other images in the image set.

Image tracking relies on computer vision to detect and augment images after image targets are predetermined. Explore our article about image augmentation.

Object tracking camera

Modern object-tracking methods can be applied to real-time video streams of basically any camera. Therefore, the video feed of a USB camera or an IP camera can be used to perform object tracking, by feeding the individual frames to a tracking algorithm. Frame skipping or parallelized processing are common methods to improve performance with real-time video feeds of one or multiple cameras.

Common challenges

The main challenges usually stem from issues in the image that make it difficult for models to effectively perform detections on the images. Here, we will discuss the few most common issues with the task of tracking objects and methods of preventing or dealing with these challenges.

Training and tracking speed

Algorithms for tracking objects are supposed to not only accurately perform detections and localize objects of interest but also do so in the least amount of time possible. Enhancing tracking speed is especially imperative for real-time object-tracking models.

To manage the time taken for a model to perform, the algorithm used to create the object tracking model needs to be either customized or chosen carefully. Fast R-CNN and Faster R-CNN can be used to increase the speed of the most common R-CNN approach.

Since CNNs (Convolutional Neural Networks) are commonly used for object detection, CNN modifications can be the differentiating factor between a faster model and a slower one. Design choices besides the detection framework also influence the balance between the speed and accuracy of an object detection model.

Background distractions

The backgrounds of input images or images used to train models also impact the accuracy of the model. Busy backgrounds of objects meant to be tracked can make it harder for small objects to be detected.

With a blurry or single-color background, it is easier for an AI system to detect and track objects. Backgrounds that are too busy, have the same color as the object, or are too cluttered can make it hard to track results for a small object or a lightly colored object.

Multiple spatial scales

Objects meant to be tracked can come in a variety of sizes and aspect ratios. These ratios can confuse the algorithms into believing objects are scaled larger or smaller than their actual size. The size misconceptions can negatively impact detection or detection speed.

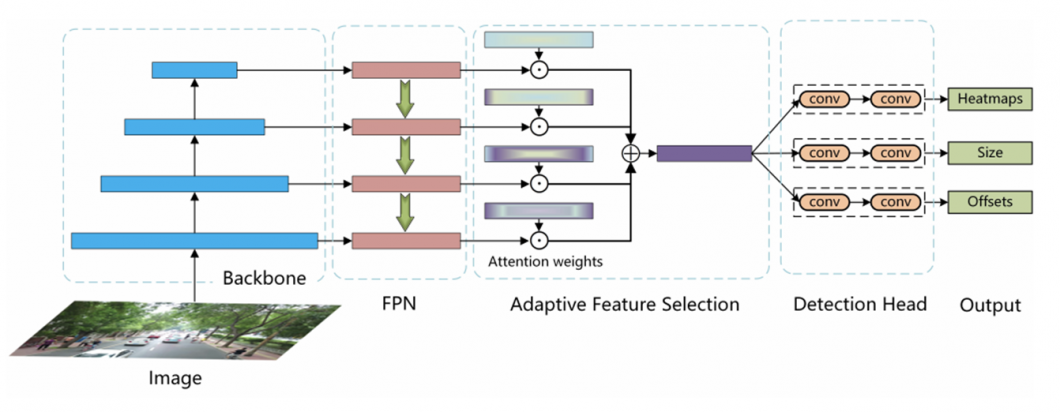

To combat the issue of varying spatial scales, programmers can implement techniques such as feature maps, anchor boxes, image pyramids, and feature pyramids.

- Anchor boxes: Anchor boxes are a compilation of bounding boxes that have a specified height and width. The boxes are meant to acquire the scale and aspect ratios of objects of interest. They are chosen based on the average object size of the objects in a given dataset. Anchor boxes allow various types of objects to be detected without having the bounding box coordinates altered during localization.

- Feature maps: A feature map is the output image of a layer when a Convolutional Neural Network (CNN) is used to capture the result of applying filters to that input image. Feature maps allow a deeper understanding of the features being detected by a CNN. Single-shot detectors have to take into account the issue of multiple scales because they detect objects with just one pass through a CNN framework. This will occur in a decrease for small images. Small images can lose signal during downsampling in the pooling layers, which is when the CNN was trained on a low subset of those smaller images. Even if the number of objects is the same, downsampling can occur because the CNN wasn’t able to detect the small images and count them toward the sample size. To prevent this, multiple feature maps can be used to allow single-shot detectors to look for objects within CNN layers, including earlier layers with higher-resolution images. Single-shot detectors (SSD) are still not an ideal option for small object tracking because of the difficulty they experience when detecting small objects. Tight groupings can prove especially difficult. For instance, overhead drone shots of a group of herd animals will be difficult to track using single-shot detectors.

- Image and feature pyramid representations: Feature pyramids, also known as multi-level feature maps because of their pyramidal structure, are a preliminary solution for object scale variation when using object tracking datasets. Hence, feature pyramids model the most useful information regarding objects of different sizes in a top-down representation and therefore make it easier to detect objects of varying sizes. Strategies such as image pyramids and feature pyramids are useful for preventing scaling issues. The feature pyramid is based on multi-scale feature maps, which use less computational energy than image pyramids. This is because image pyramids consist of a set of resized versions of one input image that are then sent to the detector at testing.

Occlusion

Occlusion has a lot of definitions. In medicine, occlusion is the “blockage of a blood vessel” due to the vessel merging to a close; in deep learning, it has a similar meaning. In AI vision tasks using deep learning, occlusion happens when multiple objects come too close together (merge) and overlap.

This causes issues for object tracking systems because the occluded objects are either tracked incorrectly or misidentified as a single object. The system can get confused and identify the initially tracked object as a new object.

Occlusion sensitivity prevents this misidentification by allowing the user to understand which parts of an image are the most important for the object-tracking system to classify. Occlusion sensitivity refers to a measure of the network’s sensitivity to occlusion in different data regions. It is done using small subsets of the original dataset.

Levels of object tracking

Object Tracking consists of multiple subtypes because it is such a broad application. Levels of object tracking differ depending on the number of objects being tracked.

Multiple Object Tracking (MOT)

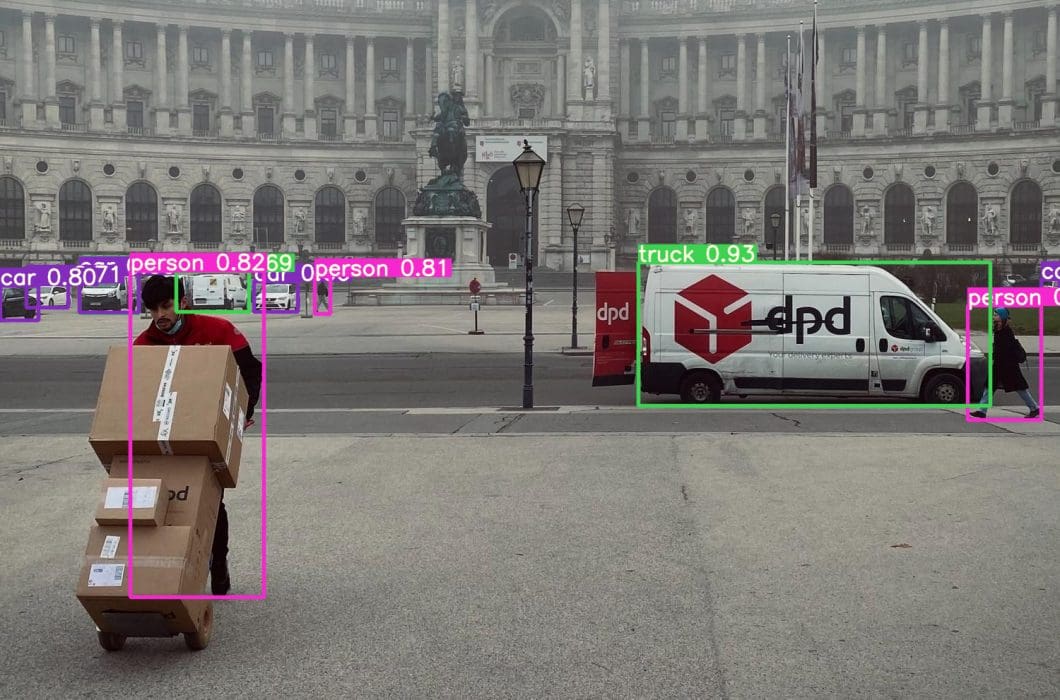

Multiple object tracking is defined as the problem of automatically identifying multiple objects in a video and representing them as a set of trajectories with high accuracy.

Hence, multi-object tracking aims to track more than one object in digital images. It is also called multi-target tracking, as it attempts to analyze videos to identify objects (“targets”) that belong to more than one predetermined class

Multiple object tracking is of great importance in autonomous driving, where it is used to detect and predict the behavior of pedestrians or other vehicles. Hence, the algorithms are often benchmarked on the KITTI tracking test. KITTI is a challenging real-world computer vision benchmark and image dataset, popularly used in autonomous driving.

Currently, the best-performing multiple object tracking algorithms are DEFT (88.95 MOTA, Multiple Object Tracking Accuracy), CenterTrack ( 89.44 MOTA), and SRK ODESA (90.03 MOTA).

Multiple Object Tracking (MOT) vs. General Object Detection

Object detection typically produces a collection of bounding boxes as outputs. Multiple object tracking often has little to no prior training regarding the appearance and number of targets. Bounding boxes are identified using their height, width, coordinates, and other parameters.

Meanwhile, MOT algorithms additionally assign a target ID to each bounding box. This target ID is known as a detection, and it is important because it allows the model to distinguish among objects within a class.

For example, instead of identifying all cars in a photo where multiple cars are present as just “car,” MOT algorithms attempt to identify different cars as being different from each other, rather than all falling under the “car” label. For a visual representation of this metaphor, refer to the image below.

![]()

Single Object Tracking

Single Object Tracking (SOT) creates bounding boxes that are given to the tracker based on the first frame of the input image. It is also sometimes known as Visual Object Tracking.

SOT implies that one singular object is tracked, even in environments involving other objects. Single Object Trackers are meant to focus on one given object rather than multiple. The object of interest is determined in the first frame, which is where the object to be tracked is initialized for the first time. The tracker is then tasked with locating that unique target in all other given frames.

SOT falls under the detection-free tracking category, which means that it requires manual initialization of a fixed number of objects in the first frame. These objects are then localized in consequent frames. A drawback of detection-free tracking is that it cannot deal with scenarios where new objects appear in the middle frames. SOT models should be able to track any given object.

Object tracking algorithms

Multiple Object Tracking (MOT) algorithm introduction

Most multiple object tracking algorithms incorporate an approach called tracking-by-detection. The tracking-by-detection method involves an independent detector that is applied to all image frames to obtain likely detections, and then a tracker, which is run on the set of detections. Hereby, the tracker attempts to perform data association (for example, linking the detections to obtain complete trajectories). The detections extracted from video inputs are used to guide the tracking process by connecting them and assigning identical IDs to bounding boxes containing the same target.

- Batch method. Batch-tracking algorithms use information from future video frames when deducing the identity of an object in a certain frame. Batch tracking algorithms use non-local information regarding the object. This methodology results in a better quality of tracking.

- Online method. While batch tracking algorithms access future frames, online tracking algorithms only use present and past information to come to conclusions regarding a certain frame.

Online tracking methods for performing MOT generally perform worse than batch methods because of the limitation of online methods staying constrained to the present frame. However, this methodology is sometimes necessary because of the use case.

For example, real-time problems requiring the tracking of objects, like navigation or autonomous driving, do not have access to future video frames, which is why online tracking methods are still a viable option.

Multiple Object Tracking algorithm stages

Most multiple object tracking algorithms contain a basic set of steps that remain constant as algorithms vary. Most of the so-called multi-target tracking algorithms share the following stages:

- Stage #1: Designation or detection: Targets of interest are noted and highlighted in the designation phase. The algorithm analyzes input frames to identify objects that belong to target classes. Bounding boxes are used to perform detections as part of the algorithm.

- Stage #2: Motion: Feature extraction algorithms analyze detections to extract appearance and interaction features. A motion predictor, in most cases, is used to predict subsequent positions of each tracked target.

- Stage #3: Recall: Feature predictions are used to calculate similarity scores between detection couplets. Those scores are then used to associate detections that belong to the same target. IDs are assigned to similar detections, and different IDs are applied to detections that are not part of pairs.

Some object-tracking models are created using these steps separately from each other, while others combine and use the steps in conjunction. These differences in algorithm processing create unique models where some are more accurate than others.

Software solution for object tracking

If you are looking to develop video analysis with object tracking for commercial projects, check out our enterprise computer vision platform, Viso Suite. It is used by large organizations worldwide to build, deploy, and scale object tracking systems with deep learning.

The end-to-end computer vision platform Viso Suite provides modular building blocks and visual development tools. This means that you can drag-and-drop modules to integrate any camera, deploy anywhere (Edge and Cloud), and use different machine learning models. To learn more, get the technical Whitepaper.

Popular object tracking algorithms

Convolutional Neural Networks (CNNs) remain the most used and reliable network for object tracking. However, multiple architectures and algorithms are being explored as well. Among these algorithms are Recurrent Neural Networks (RNNs), Autoencoders (AEs), Generative Adversarial Networks (GANs), Siamese Neural Networks (SNNs), and custom neural networks.

Although object detectors can be used to track objects if it is applied frame-by-frame, this is computationally limiting. Therefore, a rather inefficient method of performing object tracking. Instead, object detection should be applied once, and then the object tracker can handle every frame after the first. This is a more computationally effective and less cumbersome process of performing object tracking.

OpenCV object tracking

OpenCV object tracking is a popular method. This is because OpenCV has so many algorithms built in that are specifically optimized for the needs and objectives of object or motion tracking.

Specific OpenCV object trackers include the BOOSTING, MIL, KCF, CSRT, MedianFlow, TLD, MOSSE, and GOTURN trackers. Each of these trackers is best for different goals. For example, CSRT is best when the user requires a higher object tracking accuracy and can tolerate slower FPS throughput.

The selection of an OpenCV object tracking algorithm depends on the advantages and disadvantages of that specific tracker and the benefits:

- The KCF tracker is not as accurate compared to the CSRT but provides comparably higher FPS.

- The MOSSE tracker is very fast, but its accuracy is even lower than tracking with KCF. Still, if you are looking for the fastest object-tracking OpenCV method, MOSSE is a good choice.

- The GOTURN tracker is the only detector for deep learning-based object tracking with OpenCV. The original implementation of GOTURN is in Caffe, but it has been ported to the OpenCV Tracking API.

DeepSORT

DeepSORT is a good object tracking algorithm choice, and it is one of the most widely used object tracking frameworks. Appearance information is integrated within the algorithm, which vastly improves DeepSORT performance. Because of the integration, objects are trackable through longer periods of occlusion, reducing the number of identity switches.

For complete information on the inner workings of DeepSORT and specific algorithmic differences between DeepSORT and other algorithms, we check out the article “Object Tracking using DeepSORT in TensorFlow 2” by Anushka Dhiman.

Object tracking MATLAB

MATLAB is a numeric computing platform, which makes it different in its implementation compared to DeepSORT and OpenCV, but it is nevertheless a fine choice for visual tracking tasks.

The Computer Vision Toolbox in MATLAB provides video tracking algorithms, such as continuously adaptive mean shift (CAMShift) and Kanade-Lucas-Tomasi (KLT), for tracking a single object or for use as building blocks in a more complex tracking system.

MDNet

MDNet is a fast and accurate, CNN-based visual tracking algorithm inspired by the R-CNN object detection network. It functions by sampling candidate regions and passing them through a CNN. The CNN is typically pre-trained on a vast dataset and refined at the first frame in an input video.

Therefore, MDNet is most useful for real-time object tracking use cases. However, while it suffers from high computational complexity in terms of speed and space, it is still an accurate option.

The computation-heavy aspects of MDNet can be minimized by performing RoI (Region of Interest) Pooling. This is a relatively effective way of avoiding repetitive observations and accelerating inference.

What’s next?

Object Tracking is used to identify objects in a video and interpret them as a set of trajectories with high accuracy. Therefore, the main challenge is to hold a balance between computational efficiency and performance.

Check our article People Counting System: How To Make Your Own in Less Than 10 Minutes, where we detect and track people in real-time video footage.

If you enjoyed reading our complete guide to object tracking and want to dive into related topics, check out the following articles: