In 2022, Elon Musk presented the latest prototype of the Tesla bot humanoid robot. It belongs to a new class of humanoid robots designed for use in homes and factories. Many startups around the world are developing Artificial Intelligence (AI)-based robots for different purposes, mainly focused on social interaction.

The huge progress in AI, enabled by deep learning, improves robots’ interactions with people in all environments. Low-cost 3D sensors, driven by gaming platforms, have allowed researchers worldwide to develop 3D perception algorithms, which in turn advance the design and adoption of home and service robots.

Tesla Bot Specifications

The Tesla bot Optimus matters precisely because it is not revolutionary. Instead, it is an incremental technology development aimed at solving business problems.

At its introduction, the robot stood on a stand and waved to the audience, demonstrating the range of motion of its wrist and hand. Tesla CEO Elon Musk said that the prototype contained battery packs, actuators, and other parts, and that it had recently become ready to walk.

Hardware Design

Tesla Bot is approximately the same size and weight as a human: it weighs around 60 kg and stands about 170 cm tall. The robot can operate for several hours without recharging. In addition, Optimus can follow verbal instructions to perform various tasks, including manipulation jobs such as picking up items.

With this construction, it can lift up to 20 kg, but with its arms extended, the payload capacity drops to 10 kg. Optimus is designed with hands that resemble a person’s, providing a high level of dexterity and flexibility.

The Tesla bot walks on two legs and has a maximum speed of 8 km/h. Optimus incorporates 40 electromechanical actuators: 12 in the arms, 2 each in the neck and torso, 12 in the legs, and 12 in the hands.
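The hardware figures above can be cross-checked with a few lines of Python. This is a minimal sketch: the `OptimusSpec` class and its field names are hypothetical, and the numbers are simply the ones quoted in this article, not an official Tesla API or datasheet.

```python
from dataclasses import dataclass, field

@dataclass
class OptimusSpec:
    """Hypothetical container for the figures quoted in this article."""
    mass_kg: float = 60.0
    height_cm: float = 170.0
    max_speed_kmh: float = 8.0
    lift_close_kg: float = 20.0      # payload with arms close to the body
    lift_extended_kg: float = 10.0   # payload with arms stretched out
    actuators: dict = field(default_factory=lambda: {
        "arms": 12, "neck": 2, "torso": 2, "legs": 12, "hands": 12,
    })

    def total_actuators(self) -> int:
        # Sum of the per-body-part actuator counts
        return sum(self.actuators.values())

    def max_speed_ms(self) -> float:
        # 1 km/h = 1000 m / 3600 s
        return self.max_speed_kmh * 1000 / 3600

    def can_lift(self, load_kg: float, arms_extended: bool) -> bool:
        # Pick the payload limit that matches the arm posture
        limit = self.lift_extended_kg if arms_extended else self.lift_close_kg
        return load_kg <= limit

spec = OptimusSpec()
print(spec.total_actuators())                  # 40
print(round(spec.max_speed_ms(), 2))           # 2.22
print(spec.can_lift(15, arms_extended=True))   # False
```

The per-part counts add up to the 40 actuators stated above, and 8 km/h corresponds to roughly 2.2 m/s, about a brisk human jog.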

Additionally, the robot has a screen on its face to display information during cognitive interaction. Several existing Tesla technologies are built into the robot, such as the Full Self-Driving (FSD) computer, Autopilot cameras, a set of AI tools, neural network planning, and automatic object labeling.

Sensors and Capabilities

Sensors act as the robot’s eyes, ears, and skin, constantly gathering data about the environment. Here are some of the important sensor types that the Tesla bot possesses:

- Vision Sensors: To enhance perception, the robot is equipped with cameras, which enable precise tasks such as image recognition and environment navigation. Advanced versions could use specialized navigation sensors (e.g. infrared cameras, LiDAR, or ultrasonic sensors) to map their surroundings and move autonomously.

- Audio Sensors: Microphones enable robots to detect and interpret sounds. This is necessary for responding to voice commands, identifying potential hazards (like alarms), and even detecting user emotions based on vocal cues.

- Movement Sensors: Gyroscopes and accelerometers track the robot’s position and movement, allowing for precise control and stable operation.

- Touch-force Sensors: Physical-interaction sensors rely on piezoelectricity: mechanical deformation (pressure, bending) in a given direction generates an electrical charge, which the sensor measures.
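For the touch-force sensors, the direct piezoelectric effect is, to first order, linear: the generated charge is proportional to the applied force, Q = d33 × F, where d33 is the material’s charge coefficient along the loading axis. The sketch below simply inverts that relation. The coefficient used is a hypothetical placeholder, not a Tesla bot specification; real values depend on the material (quartz is on the order of a few pC/N, PZT ceramics hundreds of pC/N).

```python
def force_from_charge(charge_pc: float, d33_pc_per_n: float) -> float:
    """Estimate the applied force (N) from the measured charge (pC).

    Assumes the linear relation Q = d33 * F. Piezoelectric sensors respond to
    changes in force, so this only holds if the charge is read before it leaks away.
    """
    if d33_pc_per_n <= 0:
        raise ValueError("charge coefficient must be positive")
    return charge_pc / d33_pc_per_n

# Hypothetical fingertip sensor with d33 = 400 pC/N
print(force_from_charge(2000.0, 400.0))  # 5.0
```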

To perform actions, the Tesla bot needs actuators that translate the information received from sensors into physical movement. Besides hydraulic, magnetic, and thermal actuators, the most common types of actuators it could use are:

- Electrical Actuators: The most widespread type, using electric motors to power various robot functions, from leg movement to the complex arm movements of a robotic chef.

- Pneumatic Actuators: Powered by compressed air, these actuators offer high power and speed, often used in applications requiring strong bursts of force, such as in manufacturing environments.

Sensors and actuators must also operate together, which makes sensory fusion a critical concept in robotics: data from multiple sensors is combined and processed to build a complete understanding of the environment. This allows robots to make better-informed decisions and react effectively to complex situations.
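A classic, minimal instance of sensory fusion is the complementary filter, which combines the gyroscope and accelerometer described earlier into a single tilt estimate: the gyroscope is accurate over short intervals but drifts, while the accelerometer is noisy but drift-free. This is a textbook technique chosen for illustration; nothing in the source says Tesla uses it, and the blend factor `alpha` and the sample data are assumptions.

```python
import math

def complementary_filter(gyro_rates, accels, dt, alpha=0.98, angle0=0.0):
    """Fuse gyro rates (rad/s) with accelerometer readings (ax, az) in m/s^2.

    Returns the pitch estimate (rad) after each sample. alpha is a hypothetical
    tuning value: higher trusts the gyro more, lower trusts the accelerometer more.
    """
    angle = angle0
    estimates = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        accel_angle = math.atan2(ax, az)   # tilt implied by the gravity vector
        gyro_angle = angle + rate * dt     # integrate the angular rate
        angle = alpha * gyro_angle + (1 - alpha) * accel_angle
        estimates.append(angle)
    return estimates

# Robot standing still, tilted by 0.1 rad; the initial estimate (0.0) is wrong.
g = 9.81
n = 500
accels = [(g * math.sin(0.1), g * math.cos(0.1))] * n
gyro = [0.0] * n
est = complementary_filter(gyro, accels, dt=0.01)
print(round(est[-1], 3))  # 0.1
```

The accelerometer term slowly pulls the estimate toward the true tilt, while the gyroscope term keeps it smooth between samples.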

Deployment of Tesla Bots in Homes

One of the main applications is integrating the Tesla bot into households to perform specific tasks. The robot will build a complete understanding of its environment from its own perception capabilities, enhanced by shared 3D scans of the home.
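One common way to turn a shared 3D scan into something a mobile robot can plan over is to project the points onto a 2D occupancy grid: any point between floor level and the robot’s height marks its grid cell as an obstacle. The sketch below is a generic illustration, not Tesla’s mapping pipeline; the 5 cm cell size and the lower height cutoff are hypothetical, and the 1.7 m upper cutoff just reuses the robot height quoted earlier.

```python
import math

def occupancy_grid(points, cell_m=0.05, z_min=0.05, z_max=1.7):
    """Project 3D points (x, y, z in metres) onto a set of occupied 2D cells.

    Points below z_min (the floor) or above z_max (too high to collide with)
    are ignored. All thresholds are illustrative assumptions.
    """
    occupied = set()
    for x, y, z in points:
        if z_min <= z <= z_max:
            occupied.add((math.floor(x / cell_m), math.floor(y / cell_m)))
    return occupied

def is_free(occupied, x, y, cell_m=0.05):
    """True if the cell containing (x, y) holds no obstacle points."""
    return (math.floor(x / cell_m), math.floor(y / cell_m)) not in occupied

scan = [
    (1.00, 2.00, 0.00),   # floor point: ignored
    (1.02, 2.01, 0.75),   # table edge: obstacle
    (0.40, 0.40, 2.40),   # ceiling lamp: above the robot, ignored
]
grid = occupancy_grid(scan)
print(grid)                       # {(20, 40)}
print(is_free(grid, 1.03, 2.04))  # False
```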

Moreover, the bot will learn the profiles of household members, recognizing their habits, hobbies, and preferences, and fostering personalized interactions.

Tesla Bot’s AI and Interaction Capabilities

Communication between humans and robots will use advanced verbal interfaces powered by Large Language Models (LLMs), making it fluent and intuitive. The Tesla bot will also interact with nearby edge devices, facilitating access to servers or cloud-based resources.

The robot will receive regular model updates, keeping it current with the latest developments and ensuring high performance and adaptability:

- Natural Human-Computer Interaction (HCI): Interaction with the Tesla bot will be natural and intuitive, with LLMs playing a crucial role. Conversation will be in natural language, and the LLM will interpret the user’s intent and provide timely information or perform tasks.

- Non-verbal Communication: With the use of Large AI Models (LAMs), the robot will learn to decipher body language and facial expressions, creating a more engaging user experience.

- AI Reasoning: Thanks to its AI reasoning capabilities, the bot will be able to solve problems and make sound decisions, including planning and performing difficult tasks. It can adapt to new events and learn from experience to improve its performance.

- Incorporating Human Feedback: By utilizing reinforcement learning, the bot can learn through trial and error, guided by human feedback. This will allow the bot to personalize its behavior to each user’s preferences and continuously improve its performance.
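Learning from human feedback can be illustrated, in heavily simplified form, as a multi-armed bandit: the robot tries a behavior, the user rates it, and the robot gradually favors the behaviors that earn the best ratings. This epsilon-greedy sketch is a stand-in chosen for clarity, not the RLHF pipeline used to train LLMs and not anything Tesla has described; the behavior names, the rating scale, and the exploration rate are hypothetical.

```python
import random

class FeedbackBandit:
    """Epsilon-greedy bandit that learns a user's preferred behavior from ratings.

    Hypothetical, simplified stand-in for learning from human feedback.
    """

    def __init__(self, behaviors, epsilon=0.1, seed=None):
        self.behaviors = list(behaviors)
        self.epsilon = epsilon                           # fraction of exploratory choices
        self.counts = {b: 0 for b in self.behaviors}
        self.values = {b: 0.0 for b in self.behaviors}  # mean rating per behavior
        self.rng = random.Random(seed)

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.behaviors)  # explore: try any behavior
        return max(self.behaviors, key=lambda b: self.values[b])  # exploit best so far

    def update(self, behavior, rating):
        """Fold a human rating (0 = disliked, 1 = liked) into the running mean."""
        self.counts[behavior] += 1
        self.values[behavior] += (rating - self.values[behavior]) / self.counts[behavior]

# Simulated user who only likes the robot to tidy up quietly.
bandit = FeedbackBandit(["tidy_with_music", "tidy_quietly", "tidy_later"],
                        epsilon=0.2, seed=0)
for _ in range(500):
    behavior = bandit.choose()
    bandit.update(behavior, 1.0 if behavior == "tidy_quietly" else 0.0)
print(max(bandit.values, key=bandit.values.get))  # tidy_quietly
```

The small exploration rate keeps the robot occasionally trying alternatives, so it can notice if a user’s preferences change.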

Challenges in Home Applications

Adopting the Tesla bot in our homes brings both appealing possibilities and significant challenges. Here, we present the obstacles that must be resolved.

- Computation Resources: The AI-empowered Tesla robot needs significant computational resources. One possibility is edge computing, where the robot carries its own processing power on board. The other option is cloud computing, so designers need to balance the two to ensure optimal robot performance.

- Communication: The robot requires reliable, stable network connections to exchange data and receive instructions efficiently. However, limitations in home networks can lead to delays, dropped connections, and poor performance.

- Robot Safety, Privacy, and Ethics: As robots become more sophisticated, ethical considerations are becoming increasingly important. Scientists need to resolve issues of safety, privacy, data security, and potential bias in AI algorithms.

Additionally, testing robots for potential unwanted outcomes is crucial to ensure their safe and responsible operation within our homes.
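The edge-versus-cloud balance from the Computation Resources item, together with the unreliable home networks from the Communication item, can be reduced to a simple latency comparison. A minimal sketch, assuming the robot can estimate its on-board inference time, the server’s inference time, the network round-trip time, and the uplink bandwidth; the function name and all numbers are hypothetical.

```python
from typing import Optional

def choose_inference_target(edge_ms: float, cloud_ms: float, payload_mbit: float,
                            bandwidth_mbps: Optional[float],
                            rtt_ms: Optional[float]) -> str:
    """Return "edge" or "cloud", whichever is expected to answer sooner.

    edge_ms / cloud_ms: model run time on the robot / on the server.
    payload_mbit: size of the sensor data to upload, in megabits.
    bandwidth_mbps, rtt_ms: current link estimates; None means the link is down.
    """
    if bandwidth_mbps is None or rtt_ms is None or bandwidth_mbps <= 0:
        return "edge"  # dropped connection: fall back to on-board compute
    upload_ms = payload_mbit / bandwidth_mbps * 1000
    cloud_total_ms = rtt_ms + upload_ms + cloud_ms
    return "cloud" if cloud_total_ms < edge_ms else "edge"

print(choose_inference_target(120, 20, 2.0, 100.0, 30))   # cloud (70 ms vs 120 ms)
print(choose_inference_target(120, 20, 2.0, 10.0, 30))    # edge (250 ms vs 120 ms)
print(choose_inference_target(120, 20, 2.0, None, None))  # edge (no connection)
```

Keeping an on-board fallback means a dropped home connection degrades performance rather than stopping the robot.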

Tesla Bot Deployment in Manufacturing – Factories

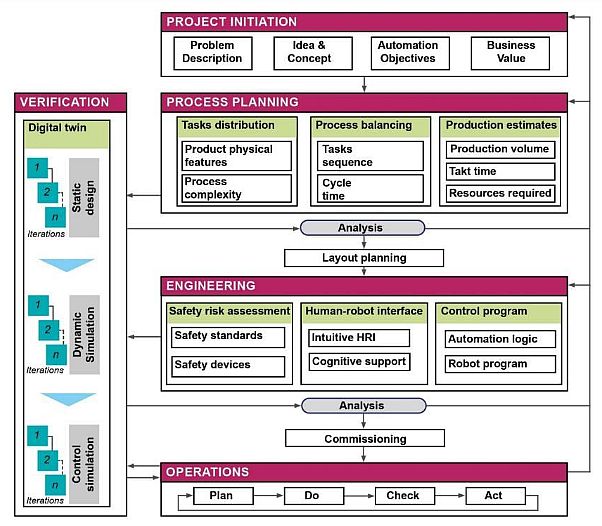

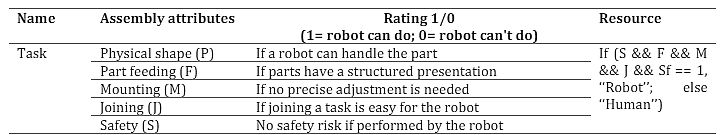

Researchers A. Malik et al. (2023) examined the application of the Tesla bot in an industrial (manufacturing) environment. Their framework identifies the tasks that the robot can successfully perform.

They developed an initial concept of the automation system and documented the automated tasks, which helped them make an initial financial assessment. The deliverable from this step was a list of tasks and objectives for the robot’s involvement, together with success criteria for the automation project.

Whether a task is suitable for the Tesla bot depends on the physical characteristics of the parts and components involved. These characteristics include shape and material features, task variability, part presentation, and injury risks to humans.
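The criteria above could be turned into a simple screening score. The weights, the 0-to-1 scale, and the threshold below are illustrative assumptions, not values from Malik et al. (2023); a real assessment would calibrate them against the project’s success criteria.

```python
# Hypothetical weights for the criteria listed above (they sum to 1.0).
WEIGHTS = {
    "shape_and_material": 0.3,   # rigid, easy-to-grip parts score high
    "low_variability": 0.25,     # repetitive, uniform tasks score high
    "part_presentation": 0.25,   # parts arrive in known positions
    "human_injury_risk": 0.2,    # tasks risky for humans score high
}

def suitability(scores: dict, threshold: float = 0.6) -> tuple:
    """Combine 0..1 criterion scores into a weighted total and a robot/manual verdict."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    total = sum(WEIGHTS[k] * scores[k] for k in WEIGHTS)
    return total, total >= threshold

score, robotic = suitability({
    "shape_and_material": 0.9,
    "low_variability": 0.8,
    "part_presentation": 0.7,
    "human_injury_risk": 0.5,
})
print(round(score, 3), robotic)  # 0.745 True
```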

Case Study and Simulation

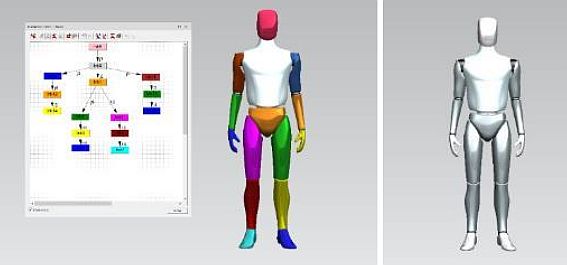

The robot’s duties included various arm-based automation tasks, since the robot has: i) two arms; ii) mobility; iii) the ability to collaborate with humans; and iv) safety features. In the simulation, each arm of the Tesla bot has six degrees of freedom, a payload capacity of 10 kg, and a reach of 1,000 mm. The robot also features a five-finger, human-like hand gripper.

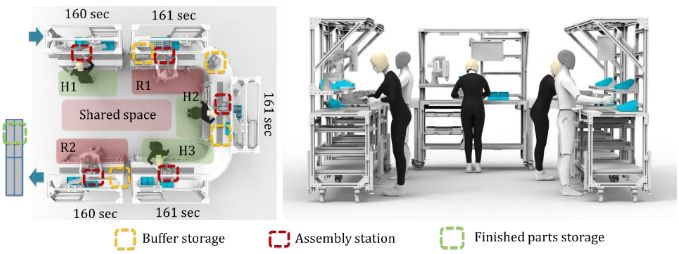

The proposed workplace contained five workstations: robots operated two, and the other three were manual. Once initial task identification has classified each task as robotic or manual, a refinement step balances the process.

Lean assembly balancing means assigning tasks to workstations so that no workstation becomes a bottleneck. It minimizes idle time and evens out busy time across workstations. The figure and the diagram in this section show the proposed assembly workplace.
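Line balancing can be sketched with standard textbook metrics: the cycle time of a serial line is set by its slowest (bottleneck) station, idle time is the gap between each station’s busy time and that cycle time, and balance efficiency is total work divided by (number of stations × cycle time). The greedy assignment rule and the station and task times below are hypothetical, not figures or methods from the study; the five stations only mirror its layout of two robotic and three manual workstations.

```python
def balance_metrics(station_times):
    """Return (cycle_time, total_idle_time, efficiency) for a serial line."""
    cycle_time = max(station_times)                     # bottleneck sets the pace
    idle = sum(cycle_time - t for t in station_times)   # waiting time per cycle
    efficiency = sum(station_times) / (len(station_times) * cycle_time)
    return cycle_time, idle, efficiency

def assign_tasks(task_times, takt_time):
    """Greedy balancing: fill stations with tasks, in order, up to the takt time.

    Keeping the input order is a crude stand-in for precedence constraints.
    """
    stations, current, load = [], [], 0.0
    for name, t in task_times:
        if t > takt_time:
            raise ValueError(f"task {name} exceeds takt time")
        if load + t > takt_time:       # station full: open a new one
            stations.append(current)
            current, load = [], 0.0
        current.append(name)
        load += t
    if current:
        stations.append(current)
    return stations

# Hypothetical busy times (s) for 5 stations: R = robotic, M = manual
times = {"R1": 52, "R2": 48, "M1": 60, "M2": 55, "M3": 45}
ct, idle, eff = balance_metrics(list(times.values()))
print(ct, idle, round(eff, 3))  # 60 40 0.867

tasks = [("a", 20), ("b", 25), ("c", 30), ("d", 10), ("e", 40)]
print(assign_tasks(tasks, takt_time=50))  # [['a', 'b'], ['c', 'd'], ['e']]
```

Here station M1 is the bottleneck; shifting work away from it would raise efficiency toward 1.0.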

The simulation helped the researchers with the following:

- Design of the assembly workplaces and process balancing.

- Cycle time estimation for robotic tasks.

- Validation of robot kinematics to perform given assembly tasks.

- Economic estimates of using robots in assembly lines.
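Kinematic validation asks whether the arm can actually reach each assembly point. A real check would use the full six-degree-of-freedom arm model; the sketch below simplifies it to a planar two-link arm whose link lengths (hypothetically 0.5 m each) add up to the 1,000 mm reach quoted above, and solves its inverse kinematics in closed form, then confirms the answer with forward kinematics.

```python
import math

def two_link_ik(x, y, l1=0.5, l2=0.5):
    """Closed-form IK for a planar 2-link arm (one of the two elbow solutions).

    Returns (shoulder, elbow) angles in radians, or None if (x, y) is out of reach.
    Link lengths are illustrative assumptions.
    """
    r2 = x * x + y * y
    cos_elbow = (r2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        return None  # target outside the region the arm can reach
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow

def forward(shoulder, elbow, l1=0.5, l2=0.5):
    """Forward kinematics, used to verify an IK solution."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y

sol = two_link_ik(0.6, 0.3)
print(sol is not None)          # True
print(two_link_ik(1.2, 0.0))    # None (beyond the 1.0 m reach)
```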

The researchers showed that using the Tesla Optimus bot can reduce manual assembly time by up to 75%. However, because of task interdependence, they automated only 60% of the total tasks in the study. They also automated the material handling tasks, which account for a total of 60 minutes per shift.

Potential Manufacturing Applications

Researchers envision the following Tesla bot manufacturing applications:

- Pick and place tasks: This is an important task group for robot applications. It involves picking the required parts from a source location and moving them to the place of action, where they are then placed in the required orientation.

- Material handling: Lean practices treat material handling as a non-value-adding activity that should be minimized, which is sometimes difficult. Because of the need for flexibility, these tasks have increasingly been assigned to mobile robots in recent years.

- Assembly and screw driving: Many industrial products involve intensive screw driving and other fastening operations. For example, a typical wind turbine has more than 6,000 bolts. Screw driving, as a repetitive process, is therefore well suited to a humanoid robot.
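The pick-and-place sequence described in the first item can be expressed as an ordered list of motion primitives. This is a hypothetical, simplified sketch: positions are (x, y, z) in metres, the 10 cm approach height is an assumption, orientation is reduced to a single yaw angle, and the step names are illustrative rather than part of any Tesla interface.

```python
from typing import List, Tuple

Pose = Tuple[float, float, float, float]  # x, y, z (m) and yaw (rad)

def pick_and_place_plan(source, target, yaw_target,
                        approach_h=0.10) -> List[Tuple[str, Pose]]:
    """Return the (step, pose) sequence for one pick-and-place cycle."""
    sx, sy, sz = source
    tx, ty, tz = target
    return [
        ("approach_pick", (sx, sy, sz + approach_h, 0.0)),        # hover above part
        ("descend_and_grasp", (sx, sy, sz, 0.0)),                 # close the gripper
        ("lift", (sx, sy, sz + approach_h, 0.0)),                 # clear the source
        ("transport_and_orient", (tx, ty, tz + approach_h, yaw_target)),
        ("descend_and_release", (tx, ty, tz, yaw_target)),        # place in orientation
        ("retreat", (tx, ty, tz + approach_h, yaw_target)),       # back away safely
    ]

plan = pick_and_place_plan(source=(0.4, -0.2, 0.8), target=(0.5, 0.3, 0.9),
                           yaw_target=1.57)
for step, pose in plan:
    print(step, pose)
```

Approaching and retreating vertically keeps the gripper clear of neighbouring parts; a real controller would also plan collision-free paths between these waypoints.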

Implications for Human-Computer Interaction

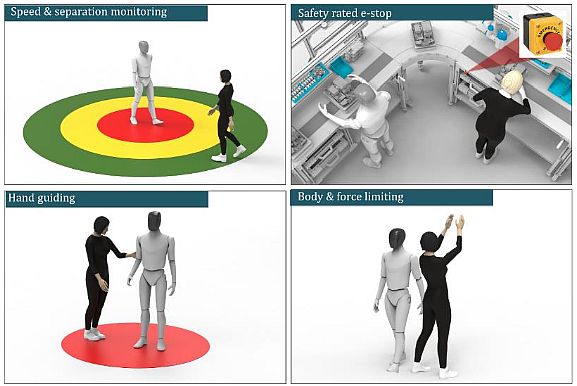

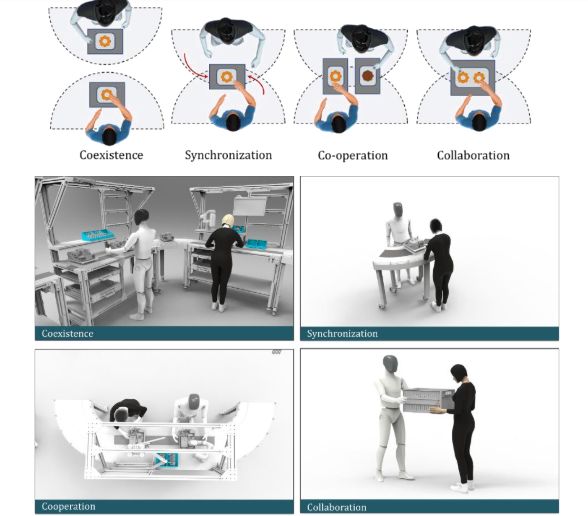

The modes of interaction between humans and robots in a team depend on how they share time and space. In the case of Tesla Bot, there are four possible forms of interaction:

- Coexistence: Humans and robots share the workspace, but each has its own independent tasks. Because it requires no communication between humans and robots, this form of interaction is useful in lean assembly workplaces.

- Cooperation: Humans and robots share a common workspace and are both active at the same time, but they work on separate tasks.

- Collaboration: This is the highest level of interaction, involving the most risk and the most obstacles to achieve. Humans and robots actively work together to accomplish a difficult task; for example, they both carry a load, or the operator holds a part while the bot performs a task on it.

- Synchronized: Humans and robots work on the same workpiece but take turns, so only one of them is active at a given time. For example, on an assembly line, a robot lifts heavy objects, and humans then perform skilled tasks on them.
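The four modes differ along three dimensions: whether humans and robots share the workspace, whether they are active at the same time, and whether they act on the same task or workpiece. One possible encoding of the descriptions above as a decision function follows; the boolean inputs are a simplification chosen for illustration, and a real deployment would also consider distances, speeds, and safety rules.

```python
from enum import Enum

class Mode(Enum):
    COEXISTENCE = "coexistence"
    COOPERATION = "cooperation"
    COLLABORATION = "collaboration"
    SYNCHRONIZED = "synchronized"

def interaction_mode(shared_workspace: bool, simultaneous: bool,
                     shared_task: bool) -> Mode:
    """Classify a human-robot team scenario using the four definitions above."""
    if shared_task:
        # Same workpiece: together at once = collaboration, taking turns = synchronized
        return Mode.COLLABORATION if simultaneous else Mode.SYNCHRONIZED
    if shared_workspace and simultaneous:
        return Mode.COOPERATION  # common workspace, both active, separate tasks
    return Mode.COEXISTENCE      # independent tasks without active sharing

print(interaction_mode(True, True, True).value)    # collaboration
print(interaction_mode(True, False, True).value)   # synchronized
print(interaction_mode(True, True, False).value)   # cooperation
print(interaction_mode(True, False, False).value)  # coexistence
```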

What’s Next for Tesla Bots?

Because of its flexibility, the Optimus Tesla robot can become a ubiquitous part of homes and manufacturing environments. Progress in autonomous robots will create intelligent assistants for home and work that understand the individual needs of their users and thereby improve the human-robot relationship as a whole.
