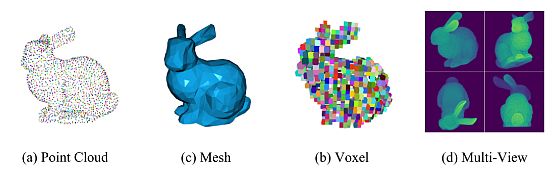

In many computer vision applications, engineers gather data manually. Point cloud processing instead works with a set of tiny points in 3D space, e.g., points captured by a 3D laser scanner. Each point in the cloud contains rich information, such as three-dimensional coordinates (x, y, z), color information (r, g, b), surface normal vectors, etc.

These data represent the target’s spatial distribution and surface characteristics. Manual gathering, by contrast, often leads to inaccurate or missing data, more time spent on site, and higher costs for the customer.

What are Point Clouds?

Point cloud data is a comprehensive digital representation of a three-dimensional object. High-tech instruments such as 3D scanners, LiDAR, and photogrammetry software measure the x, y, and z coordinates of points on the object’s surface. Each of these points tells us about the object’s shape and structure.

For example, when scanning a building, each point in the cloud represents a real point on a wall, window, stairway, or any other surface the laser beam meets. The scanner combines the vertical and horizontal angles of the laser beam with the measured distance to the surface to calculate the x, y, and z coordinates. Each point thus carries a set of 3D coordinates, often together with RGB and intensity data.
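The coordinate calculation can be sketched as a conversion from a range and two angles to Cartesian coordinates. This is a minimal illustration, not any particular scanner's firmware: the function name `polar_to_cartesian` and the angle convention (azimuth measured in the x-y plane from the x-axis, elevation measured up from that plane) are assumptions made for the example.

```python
import math

def polar_to_cartesian(distance_m, horizontal_deg, vertical_deg):
    """Convert one range/angle measurement to (x, y, z) in the scanner frame.

    Assumed convention: the horizontal angle (azimuth) is measured in the
    x-y plane from the x-axis; the vertical angle (elevation) is measured
    upward from that plane.
    """
    azimuth = math.radians(horizontal_deg)
    elevation = math.radians(vertical_deg)
    # Project the measured range onto the horizontal plane first.
    horizontal_range = distance_m * math.cos(elevation)
    x = horizontal_range * math.cos(azimuth)
    y = horizontal_range * math.sin(azimuth)
    z = distance_m * math.sin(elevation)
    return (x, y, z)

# A surface hit 10 m away, 90 degrees to the left and 30 degrees upward.
point = polar_to_cartesian(10.0, 90.0, 30.0)
```

Repeating this conversion for every laser return yields the list of (x, y, z) points that makes up the cloud.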

A denser cloud captures finer characteristics, such as texture and tiny features. Zooming in on a point cloud reveals its individual points. A region with more points shows the scanned environment more clearly.

How are Point Clouds Generated?

Point cloud creation means capturing an area by taking many point measurements, typically with a 3D laser scanner. You can quickly build a cloud using a mobile mapping device, a static LiDAR scanner, or a LiDAR-equipped mobile phone.

Static Scanning

Static scanning uses a Terrestrial Laser Scanner (TLS) mounted on a tripod. It generates the point cloud by scanning from a series of overlapping locations, ensuring it covers all angles of the mapped area. In the post-processing phase, the individual datasets are merged to create one accurate point cloud.
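The merging step can be illustrated with a small sketch. It assumes the pose of each tripod position (a rotation about the vertical axis plus a translation) is already known, which in practice is what registration has to estimate; `transform_scan` and `merge_scans` are hypothetical helper names.

```python
import math

def transform_scan(points, yaw_deg, translation):
    """Rotate points about the z-axis by yaw_deg, then translate them."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in points]

def merge_scans(scans_with_poses):
    """Bring every scan into the common frame and concatenate the results."""
    merged = []
    for points, yaw_deg, translation in scans_with_poses:
        merged.extend(transform_scan(points, yaw_deg, translation))
    return merged

scan_a = [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]  # defines the common frame
scan_b = [(1.0, 0.0, 0.0)]                   # tripod moved 5 m along x, turned 90 degrees
merged = merge_scans([
    (scan_a, 0.0, (0.0, 0.0, 0.0)),
    (scan_b, 90.0, (5.0, 0.0, 0.0)),
])
```

A real pipeline would use a full 3D rotation and would usually remove duplicate points in the overlapping regions after merging.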

Mobile Mapping

Mobile mapping follows a similar process, although less accurately, and it conducts scans on the move. The scanner is mounted on a vehicle or drone so that it can cover large areas quickly. As with static scanning, post-processing merges the points from multiple scans into a single cloud.

Point Cloud Processing Methods

Computer vision algorithms provide point cloud processing functionalities that include: point cloud registration, shape fitting to 3-D point clouds, and the ability to read, write, store, display, and compare point clouds.
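As a small illustration of reading and writing point clouds, the sketch below serializes points with color to ASCII PLY text and parses them back. PLY is chosen here only as an example format; `to_ply` and `from_ply` are hypothetical helpers, and `from_ply` handles only the text produced by `to_ply`, not PLY files in general.

```python
def to_ply(points):
    """Serialize (x, y, z, r, g, b) tuples as ASCII PLY text."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    rows = [f"{x} {y} {z} {r} {g} {b}" for x, y, z, r, g, b in points]
    return "\n".join(header + rows) + "\n"

def from_ply(text):
    """Parse the ASCII PLY text produced by to_ply (not a general PLY reader)."""
    lines = text.splitlines()
    start = lines.index("end_header") + 1  # vertex rows follow the header
    points = []
    for line in lines[start:]:
        if not line.strip():
            continue
        x, y, z, r, g, b = line.split()
        points.append((float(x), float(y), float(z), int(r), int(g), int(b)))
    return points

cloud = [(0.0, 0.0, 0.0, 255, 0, 0), (1.5, 2.0, -0.5, 0, 255, 0)]
restored = from_ply(to_ply(cloud))
```

Production code would normally rely on an established point cloud library rather than a hand-written parser.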

Point cloud processing methods build a map from registered point clouds, optimize the map to correct drift, and localize within the map. Many of these methods use deep learning, in particular Convolutional Neural Networks (CNNs), to process point clouds.

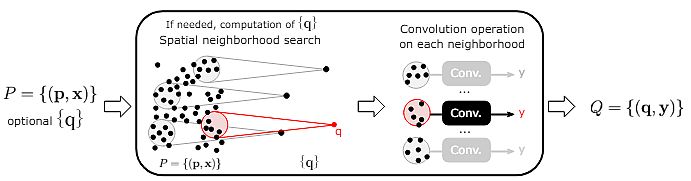

Deep Learning Convolutional-Based Method

Processing irregular, unstructured point cloud data remains a formidable challenge, despite the success of deep learning in processing structured 2D image data. Motivated by the remarkable success of CNNs on two-dimensional images, many studies attempt to use 3-dimensional CNNs to learn a volumetric representation of 3-dimensional point clouds.
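A volumetric representation is typically obtained by voxelizing the cloud into a regular grid that a 3D CNN can consume. The sketch below builds a binary occupancy grid; the function name `voxelize`, the fixed grid shape, and the choice to anchor the grid at the cloud's minimum corner are assumptions made for illustration.

```python
def voxelize(points, voxel_size, grid_shape):
    """Return a dense occupancy grid: grid[i][j][k] is 1 if any point falls in that voxel."""
    nx, ny, nz = grid_shape
    # Anchor the grid at the minimum corner of the cloud.
    min_x = min(p[0] for p in points)
    min_y = min(p[1] for p in points)
    min_z = min(p[2] for p in points)
    grid = [[[0] * nz for _ in range(ny)] for _ in range(nx)]
    for x, y, z in points:
        i = int((x - min_x) // voxel_size)
        j = int((y - min_y) // voxel_size)
        k = int((z - min_z) // voxel_size)
        # Points outside the fixed grid are simply dropped in this sketch.
        if 0 <= i < nx and 0 <= j < ny and 0 <= k < nz:
            grid[i][j][k] = 1
    return grid

points = [(0.0, 0.0, 0.0), (0.1, 0.1, 0.1), (0.9, 0.9, 0.9), (0.5, 0.0, 0.0)]
grid = voxelize(points, voxel_size=0.5, grid_shape=(2, 2, 2))
occupied = sum(v for plane in grid for row in plane for v in row)
```

The example also shows the cost of this representation: two nearby points collapse into one voxel, and most voxels of a real scene stay empty.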

Boulch et al. (2020) proposed a generalization of discrete CNNs. They intended to process the point cloud by replacing discrete kernels with continuous ones. This approach is straightforward and allows the use of variable point cloud sizes for designing neural networks similar to 2D CNNs.

- They conducted experiments with multiple architectures, emphasizing the flexibility of their approach. They obtained results competitive with other methods on shape classification, part segmentation, and semantic segmentation of large-scale point clouds.

- For the comparison with PointCNN, the researchers used the network design and the code version available in the official PointCNN repository at the time of research.

- They conducted trials on the ModelNet40 classification dataset for both frameworks. Their model trains around 30% faster than PointCNN, while inference speeds are comparable.

- The difference was more significant on the ShapeNet segmentation dataset. For a batch size of 4, their segmentation framework was 5 times faster for training and 3 times faster for testing.
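The idea of a continuous kernel can be sketched as follows. In a discrete convolution, each kernel weight is tied to a fixed grid offset; here, each kernel element has a position in continuous space, and a neighbor contributes to it according to how close the neighbor's relative offset is to that position. This is a simplified illustration and not the authors' implementation: the function `continuous_conv` is hypothetical, features are scalars, and the closeness function is a fixed Gaussian chosen for the example rather than a learned function.

```python
import math

def continuous_conv(center, neighbors, features, kernel_positions, kernel_weights, sigma=0.5):
    """One output value of a point convolution with a continuous kernel.

    kernel_positions are offsets relative to `center`; kernel_weights are the
    weights attached to them. The Gaussian closeness term is an assumption of
    this sketch.
    """
    total = 0.0
    for point, feature in zip(neighbors, features):
        offset = tuple(p - c for p, c in zip(point, center))
        for k_pos, k_weight in zip(kernel_positions, kernel_weights):
            sq_dist = sum((o - k) ** 2 for o, k in zip(offset, k_pos))
            total += k_weight * feature * math.exp(-sq_dist / (2 * sigma ** 2))
    # Normalizing by the neighbor count lets the neighborhood size vary.
    return total / len(neighbors)

center = (0.0, 0.0, 0.0)
neighbors = [(0.1, 0.0, 0.0), (0.0, 0.2, 0.0), (-0.1, 0.0, 0.1)]
features = [1.0, 0.5, 2.0]
kernel_positions = [(0.1, 0.0, 0.0), (0.0, 0.1, 0.0)]
kernel_weights = [1.0, -1.0]
response = continuous_conv(center, neighbors, features, kernel_positions, kernel_weights)
```

Because the sum runs over whatever neighbors are present, the same kernel applies to neighborhoods of any size and in any order, which is what makes variable point cloud sizes workable.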

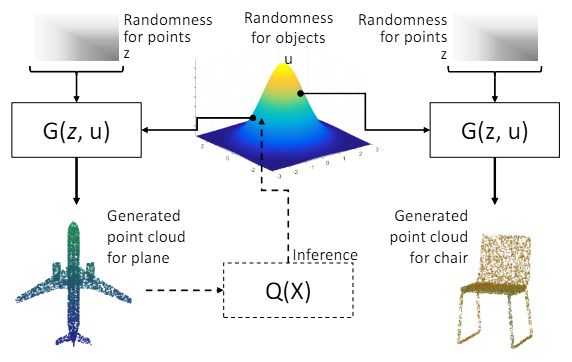

GAN-based Point Cloud Processing

Generative Adversarial Networks (GANs) have demonstrated promising results in learning different types of complex data distributions. However, researchers showed that a straightforward extension of existing GAN techniques is not applicable to point clouds, because the constraint required for the discriminator is undefined for set data.

Li et al. (2018) proposed a two-fold modification of the GAN learning algorithm to process point clouds (PC-GAN). Firstly, they adopted a hierarchical and interpretable sampling procedure combining concepts from implicit generative models and hierarchical Bayesian modeling.

A key part of their method is training a posterior inference network for the hidden variables.

- Secondly, instead of relying only on the Wasserstein GAN objective, they proposed an intermediate ("sandwiching") objective, which results in a tighter Wasserstein distance estimate.

- They validated their results on the ModelNet40 benchmark dataset. They found that PC-GAN trained with the intermediate objective outperforms existing methods on test data, as measured by the distance between generated point clouds and real meshes.

- Moreover, PC-GAN learns versatile latent representations of point clouds as a byproduct. On an object recognition task, these representations achieve performance competitive with other unsupervised learning methods.

- Lastly, they also studied generating unseen classes of objects and transforming images into point clouds, demonstrating the strong generalization capabilities of PC-GAN.
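Evaluating a generator requires some notion of distance between a generated cloud and a reference shape. One common choice for comparing two point sets is the Chamfer distance, sketched below. It is shown only to make the idea concrete and is not necessarily the metric used by the authors, who compare against meshes; the function name `chamfer_distance` is an assumption.

```python
def chamfer_distance(cloud_a, cloud_b):
    """Symmetric Chamfer distance using squared Euclidean distances."""
    def sq_dist(p, q):
        return sum((a - b) ** 2 for a, b in zip(p, q))

    def mean_nearest(source, target):
        # Average squared distance from each source point to its nearest target point.
        return sum(min(sq_dist(p, q) for q in target) for p in source) / len(source)

    return mean_nearest(cloud_a, cloud_b) + mean_nearest(cloud_b, cloud_a)

generated = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
distance = chamfer_distance(generated, reference)
```

The distance does not depend on the order of points in either set, which suits point clouds because they are unordered. This brute-force version is quadratic in the number of points, so larger clouds would need a spatial index.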

Transformer-based Point Cloud Processing

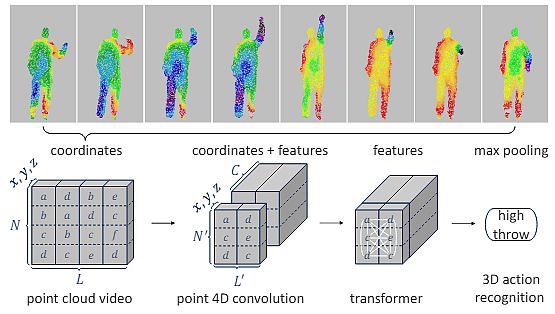

Transformer models have gained significant interest in 3D point cloud processing and have demonstrated remarkable performance across diverse 3D tasks. Fan et al. (2021) proposed a novel Point 4D Transformer network to process raw point cloud videos. Specifically, their P4Transformer consists of:

(i) Point 4D convolution to embed the spatiotemporal local structures present in a point cloud video, and

(ii) Transformer to capture the appearance and motion information across the entire video by performing self-attention on the embedded local features.

- They reviewed the theory behind the transformer architecture and described the development and applications of 2D and 3D transformers.

- Moreover, the authors introduced the P4Transformer encoder to compute the features of local areas by capturing long-range relationships across the entire video.

- They utilized P4Transformer for 3D action recognition and 4D semantic segmentation from point clouds.

- It achieved higher accuracy than PointNet++-based methods on many benchmarks (e.g., MSR-Action3D).
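The self-attention step can be sketched in a few lines. This is a bare-bones, single-head version with identity query, key, and value projections, which is an assumption made to keep the example short; a real transformer such as P4Transformer learns these projections and uses multiple heads. Each row of `features` stands for the embedding of one local region.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(features):
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    dim = len(features[0])
    output = []
    for query in features:
        # Similarity of this region to every region, itself included.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in features]
        weights = softmax(scores)
        # New feature is the attention-weighted average of all region features.
        output.append([sum(w * value[i] for w, value in zip(weights, features)) for i in range(dim)])
    return output

local_features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(local_features)
```

Because every region attends to every other region, the updated features can reflect relationships between areas that are far apart in space or time.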

Learned Gridification for Point Cloud Processing

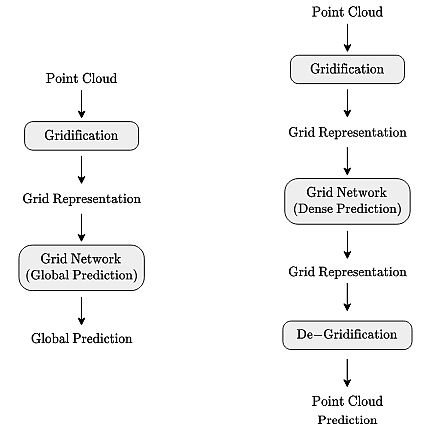

Van der Linden et al. (2023) proposed learnable gridification as the first step in a point cloud processing pipeline to transform the point cloud into a compact, regular grid.

To put it briefly, gridification is the process of connecting points in a point cloud to points in a grid using bilateral k-nearest neighbor connectivity. The researchers perform it via a convolutional message-passing layer operating on the resulting bipartite graph. The proposed bilateral k-nearest neighbor connectivity allows for the construction of expressive yet compact grid representations.
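The connectivity step can be illustrated with a small sketch. It reflects one reading of "bilateral": an edge is kept if the grid point is among the k nearest grid points of a cloud point, or the cloud point is among the k nearest cloud points of a grid point, so that neither side is left with unconnected points. The helper names are hypothetical, the example is 2D for brevity, and the learned message-passing layer that would run on these edges is omitted.

```python
def k_nearest(query, candidates, k):
    """Indices of the k candidates closest to `query` (squared Euclidean distance)."""
    order = sorted(
        range(len(candidates)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(query, candidates[i])),
    )
    return order[:k]

def bilateral_knn_edges(cloud, grid, k):
    """Edges (cloud_index, grid_index) of the bipartite cloud-to-grid graph."""
    edges = set()
    # Direction 1: every cloud point reaches its k nearest grid points.
    for ci, point in enumerate(cloud):
        for gi in k_nearest(point, grid, k):
            edges.add((ci, gi))
    # Direction 2: every grid point reaches its k nearest cloud points.
    for gi, cell in enumerate(grid):
        for ci in k_nearest(cell, cloud, k):
            edges.add((ci, gi))
    return edges

cloud = [(0.1, 0.1), (0.2, 0.0), (0.9, 0.8)]
grid = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
edges = bilateral_knn_edges(cloud, grid, k=1)
```

With only the first direction, grid cells far from any point would receive no information; the second direction guarantees every cell has at least one incoming edge.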

- To evaluate their approach, researchers analyzed the expressive capacity of gridification and de-gridification on a toy point cloud reconstruction task.

- Subsequently, they constructed gridified networks and applied them to classification and segmentation tasks.

- They deployed gridified networks on ModelNet40: a synthetic dataset for 3D shape classification, consisting of 12,311 3D meshes of objects belonging to 40 classes.

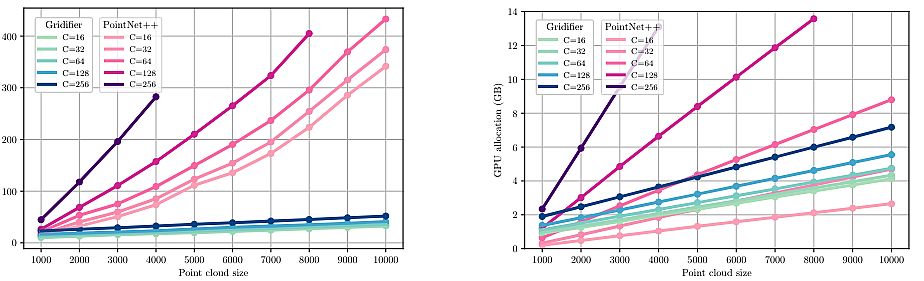

- They showed that gridified networks scale more favorably than native point cloud methods.

- Finally, they analyzed the computational and memory complexity of their gridified network by comparing it with theoretical analyses.

Applications of Point Clouds

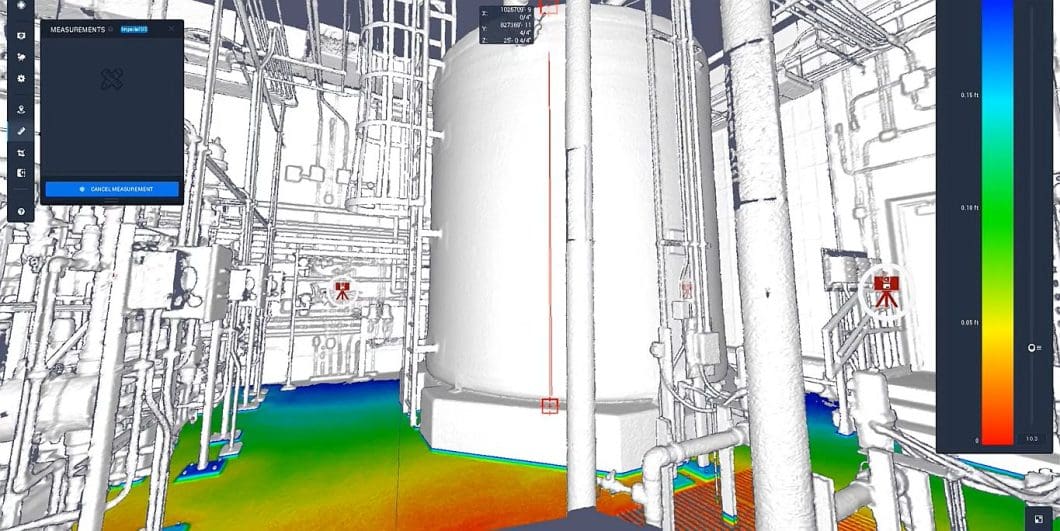

Point cloud technology has become a state-of-the-art tool with a wide range of applications in several industries in recent years. It involves collecting data points in 3-dimensional space, which yields highly detailed representations of real-world environments.

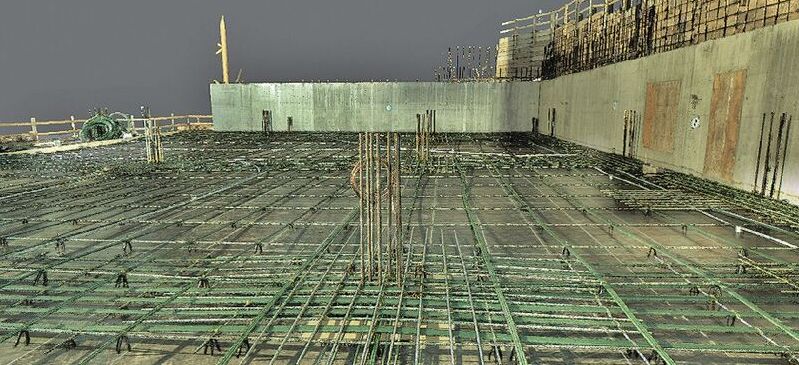

Architecture and Construction

Architects, builders, and designers can precisely measure the site and plan the project with the help of point cloud modeling. All team members have access to the information required to develop the project, thus improving communication and cooperation.

Construction businesses use point clouds to lay the basis for a building design. With point clouds, they can create a 3D model of an existing building, such as a historical site that requires particular attention.

3D Mapping and Urban Planning

Point cloud technology has also transformed traditional mapping and urban planning. By capturing millions of data points with laser scanners or photogrammetry, it enables the creation of highly accurate 3D maps of landscapes, buildings, and infrastructure.

Point cloud data produces 3D models of the structures, roads, and other features in cities. These models specify the locations of objects and their heights. It is like having a blueprint for a metropolitan region, which helps plan how cities evolve.

Virtual Reality and Augmented Reality

Virtual reality (VR) and augmented reality (AR) immersive experiences utilize point cloud data intensively. Users can explore virtual surroundings with exceptional realism by incorporating point cloud models into VR/AR settings.

Quality Control and Industry Inspection

In an industrial environment, point cloud generation plays an important role in inspection and quality control processes. Capturing accurate 3D representations of manufactured objects enables precise measurements, defect detection, and structural analysis.
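A simple form of such an inspection is to compare scanned points against a nominal surface and flag those outside a tolerance. The sketch below assumes the nominal surface is a plane and the scan is already aligned to the part's coordinate frame; the helper names and the 2 mm tolerance are illustrative assumptions.

```python
import math

def point_to_plane_distance(point, plane_point, plane_normal):
    """Signed distance from `point` to the plane through `plane_point` with the given normal."""
    norm = math.sqrt(sum(n * n for n in plane_normal))
    return sum((p - q) * n for p, q, n in zip(point, plane_point, plane_normal)) / norm

def find_defects(points, plane_point, plane_normal, tolerance):
    """Return the scanned points that deviate from the nominal plane by more than `tolerance`."""
    return [
        p for p in points
        if abs(point_to_plane_distance(p, plane_point, plane_normal)) > tolerance
    ]

# Scanned points on a part whose top face should lie in the plane z = 0 (units: meters).
scan = [(0.0, 0.0, 0.0005), (0.1, 0.0, -0.001), (0.2, 0.1, 0.004)]
defects = find_defects(scan, plane_point=(0.0, 0.0, 0.0), plane_normal=(0.0, 0.0, 1.0), tolerance=0.002)
```

For curved parts, the same check is usually made against a CAD model or a reference scan instead of a plane.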

Point clouds also support safety in construction operations: they help identify safety risks, manage safety proactively, and recognize the blind spots of construction machines.

Robotics and Autonomous Vehicles

The development of robotic systems and autonomous vehicles (AVs) depends heavily on point cloud technology. LiDAR sensors let AVs scan and navigate complex environments. The vehicles use point cloud data to identify obstacles, determine the best route, and prevent collisions.

Point Cloud Processing Recap

The goal of point cloud technology is to enable companies in the industrial sector to collect data smoothly. With this technology, teams shorten their project cycle times and get high-quality outcomes faster. It therefore offers clear benefits for many engineering projects.