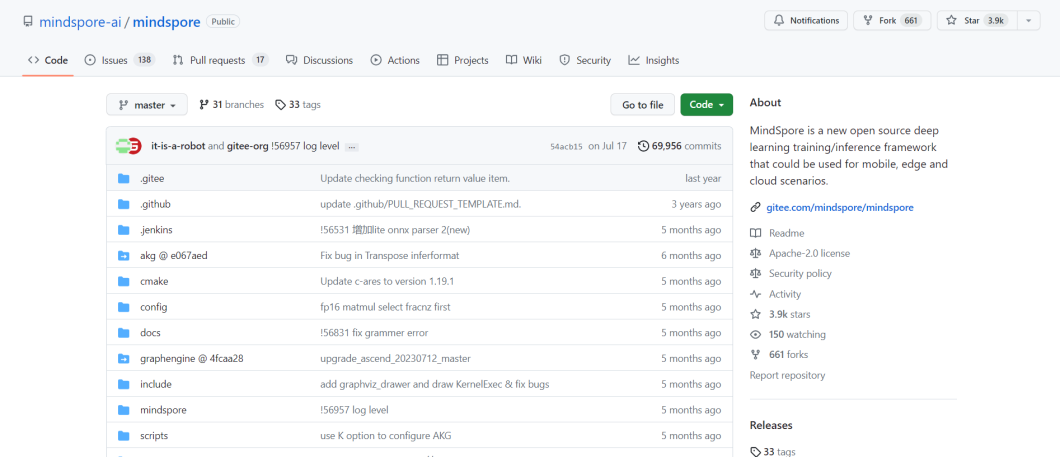

Huawei’s MindSpore is an open-source deep learning framework for training and inference, written in C++. Notably, users can deploy it across mobile, edge, and cloud applications. Released under the Apache-2.0 license, MindSpore lets users use, modify, and distribute the software.

MindSpore offers a comprehensive developer platform to develop, deploy, and scale artificial intelligence models. It lowers the barrier to entry by providing a unified programming interface, Python compatibility, and visual tools.

What is MindSpore?

At its core, the MindSpore open-source project is a solution that combines ease of development with advanced capabilities. It accelerates AI research and prototype development. The integrated approach promotes collaboration, innovation, and responsible AI practices with deep learning models.

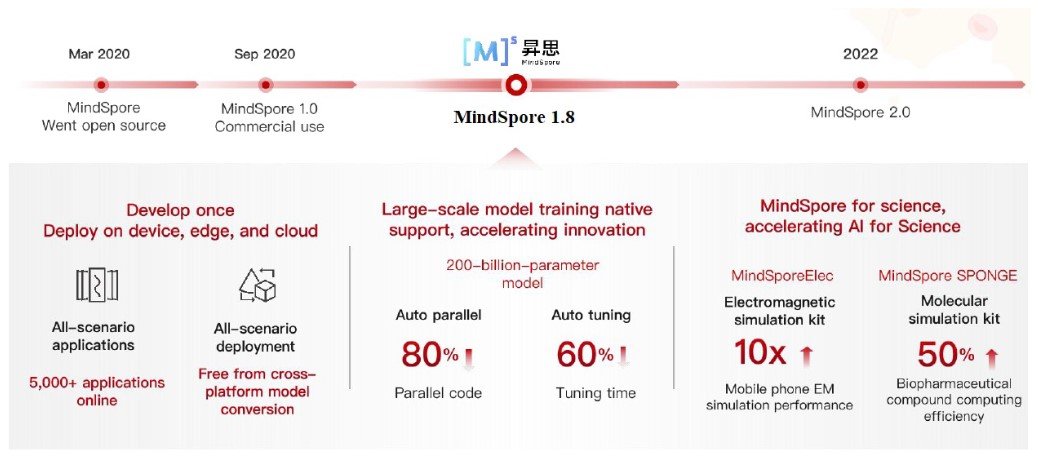

Today, MindSpore is widely used for research and prototyping projects across ML Vision, NLP, and Audio tasks. A key benefit is its all-scenario support: models are developed once and deployed anywhere.

MindSpore Architecture: Understanding Its Core

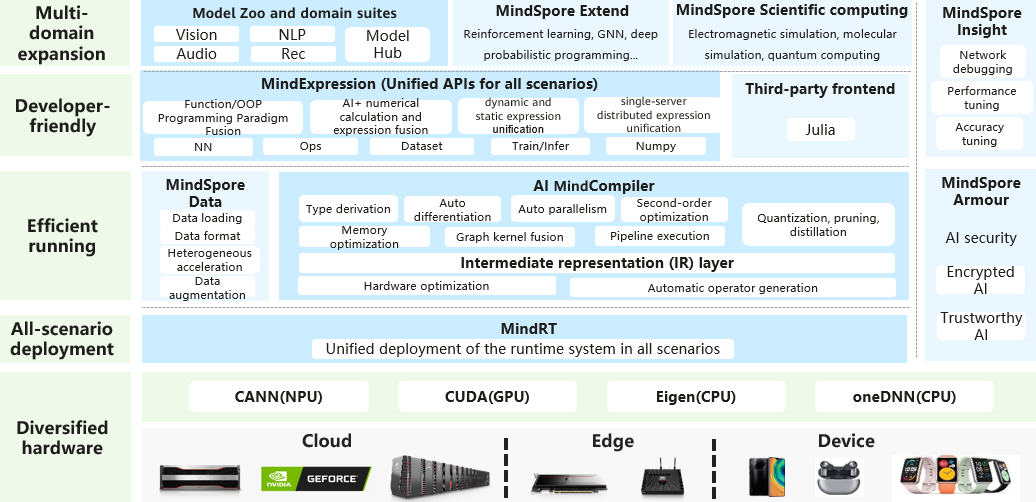

Huawei’s MindSpore has a modular and efficient architecture for neural network training and inference.

- Computational Graph. The Computational Graph is a dynamic and versatile representation of neural network operations. This graph forms the backbone of model execution, promoting flexibility and adaptability during the training and inference phases.

- Execution Engine. The Execution Engine translates the computational graph into actionable commands. With a focus on optimization, it ensures seamless and efficient execution of neural network tasks across AI hardware architectures.

- Operators and Kernels. The MindSpore architecture contains a library of operators and kernels, each optimized for specific hardware platforms. These components form the building blocks of neural network operations, contributing to the framework’s speed and efficiency.

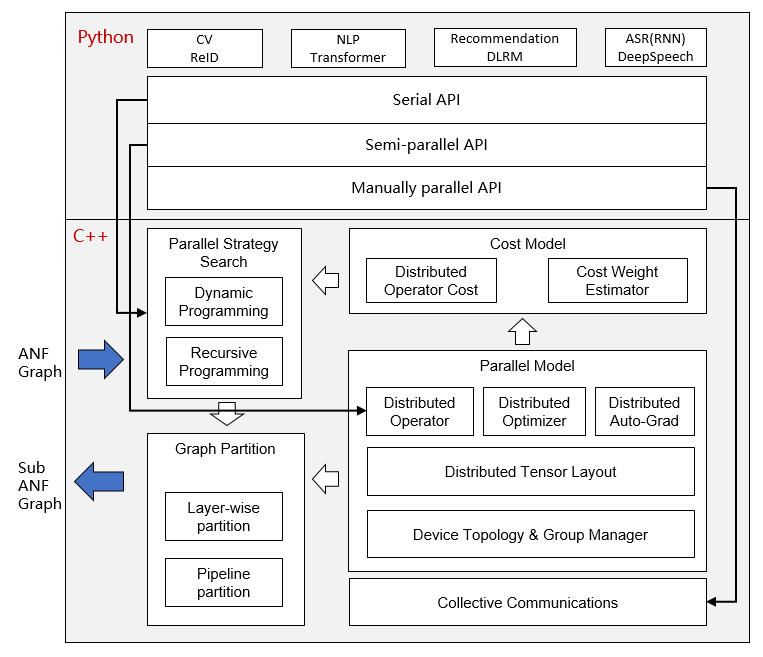

- Model Parallelism. MindSpore implements automatic parallelism that combines data parallelism, model parallelism, and hybrid parallelism. Each operator can be split across the devices in a cluster, which enables efficient parallel execution without exposing users to complex implementation details. Because the development environment is Python-based, users can focus on the top-level API while still benefiting from automatic parallelism.

- Source Transformation (ST). Rooted in functional programming frameworks, ST performs automatic differentiation as a transformation of the intermediate representation during compilation. It supports complex control flow, higher-order functions, and closures.

Source Transformation

MindSpore implements automatic differentiation based on Source Transformation (ST). This approach is designed to improve neural network performance by supporting automatic differentiation of control flow and by enabling static compilation optimizations.

The core of MindSpore’s automatic differentiation lies in its similarity to symbolic differentiation in basic calculus. It uses an Intermediate Representation (IR), which acts as a middle step between the user’s code and the executed computation. This representation mirrors the concept of composite functions, where each basic function in the IR corresponds to a primitive operation. This alignment allows MindSpore to construct and manage complex control flows in computations, much like differentiating a composite function piece by piece.

To better understand this, recall how the derivative of a composite function is calculated by hand: each primitive function has a known derivative, and the chain rule combines them. MindSpore’s automatic differentiation applies the same idea to the primitives of the IR.

Each step in the IR correlates with fundamental algebraic operations, allowing the framework to efficiently tackle more sophisticated and complex tasks. This makes MindSpore not only powerful for neural network optimization but also versatile in handling a range of complex computational scenarios.
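To make the idea concrete, here is a minimal sketch of differentiation as a transformation over a toy IR. It is written in plain Python and does not use MindSpore’s API or its real IR: the tuple-based node format and the helper names (`var`, `const`, `differentiate`, `evaluate`) are assumptions made for illustration, and control flow is left out entirely.

```python
import math

# Toy IR: each node is a tuple whose first element names a primitive operation.
# This node format is hypothetical and only illustrates the concept.
def var(name): return ("var", name)
def const(value): return ("const", float(value))
def add(a, b): return ("add", a, b)
def mul(a, b): return ("mul", a, b)
def sin(a): return ("sin", a)
def cos(a): return ("cos", a)

def differentiate(expr, wrt):
    """Transform an IR expression into a new IR expression for d(expr)/d(wrt)."""
    op = expr[0]
    if op == "const":
        return const(0.0)
    if op == "var":
        return const(1.0 if expr[1] == wrt else 0.0)
    if op == "add":  # sum rule
        return add(differentiate(expr[1], wrt), differentiate(expr[2], wrt))
    if op == "mul":  # product rule
        a, b = expr[1], expr[2]
        return add(mul(differentiate(a, wrt), b), mul(a, differentiate(b, wrt)))
    if op == "sin":  # chain rule
        return mul(cos(expr[1]), differentiate(expr[1], wrt))
    if op == "cos":  # chain rule
        return mul(const(-1.0), mul(sin(expr[1]), differentiate(expr[1], wrt)))
    raise ValueError(f"unknown primitive: {op}")

def evaluate(expr, env):
    """Interpret an IR expression given variable values in env."""
    op = expr[0]
    if op == "const":
        return expr[1]
    if op == "var":
        return env[expr[1]]
    if op == "add":
        return evaluate(expr[1], env) + evaluate(expr[2], env)
    if op == "mul":
        return evaluate(expr[1], env) * evaluate(expr[2], env)
    if op == "sin":
        return math.sin(evaluate(expr[1], env))
    if op == "cos":
        return math.cos(evaluate(expr[1], env))
    raise ValueError(f"unknown primitive: {op}")

# f(x) = x * sin(x), so f'(x) = sin(x) + x * cos(x)
x = var("x")
f = mul(x, sin(x))
df = differentiate(f, "x")          # df is itself an IR expression
slope = evaluate(df, {"x": 2.0})
```

The point of the sketch is that `differentiate` returns another IR expression rather than a number. Because the derivative exists as a program before any data flows through it, a compiler is free to optimize it statically, which is the property the section attributes to ST.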

A key takeaway from MindSpore’s architecture is its modular design. This enables users to customize neural networks for a variety of tasks, from Image Recognition to Natural Language Processing (NLP). This adaptability means that the framework can integrate into many environments, making it applicable to a wide range of computer vision applications.

Optimization Techniques

Optimization techniques are central to MindSpore’s functionality because they improve model performance and resource efficiency. For AI applications with high computational demands, these strategies help neural networks deliver high performance while conserving resources.

- Quantization. MindSpore uses quantization, which transforms the precision of numerical values within neural networks. By reducing the bit-width of data representations, the framework not only conserves memory but also accelerates computational speed.

- Pruning. Through pruning, MindSpore removes unnecessary neural connections to streamline model complexity. This technique enhances the sparsity of neural networks, resulting in reduced memory footprint and faster inference. As a result, MindSpore crafts leaner models without compromising on predictive accuracy.

- Weight Sharing. MindSpore takes an innovative approach to parameter sharing, which optimizes model storage and speeds up computation. By combining shared weights, it ensures more efficient memory use and accelerates the training process.

- Dynamic Operator Fusion. Dynamic Operator Fusion merges multiple operations for improved computational efficiency. By combining sequential operations into a single, optimized kernel, the deep learning framework minimizes memory overhead, enabling faster and more efficient neural network execution.

- Adaptive Learning Rate. Adaptive methods dynamically adjust learning rates during model training. This lets MindSpore respond to the changing nature of neural network training and cope with varying gradient magnitudes, which supports better convergence and model accuracy.
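Two of the techniques above, quantization and pruning, are easy to illustrate in a few lines. The following is a conceptual sketch in plain Python rather than MindSpore code: the function names, the 8-bit affine scheme, and magnitude-based pruning are assumptions chosen for illustration, and the article does not state which schemes MindSpore actually applies.

```python
def quantize_int8(values):
    """Affine-quantize floats to signed 8-bit integers.

    Returns the integer codes plus the scale and zero point needed to decode.
    """
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # keep 0.0 in range
    scale = (hi - lo) / 255.0 or 1.0                       # avoid a zero scale
    zero_point = round(-128 - lo / scale)
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point

def dequantize(codes, scale, zero_point):
    """Map 8-bit codes back to approximate float values."""
    return [(c - zero_point) * scale for c in codes]

def prune_by_magnitude(values, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    n_prune = int(len(values) * sparsity)
    order = sorted(range(len(values)), key=lambda i: abs(values[i]))
    pruned = list(values)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

weights = [0.82, -0.05, 0.31, -0.67, 0.02, 0.44, -0.01, -0.9]

codes, scale, zero_point = quantize_int8(weights)
restored = dequantize(codes, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

sparse = prune_by_magnitude(weights, 0.5)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error on the order of one quantization step (`scale`). The pruned list keeps only the four largest-magnitude weights, which is what makes sparse storage and faster inference possible.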

Native Support for Hardware Acceleration

Hardware acceleration has a major impact on AI performance. MindSpore has native support for several hardware architectures, including GPUs and NPUs such as Huawei’s Ascend processors, which optimizes model execution and overall AI efficiency.

The Power of GPUs and Ascend Processors

MindSpore seamlessly integrates with GPUs and Ascend processors, leveraging their parallel processing capabilities. This integration enhances both training and inference by optimizing the execution of neural networks, establishing MindSpore as a solution for computation-intensive AI tasks.

Distributed Training

MindSpore’s native support for hardware acceleration extends to distributed training, which lets neural network training scale across multiple devices. The resulting shorter development lifecycle makes MindSpore suitable for large-scale AI initiatives.
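As a rough illustration of how training scales across devices, the sketch below simulates data-parallel training in plain Python. It is not MindSpore code: the "devices" are simply list shards, `all_reduce_mean` is a hypothetical stand-in for a collective communication step, and the one-parameter linear model is an assumption chosen to keep the example small.

```python
def local_gradient(w, shard):
    """Gradient of the mean squared error for y = w * x on one device's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for a collective all-reduce: every device receives the mean."""
    return sum(values) / len(values)

def train(shards, steps=100, lr=0.01):
    """Run synchronous data-parallel gradient descent over the shards."""
    w = 0.0  # every device holds an identical copy of the parameter
    for _ in range(steps):
        # On real hardware these gradients would be computed concurrently.
        grads = [local_gradient(w, shard) for shard in shards]
        w -= lr * all_reduce_mean(grads)
    return w

# Synthetic data with ground truth w = 3, split across 4 simulated devices.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[i::4] for i in range(4)]
w = train(shards)
```

Because the shards are the same size, averaging the per-device gradients gives exactly the gradient over the full dataset, so the result matches single-device training while each device processes only a quarter of the data per step.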

Model Parallelism

MindSpore incorporates advanced features like model parallelism, which distributes neural network computations across different hardware devices. By partitioning the workload, MindSpore optimizes computational efficiency, leveraging diverse hardware resources to their full potential.

The model parallelism approach ensures not only optimal resource use but also acceleration of AI model development. This provides a significant boost to performance and scalability in complex computing environments.
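The partitioning idea can be sketched with a single dense layer whose weight matrix is split by columns, so that each device computes a slice of the output. This is a plain-Python illustration under stated assumptions, not MindSpore’s implementation: the column-wise split and the helper names are hypothetical, and the devices are simulated by a list.

```python
def matmul(x, w):
    """Multiply a row vector x (length n) by a matrix w (n rows, m columns)."""
    if not w or not w[0]:
        return []
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def split_columns(w, parts):
    """Partition the columns of w into contiguous slices, one per device."""
    m = len(w[0])
    bounds = [m * p // parts for p in range(parts + 1)]
    return [[row[bounds[p]:bounds[p + 1]] for row in w] for p in range(parts)]

def parallel_matmul(x, w, parts):
    """Compute x @ w with the weight matrix partitioned across devices."""
    slices = split_columns(w, parts)
    # Each partial product would run on its own device in a real system.
    partial = [matmul(x, s) for s in slices]
    # Gather step: concatenate the per-device output slices in order.
    return [value for chunk in partial for value in chunk]

x = [1.0, 2.0, 3.0]
w = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 3.0],
     [2.0, 1.0, 0.0, 1.0]]

single_device = matmul(x, w)
two_devices = parallel_matmul(x, w, 2)
```

Each simulated device stores only half of the weight matrix, yet the gathered output is identical to the single-device result. This is why model parallelism helps when a model is too large for one device’s memory.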

Real-Time Inference with FPGA Integration

In real-time AI applications, MindSpore’s native support extends to FPGA integration. This integration with Field-Programmable Gate Arrays (FPGAs) facilitates swift, low-latency inference, which positions MindSpore as a solid choice for applications demanding rapid and precise predictions.

Elevating Edge Computing with MindSpore

MindSpore extends its native support for hardware acceleration to edge computing. This integration ensures efficient AI processing on resource-constrained devices, bringing AI capabilities closer to the data source and enabling intelligent edge applications.

Ease of ML Development

The MindSpore platform provides a single programming interface that helps to streamline the development of computer vision models. In turn, this allows users to work across different hardware architectures without extensive code changes.

By building on the popularity of the Python programming language in the AI community, MindSpore is accessible to a broad spectrum of developers. The combination of Python and MindSpore’s features is intended to accelerate the development cycle.

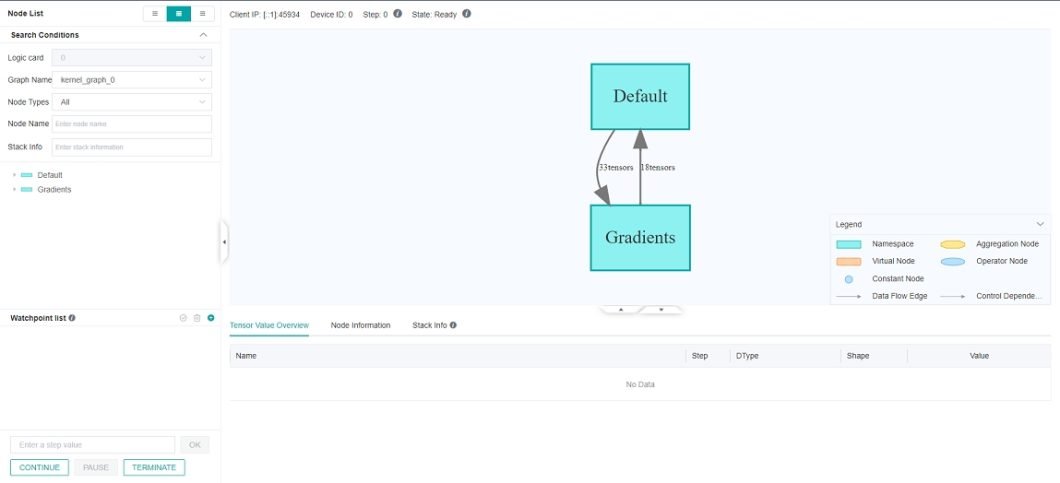

Visual Interface and Simplified Elements

MindSpore enables developers to design and deploy models with a simplified approach, which supports collaboration between domain experts and AI developers. It also facilitates visual model design through tools like ModelArts. This makes it possible for developers to visualize and expedite the development of complex data processing and custom-trained models.

Operators and Models

MindSpore has compiled a repository of pre-built operators and models. For prototyping, developers do not have to start from scratch and can move ahead quickly when developing new models. This is especially helpful for common tasks in computer vision, natural language processing, audio AI, and more. The platform incorporates auto-differentiation, automating the computation of gradients in the training process and simplifying the implementation of advanced machine learning models.

Integration with industry-standard deep learning frameworks like TensorFlow or PyTorch allows developers to reuse existing models and transition to MindSpore.

The MindSpore Hub offers a centralized repository for models, data, and scripts, creating a collaborative ecosystem where developers can access and share resources. Designed with cloud-native principles, MindSpore places the power of cloud resources in users’ hands. By doing this, Huawei AI enhances scalability and expedites model deployment in cloud environments.

Upsides and Risks to Consider

Popular in the Open Source Community

MindSpore actively contributes to the open-source AI community by promoting collaboration and knowledge sharing. By engaging with developers and data scientists worldwide, Huawei AI pushes the AI hardware and software application ecosystem forward. At the time of writing, the framework’s repository on GitHub has more than 465 open-source contributors and 4,000 stars.

The modular architecture of MindSpore offers users the flexibility to customize their approach to various ML tasks. The integrated set of tools is maintained and bolstered by novel optimization techniques, automatic parallelism, and hardware acceleration that enhance model performance.

Potential Risks for Business Users

In 2019, the U.S. took action against Huawei, citing security concerns and implementing export controls on U.S. technology sales to the company. These measures were driven by fears that Huawei’s extensive presence in global telecommunications networks could potentially be exploited for espionage by the Chinese government.

EU officials have reservations about Huawei, labeling it a “high-risk supplier.” Margrethe Vestager, the Competition Commissioner, confirmed the European Commission’s intent to adjust Horizon Europe rules to align with their assessment of Huawei as a high-risk entity.

The Commission’s concerns regarding Huawei and ZTE, another Chinese telecom company, have led it to endorse member states’ efforts to limit and exclude these suppliers from mobile networks. To date, ten member states have imposed restrictions on telecom supplies, driven by concerns over espionage and overreliance on Chinese technology.

While Brussels lacks the authority to prevent Huawei components from entering member state networks, it is taking steps to protect its communications by avoiding Huawei and ZTE components. Furthermore, it plans to review EU funding programs in light of the high-risk status.

Beyond direct security threats, there are also potential supplier and insurance risks for businesses, and for their customers, that depend on the technology.