This article reviews the Intel Neural Compute Stick 2 (NCS 2), which is based on the Intel Movidius Myriad X chip. Learn about the advantages of using the NCS 2 for Edge AI, that is, running artificial intelligence tasks on edge devices.

What is Intel Neural Compute Stick 2?

The edge is becoming an increasingly popular destination for deploying computer vision and deep learning models. Edge computing offers advantages such as local data processing, filtered data transfer to the cloud, and faster decision-making.

The Intel Neural Compute Stick 2 is powered by the Intel Movidius Myriad X VPU, which runs on-device AI applications at high performance with ultra-low power consumption. The Myriad X is a power-efficient edge computing solution that brings computer vision and AI applications to edge devices such as connected computers, drones, smart cameras, smart home and security systems, VR/AR headsets, and 360-degree cameras.

The NCS 2 is a small, fanless device for deploying neural networks (it runs inference; models are trained elsewhere) that can be used for AI programming at the edge.

AI accelerators like the Intel NCS 2 are useful for accelerating data-intensive deep learning inference on edge devices in a cost-effective way. They work by taking over the mathematical workload of running deep learning models from the edge device's central processing unit (CPU).

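Edge accelerators allow deep learning models to run at low cost, with low power consumption, and at higher speed. Their benefits are usually measured in terms of throughput (inferences per second), value (performance per unit cost), latency (time to process a single input), and efficiency (performance per watt).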
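To make these four metrics concrete, here is a minimal Python sketch that derives them from a single benchmark run. The `BenchmarkRun` fields and the numbers in the usage example are hypothetical placeholders, not NCS 2 measurements, and the latency formula assumes inputs are processed one at a time.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkRun:
    """Hypothetical record of one benchmark run on an accelerator."""
    frames: int        # number of inputs processed
    elapsed_s: float   # total wall-clock time in seconds
    power_w: float     # assumed average power draw in watts
    price_usd: float   # assumed device price in US dollars


def throughput_fps(run: BenchmarkRun) -> float:
    """Throughput: inputs processed per second."""
    return run.frames / run.elapsed_s


def mean_latency_ms(run: BenchmarkRun) -> float:
    """Average time per input; only meaningful if inputs ran sequentially."""
    return 1000.0 * run.elapsed_s / run.frames


def efficiency_fps_per_w(run: BenchmarkRun) -> float:
    """Efficiency: throughput per watt."""
    return throughput_fps(run) / run.power_w


def value_fps_per_usd(run: BenchmarkRun) -> float:
    """Value: throughput per dollar of hardware cost."""
    return throughput_fps(run) / run.price_usd


if __name__ == "__main__":
    # Illustrative numbers only, not measured results.
    run = BenchmarkRun(frames=300, elapsed_s=10.0, power_w=2.0, price_usd=100.0)
    print(f"throughput: {throughput_fps(run):.1f} FPS")
    print(f"latency:    {mean_latency_ms(run):.1f} ms")
    print(f"efficiency: {efficiency_fps_per_w(run):.1f} FPS/W")
    print(f"value:      {value_fps_per_usd(run):.2f} FPS/$")
```

Keeping the four metrics as separate functions makes it clear that a device can rank well on one (for example, efficiency) and poorly on another (for example, raw throughput).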

Intel Movidius Myriad X – High-Performance Computer Vision Inferencing

The Intel Movidius Myriad X Vision Processing Unit (VPU) is Intel’s first VPU to feature the Neural Compute Engine, a dedicated hardware accelerator for deep neural network inference. In the NCS 2, the Myriad X chip is embedded in a USB stick (similar to a USB drive) built specifically for processing image and video inputs. Because the stick connects over USB, it works with common host platforms such as a Raspberry Pi, an Intel NUC, or a standard x86 PC.

The Neural Compute Engine is an on-chip hardware block designed to run deep neural networks at high speed and low power without compromising accuracy, enabling real-time computer vision.

This engine is integrated into the Myriad X chip inside the stick’s USB casing. Together, the Neural Compute Engine, 16 SHAVE cores, and a high-throughput memory fabric make the Intel NCS 2 well suited for deploying deep neural networks and computer vision products.

The benchmarks compare the throughput and latency of various deep learning models across different inference hardware. For the popular SSD300-CF model (Caffe framework), higher throughput is better, while lower latency is better.

In these benchmarks, the Movidius accelerator achieves higher throughput on SSD300-CF than any of the listed Core i3 to i9 processors except the Intel Core i9-10920X. It also has lower latency than any other inference engine in the comparison, which makes it a relatively strong platform for running computer vision models.
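Throughput and latency are related but distinct quantities. If several inference requests are kept in flight at once (for example, through asynchronous inference), Little's law gives the steady-state throughput as the number of in-flight requests divided by the time each request takes. The sketch below uses made-up latencies to show why a device can post high throughput even when each individual request takes longer.

```python
def steady_state_throughput_fps(in_flight: int, latency_ms: float) -> float:
    """Little's law: throughput = requests in flight / time per request.

    Assumes the pipeline is saturated and every request has the same latency.
    """
    if in_flight < 1:
        raise ValueError("need at least one request in flight")
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return in_flight * 1000.0 / latency_ms


if __name__ == "__main__":
    # Hypothetical numbers: one request at a time vs. four overlapping requests.
    print(steady_state_throughput_fps(1, 50.0))  # 20.0 FPS at 50 ms per request
    print(steady_state_throughput_fps(4, 80.0))  # 50.0 FPS at 80 ms per request
```

In this illustration, overlapping requests raises throughput from 20 to 50 FPS while per-request latency grows from 50 to 80 ms, which is why benchmarks report the two figures separately.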

OpenVINO with Intel Movidius Neural Compute Stick 2

The Intel Distribution of OpenVINO (Open Visual Inference and Neural network Optimization) toolkit allows computer vision models trained in the cloud to run at the edge. It contains a full suite of development and deployment tools that are best used in conjunction with the Movidius Myriad X.

The toolkit speeds up deep learning inference, which helps developers build cost-effective and robust computer vision applications. If you want to learn more about the toolkit, I recommend reading our full overview of OpenVINO. To get started, see our guide on how to install OpenVINO.
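Before a frame reaches the stick, it usually has to be rearranged into the model's input layout. Many OpenVINO IR vision models expect NCHW input (batch, channels, height, width), whereas image libraries typically return pixels in HWC order. The following dependency-free sketch shows that transposition on a tiny nested-list image. The layout your model needs is an assumption to check against its documentation, and in practice NumPy's `transpose` does the same job on real arrays.

```python
from typing import List

Image = List[List[List[float]]]  # H x W x C nested lists


def hwc_to_nchw(image: Image) -> List[List[List[List[float]]]]:
    """Reorder an H x W x C image into a 1 x C x H x W batch."""
    height = len(image)
    width = len(image[0])
    channels = len(image[0][0])
    chw = [
        [[image[y][x][c] for x in range(width)] for y in range(height)]
        for c in range(channels)
    ]
    return [chw]  # wrap in a list to add the batch dimension (N = 1)


if __name__ == "__main__":
    # A 2 x 2 image with 3 channels per pixel.
    img = [
        [[1, 2, 3], [4, 5, 6]],
        [[7, 8, 9], [10, 11, 12]],
    ]
    batch = hwc_to_nchw(img)
    print(batch[0][0])  # first channel plane: [[1, 4], [7, 10]]
```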

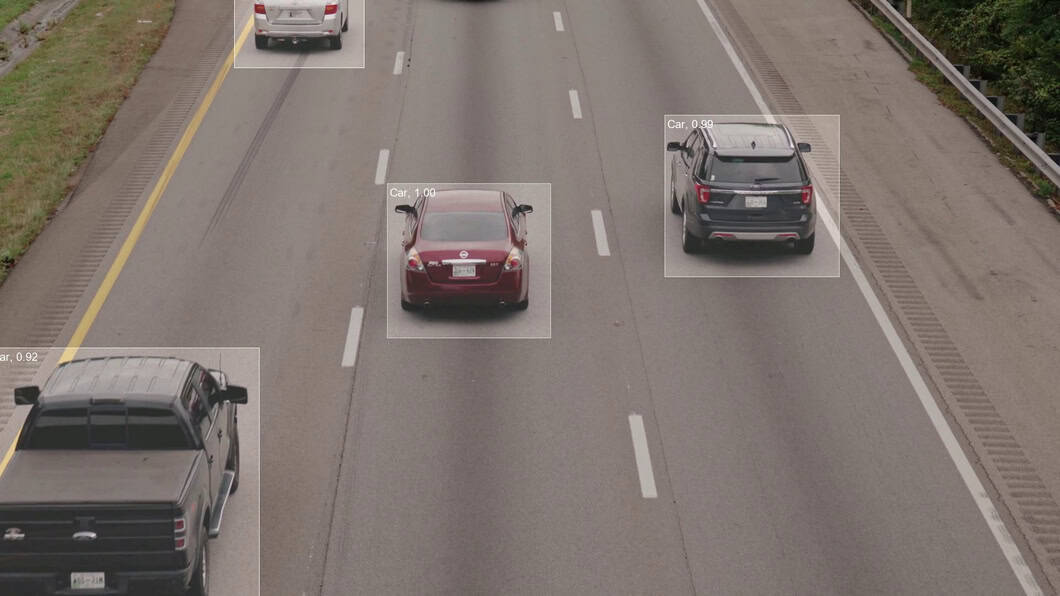

Because it supports numerous deep learning models out of the box, the toolkit speeds up computer vision application development by cutting down on setup time. Its pre-trained models, which range from YOLO (You Only Look Once) to R-CNN and ResNet, let developers build complex computer vision applications such as face detection, person detection, vehicle detection, and people counting.
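Detection models in the SSD family commonly end in a layer that returns one row of seven values per candidate box, `[image_id, label, confidence, x_min, y_min, x_max, y_max]`, with coordinates normalized to the 0 to 1 range and a negative `image_id` marking the end of valid rows. Assuming that output format (check your model's documentation, since not every pre-trained model uses it), a minimal post-processing sketch that filters by confidence and scales boxes to pixels could look like this. The 0.5 threshold is an arbitrary default, and `parse_ssd_rows` is a hypothetical helper, not an OpenVINO function.

```python
from typing import List, NamedTuple, Sequence, Tuple


class Detection(NamedTuple):
    label: int
    confidence: float
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def parse_ssd_rows(
    rows: Sequence[Sequence[float]],
    frame_width: int,
    frame_height: int,
    threshold: float = 0.5,
) -> List[Detection]:
    """Convert SSD-style 7-value rows into pixel-space detections."""
    detections = []
    for image_id, label, conf, x_min, y_min, x_max, y_max in rows:
        if image_id < 0:  # a negative image_id marks the end of valid rows
            break
        if conf < threshold:  # drop low-confidence candidates
            continue
        box = (
            round(x_min * frame_width),
            round(y_min * frame_height),
            round(x_max * frame_width),
            round(y_max * frame_height),
        )
        detections.append(Detection(int(label), float(conf), box))
    return detections


if __name__ == "__main__":
    # Hypothetical raw output for a 640 x 480 frame.
    raw = [
        [0, 1, 0.9, 0.25, 0.25, 0.75, 0.75],
        [0, 2, 0.3, 0.0, 0.0, 0.1, 0.1],   # below threshold, skipped
        [-1, 0, 0.0, 0.0, 0.0, 0.0, 0.0],  # end marker
    ]
    for det in parse_ssd_rows(raw, 640, 480):
        print(det)
```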

The Myriad Development Kit (MDK) additionally provides the development tools, frameworks, and APIs needed to implement custom vision, imaging, and deep neural network workloads on the chip. On the OpenVINO side, existing CNN models can be converted with the Model Optimizer into the OpenVINO Intermediate Representation (IR), which optimizes them for inference and can substantially reduce their size.
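One concrete source of size reduction when targeting the NCS 2 is weight precision: the stick runs models in FP16, so each weight takes 2 bytes instead of the 4 bytes used by FP32. The sketch below uses only Python's `struct` module to illustrate that halving and the rounding error it introduces on made-up weights. It does not call the Model Optimizer, and the helper names are hypothetical.

```python
import struct
from typing import List, Sequence


def packed_size(weights: Sequence[float], fmt: str) -> int:
    """Bytes needed to store weights: fmt 'f' = float32, 'e' = float16."""
    return len(struct.pack(f"<{len(weights)}{fmt}", *weights))


def to_fp16(weights: Sequence[float]) -> List[float]:
    """Round-trip values through IEEE 754 half precision."""
    packed = struct.pack(f"<{len(weights)}e", *weights)
    return list(struct.unpack(f"<{len(weights)}e", packed))


if __name__ == "__main__":
    # Made-up weights standing in for one layer of a model.
    weights = [0.001 * i for i in range(1000)]
    print("FP32 bytes:", packed_size(weights, "f"))  # 4000
    print("FP16 bytes:", packed_size(weights, "e"))  # 2000
    worst = max(abs(a - b) for a, b in zip(weights, to_fp16(weights)))
    print(f"largest rounding error: {worst:.2e}")
```

The small rounding error is usually acceptable for inference, but it is worth re-checking accuracy after converting a model to FP16.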

Intel Neural Compute Stick 2 (Intel NCS2) Review

Pros: Optimized for AI at the edge

- The Intel NCS 2 can easily be attached to a PC running Ubuntu 16.04 or a Raspberry Pi running Raspbian Stretch. This makes it easy and affordable to get started.

- The NCS is optimized for computer vision tasks with OpenVINO for building Edge AI solutions, and machine learning models are executed on the device itself.

- Detailed tutorials and instructions make it easy to set up a wide range of demos, such as real-time object detection.

- As an edge computing solution, it offers all the benefits of edge AI hardware. These include privacy through local processing, performance due to low latency, and reliability (decentralized, does not depend on network connections).

- The Myriad X in the NCS 2 features 16 programmable 128-bit VLIW vector processors (SHAVE cores). They let users run multiple imaging and vision application pipelines simultaneously and give the system flexibility across computer vision workloads. Like other members of the Movidius VPU family, the Myriad X is known for its very low power consumption.

- Summed across all available compute units, the Myriad X reaches a peak performance of over 4 trillion operations per second (4 TOPS). Its enhanced vision accelerators make it well suited for demanding computer vision tasks: for example, 20 hardware accelerators handle tasks such as optical flow and stereo depth without adding compute overhead.

- The new stereo depth accelerator on the Myriad X can concurrently process 6 camera inputs (3 stereo pairs), each at 720p resolution and a 60 Hz frame rate. This makes it comparable to other edge accelerators such as Google’s Coral TPU.

- Easy to use: it is plug-and-play in any USB port (USB 2.0 or later) of a host device.

- You can combine multiple NCS 2 sticks and distribute the workload among them. If you don’t have enough USB ports available, you can use USB hubs or docking stations.
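Two of the figures in the list above can be turned into quick back-of-envelope numbers. The sketch computes the raw pixel rate of the stereo workload (taking 720p as 1280 x 720) and the theoretical minimum time per inference implied by the 4 TOPS peak for a hypothetical model that needs 2 billion operations per inference. The second number is an idealized lower bound: the peak is summed over all compute units, and real models do not reach full utilization.

```python
def pixel_rate(streams: int, width: int, height: int, fps: int) -> int:
    """Pixels per second across all camera streams."""
    return streams * width * height * fps


def ideal_time_ms(ops_per_inference: float, peak_ops_per_s: float) -> float:
    """Lower bound on time per inference at 100% utilization of the peak rate."""
    return 1000.0 * ops_per_inference / peak_ops_per_s


if __name__ == "__main__":
    # 6 cameras (3 stereo pairs) at 720p and 60 Hz.
    print(pixel_rate(6, 1280, 720, 60))  # 331776000 pixels per second
    # Hypothetical 2-billion-operation model on a 4 TOPS peak.
    print(ideal_time_ms(2e9, 4e12))      # 0.5 (ms); an idealized bound, not a prediction
```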

Cons: Things You Need to Know

- We found the Neural Compute Stick 2 rather challenging to debug, and only limited APIs are available.

- Due to its passive cooling design, we faced issues when using the NCS 2 in places with limited airflow and high ambient temperatures (above 50 °C).

- While the NCS is widely used by both professionals and beginners, basic programming knowledge is recommended to use it effectively beyond the tutorials, for example, to build a serious AI product.

- On real-time object detection benchmarks, the similarly priced Google Coral AI accelerator achieves better inference times.

- You still need a host computing device to operate the NCS. If you are willing to spend slightly more, you can get an entire single-board computer, such as an NVIDIA Jetson Nano.

How to Use the NCS 2

The Traditional Way: Learn it Yourself/Prototyping

The OpenVINO toolkit offers development tools to deploy computer vision applications and solutions across Intel hardware, including the NCS 2. Read more about the Intel Distribution of OpenVINO toolkit and how it performs CNN-based deep learning inference at the edge, either on a single NCS device or with the workload distributed across several. Intel offers extensive documentation for setting up OpenVINO on a host device to which you can connect one or more NCS devices.
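When several sticks are attached, one simple way to split the workload is to hand frames to each device in turn. The sketch below shows that round-robin idea in plain Python. It assumes you already have a list of device names, such as the one OpenVINO's `Core().available_devices` returns, in which NCS 2 sticks appear with a `MYRIAD` prefix; the specific device IDs in the example are made up, and `ncs_devices` and `round_robin` are hypothetical helpers. OpenVINO's Multi-Device plugin is a built-in alternative to hand-written dispatch.

```python
from itertools import cycle
from typing import Any, Dict, Iterable, List


def ncs_devices(available: Iterable[str]) -> List[str]:
    """Keep only device names that belong to the MYRIAD (NCS 2) plugin."""
    return [name for name in available if name.startswith("MYRIAD")]


def round_robin(frames: Iterable[Any], devices: List[str]) -> Dict[str, List[Any]]:
    """Assign frames to devices in turn and return {device: [frames]}."""
    if not devices:
        raise ValueError("no NCS devices found")
    assignment: Dict[str, List[Any]] = {device: [] for device in devices}
    # cycle() repeats the device list forever; zip() stops when frames run out.
    for frame, device in zip(frames, cycle(devices)):
        assignment[device].append(frame)
    return assignment


if __name__ == "__main__":
    # Hypothetical device list with two sticks plugged into a USB hub.
    available = ["CPU", "MYRIAD.1.1-ma2480", "MYRIAD.1.2-ma2480"]
    sticks = ncs_devices(available)
    plan = round_robin(range(5), sticks)
    for device, assigned in plan.items():
        print(device, assigned)
```

Round-robin assumes all sticks are equally fast; if one stick is throttled (for example, by heat), a queue that feeds whichever device is free balances the load better.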

Computer Vision Platforms: Ready to Use/Production

Probably the easiest way to use the Neural Compute Stick and other VPU devices for computer vision is an AI low-code platform. Such platforms offer OpenVINO integrations and built-in support for the latest algorithms and frameworks (YOLO, TensorFlow, PyTorch), which makes it easier to develop complete AI applications using the Neural Compute Stick. You also don’t need to start from scratch to perform video pre-processing tasks or to create workflows that integrate the processed output with third-party systems.

We at viso.ai power the end-to-end AI platform for computer vision named Viso Suite. It is probably the fastest way to build a computer vision prototype and scale it into production on scalable infrastructure. We take care of the cloud and deployment infrastructure, container management, DevOps tasks, and security.

What’s Next?

If you enjoyed this article, we suggest you read more about:

- Computer vision applications that can run on Intel Neural Compute Stick 2

- Overview of other AI hardware accelerators, such as the Google Coral TPU

- Everything you need to know about YOLOv3