In many computer vision applications (e.g. robot motion and medical imaging), there is a need to integrate relevant information from multiple images into a single image. Such image fusion can provide higher reliability, accuracy, and data quality than any single source image.

Multiview fusion produces a higher-resolution image and can also recover the 3D representation of a scene. Multimodal fusion combines images from different sensors and is also referred to as multi-sensor fusion. Its main applications include medical imagery, surveillance, and security.

Levels of Image Fusion

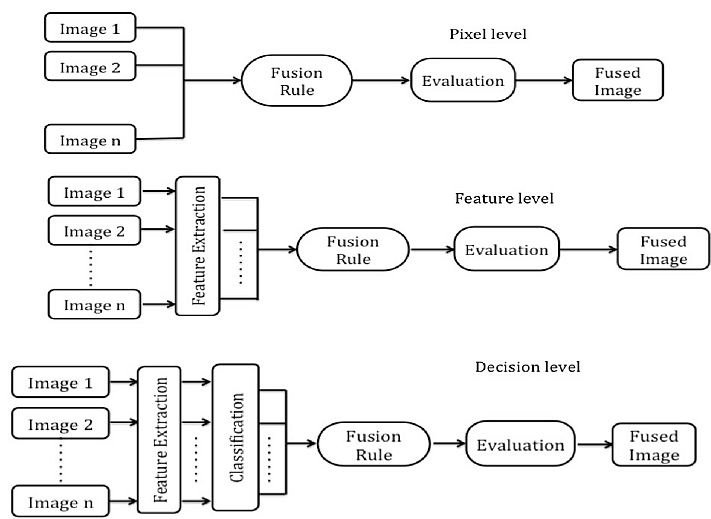

Engineers perform image fusion (IF) at three levels, depending on the stage at which the fusion takes place.

- Pixel Level IF. This is the lowest level of fusion and the simplest to perform. It combines the pixel values of two input images, for example by averaging them, into a single resultant image.

- Feature Level IF. It extracts image features (such as size and color) from multiple sources and combines them to generate an enhanced image.

- Block (Region) Based IF. This is a high-level technique. It utilizes multistage representation and calculates measurements according to the regions.
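The pixel-level case described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming two grayscale images that are already registered and have the same size; the function name `average_fusion` and the tiny sample arrays are made up for this example.

```python
import numpy as np

def average_fusion(img_a, img_b):
    """Pixel-level fusion: per-pixel mean of two registered grayscale images."""
    a = np.asarray(img_a, dtype=np.float64)
    b = np.asarray(img_b, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError("input images must have the same shape")
    return (a + b) / 2.0

# Tiny synthetic arrays standing in for two registered input images.
img_a = np.array([[0.0, 100.0], [200.0, 50.0]])
img_b = np.array([[100.0, 100.0], [0.0, 150.0]])
fused = average_fusion(img_a, img_b)
print(fused)
```

Averaging is cheap, but it also lowers contrast wherever the two inputs disagree, which is one reason the feature-level and region-based approaches exist.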

Types of Image Fusion

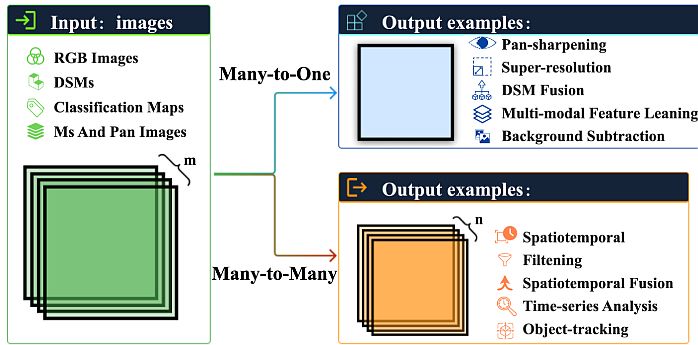

Single-sensor IF

Single-sensor image fusion captures the real world as a sequence of images. The algorithm combines this set of images and generates a new image with optimal information content. For example, under varying lighting conditions, a human operator may not be able to detect objects in any single frame, but the resultant fused image can highlight them.

The drawback of this method is that it inherits the limitations of the imaging sensor. The sensor's capabilities (dynamic range, resolution, etc.) restrict the conditions in which the system can operate. For example, some sensors work well in illuminated environments (daylight) but are not suitable for night and fog conditions.

Multi-sensor IF

A multi-sensor image fusion method merges the images from several sensors to form a composite image. For example, an infrared camera and a separate digital camera each produce their own image, and merging the two produces the final fused image. This approach overcomes the single-sensor problems.

The sensors complement each other: the digital camera is suitable for daylight conditions, while the infrared camera works well in weakly illuminated environments. For this reason, the method has applications in the military and also in object detection, robotics, and medical imaging.

Multiview IF

In this method, the images capture multiple or different views of the scene at the same time. This method utilizes images from different conditions like visible, infrared, multispectral, and remote sensing. Common methods of image fusion include object-level fusion, weighted pixel fusion, and fusion in the transform domain.
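Of the methods just listed, weighted pixel fusion is the easiest to show in code. The sketch below assumes registered grayscale images of equal size; the function name `weighted_pixel_fusion` and the weight values are illustrative choices, not taken from a specific paper or library.

```python
import numpy as np

def weighted_pixel_fusion(images, weights):
    """Fuse images as a per-pixel weighted mean.

    `weights` holds one entry per image: either a scalar or a weight map
    with the same shape as the images. Weights are normalized per pixel.
    """
    imgs = np.stack([np.asarray(i, dtype=np.float64) for i in images])
    w = np.stack([
        np.broadcast_to(np.asarray(x, dtype=np.float64), imgs.shape[1:])
        for x in weights
    ])
    total = w.sum(axis=0)
    if np.any(total <= 0):
        raise ValueError("weights must sum to a positive value at every pixel")
    return (imgs * w).sum(axis=0) / total

# Hypothetical example: trust the visible image three times as much as the infrared one.
visible = np.full((2, 2), 200.0)
infrared = np.full((2, 2), 100.0)
fused = weighted_pixel_fusion([visible, infrared], [3.0, 1.0])
print(fused)
```

Passing weight maps instead of scalars lets the weights vary across the image, for example to favor the infrared input only in dark regions.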

Multi-focus IF

This method combines images of the same 3D scene captured with different focal lengths. It splits the source images into regions so that every region is in focus in at least one of the images, and then assembles the in-focus regions into a single image.
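A minimal sketch of this idea, assuming two registered grayscale images and using local variance as a simple focus measure (an assumption made here for illustration; practical systems often use gradient-based or Laplacian-based measures). The function name `block_focus_fusion` and the block size are hypothetical.

```python
import numpy as np

def block_focus_fusion(img_a, img_b, block=8):
    """For each block, keep the version with the higher variance (assumed sharper)."""
    a = np.asarray(img_a, dtype=np.float64)
    b = np.asarray(img_b, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError("input images must have the same shape")
    fused = np.empty_like(a)
    h, w = a.shape
    for y in range(0, h, block):
        for x in range(0, w, block):
            pa = a[y:y + block, x:x + block]
            pb = b[y:y + block, x:x + block]
            # Blurring flattens local detail, so lower variance suggests defocus.
            fused[y:y + block, x:x + block] = pa if pa.var() >= pb.var() else pb
    return fused

# Synthetic test: a checkerboard that is "in focus" on the left half in one
# image and on the right half in the other (the blurred half is flat gray).
sharp = np.indices((8, 8)).sum(axis=0) % 2 * 255.0
left_focus = sharp.copy()
left_focus[:, 4:] = 127.5
right_focus = sharp.copy()
right_focus[:, :4] = 127.5
fused = block_focus_fusion(left_focus, right_focus, block=4)
```

In this synthetic case the fused result recovers the fully sharp checkerboard, because each block is taken from the image in which it has detail.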

How to Implement Image Fusion?

Researchers implement image fusion in multiple ways, and here we present the most common methods.

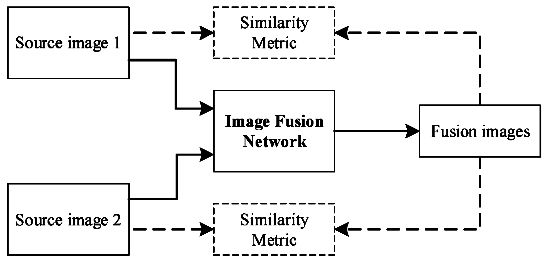

Convolutional Neural Network

Zhang et al. (2021) created a CNN-based fusion framework that extracts features and reconstructs images by using a carefully designed loss function. They used the CNN as part of the overall fusion framework to perform activity-level measurement and feature integration.

For medical IF, they combined a loss function with a classification CNN. In addition, they embedded the fusion layer in the training process. As a result, the CNN reduces the constraints imposed by manually designed fusion rules (maximum, minimum, or average).

Also, the researchers introduced other approaches:

- A CNN-based end-to-end fusion framework, to avoid the drawbacks of manual rules.

- Their CNN defines the objective function for IF with better precision and preservation of texture structure.

- Zhang et al. modeled IF with gradient preservation, thus designing a general loss function for multiple fusion tasks.
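To make the idea of a gradient-preserving loss more concrete, here is a small NumPy sketch. It is not the loss function from Zhang et al.; it is a hypothetical illustration that assumes the fused image should stay close to the mean intensity of the two sources while matching, at each pixel, whichever source gradient is stronger. The names `fusion_loss` and `grad_weight` are made up for this example.

```python
import numpy as np

def gradients(img):
    """Forward differences along x (columns) and y (rows)."""
    return np.diff(img, axis=1), np.diff(img, axis=0)

def pick_stronger(g1, g2):
    """Element-wise, keep the gradient with the larger magnitude."""
    return np.where(np.abs(g1) >= np.abs(g2), g1, g2)

def fusion_loss(fused, src_a, src_b, grad_weight=1.0):
    """Illustrative loss: intensity fidelity plus gradient preservation."""
    f, a, b = (np.asarray(x, dtype=np.float64) for x in (fused, src_a, src_b))
    # Assumed intensity target: the mean of the two source images.
    intensity_term = np.mean((f - (a + b) / 2.0) ** 2)
    fgx, fgy = gradients(f)
    agx, agy = gradients(a)
    bgx, bgy = gradients(b)
    # Assumed gradient target: the stronger of the two source gradients.
    grad_term = (np.mean((fgx - pick_stronger(agx, bgx)) ** 2)
                 + np.mean((fgy - pick_stronger(agy, bgy)) ** 2))
    return intensity_term + grad_weight * grad_term

src = np.arange(16.0).reshape(4, 4)
print(fusion_loss(src, src, src))               # identical inputs give zero loss
print(fusion_loss(np.zeros((4, 4)), src, src))  # a flat image is penalized
```

In a real CNN pipeline, a loss of this kind would be written in a differentiable framework and minimized during training, so that the network learns the fusion rule instead of relying on a manual one.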

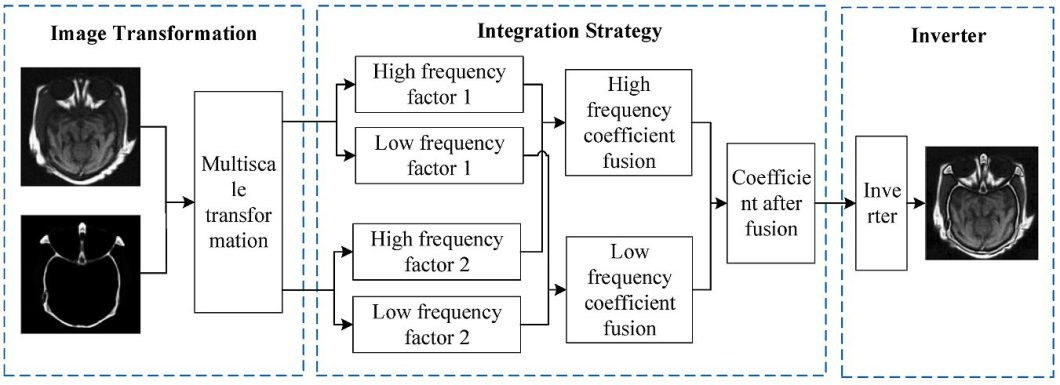

Multiscale Transformation

Ma et al. (2023) performed the fusion process by using a multiscale transformation:

- They decomposed each source image separately into different frequency levels, i.e., high-frequency and low-frequency sub-bands.

- The team designed fusion rules that exploit the different characteristics of the high-frequency and low-frequency sub-bands, and used them as the fusion strategy.

- To generate the fused image, they applied the inverse transform to the final fused coefficients.

- The researchers applied wavelet transform and geometric transform without subsampling in multiple scales and multiple directions.

- Their multiscale transform-based fusion method introduces a fusion strategy according to the characteristics of different sub-bands. Thus, the fused image is rich in detailed information and low in redundancy.

- The choice of a decomposition method and fusion rules is an important part of the fusion process. Together they determine whether the fused image can contain more information than the original images.
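The decompose, fuse, and invert steps above can be sketched with a one-level 2D Haar transform. This is a simplified stand-in, not the method of Ma et al.: it uses a subsampled Haar transform instead of a non-subsampled multi-directional one, and it assumes two common fusion rules (average the low-frequency band, keep the larger-magnitude coefficient in the high-frequency bands). All function names are hypothetical.

```python
import numpy as np

def haar2d(img):
    """One-level 2D Haar transform. Returns (low band, tuple of 3 detail bands)."""
    x = np.asarray(img, dtype=np.float64)
    if x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("image height and width must be even")
    lo = (x[:, 0::2] + x[:, 1::2]) / 2.0   # horizontal averages
    hi = (x[:, 0::2] - x[:, 1::2]) / 2.0   # horizontal differences
    ll = (lo[0::2] + lo[1::2]) / 2.0
    d1 = (lo[0::2] - lo[1::2]) / 2.0
    d2 = (hi[0::2] + hi[1::2]) / 2.0
    d3 = (hi[0::2] - hi[1::2]) / 2.0
    return ll, (d1, d2, d3)

def ihaar2d(ll, details):
    """Inverse of haar2d."""
    d1, d2, d3 = details
    h, w = ll.shape
    lo = np.empty((2 * h, w))
    hi = np.empty((2 * h, w))
    lo[0::2], lo[1::2] = ll + d1, ll - d1
    hi[0::2], hi[1::2] = d2 + d3, d2 - d3
    x = np.empty((2 * h, 2 * w))
    x[:, 0::2], x[:, 1::2] = lo + hi, lo - hi
    return x

def multiscale_fusion(img_a, img_b):
    ll_a, det_a = haar2d(img_a)
    ll_b, det_b = haar2d(img_b)
    ll_f = (ll_a + ll_b) / 2.0                      # low frequency: average
    det_f = tuple(np.where(np.abs(da) >= np.abs(db), da, db)
                  for da, db in zip(det_a, det_b))  # high frequency: max magnitude
    return ihaar2d(ll_f, det_f)                     # inverse transform

rng = np.random.default_rng(0)
img_a = rng.random((8, 8))
img_b = rng.random((8, 8))
fused = multiscale_fusion(img_a, img_b)
```

Swapping the decomposition (for example, more levels or a different wavelet) or the per-band rules changes the result considerably, which is why the last bullet calls these choices an important part of the process.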

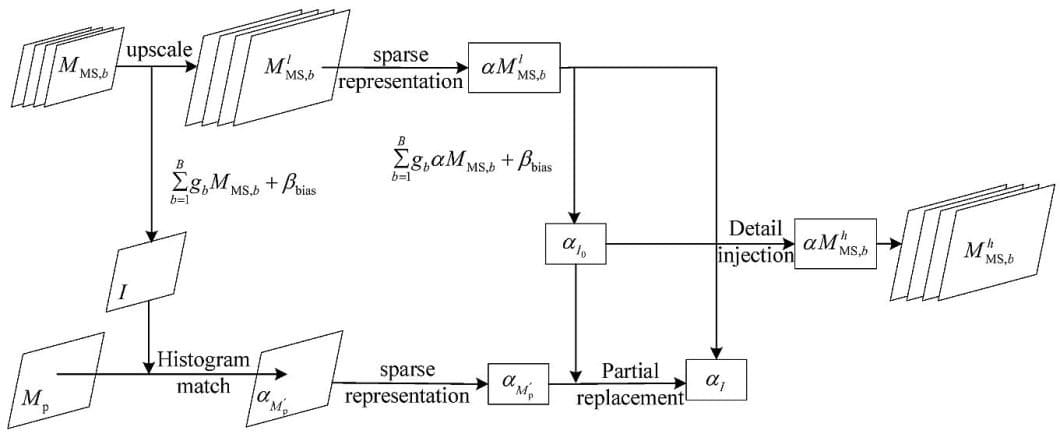

Sparse Representation Model for IF

Sparse representation differs from the traditional multiscale transform in two main ways. First, the multiscale fusion method uses a preset basis function, which ignores some important features of the source image. Sparse representation instead learns an overcomplete dictionary, which can better represent the images and extract their features.

Second, the multiscale transform-based fusion method decomposes images into multiple layers, so its requirements for noise and registration are quite strict. Sparse representation uses a sliding window technique to split the image into multiple overlapping patches, which improves robustness.

The sparse representation method mitigates the problems of insufficient feature information and high registration requirements in the multiscale transformation. However, it still has some drawbacks, mainly in the two aspects below.

- The signal representation capability of the overcomplete dictionary is limited, which leads to the loss of image texture details.

- The sliding window produces many small overlapping blocks, which lowers the computational efficiency of the algorithm.
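The sliding-window part of this pipeline, and its cost, can be illustrated with NumPy. The sketch below does not perform sparse coding: where a real method would encode each patch over a learned dictionary and fuse the coefficients, it substitutes a simple stand-in rule that keeps the patch with more activity. Function names, patch size, and step are hypothetical, and full coverage assumes `(height - size)` and `(width - size)` are multiples of `step`.

```python
import numpy as np

def extract_patches(img, size, step):
    """Slide a size x size window over the image with the given step."""
    h, w = img.shape
    coords = [(y, x)
              for y in range(0, h - size + 1, step)
              for x in range(0, w - size + 1, step)]
    patches = np.stack([img[y:y + size, x:x + size] for y, x in coords])
    return patches, coords

def reassemble(patches, coords, shape):
    """Put patches back and average the pixels where patches overlap."""
    acc = np.zeros(shape)
    cnt = np.zeros(shape)
    size = patches.shape[1]
    for p, (y, x) in zip(patches, coords):
        acc[y:y + size, x:x + size] += p
        cnt[y:y + size, x:x + size] += 1
    return acc / np.maximum(cnt, 1)

def patch_fusion(img_a, img_b, size=4, step=2):
    a = np.asarray(img_a, dtype=np.float64)
    b = np.asarray(img_b, dtype=np.float64)
    pa, coords = extract_patches(a, size, step)
    pb, _ = extract_patches(b, size, step)
    # Stand-in for the sparse-coding step: patch activity = L1 norm after mean removal.
    act_a = np.abs(pa - pa.mean(axis=(1, 2), keepdims=True)).sum(axis=(1, 2))
    act_b = np.abs(pb - pb.mean(axis=(1, 2), keepdims=True)).sum(axis=(1, 2))
    chosen = np.where((act_a >= act_b)[:, None, None], pa, pb)
    return reassemble(chosen, coords, a.shape)

img = np.arange(64.0).reshape(8, 8)
overlapping, _ = extract_patches(img, size=4, step=2)
tiled, _ = extract_patches(img, size=4, step=4)
print(len(overlapping), len(tiled))  # overlapping windows yield more patches to process
```

On this 8 x 8 example, a step of 2 produces more than twice as many patches as non-overlapping tiling, and each patch would need its own sparse coding pass. That is the efficiency cost noted in the last bullet.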

Applications of Image Fusion

The four main IF use cases are:

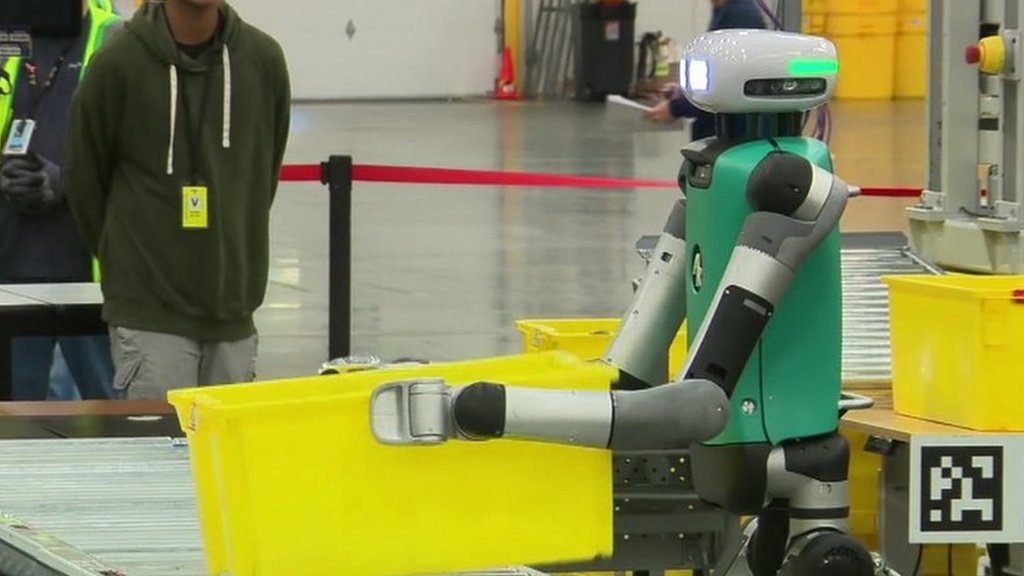

Robotic Vision

Robotic motion relies on the fusion of infrared and visible images. Robots use infrared images to distinguish the target from the background, based on the difference in thermal radiation. Because infrared imaging depends on thermal radiation, illumination and weather conditions have little effect on it. However, infrared images don’t provide texture detail.

For their computer vision tasks, robots also utilize visible light images. Depending on the environment in which the data is collected, visible images may not show important targets. Infrared and visible light fusion methods overcome the drawbacks of relying on a single image type and extract information from both.

The fused images are usually clearer than the infrared images alone. Robots also fuse visible and infrared images in applications such as autonomous driving and face recognition.

Medical Imagery

Today, medical imagery generates various types of medical images to help doctors diagnose diseases or injuries. Each type of image has its own intensity characteristics. Therefore, IF has high clinical value across medical imaging modalities.

Medical imagery researchers combine redundant and complementary information from different medical images to create fused medical images. The fused images give doctors higher-quality information for diagnosis during medical examinations.

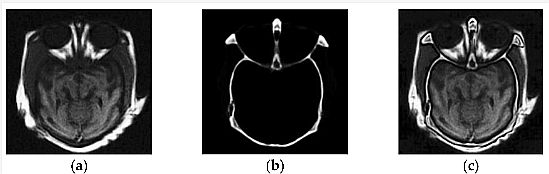

The figure shows an example of image fusion for medical diagnostics that combines computed tomography (CT) and magnetic resonance imaging (MRI). The data comes from a brain image dataset of CT and MRI scans (MedPix dataset).

Doctors use CT to analyze bone structures with high spatial resolution, and MRI to examine soft tissues, such as the heart, eyes, and brain. Image fusion technology combines MRI and CT to increase accuracy and medical applicability.

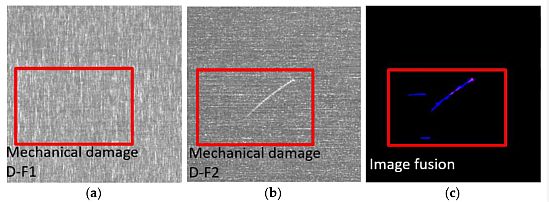

Defect Detection in Industry

Because of the constraints of industrial production conditions, workpiece defects are difficult to avoid. Typical defects include debris, porosity, and cracks inside the workpiece.

These defects grow during the use of the workpiece and affect its performance. They can eventually cause the workpiece to fail, shortening its service life and threatening the safety of the machine.

The current defect detection algorithms are generally divided into two groups:

- Defect area segmentation, where all potential defect areas are segmented from a single image.

- Feature-based detection, where manufacturers apply manually designed features to detect different types of defects. These features are only applicable to specific defects, so they cope poorly with varying defect sizes, diverse shapes, and complex background areas.

Agricultural Remote Sensing

Image fusion technology is also widely used in the field of agricultural remote sensing. By using agricultural remote sensing technology, farmers assess how well an environment suits their plants and detect plant diseases.

Existing sensing technologies, such as light detection and ranging (LiDAR), synthetic aperture radar, and medium-resolution imaging spectrometers, all have applications in image fusion.

Researchers utilize a region-based fusion scheme for combining panchromatic, multispectral, and synthetic aperture radar images. In addition, some farmers combine spectral information, radar range data, and optical detection.

Advantages and Drawbacks of IF

| Advantages | Drawbacks |
|---|---|
| Image fusion reduces data storage and data transmission. | The processing of data is quite slow when images are fuzzy. |
| The price of IF is rather low and requires simple steps to perform fusion. | Fusion is sometimes complex and expensive because of the feature extraction and integration steps. |
| Teams use image fusion for image identification and registration. | It requires time and effort to define and select the proper features for each use case. |
| It can produce a high-resolution output from foggy multiscale images. | In the image fusion process, there are large chances of data loss. |
| The fused resulting images are easy to interpret and can be in color. | In single-sensor fusion, images can be blurry in poor weather conditions. |
| It increases situational and conditional awareness. | In night-condition photos, it is difficult to perform image fusion. |
| Image fusion enables one to read small signs in different images (applications). | For good visualization of images, it requires multi-sensor or multi-view fusion. |
| Image enhancement from different perspectives leads to better contrast. | |

A Brief Recap

Image fusion is an important technique for the integration and evaluation of data from multiple sources (sensors). It has many applications in computer vision, medical imaging, and remote sensing.

Fusing images, even ones that exhibit complex nonlinear distortions, can contribute to the robustness of complex computer vision methods.

Here are some additional resources to read more about computer vision tasks and learn more about the tasks performed in IF.

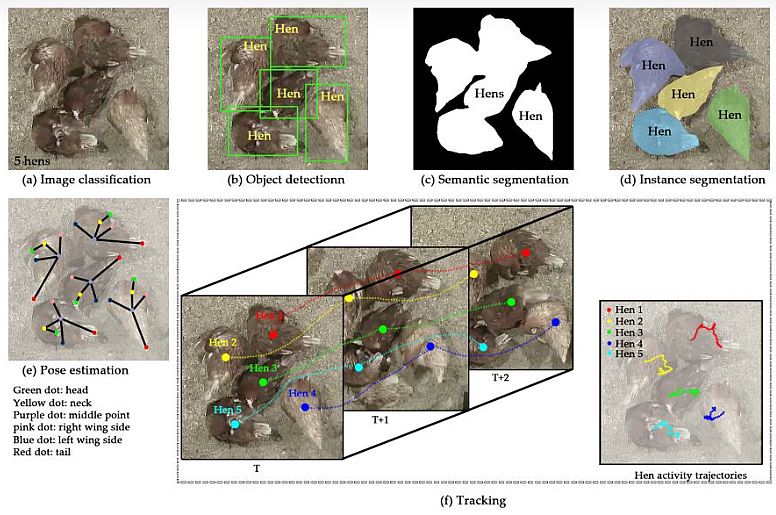

- Object Localization and Image Localization

- Grounded-SAM Explained: A New Image Segmentation Paradigm?

- Image Registration and Its Applications

- Image Data Augmentation for Computer Vision

- Image Annotation: Best Software Tools and Solutions

- Machine Vision – What You Need to Know (Overview)