AI emotion recognition is a very active field of computer vision research that involves facial emotion detection and the automatic assessment of sentiment from visual data and text. Human-machine interaction is an important area of research in which machine learning algorithms with visual perception aim to understand how humans interact.

We’ll cover the following technology, trends, examples, and applications:

- What is Emotion AI?

- How does visual AI Emotion Recognition work?

- Facial Emotion Recognition Datasets

- What Emotions Can AI Detect?

- State-of-the-art emotion AI Algorithms

- Outlook, current research, and applications

What Is AI Emotion Recognition?

What is Emotion AI?

Emotion AI, also called Affective Computing, is a rapidly growing branch of Artificial Intelligence that allows computers to analyze and understand human language and nonverbal signals, such as facial expressions, body language, gestures, and tone of voice, to assess a person’s emotional state. Visual Emotion AI uses computer vision technology, typically deep neural networks for face detection and analysis, to interpret facial appearance in images and videos and infer an individual’s emotional state.

Visual AI Emotion Recognition

Emotion recognition is the task of enabling machines to analyze, interpret, and classify human emotions through the analysis of facial features.

Among all high-level vision tasks, Visual Emotion Analysis (VEA) is one of the most challenging because of the affective gap between low-level pixels and high-level emotions. Despite this difficulty, visual emotion analysis remains promising, as understanding human emotions is a crucial step towards strong artificial intelligence. With the rapid development of Convolutional Neural Networks (CNNs), deep learning has become the method of choice for emotion analysis tasks.

How AI Emotion Recognition and Analysis Works

On a high level, an AI emotion application or vision system includes the following steps:

- Step #1: Acquire the image frame from a camera feed (IP, CCTV, USB camera).

- Step #2: Preprocessing of the image (cropping, resizing, rotating, color correction).

- Step #3: Extract the important features with a CNN model.

- Step #4: Perform emotion classification.

AI-based emotion recognition builds on three sequential steps:

1. Face Detection in Images and Video Frames

In the first step, frames from a camera video stream are used to detect and localize human faces. Bounding box coordinates indicate the exact location of each face in real time. Face detection is still challenging, and there is no guarantee that all faces in a given input image will be detected, especially in uncontrolled environments with difficult lighting conditions, varying head poses, large distances, or occlusion.
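The detector itself is not shown here. As a minimal sketch, assuming the detector returns boxes as `(x, y, width, height)` in pixels (the convention used, for example, by OpenCV's `detectMultiScale`) and representing a grayscale frame as a plain list of pixel rows, the hypothetical `crop_face` helper below cuts out a detected face and clips the box to the frame borders:

```python
from typing import List, Tuple

Image = List[List[int]]          # grayscale frame as rows of intensities (0-255)
Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixel coordinates


def crop_face(image: Image, box: Box, margin: float = 0.0) -> Image:
    """Crop a detected face, optionally enlarging the box by a relative margin.

    The box is clipped to the image borders, because detectors can return
    coordinates that extend past the frame for faces near the edge.
    """
    height, width = len(image), len(image[0])
    x, y, w, h = box
    dx, dy = int(w * margin), int(h * margin)
    left, top = max(0, x - dx), max(0, y - dy)
    right, bottom = min(width, x + w + dx), min(height, y + h + dy)
    if right <= left or bottom <= top:
        return []  # the box lies completely outside the image
    return [row[left:right] for row in image[top:bottom]]
```

The optional `margin` enlarges the box by a fraction of its size, which some pipelines use to keep the chin and forehead inside the crop; whether this helps depends on the classifier that follows.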

2. Image Preprocessing

Once faces are detected, the image data is optimized before it is fed into the emotion classifier. This step can considerably improve classification accuracy. Image preprocessing usually includes multiple substeps, such as normalizing for illumination changes, reducing noise, smoothing, correcting rotation, resizing, and cropping the image.
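Two of these substeps, illumination normalization and resizing, can be sketched in plain Python. This is an illustrative version under stated assumptions: histogram equalization stands in for illumination normalization, nearest-neighbour sampling for resizing, and the default target size of 48 × 48 pixels matches the FER-2013 image size. The helper names are hypothetical.

```python
from typing import List

Image = List[List[int]]  # 8-bit grayscale image, rows of values in 0..255


def equalize_histogram(image: Image) -> Image:
    """Spread intensities over 0..255 to reduce the effect of lighting changes."""
    pixels = [p for row in image for p in row]
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution function (CDF) of the intensities.
    cdf, total = [], 0
    for count in hist:
        total += count
        cdf.append(total)
    cdf_min = next(c for c in cdf if c > 0)
    n = len(pixels)
    if n == cdf_min:  # flat image: nothing to equalize
        return [row[:] for row in image]
    # Lookup table mapping each old intensity to its equalized value.
    lut = [round((c - cdf_min) / (n - cdf_min) * 255) if c > 0 else 0 for c in cdf]
    return [[lut[p] for p in row] for row in image]


def resize_nearest(image: Image, size: int) -> Image:
    """Resize to size x size pixels with nearest-neighbour sampling."""
    h, w = len(image), len(image[0])
    return [[image[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def preprocess(face: Image, size: int = 48) -> List[List[float]]:
    """Equalize, resize, and scale pixel values to [0, 1] for the classifier."""
    resized = resize_nearest(equalize_histogram(face), size)
    return [[p / 255.0 for p in row] for row in resized]
```

Production pipelines usually rely on an image library for these operations; the plain version only makes the individual transformations visible.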

3. Emotion Classification AI Model

After preprocessing, the relevant features are extracted from the preprocessed face regions. There are different methods to extract facial features, for example, Action Units (AU), the motion of facial landmarks, distances between facial landmarks, gradient features, facial texture, and more.
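Distances between facial landmarks are one of the simplest of these feature types. A minimal sketch, assuming a landmark detector (not shown) has already returned named 2D points; the landmark names and the normalization by inter-ocular distance are illustrative choices, not a specific published feature set:

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def distance_features(landmarks: Dict[str, Point],
                      left_eye: str = "left_eye",
                      right_eye: str = "right_eye") -> List[float]:
    """Pairwise landmark distances, divided by the inter-ocular distance.

    Dividing by the eye distance makes the features independent of the face
    size in the image (and hence of the distance to the camera).
    """
    scale = math.dist(landmarks[left_eye], landmarks[right_eye])
    if scale == 0:
        raise ValueError("eye landmarks coincide; cannot normalize")
    names = sorted(landmarks)  # fixed order so feature vectors are comparable
    return [math.dist(landmarks[a], landmarks[b]) / scale
            for a, b in combinations(names, 2)]
```

For example, with the hypothetical landmarks `{"left_eye": (0, 0), "right_eye": (4, 0), "mouth_left": (1, 6), "mouth_right": (3, 6)}`, the normalized mouth width is 0.5; a wider smile would increase it, which a downstream classifier can pick up.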

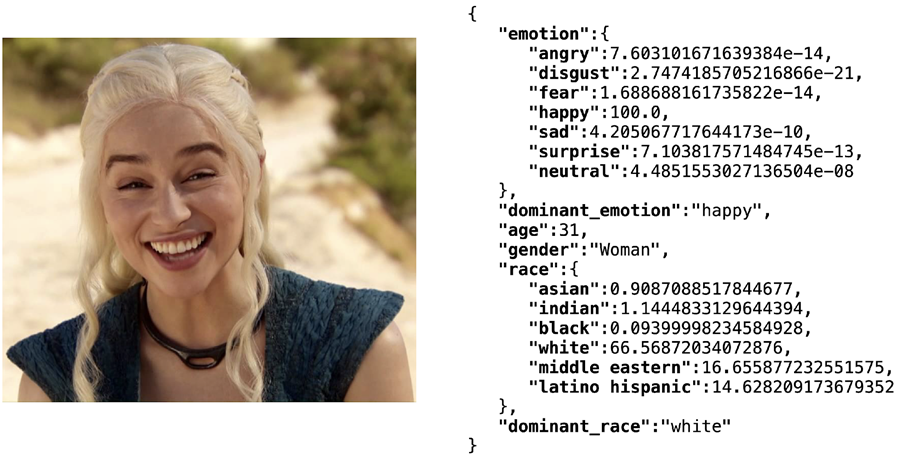

Commonly, the classifiers used for AI emotion recognition are based on Support Vector Machines (SVM) or Convolutional Neural Networks (CNN). Finally, each detected face is classified by its facial expression, that is, assigned a pre-defined class (label) such as “happy” or “neutral.”
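To illustrate how a trained classifier assigns the label, the sketch below applies one-vs-rest linear decision functions (the form a linear SVM takes at inference time) and picks the class with the highest score. The weights, biases, and two-element feature vector are made-up placeholders, not a trained model:

```python
from typing import Dict, List

# Hypothetical parameters, as if learned by one-vs-rest linear SVMs on a
# 2-dimensional feature vector; real models use many more features and classes.
WEIGHTS: Dict[str, List[float]] = {
    "happy": [2.0, -1.0],
    "neutral": [-0.5, 0.5],
    "sad": [-2.0, 1.5],
}
BIASES: Dict[str, float] = {"happy": -0.5, "neutral": 0.2, "sad": -0.3}


def decision_scores(features: List[float]) -> Dict[str, float]:
    """Signed score w . x + b of every one-vs-rest classifier."""
    return {label: sum(w * x for w, x in zip(weights, features)) + BIASES[label]
            for label, weights in WEIGHTS.items()}


def classify(features: List[float]) -> str:
    """Assign the label whose classifier returns the highest score."""
    scores = decision_scores(features)
    return max(scores, key=scores.get)
```

A CNN classifier ends in the same kind of per-class scores; only the way the features and weights are obtained differs.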

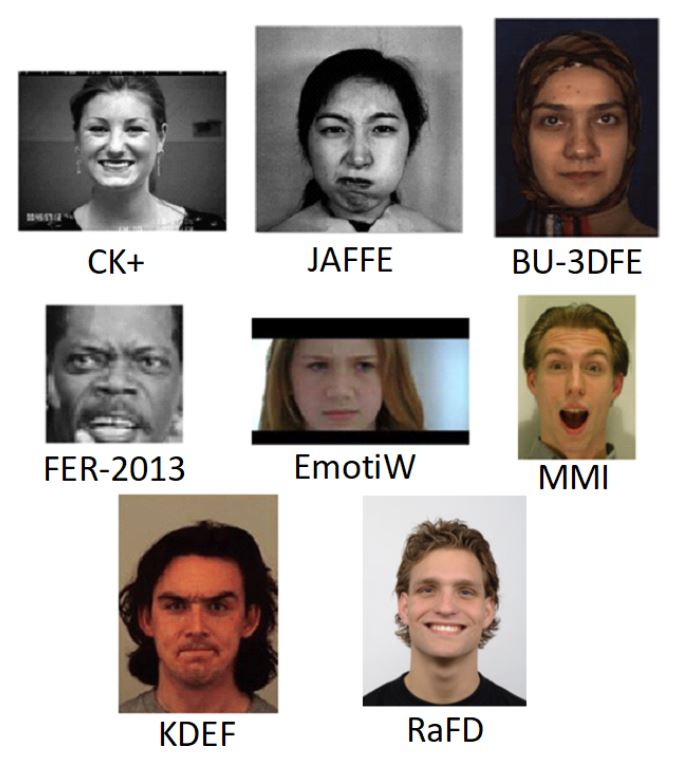

Facial AI Emotion Recognition Datasets

Most databases of emotion images are built on 2D static images or 2D video sequences; some contain 3D images. Since most 2D databases only contain frontal faces, algorithms only trained on those databases show poor performance for different head poses.

The most important databases for visual emotion recognition include:

- Extended Cohn–Kanade database (CK+): 593 videos, Posed Emotion, Controlled Environment

- Japanese Female Facial Expression Database (JAFFE): 213 images, Posed Emotion, Controlled Environment

- Binghamton University 3D Facial Expression database (BU-3DFE): 606 videos, Posed and Spontaneous Emotion, Controlled Environment

- Facial Expression Recognition 2013 database (FER-2013): 35’887 images, Posed and Spontaneous Emotion, Uncontrolled Environment

- Emotion Recognition in the Wild database (EmotiW): 1’268 videos and 700 images, Spontaneous Emotion, Uncontrolled Environment

- MMI database: 2’900 videos, Posed Emotion, Controlled Environment

- eNTERFACE’05 Audiovisual Emotion database: 1’166 videos, Spontaneous Emotion, Controlled Environment

- Karolinska Directed Emotional Faces database (KDEF): 4’900 images, Posed Emotion, Controlled Environment

- Radboud Faces Database (RaFD): 8’040 images, Posed Emotion, Controlled Environment
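FER-2013 is commonly distributed as a single CSV file (often named `fer2013.csv`) with the columns `emotion`, `pixels`, and `Usage`, where `pixels` holds 48 × 48 = 2304 space-separated grayscale values. Assuming that layout, a minimal reader could look like this (the class and function names are hypothetical):

```python
import csv
from typing import Iterable, Iterator, List, NamedTuple

SIZE = 48  # FER-2013 images are 48 x 48 pixels, grayscale


class FerSample(NamedTuple):
    label: int               # emotion class index (0-6)
    pixels: List[List[int]]  # 48 rows of 48 intensities in 0..255
    usage: str               # split name, e.g. "Training" or "PublicTest"


def read_fer2013(lines: Iterable[str]) -> Iterator[FerSample]:
    """Yield samples from CSV text with the columns emotion, pixels, Usage."""
    for row in csv.DictReader(lines):
        values = [int(v) for v in row["pixels"].split()]
        if len(values) != SIZE * SIZE:
            raise ValueError(f"expected {SIZE * SIZE} pixels, got {len(values)}")
        # Reshape the flat pixel list into 48 rows of 48 values.
        grid = [values[r * SIZE:(r + 1) * SIZE] for r in range(SIZE)]
        yield FerSample(int(row["emotion"]), grid, row["Usage"])
```

An open file object also works as `lines`, for example `with open("fer2013.csv", newline="") as f:` followed by `train = [s for s in read_fer2013(f) if s.usage == "Training"]`; the file name is an assumption.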

What Emotions Can AI Detect?

The emotions or sentiment expressions that an AI model can detect depend on the trained classes. Most emotion or sentiment databases are labeled with the following emotions:

- Emotion #1: Anger

- Emotion #2: Disgust

- Emotion #3: Fear

- Emotion #4: Happiness

- Emotion #5: Sadness

- Emotion #6: Surprise

- Emotion #7: Neutral expression
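A model trained on such a dataset outputs one score per class. In FER-2013, for instance, the integer labels 0 to 6 correspond to anger, disgust, fear, happiness, sadness, surprise, and neutral, in the same order as the list above. As a sketch, assuming a seven-way model output in that order, a softmax turns the raw scores into a probability for each named emotion:

```python
import math
from typing import Dict, List

# Class order of the FER-2013 labels (index 0..6); other datasets may differ.
EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"]


def softmax(logits: List[float]) -> List[float]:
    """Turn raw model outputs into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_emotion(logits: List[float]) -> Dict[str, float]:
    """Map a 7-way model output to named emotion probabilities."""
    if len(logits) != len(EMOTIONS):
        raise ValueError(f"expected {len(EMOTIONS)} outputs, got {len(logits)}")
    return dict(zip(EMOTIONS, softmax(logits)))
```

Reporting the full distribution rather than only the top label is useful because facial expressions are often ambiguous, for example between fear and surprise.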

State-of-the-Art in AI Emotion Recognition Analysis Technology

Interest in facial emotion recognition keeps growing, and new algorithms and methods are being introduced. Recent advances in supervised and unsupervised machine learning techniques have brought breakthroughs in the field, and increasingly accurate systems emerge every year. However, despite considerable progress, emotion detection remains a major challenge.

Before 2014 – Traditional Computer Vision

Several methods have been applied to this challenging yet important problem. Early methods relied on manually designed, hand-crafted features inspired by psychological and neurological theories. These features included color, texture, composition, emphasis, balance, and more.
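To give a flavor of such hand-crafted features, the sketch below computes a simple color descriptor with Python's standard `colorsys` module: mean saturation, mean brightness, and a coarse hue histogram. It is an illustrative subset assumed for this example, not the exact feature set of any published method:

```python
import colorsys
from typing import List, Tuple

RGB = Tuple[int, int, int]  # 8-bit red, green, blue


def color_features(pixels: List[RGB], hue_bins: int = 6) -> List[float]:
    """Hand-crafted color descriptor: mean saturation, mean brightness,
    and a normalized hue histogram with `hue_bins` bins."""
    if not pixels:
        raise ValueError("empty image")
    sat_sum = val_sum = 0.0
    hist = [0] * hue_bins
    for r, g, b in pixels:
        # colorsys expects channel values in [0, 1] and returns (h, s, v) in [0, 1].
        h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
        sat_sum += s
        val_sum += v
        hist[min(int(h * hue_bins), hue_bins - 1)] += 1
    n = len(pixels)
    return [sat_sum / n, val_sum / n] + [count / n for count in hist]
```

Such a vector would typically be fed into a classical classifier such as an SVM. Its weakness is the one described next: a few fixed numbers cannot capture everything that makes an image emotional.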

The early attempts that focused on a limited set of specific features failed to cover all important emotional factors and did not achieve sufficient results on large-scale datasets. Unsurprisingly, modern deep learning methods outperform traditional computer vision methods.

After 2014 – Deep Learning Methods for AI Emotion Analysis

Deep learning algorithms are based on neural network models in which connected layers of artificial neurons process data, loosely inspired by the human brain. Deep neural networks stack multiple hidden layers, so that each layer learns increasingly abstract features from the output of the previous one, forming a hierarchy of features. Convolutional neural networks (CNNs) are the most popular form of artificial neural networks for image processing tasks.
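The core operation of a CNN layer can be shown on a toy example: a small kernel slides over the image and produces a feature map, followed by a ReLU non-linearity. In a real CNN the kernels are learned and there are many channels; the hand-set vertical-edge kernel used here is only an illustrative assumption:

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution as computed in CNN layers (no padding, stride 1).

    Like most deep learning frameworks, this computes a cross-correlation,
    i.e. the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def relu(feature_map: Matrix) -> Matrix:
    """Non-linearity applied after a convolution: negative responses become 0."""
    return [[max(0.0, v) for v in row] for row in feature_map]
```

For a 3 × 4 image whose right half is bright, such as `[[0, 0, 9, 9]] * 3`, the kernel `[[-1, 1], [-1, 1]]` responds only at the column where dark turns into bright; deeper layers combine such responses into more abstract features.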

CNNs achieve good overall results in AI emotion recognition tasks. For emotion recognition, widely used CNN backbones, including AlexNet, VGG-16, and ResNet50, are initialized with parameters pre-trained on ImageNet and then fine-tuned on the FI (Flickr and Instagram) dataset.

Since 2020 – Specialized Neural Networks for Visual Emotion Analysis

Most methods are based on convolutional neural networks that learn sentiment representations from complete images, although different image regions and image contexts can have a different impact on evoked sentiment.

Therefore, researchers developed neural networks specifically for visual emotion analysis on top of CNN backbones, such as MldrNet and WSCNet.

One such method, developed in mid-2020, is the “Weakly Supervised Coupled Convolutional Network”, or WSCNet. It automatically selects relevant soft proposals given only weak annotations, such as global image labels. The model computes a sentiment-specific soft map and couples it with the deep features to form a semantic vector for the classification branch. WSCNet outperformed previous state-of-the-art results on various benchmark datasets.
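The coupling idea can be illustrated with a simplified sketch. It assumes a single soft map with one weight per spatial position that scales every feature channel element-wise, followed by global average pooling, with the result concatenated to the pooled unweighted features. This simplification is for illustration only and does not reproduce WSCNet's detection branch or its exact architecture:

```python
from typing import List

FeatureMaps = List[List[List[float]]]  # channels x height x width
SoftMap = List[List[float]]            # height x width, weights in [0, 1]


def global_average_pool(maps: FeatureMaps) -> List[float]:
    """Average every channel over all spatial positions."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in maps]


def couple(features: FeatureMaps, soft_map: SoftMap) -> List[float]:
    """Weight each feature map by the soft map, pool, and append the result
    to the pooled unweighted features (simplified coupling step)."""
    weighted = [[[f * m for f, m in zip(f_row, m_row)]
                 for f_row, m_row in zip(channel, soft_map)]
                for channel in features]
    return global_average_pool(features) + global_average_pool(weighted)
```

The weighted half of the vector emphasizes regions the soft map marks as sentiment-relevant, while the unweighted half keeps the global image context; a classifier then operates on the concatenation.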

Comparison of State-of-the-art methods for AI emotion analysis

Accuracy commonly differs between databases recorded in controlled environments and those collected in the wild. As a result, good results in controlled environments (CK+, JAFFE, etc.) are difficult to transfer to uncontrolled environments (SFEW, FER-2013, etc.). For example, a model that obtains 98.9% accuracy on the CK+ database achieves only 55.27% on the SFEW (Static Facial Expressions in the Wild) database. This is mainly due to head pose variation and varying lighting conditions in real-world scenarios.
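Accuracy here is simply the fraction of correctly classified samples. A short sketch of that metric, together with the size of the gap in the example above (the two percentages are taken from the text; the function name is illustrative):

```python
from typing import Sequence


def accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Share of samples whose predicted label matches the ground truth."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


# Accuracies quoted in the text for the same model, in percent.
ck_plus, sfew = 98.9, 55.27
gap = ck_plus - sfew  # about 43.6 percentage points lost in the wild
```

Because accuracy ignores class imbalance, benchmarks on skewed datasets are often complemented by per-class metrics; the gap above, however, is large enough to dominate any such refinement.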

The classification accuracy of different emotion analysis methods can be compared and benchmarked on a large-scale dataset such as FI, which contains over 3 million weakly labeled images:

- Algorithm #1: SentiBank (Hand-crafted), 49.23%

- Algorithm #2: Zhao et al. (Hand-crafted), 49.13%

- Algorithm #3: AlexNet (CNN, fine-tuned), 59.85%

- Algorithm #4: VGG-16 (CNN, fine-tuned), 65.52%

- Algorithm #5: ResNet-50 (CNN, fine-tuned), 67.53%

- Algorithm #6: MldrNet, 65.23%

- Algorithm #7: WILDCAT, 67.03%

- Algorithm #8: WSCNet, 70.07%
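The listed results can be ranked programmatically to make the comparison explicit. The values below are copied from the list; the script sorts them and computes the margin of WSCNet over the best fine-tuned CNN backbone (ResNet-50), about 2.5 percentage points:

```python
# Accuracy on FI (in percent), as listed in the text.
results = {
    "SentiBank": 49.23, "Zhao et al.": 49.13, "AlexNet": 59.85,
    "VGG-16": 65.52, "ResNet-50": 67.53, "MldrNet": 65.23,
    "WILDCAT": 67.03, "WSCNet": 70.07,
}

# Sort methods from best to worst accuracy.
ranking = sorted(results.items(), key=lambda item: item[1], reverse=True)
best_cnn = max(results[m] for m in ("AlexNet", "VGG-16", "ResNet-50"))
gain = results["WSCNet"] - best_cnn

for rank, (method, acc) in enumerate(ranking, start=1):
    print(f"{rank}. {method:<12} {acc:.2f}%")
print(f"WSCNet vs. best fine-tuned CNN: +{gain:.2f} points")
```

The ranking also shows the larger jump of roughly 10 to 20 points from the hand-crafted methods to the fine-tuned CNNs.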

AI Emotion Recognition on Edge Devices

Deploying emotion recognition models on resource-constrained edge devices is a major challenge, mainly due to their computational cost. Edge AI moves machine learning onto edge devices, where large amounts of visual data are produced that cannot be processed with server-based solutions.

Highly optimized models make it possible to run AI emotion analysis on different types of edge devices, such as edge accelerators (for example, an Nvidia Jetson device) and even smartphones. Real-time inference with scalable Edge Intelligence is possible but challenging, and several factors and strategies determine its success:

- Pre-training on different datasets for emotion recognition can improve performance at no additional cost after deployment.

- Dimensionality reduction achieves a trade-off between performance and computational requirements.

- Model shrinking with pruning and other model compression strategies is a promising solution. Deploying trained models on embedded systems nevertheless remains challenging: large pre-trained models often cannot be deployed and customized because of their high computational requirements and model size.
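Two common compression ideas, magnitude pruning and 8-bit quantization, are sketched below on a flat list of weights. These are generic textbook versions written for illustration; real toolchains for edge devices apply them per layer, often with calibration or fine-tuning, and the helper names are hypothetical:

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric 8-bit quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from their 8-bit codes."""
    return [scale * v for v in q]
```

Storing 8-bit codes instead of 32-bit floats cuts weight memory by about a factor of four, and pruned zeros can be skipped by sparse kernels; both trade a small accuracy loss for lower compute and memory on the device.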

Decentralized, edge-based sentiment analysis and emotion recognition enable private data processing, because visual data does not have to be offloaded to a server. However, privacy concerns still arise when emotion analysis is used for user profiling.

Outlook and current research in Emotion Recognition

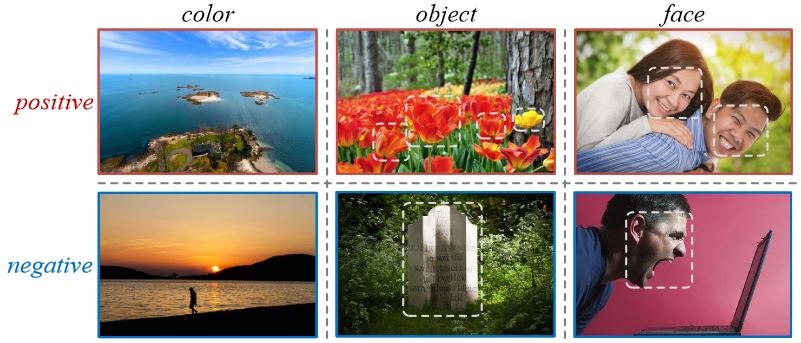

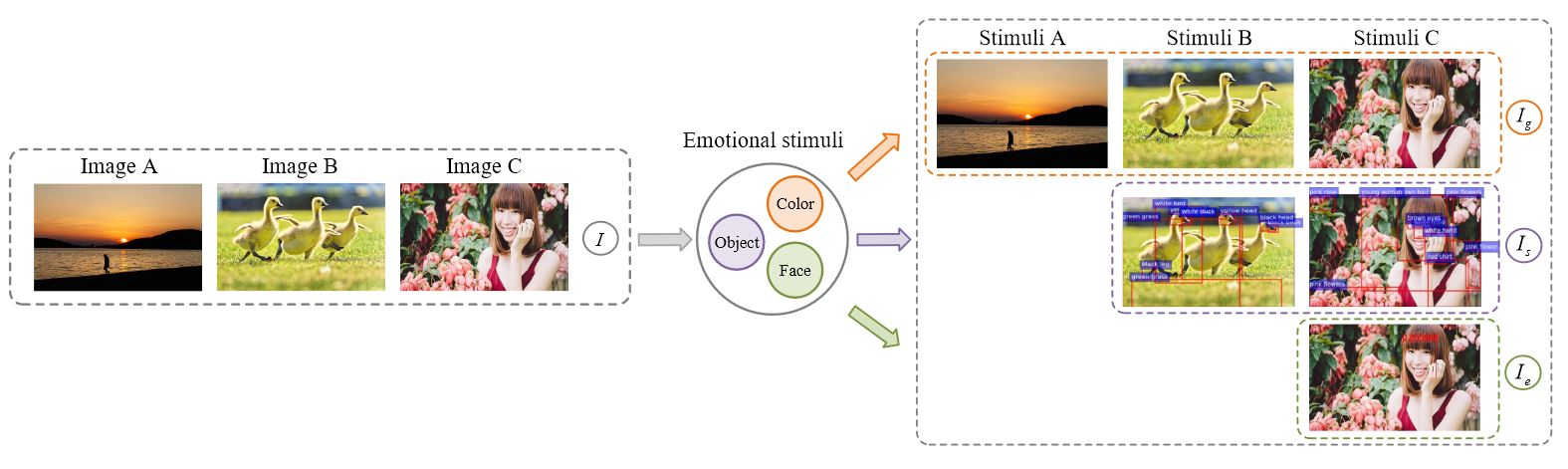

Recent 2021 research on Visual Emotion Analysis introduced stimulus-aware emotion recognition, which outperforms state-of-the-art methods on visual emotion datasets. The method detects an entire set of emotional stimuli (such as color, objects, or faces) that can evoke different emotions (positive, negative, or neutral).

Although the approach is comparatively complex and computationally resource-intensive, it achieves slightly higher accuracy on the FI dataset (72%) than WSCNet (70.07%).

The approach detects external factors and stimuli, based on psychological theory, by analyzing color, detected objects, and facial emotion in images. As a result, an affective image is analyzed as a set of emotional stimuli that can then be used for emotion prediction.

Applications of AI Emotion Recognition and Sentiment Analysis

There is a growing demand for various types of sentiment analysis in the AI and computer vision market. Although visual emotion analysis is not yet widely used at large scale, solving its tasks is expected to have a major impact on real-world applications.

Opinion Mining and Customer Service Analysis

Opinion mining, or sentiment analysis, aims to extract people’s opinions, attitudes, and specific emotions from data. Conventional sentiment analysis concentrates primarily on text data (for example, customers’ online reviews or news articles). However, visual sentiment recognition is beginning to receive attention, since visual content such as images and videos has become popular for self-expression and product reviews on social networks. Opinion mining is an important strategy in smart advertising, as it helps companies measure their brand reputation and analyze the reception of their products or services.
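For brand monitoring, per-image predictions are typically aggregated. As a minimal sketch, assuming each image has already been classified as positive, neutral, or negative, the share of each class and a simple net score (share of positive minus share of negative) could be computed as follows; the labels and the net score definition are assumptions for illustration:

```python
from collections import Counter
from typing import Dict, Iterable


def sentiment_summary(labels: Iterable[str]) -> Dict[str, float]:
    """Share of each sentiment class plus a net score in [-1, 1].

    Labels outside the three classes count toward the total only.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no predictions to summarize")
    shares = {k: counts[k] / total for k in ("positive", "neutral", "negative")}
    shares["net"] = shares["positive"] - shares["negative"]
    return shares
```

Tracking the net score over time, or per product, turns many individual image predictions into a single indicator of brand reception.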

Additionally, customer service can be improved in two ways. First, the computer vision system can analyze the sentiment of service representatives and provide feedback on how they can improve their interactions. Second, customers can be analyzed in stores or during other interactions with staff to understand whether their shopping experience was overall positive or negative, or whether they experienced happiness or sadness. Retailers can then turn these customer sentiments into concrete pointers for improving the customer experience.

Medical Sentiment Analysis

Medical sentiment classification concerns the patient’s health status, medical conditions, and treatment. Its analysis and extraction have multiple applications in mental disease treatment, remote medical services, and human-computer interaction.

Emotional Relationship Recognition

Recent research has developed an approach that recognizes people’s emotional states to perform pairwise emotional relationship recognition. The challenge is to characterize the emotional relationship between two interacting characters using AI-based video analytics.

What’s Next for AI Emotion Recognition and Sentiment Analysis With Computer Vision?

Sentiment analysis and emotion recognition are key tasks to build empathetic systems and human-computer interaction based on user emotion. Since deep learning solutions were originally designed for servers with unlimited resources, real-world deployment to edge devices is a challenge (Edge AI). However, real-time inference of emotion recognition systems allows the implementation of large-scale solutions.

If you are looking for an enterprise-grade computer vision platform to deliver computer vision rapidly with no code and automation, check out Viso Suite. Industry leaders use it to build, deploy, monitor, and maintain their AI applications. Get a demo for your organization.

Read More about AI Emotion Recognition

Read more, and check out related articles: