MediaPipe is an open-source framework from Google for building pipelines to perform computer vision inference over arbitrary sensory data such as video or audio. Using MediaPipe, such a perception pipeline can be built as a graph of modular components.

At the time of writing, MediaPipe is in alpha (v0.7), so breaking API changes are still possible. Stable APIs are expected with v1.0.

What is a Computer Vision Pipeline?

In computer vision pipelines, these components include model inference, media processing algorithms, and data transformations. Sensory data such as video streams enters the graph, and perceived descriptions such as object-localization or face-keypoint streams exit the graph.

Who Can Use MediaPipe?

MediaPipe was built for AI and machine learning (ML) teams and software developers who implement production-ready ML applications, as well as for students and researchers who publish code and prototypes as part of their research work.

What is MediaPipe Used for?

The MediaPipe framework is mainly used for rapid prototyping of perception pipelines built from AI inference models and other reusable components. It also makes it easier to deploy computer vision pipelines into demos and applications on different hardware platforms.

The configuration language and evaluation tools enable teams to incrementally improve computer vision pipelines.

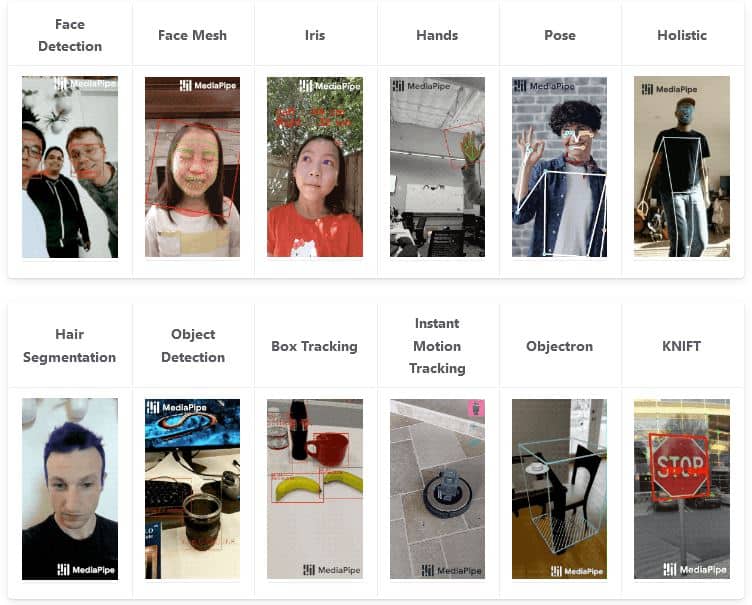

What ML Solutions Can You Build in MediaPipe?

| Solution | C++ | JS | Python | Android | iOS | Coral |
|---|---|---|---|---|---|---|
| Face Detection | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Face Mesh | ✔ | ✔ | ✔ | ✔ | ✔ | |
| Iris Detection | ✔ | | | ✔ | ✔ | |
| Hands Detection | ✔ | ✔ | ✔ | ✔ | ✔ | |
| Pose Detection | ✔ | ✔ | ✔ | ✔ | ✔ | |
| Holistic | ✔ | ✔ | ✔ | ✔ | ✔ | |
| Selfie Segmentation | ✔ | ✔ | ✔ | ✔ | ✔ | |
| Hair Segmentation | ✔ | | | ✔ | | |
| Object Detection | ✔ | | | ✔ | ✔ | ✔ |
| Box Tracking | ✔ | | | ✔ | ✔ | |
| Instant Motion Tracking | | | | ✔ | | |
| Objectron (3D) | ✔ | ✔ | ✔ | ✔ | | |
| KNIFT | | | | ✔ | | |
| AutoFlip | ✔ | | | | | |
| MediaSequence | ✔ | | | | | |
| YouTube 8M | ✔ | | | | | |

Most MediaPipe solutions rely on TensorFlow Lite (TFLite) models, for example for hand, iris, and face landmark detection, palm detection, pose detection, template-based feature matching (KNIFT), and 3D object detection (Objectron).

What are the Advantages of MediaPipe?

- End-to-end acceleration: Built-in fast ML inference and video processing, accelerated on common hardware such as CPUs, GPUs, and TPUs.

- Build once, deploy anywhere: The unified framework is suitable for Android, iOS, desktop, edge, cloud, web, and IoT platforms.

- Ready-to-use solutions: Prebuilt ML solutions demonstrate the full power of the MediaPipe framework.

- Open source and free: The framework is licensed under Apache 2.0, fully extensible, and customizable.

How to Use MediaPipe

Start Developing with MediaPipe

MediaPipe provides example code and demos for MediaPipe in Python and MediaPipe in JavaScript. The MediaPipe solutions can be run with only a few lines of code.

To further customize the solutions and build your own, use MediaPipe in C++, Android, and iOS. To get started, install MediaPipe and build the example applications in C++ or on mobile. The source code is available in the MediaPipe GitHub repository.

Can I use MediaPipe on Windows?

At the time of writing, MediaPipe officially supports Ubuntu Linux, Debian Linux, macOS, iOS, and Android, so Windows is not among the officially supported platforms. Because the framework is built on a C++ library (C++11), it is relatively easy to port to additional platforms.

Business Applications – MediaPipe with Viso Suite

The Viso Suite enterprise computer vision platform seamlessly integrates MediaPipe. It adds features for faster development, infrastructure scaling, edge device management, model training, and more, covering the entire AI vision application lifecycle beyond what MediaPipe itself provides.

AI Models vs. Applications

Typically, image or video input data is fetched as separate streams and analyzed by deep neural networks built with frameworks such as TensorFlow, PyTorch, CNTK, or MXNet. Such models process data in a simple, deterministic way: one input produces one output, which allows very efficient execution.

MediaPipe, on the other hand, operates at a much higher level of semantics and allows more complex and dynamic behavior. For example, one input can generate zero, one, or multiple outputs, which cannot be modeled with neural networks. Video processing and AI perception also call for streaming processing rather than batch processing.
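To make the contrast concrete, here is a toy Python sketch (not MediaPipe's API): a model step behaves like a pure function with exactly one output per input, while a calculator-style step can be modeled as a generator that yields zero, one, or several outputs per input. The `detect_faces` stub, which treats a pixel value of 255 as a "face", is a hypothetical placeholder.

```python
from typing import Iterator, List


def classify(frame: List[int]) -> str:
    """Model-style step: exactly one output per input frame."""
    return "bright" if sum(frame) / len(frame) > 127 else "dark"


def detect_faces(frame: List[int]) -> Iterator[int]:
    """Calculator-style step: yields one result per detection, so a single
    frame can produce zero, one, or many outputs. A pixel value of 255
    stands in for a detected face (hypothetical placeholder logic)."""
    for index, value in enumerate(frame):
        if value == 255:
            yield index


frames = [[0, 10, 20], [255, 0, 0], [255, 255, 40]]
print([classify(f) for f in frames])            # ['dark', 'dark', 'bright']
print([list(detect_faces(f)) for f in frames])  # [[], [0], [0, 1]]
```

The first list always has one entry per frame; the second shows how the number of outputs per input varies, which is what a fixed one-in, one-out model cannot express.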

OpenCV 4.0 introduced the Graph API to build sequences of OpenCV image processing operations in the form of a graph. In contrast, MediaPipe allows operations on arbitrary data types and has native support for streaming time-series data, making it much more suitable for analyzing audio and sensor data.

The Concept of MediaPipe

The MediaPipe framework consists of three main elements:

- A framework for inference from sensory data (audio or video)

- A set of tools for performance evaluation, and

- Reusable components for inference and processing (calculators)

The main components of MediaPipe:

- Packet: The basic data flow unit is called a “packet.” It consists of a numeric timestamp and a shared pointer to an immutable payload.

- Graph: Processing takes place inside a graph, which defines the flow paths of packets between nodes. A graph can have any number of inputs and outputs, and branch or merge data.

- Nodes: Nodes are where the bulk of the graph’s work takes place. They are also called “calculators” (for historical reasons) and produce or consume packets. Each node’s interface defines several input and output ports.

- Streams: A stream is a connection between two nodes that carries a sequence of packets with increasing timestamps.
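A minimal Python sketch of packets and streams, assuming simplified semantics: a packet pairs an integer timestamp with a payload (a frozen dataclass stands in for the shared pointer to an immutable payload), and a stream rejects any packet whose timestamp is not strictly greater than the previous one. The class names mirror the terms above but are hypothetical, not MediaPipe's Python API.

```python
from dataclasses import dataclass
from typing import Any, List, Optional


@dataclass(frozen=True)
class Packet:
    """Timestamp plus payload; frozen so fields cannot be reassigned after creation."""
    timestamp: int
    payload: Any


class Stream:
    """Carries packets between two nodes in strictly increasing timestamp order."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.packets: List[Packet] = []
        self._last_ts: Optional[int] = None

    def add(self, packet: Packet) -> None:
        # Enforce the invariant that timestamps only move forward.
        if self._last_ts is not None and packet.timestamp <= self._last_ts:
            raise ValueError(
                f"{self.name}: timestamp {packet.timestamp} is not greater "
                f"than {self._last_ts}"
            )
        self._last_ts = packet.timestamp
        self.packets.append(packet)


video = Stream("input_video")
video.add(Packet(0, "frame-0"))
video.add(Packet(33, "frame-1"))
try:
    video.add(Packet(33, "duplicate"))  # rejected: timestamp did not increase
except ValueError as err:
    print(err)
```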

There are more advanced components, such as side packets, packet ports, and input policies. To visualize a graph, copy and paste its configuration into the MediaPipe Visualizer.

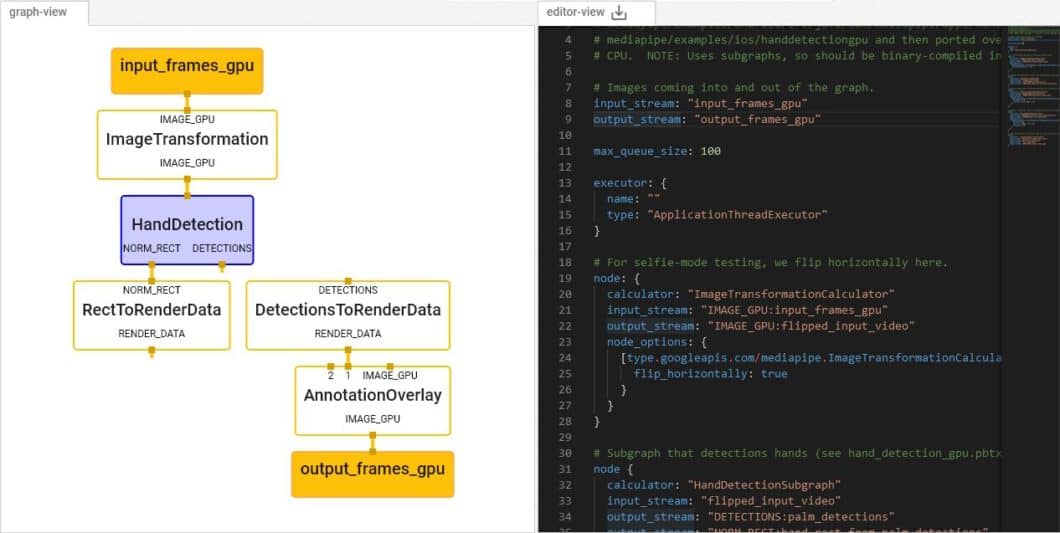

MediaPipe Architecture

MediaPipe allows a developer to prototype a pipeline incrementally. A vision pipeline is defined as a directed graph of components, where each component is a node (“Calculator”). In the graph, the calculators are connected by data “Streams.” Each stream represents a time series of data “Packets.”
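The data-flow wiring described above can be sketched in plain Python, assuming a hypothetical `Node` record that names its input streams and its single output stream. The runner orders nodes topologically with the standard library's `graphlib` (Python 3.9+) and pushes one value through a graph that branches and then merges. It illustrates how calculators connect through named streams only; it is not how MediaPipe's C++ scheduler works.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter
from typing import Callable, Dict, List


@dataclass
class Node:
    """Hypothetical calculator: reads named input streams, writes one output stream."""
    name: str
    inputs: List[str]
    output: str
    fn: Callable[..., object]


def run_graph(nodes: List[Node], sources: Dict[str, object]) -> Dict[str, object]:
    # Map each stream name to the node that produces it.
    producer = {n.output: n.name for n in nodes}
    # A node depends on the producers of its inputs; graph inputs have no producer.
    deps = {n.name: {producer[s] for s in n.inputs if s in producer} for n in nodes}
    by_name = {n.name: n for n in nodes}
    values = dict(sources)
    # Run each node only after all of its upstream nodes have produced output.
    for name in TopologicalSorter(deps).static_order():
        node = by_name[name]
        values[node.output] = node.fn(*(values[s] for s in node.inputs))
    return values


# One input stream branches into two nodes whose outputs are merged again.
graph = [
    Node("Scale", ["input_video"], "scaled", lambda x: x * 2),
    Node("Offset", ["input_video"], "offset", lambda x: x + 1),
    Node("Merge", ["scaled", "offset"], "output", lambda a, b: (a, b)),
]
print(run_graph(graph, {"input_video": 10})["output"])  # (20, 11)
```

Inserting or replacing a calculator in this model only means editing the `graph` list, which mirrors the incremental refinement the article describes.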

Together, the calculators and streams define a data-flow graph. The packets that flow across the graph are collated by their timestamps within the time series. Each input stream maintains its own queue, allowing the receiving node to consume packets at its own pace.
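Timestamp collation can be approximated with one FIFO queue per input stream: the receiving node fires only once every queue holds a packet with the same timestamp. This is a simplified, hypothetical sketch; MediaPipe's actual default input policy is more involved (it tracks timestamp bounds and can invoke a node with some inputs empty), whereas this version simply discards packets that can no longer be matched.

```python
from collections import deque
from typing import Any, Deque, Dict, List, Optional, Tuple


class SyncedInputs:
    """One queue per input stream; emits timestamp-aligned sets of inputs."""

    def __init__(self, stream_names: List[str]) -> None:
        self.queues: Dict[str, Deque[Tuple[int, Any]]] = {
            name: deque() for name in stream_names
        }

    def push(self, stream: str, timestamp: int, payload: Any) -> None:
        self.queues[stream].append((timestamp, payload))

    def pop_ready(self) -> Optional[Tuple[int, Dict[str, Any]]]:
        """Return (timestamp, {stream: payload}) once all queue heads agree."""
        while all(self.queues.values()):
            heads = [q[0][0] for q in self.queues.values()]
            newest = max(heads)
            if min(heads) == newest:
                return newest, {name: q.popleft()[1] for name, q in self.queues.items()}
            # Timestamps only increase per stream, so heads older than the
            # newest head can never be matched; drop them.
            for q in self.queues.values():
                if q[0][0] < newest:
                    q.popleft()
        return None


sync = SyncedInputs(["video", "detections"])
sync.push("video", 0, "frame-0")
sync.push("video", 33, "frame-1")
sync.push("detections", 33, ["face"])  # no detections packet arrived for t=0
print(sync.pop_ready())  # (33, {'video': 'frame-1', 'detections': ['face']})
print(sync.pop_ready())  # None
```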

The pipeline can be refined incrementally by inserting or replacing calculators anywhere in the graph. Developers can also define custom calculators. While the graph executes calculators in parallel, each calculator executes on at most one thread at a time. This constraint ensures that the custom calculators can be defined without specialized expertise in multithreaded programming.
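One way to picture the one-thread-per-calculator guarantee is a thread pool that runs different calculators concurrently while a per-calculator lock serializes calls into any single calculator, so the calculator's own state needs no locking. The sketch below is a hypothetical Python illustration; its `open`/`process`/`close` lifecycle is loosely modeled on MediaPipe's C++ `CalculatorBase` (which also has a `GetContract` step), and the wrapper is not MediaPipe's scheduler.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class CountingCalculator:
    """Hypothetical custom calculator with unsynchronized internal state."""

    def open(self) -> None:
        self.count = 0

    def process(self, payload: int) -> None:
        # Plain read-modify-write with no locking of its own; this is safe
        # only because the wrapper below never runs two process() calls at once.
        current = self.count
        self.count = current + payload

    def close(self) -> int:
        return self.count


class SerializedNode:
    """Wraps a calculator so that at most one thread executes it at a time."""

    def __init__(self, calculator: CountingCalculator) -> None:
        self.calculator = calculator
        self._lock = threading.Lock()

    def run(self, payload: int) -> None:
        with self._lock:
            self.calculator.process(payload)


nodes = [SerializedNode(CountingCalculator()) for _ in range(2)]
for node in nodes:
    node.calculator.open()

# Different nodes run in parallel; calls into the same node are serialized.
with ThreadPoolExecutor(max_workers=4) as pool:
    for i in range(1000):
        pool.submit(nodes[i % 2].run, 1)

print([node.calculator.close() for node in nodes])  # [500, 500]
```

This sketch does not preserve packet order; the real framework additionally delivers each calculator's inputs in timestamp order.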

GPU Support

MediaPipe supports GPU compute and rendering nodes, and allows multiple GPU nodes to be combined and mixed with CPU-based nodes. Mobile platforms offer several GPU APIs (OpenGL ES, Metal, Vulkan).

MediaPipe does not attempt to provide a single cross-API GPU abstraction. Instead, individual nodes can be written using different APIs, allowing them to take advantage of platform-specific features when needed. This lets GPU and CPU nodes keep the advantages of encapsulation and composability while maintaining efficiency.

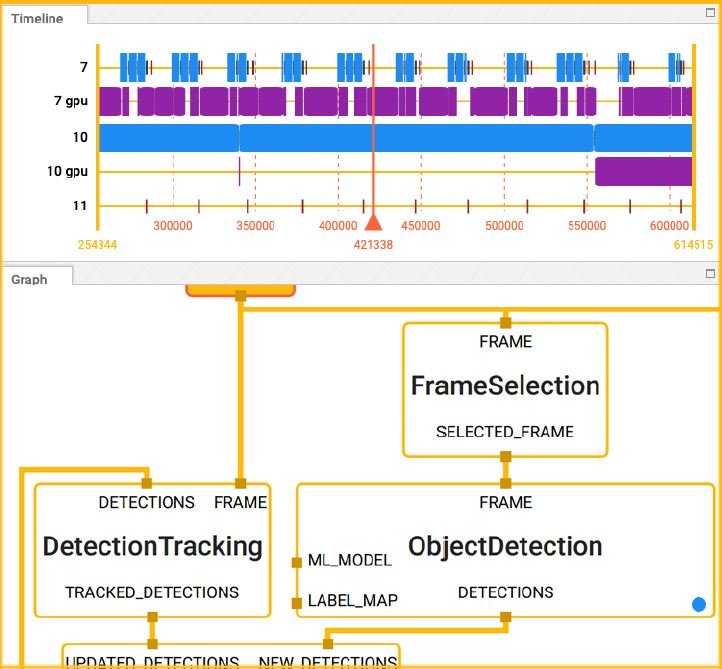

Tracer Module

The MediaPipe tracer module follows individual packets across the graph and records timing events with several data fields (time, packet timestamp, data ID, node ID, stream ID). The tracer also reports histograms of various resources, such as the elapsed CPU time across each calculator and stream.
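To illustrate what such a trace contains, the sketch below records events with the fields listed above plus an assumed `elapsed_us` field, then aggregates per-calculator elapsed time into a coarse histogram. The `TraceEvent` record, the 1 ms bucket width, the node names, and the sample numbers are all hypothetical; MediaPipe's real tracer has its own configuration and log format.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class TraceEvent:
    time_us: int           # wall-clock time when the event was recorded
    packet_timestamp: int  # timestamp of the packet being processed
    data_id: int           # identifies the packet payload
    node_id: str           # calculator that handled the packet
    stream_id: str         # stream the packet arrived on
    elapsed_us: int        # assumed field: time spent in the calculator


def histograms(events: List[TraceEvent], bucket_us: int = 1000) -> Dict[str, Counter]:
    """Per-calculator histogram of elapsed time, bucketed by bucket_us."""
    result: Dict[str, Counter] = defaultdict(Counter)
    for e in events:
        # Round elapsed time down to the start of its bucket.
        result[e.node_id][e.elapsed_us // bucket_us * bucket_us] += 1
    return dict(result)


events = [
    TraceEvent(100, 0, 1, "FaceDetection", "input_video", 4200),
    TraceEvent(4400, 0, 2, "Renderer", "detections", 800),
    TraceEvent(33500, 33333, 3, "FaceDetection", "input_video", 3900),
    TraceEvent(37500, 33333, 4, "Renderer", "detections", 1200),
]
for node, hist in histograms(events).items():
    print(node, sorted(hist.items()))
```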

The tracer module records timing information on demand and can be enabled using a configuration setting (in GraphConfig). The user can also completely omit the tracer module code using a compiler flag.

The recorded timing data enables reporting and visualization of individual packet flows and individual calculator executions. The recorded timing data helps diagnose several problems, such as unexpected real-time delays, memory accumulation due to packet buffering, and collating packets at different frame rates.

The aggregated timing data can be used to report average and extreme latencies for performance tuning. Also, the timing data can be explored to identify the nodes along the critical path, whose performance determines end-to-end latency.
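Because independent branches run in parallel, end-to-end latency is set by the slowest chain of nodes, so finding the critical path amounts to a longest-path search over the graph with each node weighted by its measured average latency. A minimal sketch, assuming the averages have already been extracted from trace data; the node names and millisecond values are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple


def critical_path(
    preds: Dict[str, Set[str]], latency_ms: Dict[str, float]
) -> Tuple[float, List[str]]:
    """Longest (slowest) path through a DAG where each node costs latency_ms[node]."""
    best: Dict[str, float] = {}
    parent: Dict[str, str] = {}
    for node in TopologicalSorter(preds).static_order():
        # Cost to finish this node = its own latency + its slowest upstream chain.
        slowest = max(preds.get(node, set()), key=lambda p: best[p], default=None)
        best[node] = latency_ms[node] + (best[slowest] if slowest is not None else 0.0)
        if slowest is not None:
            parent[node] = slowest
    # Walk back from the most expensive end node to recover the path.
    end = max(best, key=best.get)
    path = [end]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return best[end], path[::-1]


preds = {
    "Decode": set(),
    "FaceDetection": {"Decode"},
    "Downscale": {"Decode"},
    "Segmentation": {"Downscale"},
    "Overlay": {"FaceDetection", "Segmentation"},
}
latency = {"Decode": 2.0, "FaceDetection": 9.0, "Downscale": 1.0,
           "Segmentation": 6.0, "Overlay": 1.5}
print(critical_path(preds, latency))  # (12.5, ['Decode', 'FaceDetection', 'Overlay'])
```

Speeding up `Segmentation` in this example would not reduce end-to-end latency; only nodes on the critical path matter.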

Visualizer Tool

The MediaPipe visualizer is a tool for understanding the topology and overall behavior of the pipelines. It provides a timeline view and a graph view. In the timeline view, the user can load a pre-recorded trace file and see the precise timings of data as it moves through threads and calculators (nodes).

In the graph view, the user can visualize the topology of a graph at any point in time, including the state of each calculator and the packets being processed or being held in its input queues.

The Bottom Line

Google MediaPipe provides a framework for cross-platform, customizable machine learning solutions for live and streaming media to deliver live ML anywhere. The powerful tool is suitable for creating computer vision pipelines and complex applications.

MediaPipe is fully integrated into the Viso Suite platform and provides an end-to-end solution for AI vision applications. With Viso Suite, businesses can take full advantage of MediaPipe while covering the entire lifecycle of computer vision in one unified solution, with no code and automated infrastructure.
