ONNX (Open Neural Network Exchange) is an open standard for machine learning models, including computer vision models. It defines a common set of operators and a common file format, enabling the transfer of models between popular machine learning frameworks. This interoperability between deep learning frameworks simplifies model sharing and deployment.

This interoperability is crucial for developers and researchers, including those working with large volumes of real-time data. It lets them use a model across different frameworks without retraining it or making significant modifications.

Key Aspects of ONNX

- Format Flexibility. ONNX supports a wide range of model types, including deep learning and traditional machine learning.

- Framework Interoperability. Models trained in one framework can be exported to ONNX format and imported into another compatible framework. This is particularly useful for deployment or for continuing development in a different environment.

- Performance Optimizations. ONNX models can benefit from optimizations available in different frameworks and efficiently run on various hardware platforms.

- Community-Driven. A community of companies and individual contributors supports the open-source project. This ensures a constant influx of updates and improvements.

- Tools and Ecosystem. Numerous tools are available for converting, visualizing, and optimizing ONNX models. Developers can also find libraries for running these models on different AI hardware, including GPUs and CPUs.

- Versioning and Compatibility. ONNX is regularly updated with new versions. Each release maintains a level of backward compatibility: operators are versioned in operator sets (opsets), and every model records the opsets it was built against, so models created with older versions remain usable.

This standard is particularly beneficial when flexibility and interoperability between different tools and platforms are essential. For example, a research model can be exported to ONNX and deployed in a production environment built on a different framework.

Real-World Applications of ONNX

ONNX can be seen as the Rosetta Stone of artificial intelligence (AI), translating models across frameworks and tools and offering a high degree of flexibility and interoperability.

- Healthcare. In medical imaging, deep learning models can help diagnose diseases from MRI or CT scans. A model trained in TensorFlow for tumor detection can be converted to ONNX for deployment in clinical diagnostic tools built on a different framework.

- Automotive. Autonomous vehicle systems and self-driving cars can integrate object detection models for real-time driving decisions. ONNX lets these models run in the vehicle software regardless of their original training environment.

- Retail. Recommendation systems trained in one framework can be deployed in diverse e-commerce platforms. This allows retailers to enhance customer engagement through personalized shopping experiences.

- Manufacturing. In predictive maintenance, models can forecast equipment failures. A model can be trained in one framework and deployed in factory systems that use another, supporting operational efficiency.

- Finance. Fraud detection models developed in a single framework can be easily transferred for integration into banking systems. This is a vital component in implementing robust, real-time fraud prevention.

- Agriculture. ONNX assists in precision farming by integrating crop and soil models into various agricultural management systems, aiding in efficient resource utilization.

- Entertainment. ONNX can transfer behavior prediction models into game engines. This has the potential to enhance player experience through AI-driven personalization and interactions.

- Education. Adaptive learning systems can integrate AI models that personalize learning content, accommodating different learning styles across various platforms.

- Telecommunications. ONNX simplifies the deployment of network optimization models, helping operators optimize bandwidth allocation and streamline customer service in telecom infrastructures.

- Environmental Monitoring. ONNX supports climate change models, allowing them to be shared and deployed across platforms. This matters because environmental models are notoriously complex, especially when several are combined to make predictions.

Popular Frameworks and Tools Compatible with ONNX

ONNX’s ability to easily interface with frameworks and tools already used in different applications highlights its value.

This compatibility ensures that AI developers can leverage the strengths of diverse platforms while maintaining model portability and efficiency.

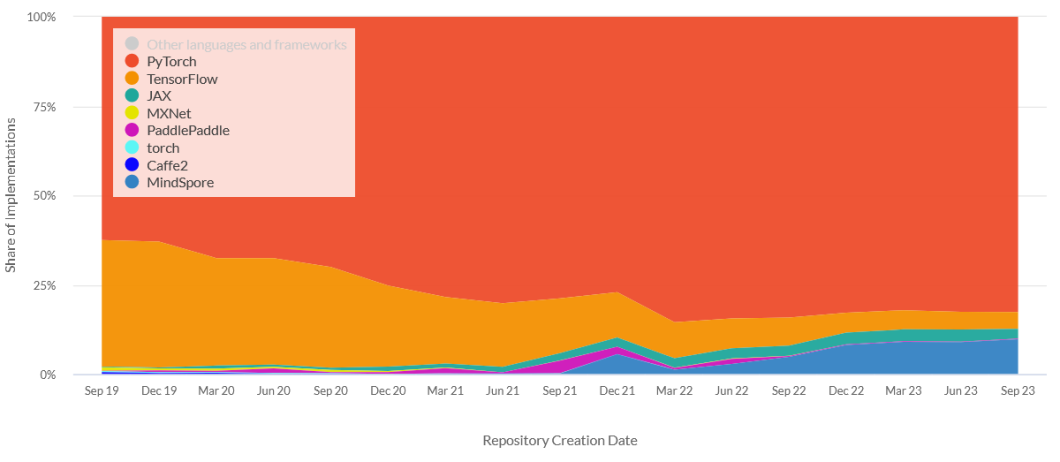

- PyTorch. A widely used open-source machine learning library originally developed by Facebook (now Meta). PyTorch’s popularity stems from its ease of use and dynamic computational graph. The community favors PyTorch for research and development because of its flexibility and intuitive design.

- TensorFlow. Developed by Google, TensorFlow is a comprehensive framework. TensorFlow offers both high-level and low-level APIs for building and deploying machine learning models.

- Microsoft Cognitive Toolkit (CNTK). A deep learning framework from Microsoft. Known for its efficiency in training convolutional neural networks, CNTK is especially notable in speech and image recognition tasks. Microsoft is no longer actively developing it; version 2.7 was its last main release.

- Apache MXNet. A flexible and efficient open-source deep learning framework supported by Amazon. MXNet deploys deep neural networks on many platforms, from cloud infrastructure to mobile devices.

- Scikit-Learn. A popular library for traditional machine learning algorithms. Scikit-Learn cannot export ONNX natively, but its models can be converted with sklearn-onnx (the skl2onnx package).

- Keras. A high-level neural network API that focuses on enabling fast experimentation. Keras originally ran on top of TensorFlow, CNTK, and Theano; Keras 3 supports JAX, TensorFlow, and PyTorch backends.

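- Apple Core ML. Models from other frameworks can be converted to ONNX and then to Core ML format for integration into iOS applications, although recent versions of Apple’s coremltools favor converting directly from TensorFlow or PyTorch.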

- ONNX Runtime. A cross-platform, high-performance scoring (inference) engine. It optimizes model inference across hardware and is a common choice for deploying ONNX models.

- NVIDIA TensorRT. An SDK for high-performance deep learning inference. TensorRT includes an ONNX parser and is used for optimized inference on NVIDIA GPUs.

- ONNX.js. A JavaScript library for running ONNX models in browsers and on Node.js, allowing web-based ML applications to leverage ONNX models. Microsoft has since replaced ONNX.js with ONNX Runtime Web.

Understanding the Intricacies and Implications of ONNX Runtime in AI Deployments

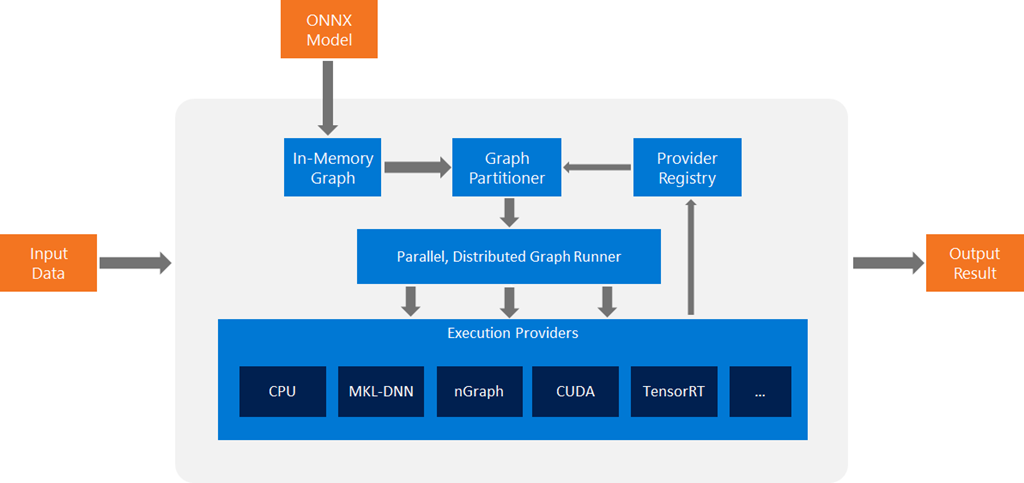

ONNX Runtime is a performance-focused engine for running models. It aims to provide efficient and scalable execution across a variety of platforms and hardware, and its hardware-agnostic architecture allows AI models to be deployed consistently across different environments.

ONNX Runtime’s high-level architecture begins by converting an ONNX model into an in-memory graph representation. It then applies provider-independent optimizations and partitions the graph based on the available execution providers. Each subgraph is assigned to an execution provider that can handle its operations.
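The partitioning step can be pictured with a toy model. This stdlib-only Python sketch is not ONNX Runtime's implementation: in ORT, each execution provider reports which nodes it can handle, and the real graph is a DAG rather than a simple chain. Here, hypothetical providers claim nodes in priority order, the CPU provider acts as the catch-all, and consecutive nodes on the same provider are grouped into one subgraph.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    name: str
    supported_ops: frozenset  # an empty set means "supports every op" (the fallback)

    def can_run(self, op_type: str) -> bool:
        return not self.supported_ops or op_type in self.supported_ops


def partition(nodes, providers):
    """Assign each node (given in topological order) to the first provider that
    supports its op type, grouping consecutive same-provider nodes into subgraphs."""
    subgraphs = []  # list of (provider_name, [node names])
    for name, op_type in nodes:
        provider = next(p for p in providers if p.can_run(op_type))
        if subgraphs and subgraphs[-1][0] == provider.name:
            subgraphs[-1][1].append(name)
        else:
            subgraphs.append((provider.name, [name]))
    return subgraphs


# Hypothetical accelerator that implements only a few ops, listed ahead of the
# CPU provider, which handles everything the accelerator cannot.
providers = [
    Provider("AcceleratorEP", frozenset({"Conv", "Relu", "MatMul"})),
    Provider("CPUExecutionProvider", frozenset()),
]
nodes = [("conv1", "Conv"), ("relu1", "Relu"), ("nms", "NonMaxSuppression"), ("fc", "MatMul")]

for provider_name, members in partition(nodes, providers):
    print(provider_name, members)
```

Each change of provider marks a subgraph boundary, which is where data may need to be copied or converted between representations.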

ONNX Runtime is designed so that multiple threads can invoke the Run() method on the same inference session, which is possible because all kernels are stateless. The design also guarantees that the default (CPU) execution provider supports all operators. Execution providers may use their own internal tensor representations, so tensors must be converted at the boundaries of their subgraphs.

- Ability to execute models across various hardware and execution providers.

- Graph partitioning and optimizations improve efficiency and performance.

- Compatibility with multiple custom accelerators and runtimes.

- Supports a wide range of applications and environments, from cloud to edge.

- Multiple threads can call Run() concurrently on the same inference session.

- Ensures support for all operators through the default execution provider, providing reliability in model execution.

- Execution providers manage their own memory allocators, optimizing resource usage.

- Easy integration with various frameworks and tools for streamlined AI workflows.

Benefits and Challenges of Adopting the ONNX Model

As with any technology, ONNX brings benefits as well as challenges and considerations.

Benefits

- Framework Interoperability. Facilitates the use of models across different ML frameworks, enhancing flexibility.

- Deployment Efficiency. Streamlines the process of deploying models across various platforms and devices.

- Community Support. Benefits from a growing, collaborative community contributing to its development and support.

- Optimization Opportunities. Offers model optimizations for improved performance and efficiency.

- Hardware Agnostic. Compatible with a wide range of hardware, ensuring broad applicability.

- Consistency. Maintains model fidelity across different environments and frameworks.

- Regular Updates. It is continuously evolving with the latest advancements in AI and machine learning.

Challenges

- Complexity in Conversion. Converting models to ONNX format can be complex and time-consuming. This can especially apply to models using non-standard layers or operations.

- Version Compatibility. Ensuring compatibility with different versions of ONNX and ML frameworks can be challenging.

- Limited Support for Certain Operations. It may not support some advanced or proprietary operations.

- Performance Overheads. In some cases, converting a model and running it through ONNX can introduce performance overhead.

- Learning Curve. It requires an understanding of the ONNX format and compatible tools, adding to the learning curve for teams.

- Dependency on Community. Some features may rely on community contributions for updates and fixes, which can vary in timeliness.

- Intermittent Compatibility Issues. Occasional compatibility issues with certain frameworks or tools can arise, requiring troubleshooting.

ONNX – An Open Standard Driven by a Thriving Community

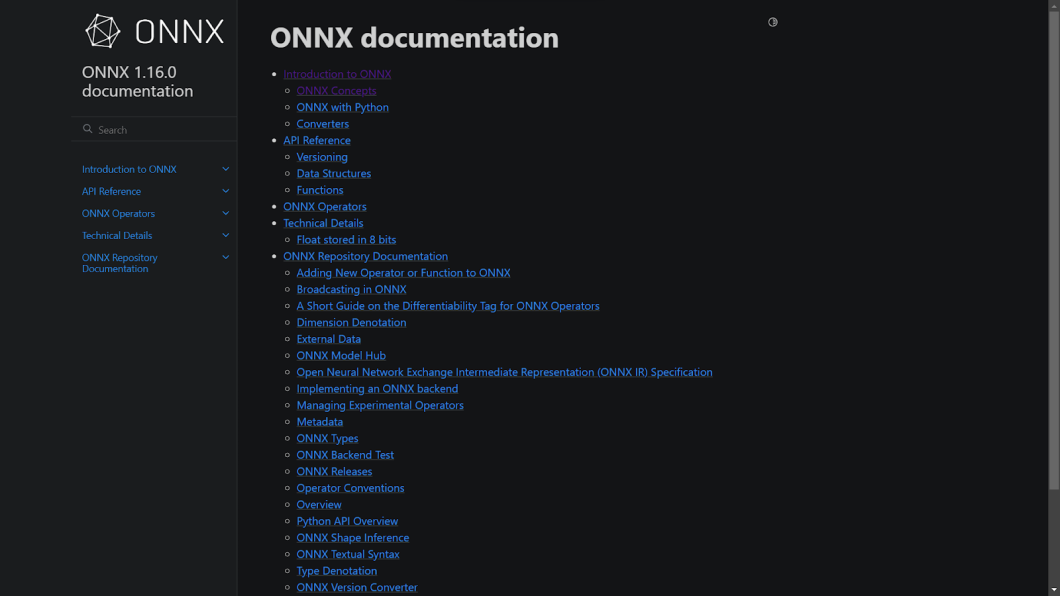

Because ONNX is a popular open-source project, community involvement is central to its continued development and success. Its GitHub project has nearly 300 contributors, and its current user base is over 19.4k.

With 27 releases and over 3.6k forks at the time of writing, it’s a dynamic and ever-evolving project. There have also been over 3,000 pull requests (40 still active) and over 2,300 resolved issues (268 active).

The involvement of so many contributors and users has made ONNX a vibrant and progressive project, and initiatives by the ONNX project, individual contributors, major partners, and other interested parties are keeping it that way:

- Wide Industry Adoption. ONNX is popular among individual developers and major tech companies for various AI and ML applications. Examples include:

  - Microsoft. Uses ONNX in various services, including Azure Machine Learning and Windows ML.

  - Facebook. A founding member of the ONNX project, Facebook has integrated ONNX support in PyTorch, a leading deep learning framework.

  - IBM. Uses ONNX in its Watson services, enabling seamless model deployment across diverse platforms.

  - Amazon Web Services (AWS). Supports ONNX models in its machine learning services, such as Amazon SageMaker.

- Active Forum Discussions. The community participates in forums and discussions, providing support, sharing best practices, and guiding the project direction.

- Regular Community Meetings. ONNX holds regular community meetings where members discuss advancements and roadmaps and address community questions.

- Educational Resources. The community actively works on developing and sharing educational resources, tutorials, and documentation.

ONNX Case Studies

Numerous case studies have demonstrated ONNX’s effectiveness and impact in various applications. Here are two of the most significant ones from recent years:

Optimizing Deep Learning Model Training

Microsoft’s case study showcases how ONNX Runtime (ORT) can improve the training of large deep learning models such as BERT. ORT optimizes memory usage by reusing buffer segments across a series of operations, such as gradient accumulation and weight update computations.
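The general idea behind buffer reuse can be sketched as lifetime-based allocation: once a tensor's last consumer has run, its buffer can hold a later tensor. This stdlib-only Python sketch is a simplified illustration, not ORT's actual memory planner; the tensor names, sizes (in MB), and step numbers are hypothetical.

```python
def plan_buffers(tensors):
    """Greedy lifetime-based buffer reuse.

    tensors: list of (name, size, first_step, last_step), where first_step is the
    operation that produces the tensor and last_step the last one that reads it.
    Returns ({name: buffer_index}, [buffer sizes]).
    """
    buffers = []     # capacity of each allocated buffer
    busy_until = []  # last step at which each buffer's current tensor is still read
    assignment = {}
    for name, size, first, last in sorted(tensors, key=lambda t: t[2]):
        # A buffer is reusable if its previous tensor was last read before this
        # tensor is produced and it is large enough.
        candidates = [
            i for i, (cap, end) in enumerate(zip(buffers, busy_until))
            if end < first and cap >= size
        ]
        if candidates:
            idx = min(candidates, key=lambda i: buffers[i])  # best fit
        else:
            idx = len(buffers)
            buffers.append(size)
            busy_until.append(-1)
        busy_until[idx] = last
        assignment[name] = idx
    return assignment, buffers


# Hypothetical chain of operations: each intermediate is read only by the next op.
tensors = [
    ("activation_1", 64, 0, 1),
    ("activation_2", 64, 1, 2),
    ("activation_3", 32, 2, 3),
    ("output", 16, 3, 4),
]
assignment, buffers = plan_buffers(tensors)
print(assignment)
print("planned:", sum(buffers), "MB vs separate allocations:", sum(t[1] for t in tensors), "MB")
```

In this example the four tensors fit in two 64 MB buffers (128 MB) instead of 176 MB allocated separately.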

One of the case study’s most important findings was that ORT enabled training BERT with double the batch size compared to PyTorch, leading to more efficient GPU utilization and better performance. ORT also integrates the Zero Redundancy Optimizer (ZeRO) to reduce GPU memory consumption, which further increases the feasible batch size.
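To see why ZeRO frees memory for larger batches, it helps to count bytes. The ZeRO paper accounts for mixed-precision Adam training as 2 bytes per parameter for fp16 weights, 2 for fp16 gradients, and 12 for optimizer state, and then partitions these across data-parallel GPUs in three stages. The sketch below reproduces that arithmetic; the 7.5-billion-parameter, 64-GPU configuration is the paper's illustrative example, and this section does not state which stage ORT used.

```python
def zero_model_state_gb(num_params: float, num_gpus: int, stage: int, k: int = 12) -> float:
    """Per-GPU memory (GB) for model states under mixed-precision Adam, following the
    ZeRO paper's accounting: 2 bytes/param fp16 weights, 2 bytes/param fp16 gradients,
    and k bytes/param optimizer state (fp32 weights, momentum, variance).

    stage 0: nothing partitioned; 1: optimizer states partitioned;
    2: optimizer states + gradients; 3: optimizer states + gradients + weights.
    """
    psi, n = num_params, num_gpus
    if stage == 0:
        total = (2 + 2 + k) * psi
    elif stage == 1:
        total = (2 + 2) * psi + k * psi / n
    elif stage == 2:
        total = 2 * psi + (2 + k) * psi / n
    elif stage == 3:
        total = (2 + 2 + k) * psi / n
    else:
        raise ValueError("stage must be 0, 1, 2, or 3")
    return total / 1e9


for stage in range(4):
    print(f"stage {stage}: {zero_model_state_gb(7.5e9, 64, stage):.2f} GB per GPU")
```

Whatever memory the partitioning saves on model states is available for activations, which is what allows a larger batch per GPU.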

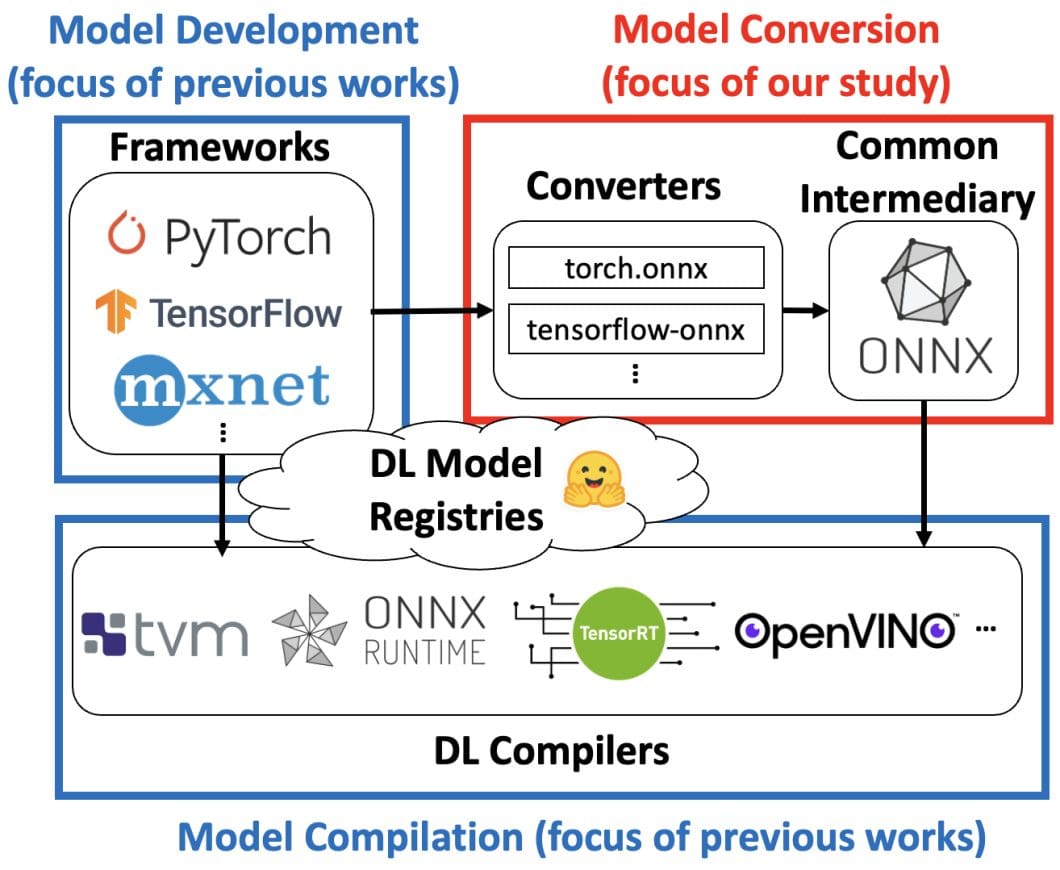

Failures and Risks in Model Converters

A study of the ONNX ecosystem analyzed the failures and risks in deep learning model converters. The research highlights the growing complexity of the deep learning ecosystem, including the evolution of frameworks, model registries, and compilers.

The researchers point out that common intermediaries for interoperability become more important as the ecosystem expands. The study addresses the compatibility challenges between frameworks and DL compilers and illustrates how ONNX helps navigate these complexities.

The research also details the nature and prevalence of failures in DL model converters, providing insights into the risks and opportunities for improvement in the ecosystem.
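One common way to detect converter failures like these is differential testing: run the same input through the original and the converted model, then compare the outputs numerically. The stdlib-only sketch below shows the comparison step. The output values are made up; in practice they would come from the source framework and from a runtime such as ONNX Runtime. This is a general technique, not necessarily the method used in the study.

```python
import math
from typing import List, Sequence, Tuple


def compare_outputs(
    reference: Sequence[float],
    converted: Sequence[float],
    rel_tol: float = 1e-5,
    abs_tol: float = 1e-6,
) -> List[Tuple[int, float, float]]:
    """Return (index, reference, converted) for every element outside tolerance.

    A length mismatch raises immediately, since it usually means the converter
    changed the output shape.
    """
    if len(reference) != len(converted):
        raise ValueError(f"shape mismatch: {len(reference)} vs {len(converted)}")
    return [
        (i, r, c)
        for i, (r, c) in enumerate(zip(reference, converted))
        if not math.isclose(r, c, rel_tol=rel_tol, abs_tol=abs_tol)
    ]


# Hypothetical outputs for the same input: source framework vs converted model.
source_out = [0.1, 0.7, 0.2]
converted_ok = [0.1000001, 0.6999999, 0.2]  # tiny floating-point drift only
converted_bad = [0.1, 0.2, 0.7]  # e.g. a converter that reordered an axis

print(compare_outputs(source_out, converted_ok))   # prints []
print(compare_outputs(source_out, converted_bad))  # flags indices 1 and 2
```

Tolerances matter here: exact equality would flag harmless floating-point drift, while loose tolerances can hide genuine conversion bugs.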

What’s Next With ONNX?

ONNX is a pivotal standard in open-source machine learning, fostering interoperability and collaboration across various AI platforms. Its versatile structure, supported by an extensive array of frameworks, empowers developers to deploy models efficiently. By offering a standardized way to bridge the gaps between tools, ONNX helps teams improve both usability and model performance.

Learn More

To learn more about computer vision and machine learning, check out our other blogs:

- A Gentle Introduction to Pattern Recognition

- DeepFace: an Open-source Facial Recognition Library

- Getting Started with Object Recognition

- Real-time Computer Vision: AI on the Edge

- What is Machine Vision?