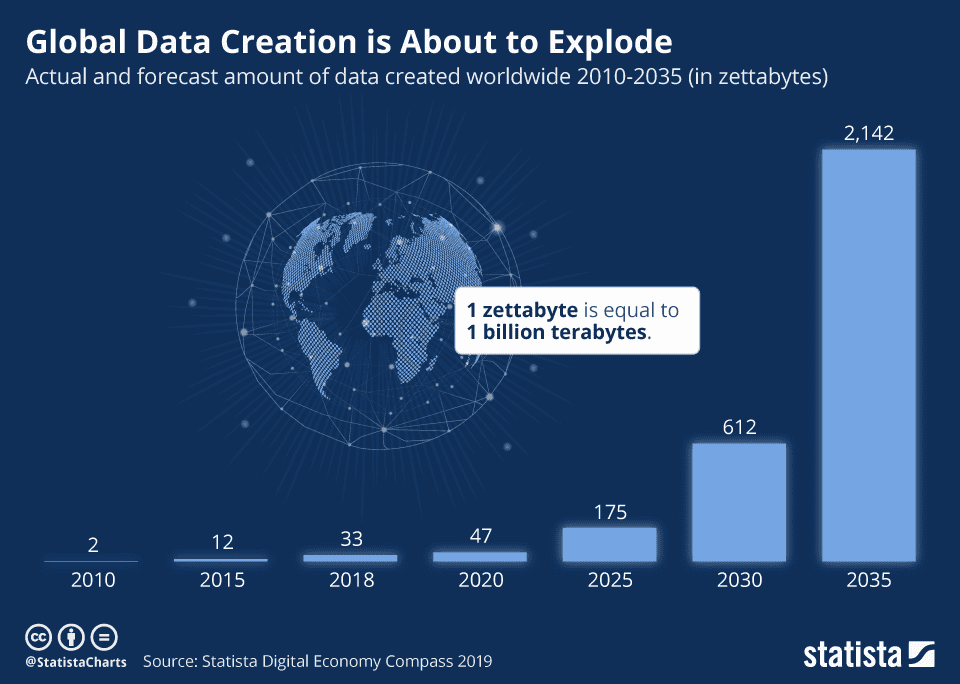

The explosive growth of the Internet of Things (IoT) has interconnected billions of devices, and these devices generate massive amounts of data at the network edge. Collecting such volumes of data in cloud data centers incurs extremely high latency and network bandwidth usage, which creates the need for Edge AI technology.

We must push the AI frontier to the network edge to fully leverage the potential of big data. Edge AI is the combination of edge computing and AI, and it is a key concept for state-of-the-art AI applications.

What is Edge AI or AI on the Edge?

Edge AI combines Edge Computing and Artificial Intelligence to run machine learning tasks directly on connected edge devices. To understand edge AI, we must examine technological trends driving the need for transferring AI computing to the edge.

Big Data and IoT Drive Edge AI

The collection and analysis of massive volumes of data is a top priority in the Internet of Things (IoT). Connected devices generate large quantities of data in real time, and AI systems are needed to analyze it.

Traditionally, AI is Cloud-based

Initially, AI solutions were cloud-driven for two reasons:

- The need for high-end hardware capable of performing deep learning computing tasks

- The ability to effortlessly scale the resources in the cloud

Cloud-based AI offloads data over an internet connection to external computing systems (the cloud) for further processing. However, this increases latency and communication costs and raises privacy concerns.

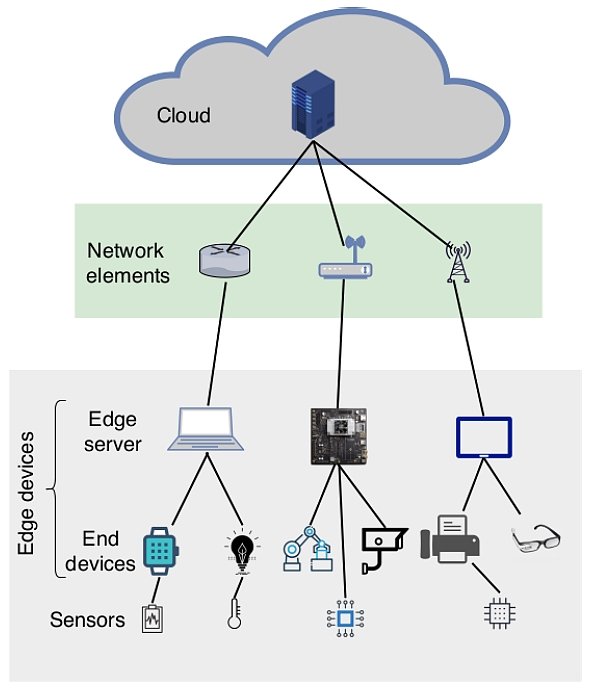

What is Edge Computing?

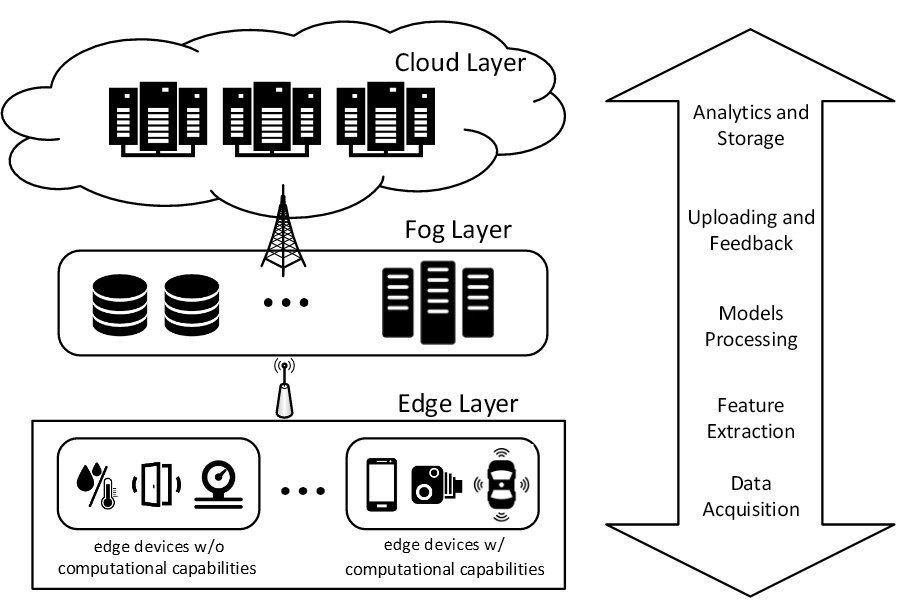

To address cloud limitations, computing tasks must move closer to where the data is generated. Edge Computing performs computations as close to data sources as possible instead of in far-off, remote locations. Edge computing extends the cloud rather than replacing it: it is commonly implemented as an edge-cloud system in which decentralized edge nodes send processed data to the cloud.

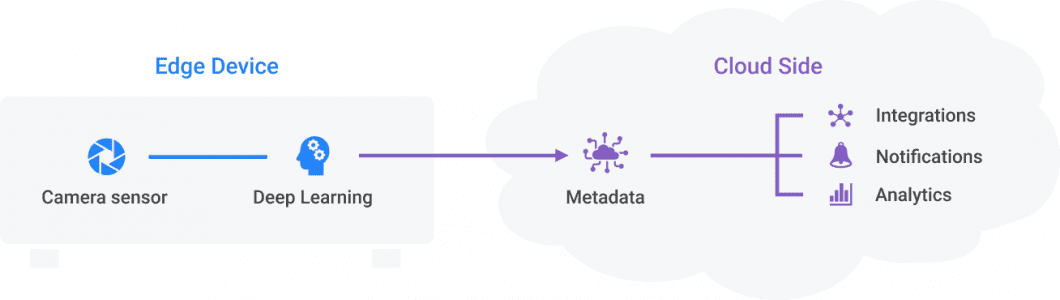

AI to Run Machine Learning on Edge Devices

Edge AI, or Edge Intelligence, is the combination of edge computing and AI. It runs AI algorithms that process data locally on hardware devices, the so-called edge devices. Edge AI therefore provides a form of on-device AI that offers rapid response times along with:

- Low latency

- High privacy

- More robustness

- More efficient use of network bandwidth

Data scientists continue to push emerging technologies such as machine learning, neural network acceleration, and reduction. These advancements influence the adoption of edge devices. ML edge computing opens up possibilities for new, robust, and scalable AI systems across multiple industries.

The entire field is very new and constantly evolving. We expect Edge to drive AI’s future by moving capabilities closer to the physical world.

What is an Edge AI Device?

An edge device is either an end device or an edge server that can perform computing tasks on the device itself. Edge devices process the data gathered by connected sensors, for example, cameras that provide a live stream.

Any computer or server, in any form factor and across a wide range of platforms, can serve as an edge device. This includes laptops, mobile phones, personal computers, embedded computers, and physical servers. Popular processor architectures for edge computing are x86, x64 (x86-64), and ARM.

For smaller prototypes, an edge device can be a System on a Chip (SoC) device, such as a Raspberry Pi or the Intel NUC series. Such SoC devices integrate all basic components of a computer (CPU, memory, USB controller, GPU). More ruggedized and robust examples of edge devices include:

- NVIDIA Jetson TX2

- NVIDIA Jetson Nano

- Google Coral platform

Compared to conventional computing, ML tasks require very powerful AI hardware. However, AI models are becoming lighter and more efficient.

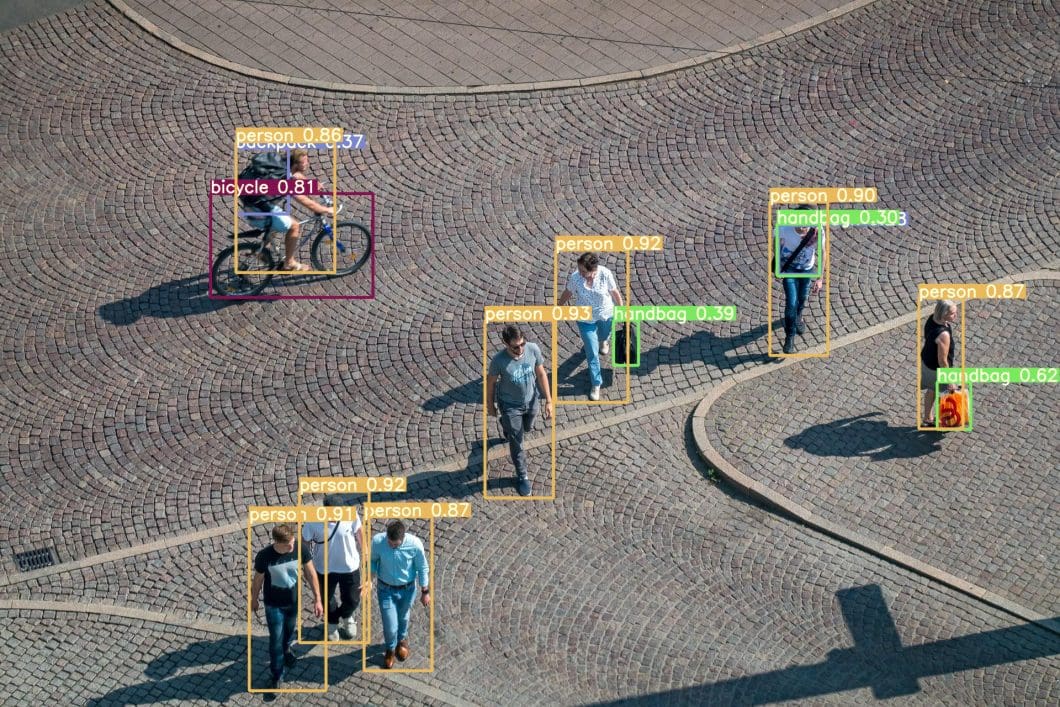

Popular object detection algorithms for computer vision include YOLOv3, YOLOR, and YOLOv7. Many modern neural networks come in a particularly lightweight, optimized version for running ML at the edge. Tiny models such as YOLOv7-tiny work well in this context.

Advantages of AI at the Edge

Edge computing brings AI processing tasks from the cloud close to the end devices. This overcomes the intrinsic problems of the traditional cloud, such as high latency and a lack of security.

Hence, moving AI computations to the network edge opens opportunities for new products and services with AI-powered applications.

1. Lower Data Transfer Volume

One of the main benefits of edge AI is that the device sends only processed data to the cloud, which is a significantly smaller volume than the raw data. Reducing traffic between a small cell and the core network frees up connection bandwidth, prevents bottlenecks, and reduces the amount of traffic in the core network.
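To make the difference in data volume concrete, the following minimal sketch compares the size of one uncompressed camera frame with the size of a processed result for that frame. The detection values, field names, and the JSON payload format are illustrative assumptions, not the output of any particular model or platform.

```python
import json


def raw_frame_bytes(width: int, height: int, channels: int = 3) -> int:
    """Size in bytes of one uncompressed frame with 8 bits per channel."""
    return width * height * channels


def detection_payload(detections: list[dict]) -> bytes:
    """Serialize only the inference results that the cloud needs."""
    return json.dumps({"detections": detections}, separators=(",", ":")).encode("utf-8")


# Hypothetical output of an on-device object detector for a single frame.
detections = [
    {"label": "person", "confidence": 0.91, "box": [120, 80, 260, 400]},
    {"label": "car", "confidence": 0.84, "box": [400, 210, 780, 520]},
]

frame_size = raw_frame_bytes(1920, 1080)  # one Full HD RGB frame
payload_size = len(detection_payload(detections))

print(f"raw frame: {frame_size} bytes")
print(f"processed payload: {payload_size} bytes")
print(f"reduction: {1 - payload_size / frame_size:.4%}")
```

Real video streams are compressed before transfer, so the saving in practice is smaller than in this uncompressed comparison, but the processed result is still a small fraction of the video data.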

2. Speed for Real-time Computing

Real-time processing is a fundamental advantage of Edge Computing. Edge devices’ physical proximity to data sources makes achieving lower latency possible. In turn, this improves real-time data processing performance. It supports delay-sensitive applications and services such as remote surgery, tactile internet, unmanned vehicles, and vehicle accident prevention.

Edge servers provide decision support, decision-making, and data analysis in a real-time manner.

3. Privacy and Security

Transferring sensitive user data over networks exposes it to interception, whereas running AI at the edge keeps the data private. Edge computing makes it possible to guarantee that private data never leaves the local device (on-device machine learning).

When we must process data remotely, edge devices can discard personally identifiable information before data transfer. This enhances user privacy and security. Check out our privacy-preserving deep learning article to learn more about data security with AI.
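As a minimal sketch of discarding personally identifiable information on the device before upload, the example below filters a result record against a blocklist. The field names and the record layout are hypothetical; a real deployment would define its own schema and privacy rules.

```python
from typing import Any

# Hypothetical field names that are treated as personally identifiable.
PII_FIELDS = {"face_crop", "license_plate", "person_name"}


def strip_pii(record: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of the record without personally identifiable fields."""
    return {key: value for key, value in record.items() if key not in PII_FIELDS}


# Hypothetical record produced on the edge device for one analysis interval.
record = {
    "camera_id": "cam-07",
    "timestamp": "2024-01-01T12:00:00Z",
    "people_count": 3,
    "face_crop": b"<image bytes>",
    "license_plate": "ABC-1234",
}

safe_record = strip_pii(record)
print(sorted(safe_record))  # only the non-identifying keys remain
```

Because `strip_pii` returns a new dictionary, the original record stays available on the device for local processing while only `safe_record` is transferred.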

4. High Availability

Decentralization and offline capabilities make Edge AI more robust by providing transient services during network failure or cyberattacks. AI task deployment at the edge ensures significantly higher availability and overall robustness. Mission-critical or production-grade AI applications (on-device AI) require this.
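One common way to provide such offline capability is a store-and-forward buffer: the device keeps producing results during an outage and delivers them once the uplink returns. The sketch below is an illustration under stated assumptions, not a description of any specific platform. The class name, the `send` callback, and the simulated link are all hypothetical.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer results locally and deliver them in order when the uplink works."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 1000) -> None:
        self._send = send
        # Bounded queue: if an outage outlasts local capacity, the oldest results are dropped.
        self._queue: deque = deque(maxlen=max_items)

    def submit(self, result: dict) -> int:
        """Queue a result and try to deliver everything that is pending."""
        self._queue.append(result)
        return self.flush()

    def flush(self) -> int:
        """Send pending results oldest first; stop at the first failure."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break  # uplink is down, keep the remaining results for later
            self._queue.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._queue)


# Simulated uplink for demonstration purposes only.
link = {"up": False}
delivered: list[dict] = []


def fake_send(result: dict) -> bool:
    if link["up"]:
        delivered.append(result)
    return link["up"]


buffer = StoreAndForward(fake_send)
buffer.submit({"frame": 1, "people": 2})  # network is down, result is kept locally
buffer.submit({"frame": 2, "people": 3})
print(buffer.pending)  # 2

link["up"] = True  # connectivity returns
print(buffer.flush())  # 2
```

The bounded queue is a design choice: it trades completeness for predictable memory use on a constrained device.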

5. Cost Advantage

Edge AI processing is cheaper because the cloud receives only processed, highly valuable data. While sending and storing huge amounts of data is still expensive, small edge devices have become more computationally powerful, a trend that follows Moore’s Law.

Overall, edge-based ML enables real-time data processing and decision-making without the natural limitations of cloud computing. With more regulatory emphasis on data privacy, Edge ML could be the only viable AI solution for businesses.

Edge AI and 5G

The urgent need for 5G in mission-critical applications drives edge computing and Edge AI innovation. As the next-generation cellular network, 5G aspires to achieve substantial improvement in the quality of service. This includes higher throughput and lower latency, offering 10x faster data rates than existing 4G networks.

To understand the need for fast data transmission and local on-device computing, consider autonomous vehicles. They require real-time packet delivery with an end-to-end delay of less than 10 ms. The minimum end-to-end delay for cloud access is more than 80 ms, which is intolerable for many real-world applications.
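The latency argument can be checked with a simple budget calculation. In the sketch below, the 10 ms budget and the 80 ms cloud delay come from the text, while the edge network and inference times are hypothetical values chosen only for illustration.

```python
BUDGET_MS = 10.0           # end-to-end requirement stated in the text
CLOUD_MIN_DELAY_MS = 80.0  # lower bound for cloud access stated in the text


def fits_budget(network_ms: float, inference_ms: float, budget_ms: float = BUDGET_MS) -> bool:
    """Check whether network delay plus inference time stays within the budget."""
    return network_ms + inference_ms <= budget_ms


# Cloud path: the budget is exceeded even if inference took no time at all.
cloud_ok = fits_budget(CLOUD_MIN_DELAY_MS, inference_ms=0.0)

# Edge path: assumed 2 ms local network hop and 6 ms on-device inference.
edge_ok = fits_budget(network_ms=2.0, inference_ms=6.0)

print(f"cloud within budget: {cloud_ok}")  # False
print(f"edge within budget: {edge_ok}")    # True
```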

Edge computing fulfills the sub-millisecond requirement of 5G applications and reduces energy consumption by around 30-40%. This amounts to up to 5x less energy consumption compared to accessing the cloud. Edge computing and 5G improve network performance to support and deploy different real-time AI applications.

Examples include real-time video analytics for intelligent surveillance and security, industrial manufacturing automation, and smart farming. In these use cases, low-latency data transmission is a level-zero requirement.

Multi-Access Edge Computing (AI on the Edge)

Multi-Access Edge Computing (MEC), or Mobile Edge Cloud, is an extension of cloud computing leveraging 5G. The MEC architecture provides computation, storage, and networking capabilities at the network edge, close to end devices and users.

Advantages of MEC

5G and IoT applications drive rapid technological advancements. This is because Multi-Access Edge Computing supports applications and services that bridge cloud computing and end-users. MEC architecture includes interconnected, layered, and flexibly deployed devices and systems.

MEC technologies leverage 5G wireless systems to provide real-time, low-latency, and high-bandwidth access to connected devices. Thus, MEC allows network operators and telecom providers to open 5G networks to a wide range of innovative services, giving rise to a brand-new ecosystem and value chain.

Using MEC in Computer Vision Applications

Multi-Access Edge Computing enables the coherent integration of the Internet of Things (IoT) with 5G and AI architectures. In MEC, virtual devices replace physical edge computers to process video streams through a 5G connection.

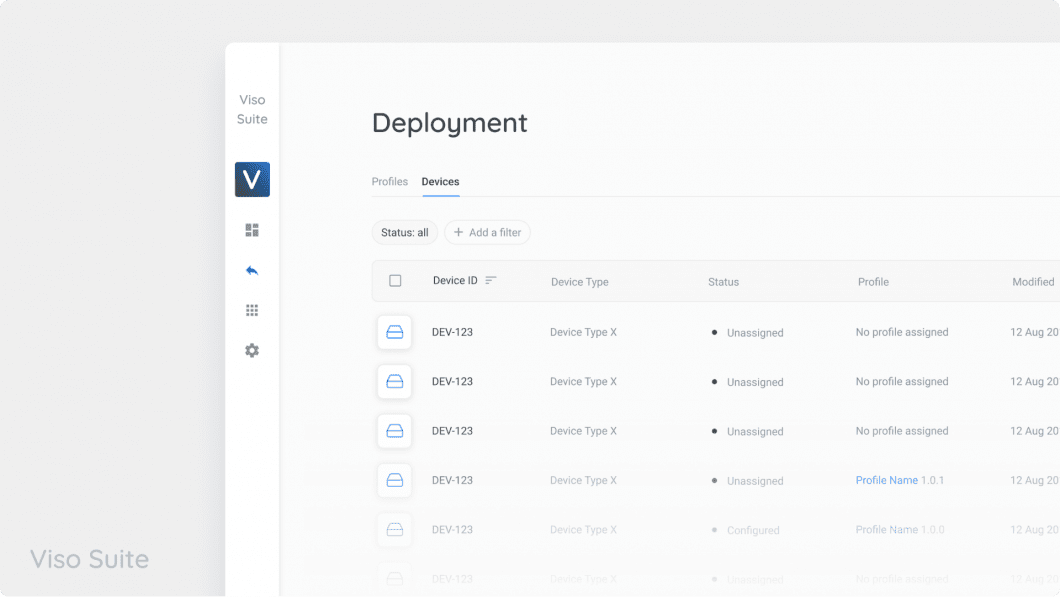

Together with Intel, we implemented the development and operation of MEC computer vision applications using Viso Suite. Viso Suite allows teams to enroll, deploy to, and manage virtual endpoints in the cloud or using MEC systems.

Edge Computing vs. Fog Computing

Cisco initially introduced the term Fog Computing, which is closely related to Edge computing. Fog computing extends the cloud to be closer to the IoT end devices. This improves latency and security by performing computations near the network edge.

The main difference between fog and edge computing pertains to the data processing location. In edge computing, data is processed either directly on:

- The sensors attached to the devices

- Gateway devices that are physically very close to the sensors

Fog models process data further away from the edge, on devices connected through a local area network (LAN).

Deep Learning at the Edge

Performing deep learning tasks typically requires a lot of computational power and a massive amount of data. Low-power IoT devices, such as typical cameras, are continuous sources of data. However, their limited storage and computing capabilities make them unsuitable for the training and inference of deep learning models. Edge AI technology provides a solution by combining Deep Learning and Edge Computing.

Therefore, we place edge devices or servers near those end devices. We use these devices for deploying deep learning models that operate on IoT-generated data. ML edge computing is vital for applications involving heavy visual data, Natural Language Processing (NLP), and real-time processing.

Edge AI Use Cases

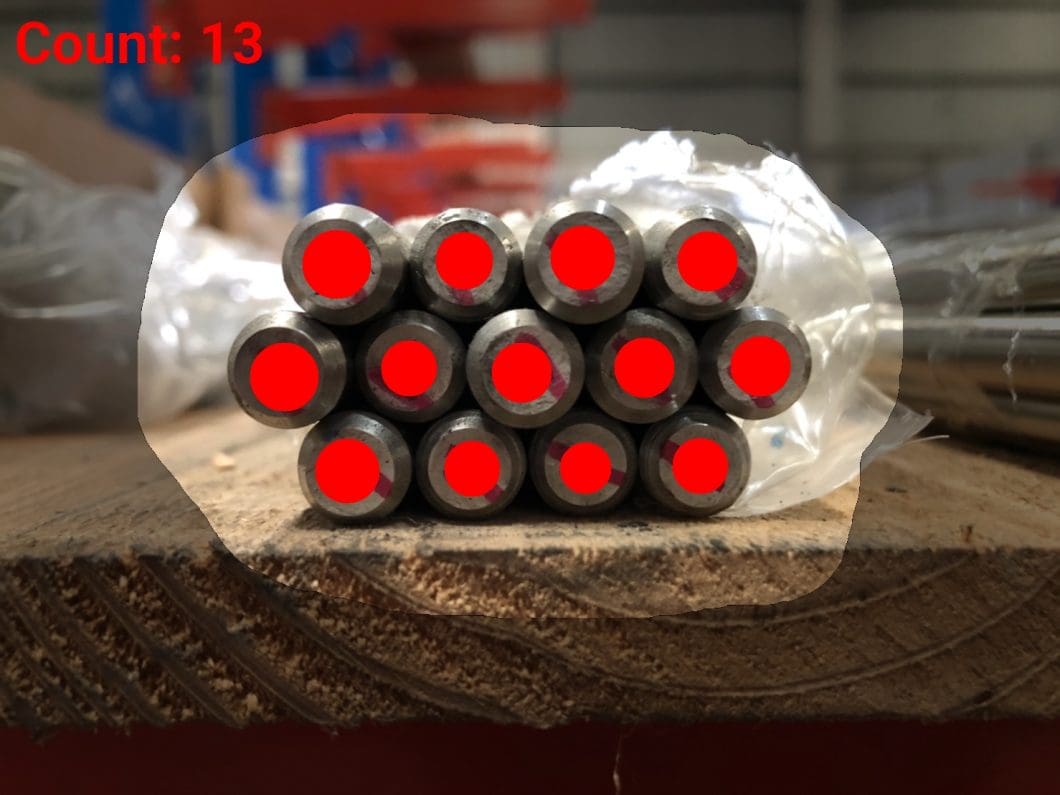

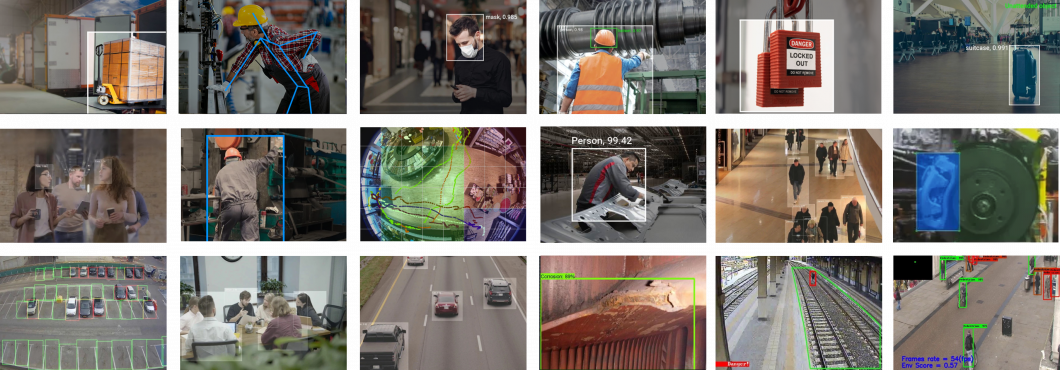

Smart AI Vision

Edge AI applications, such as live video analytics, power computer vision systems in multiple industries. Intel developed special co-processors named Visual Processing Units to power high-performance computer vision applications on edge devices.

Smart Energy

A study examined a remote wind farm’s data management and processing costs using a cloud-only system and compared them to those of a combined edge-cloud system. The wind farm uses several data-producing sensors and devices. These include video surveillance cameras, security sensors, access sensors for employees, and sensors on wind turbines.

The edge-cloud system turned out to be 36% less expensive than the cloud-only system. At the same time, the system reduced the volume of data requiring transfer by 96%.
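To see what these percentages mean in absolute terms, they can be applied to a baseline. In the sketch below, only the 36% and 96% figures come from the study described above. The baseline cost and data volume are hypothetical numbers chosen for illustration.

```python
COST_REDUCTION_PCT = 36  # edge-cloud vs. cloud-only, as reported above
DATA_REDUCTION_PCT = 96  # reduction in data requiring transfer, as reported above


def apply_reduction(baseline: float, reduction_pct: float) -> float:
    """Return the value that remains after reducing the baseline by a percentage."""
    return baseline * (100 - reduction_pct) / 100


# Hypothetical cloud-only baseline, not taken from the study.
cloud_only_cost = 100_000.0  # currency units per year
cloud_only_data_tb = 10.0    # terabytes transferred per month

edge_cloud_cost = apply_reduction(cloud_only_cost, COST_REDUCTION_PCT)
edge_cloud_data_tb = apply_reduction(cloud_only_data_tb, DATA_REDUCTION_PCT)

print(f"edge-cloud cost: {edge_cloud_cost:.0f}")                   # 64000
print(f"edge-cloud data transfer: {edge_cloud_data_tb:.1f} TB")    # 0.4 TB
```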

AI Healthcare

AI healthcare applications such as remote surgery, diagnostics, and vitals monitoring rely on AI at the edge. Doctors can use a remote platform to operate surgical tools from a distance where they feel safe and comfortable.

Entertainment

Applications include virtual, augmented, and mixed reality, such as streaming video content to virtual reality glasses. We can reduce the size of the glasses by offloading computation from them to edge servers near the end device. Microsoft recently introduced HoloLens, a holographic computer built onto a headset for an augmented reality experience. Microsoft aims to design standard computing, data analysis, medical imaging, and gaming-at-the-edge tools using the HoloLens.

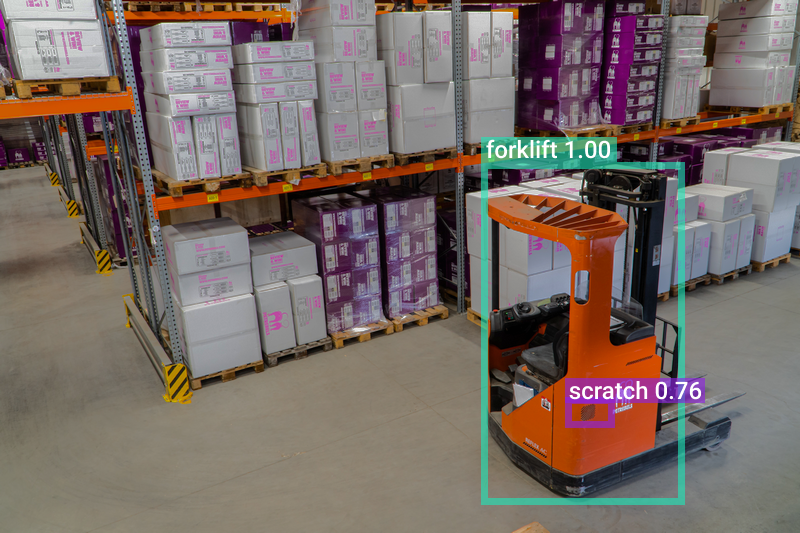

Smart Factories

Applications such as smart machines aim to improve safety and productivity. For example, operators can use a remote platform to operate heavy machines from a safe and comfortable place, particularly machines located in hard-to-reach and unsafe places.

Intelligent Transportation Systems

Drivers can share information with or gather it from traffic information centers to avoid vehicles that are in danger or stopping abruptly. Real-time performance can help avoid accidents. In addition, a self-driving car can sense its surroundings and move safely and autonomously.

Edge AI Software Platforms

Complexity increases as machine learning moves from the cloud to the edge. Cloud technology traditionally relies on APIs for the connection. However, edge AI requires IoT capabilities to manage physical edge devices that need a cloud connection.

Edge AI software usually involves the cloud to orchestrate the edge side with multiple clients connecting to the cloud. The edge-to-cloud connection manages many endpoints, rolls out updates, and provides security patches.

Edge architecture requires:

- Offline capabilities

- Remote deployment and updating

- Edge device monitoring

- Secure data at rest and in transit

On-device machine learning requires AI hardware and optimized edge computing chips to process sensor and camera data. At viso.ai, we have built an Edge AI software platform to power Computer Vision applications.

What’s Next for AI at the Edge

Edge computing is necessary for real-world AI applications. Traditional cloud computing models are not suitable for computationally intensive and data-heavy AI applications.

We recommend you read the following articles that cover related topics:

- Learn about Privacy-Preserving Deep Learning for Computer Vision

- An easy-to-understand guide to Deep Face Recognition

- What you need to know about Self-Supervised Learning

- Examples and Methods of Deep Reinforcement Learning