Artificial Intelligence (AI) for the blind and visually impaired, specifically using Computer Vision (CV), has the potential to significantly improve the lives of visually impaired individuals. Everyday tasks that sighted people take for granted, such as identifying objects or navigating busy streets, pose unique challenges for those with vision loss. Advancements in computer vision are paving the way for a new generation of assistive technologies that can enhance independence and enrich the lives of visually impaired people.

In this comprehensive guide, we will explore the various types of AI for the blind, walk through the process of building custom computer vision models tailored to their needs, and examine the real-world impact of these innovative technologies.

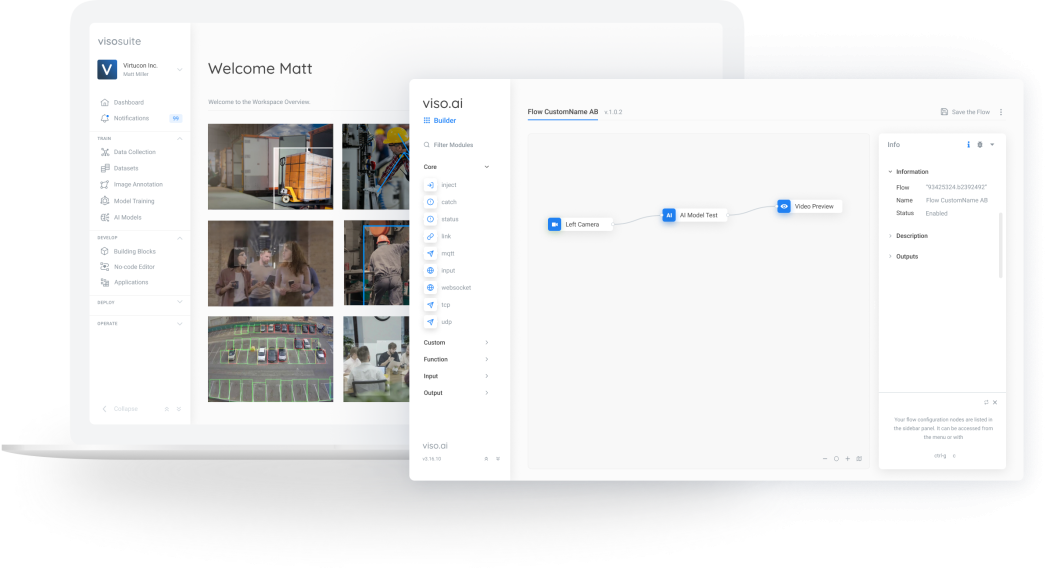

About us: We’ve built the Viso Suite infrastructure for enterprise computer vision applications. In a single interface, we deliver the full process of application development, deployment, and management. To learn more, book a demo with our team.

Types of AI for the Blind

Visually impaired people often use canes or guide dogs to navigate, but AI solutions can assist with other daily tasks like reading, scene understanding, and object detection.

Recent advancements in computer vision allow us to create AI for the blind. This technology raises the degree of autonomy for the visually impaired through smart applications that can do various tasks such as reading books, describing scenes, and identifying objects.

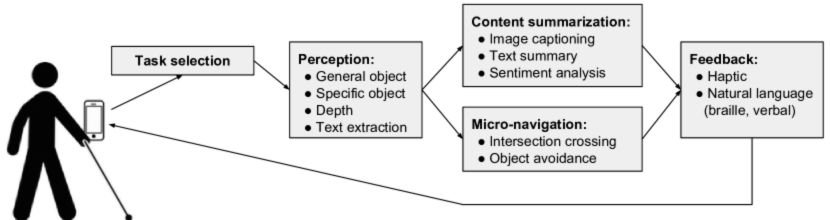

This level of autonomy can be of great help to visually impaired people, and the goal of such software is to give them abilities that come closer to those of sighted people. To do so, the software needs various features, such as object detection, image captioning, and navigation.

We can categorize AI for the blind by function. Below, we look at each category and how it can be used in different applications.

Object Recognition

Recognizing objects is essential for many daily activities. Empowering blind people with this ability through computer vision applications and assistive technologies can greatly benefit them.

Let’s break down the different ways AI can assist the blind with object recognition.

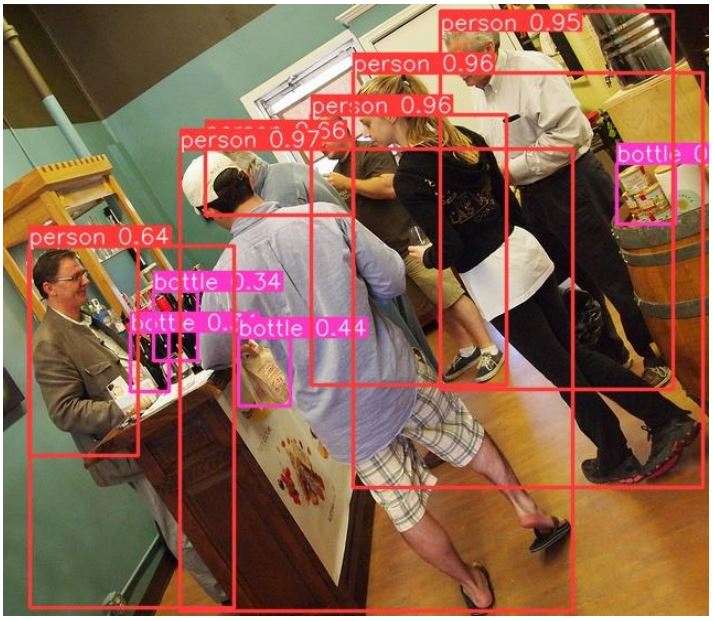

- Object Detection: This technology identifies and localizes objects within images or video frames. Models like YOLOv10 (You Only Look Once) are particularly good at real-time object detection, which is essential for the visually impaired because it lets them identify the objects around them with barely any delay. These models also suit more complex applications, including currency identification and navigation.

Complex scenes pose a huge challenge for object detection models, and announcing too many objects can confuse the user. Generalization is another big challenge, since detection models may not recognize every object or environment a user encounters, which makes accurate and reliable object detection a complex task.

- Object Localization: While similar to detection, localization focuses on pinpointing the precise position of a single object within an image. This is crucial for tasks like guiding a user’s hand toward a specific item on a table, but it still faces the challenge of generalization.

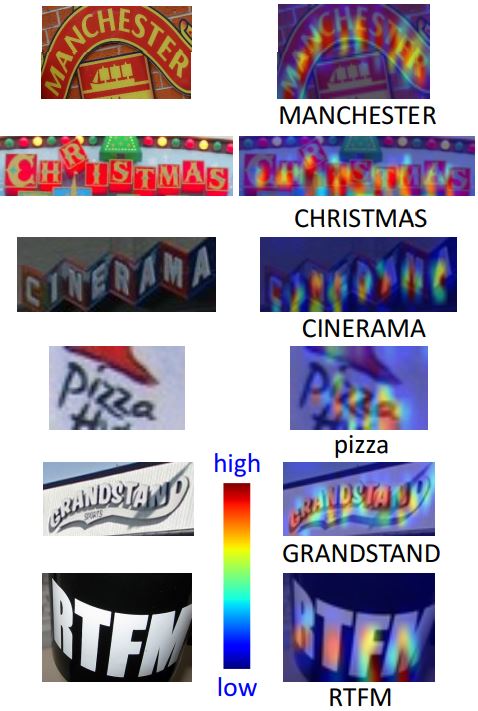

- Optical Character Recognition (OCR): OCR technology converts text within images into machine-readable text, which can then be presented to the user, for example as audio. This is essential for reading signs, labels, menus, and documents, giving visually impaired individuals access to critical information.

OCR technology is constantly improving and can handle increasingly complex text scenarios, which makes it a useful building block for more complex AI applications for the blind. However, OCR models still have drawbacks: fonts, languages, and formats remain big challenges.
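To make the detection and localization steps more concrete, here is a minimal Python sketch of how raw detector output might be turned into a short spoken message. It assumes a detector (for example, a YOLO-family model) has already produced labeled boxes with confidence scores; the `Detection` class, the thresholds, and the left/ahead/right phrasing are hypothetical choices for illustration. Many detectors return overlapping duplicate boxes that are pruned with non-maximum suppression (NMS); YOLOv10 is designed to work without NMS, but the confidence filtering and the cap on announced objects still help avoid overwhelming the user in cluttered scenes.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object (a hypothetical container, not a specific library's type)."""
    label: str
    confidence: float
    box: tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets, iou_threshold=0.5):
    """Greedy per-label NMS: drop boxes that heavily overlap a more confident box."""
    kept = []
    for det in sorted(dets, key=lambda d: d.confidence, reverse=True):
        if all(det.label != k.label or iou(det.box, k.box) < iou_threshold for k in kept):
            kept.append(det)
    return kept


def direction(box, image_width):
    """Map the box's horizontal center to a coarse direction phrase."""
    center_x = (box[0] + box[2]) / 2
    if center_x < image_width / 3:
        return "on your left"
    if center_x > 2 * image_width / 3:
        return "on your right"
    return "ahead"


def describe(dets, image_width, min_confidence=0.5, max_items=3):
    """Short spoken-style summary of the most confident, de-duplicated objects."""
    confident = [d for d in dets if d.confidence >= min_confidence]
    kept = non_max_suppression(confident)[:max_items]  # already sorted by confidence
    if not kept:
        return "No objects detected."
    return ", ".join(f"{d.label} {direction(d.box, image_width)}" for d in kept) + "."


detections = [
    Detection("chair", 0.91, (40, 200, 180, 400)),
    Detection("chair", 0.85, (50, 210, 190, 410)),  # near-duplicate of the first chair
    Detection("door", 0.78, (270, 50, 370, 420)),
    Detection("cup", 0.30, (500, 300, 540, 350)),  # too uncertain to announce
]
print(describe(detections, image_width=640))  # chair on your left, door ahead.
```

Capping the message at a few objects trades completeness for clarity, which reflects the concern above that too many announcements can confuse the user.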

Content Summarization

Combining computer vision with Natural Language Processing (NLP) can give the visually impaired further abilities. With content summarization, we can describe scenes, explain text, and perform sentiment analysis.

Let’s see how these technologies can be used within AI solutions for the blind.

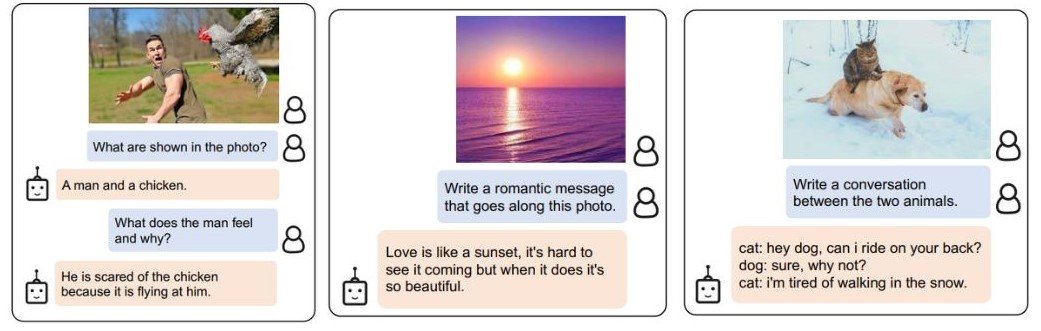

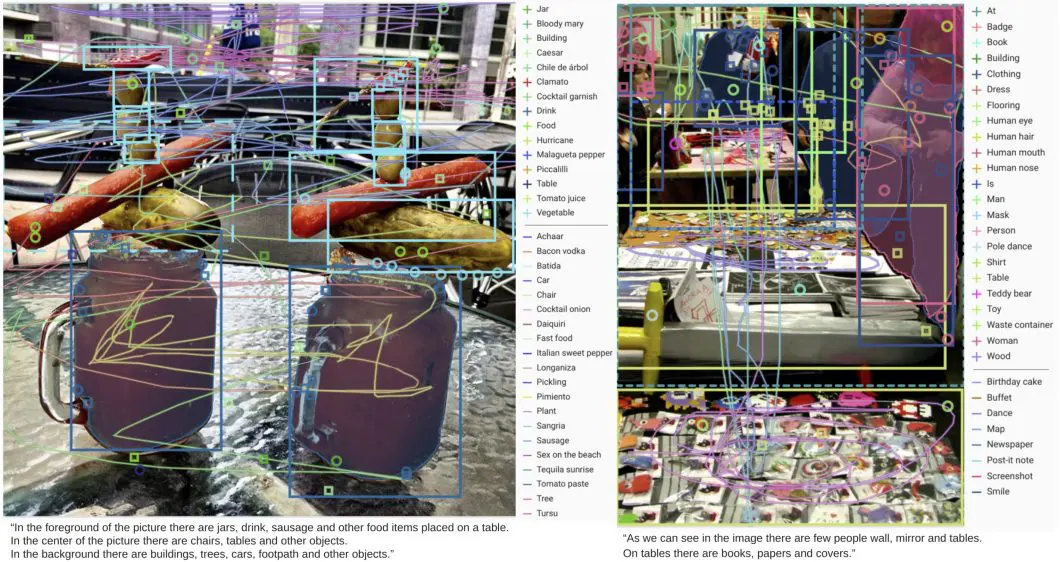

- Image Captioning: This technology usually relies on vision-language models (VLMs). In this task, the model extracts information from an image and generates a caption that describes it. This is useful for building AI for the blind that can describe scenes, phone screens, and much more. These models are not limited to captioning: they can also perform visual question answering (VQA) and generate other text based on an image.

However, image captioning models face some limitations regarding real-time performance, accuracy, generalization, and data requirements.

- Emotion Recognition: These AI models can analyze a person’s emotions from images, written text, or voice. For the visually impaired, knowing the emotions of the person in front of them, or of people in a picture, can be very helpful.

Speech and text have their own sets of models, while images and videos use others. Image-based models usually rely on a classifier such as a Convolutional Neural Network (CNN) or on a multimodal architecture. A key limitation is that human emotions are inherently hard to recognize correctly, even for people.

- Text-to-speech (TTS): TTS is one of the simplest assistive technologies and is a great help for reading text, which makes it useful in AI solutions for the blind. TTS uses generative models to produce speech from text. Combined with other models, TTS has many applications as an assistive technology.
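Because emotions are hard to recognize reliably, an assistive app may be better off staying silent than guessing. The minimal sketch below assumes a hypothetical image classifier (such as a CNN) that outputs one raw score (logit) per emotion; it converts the scores to probabilities with a softmax and only announces the top emotion when its probability clears a threshold. The label set and the 0.6 threshold are illustrative assumptions, and the resulting sentence would be handed to a TTS engine.

```python
import math

# Hypothetical label set; real emotion datasets define their own classes.
EMOTIONS = ["angry", "happy", "neutral", "sad", "surprised"]


def softmax(logits):
    """Turn raw classifier scores into probabilities (shifted for numerical stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def announce_emotion(logits, labels=EMOTIONS, threshold=0.6):
    """Speak the top emotion only when the model is confident enough."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    if probs[best] < threshold:
        return "I'm not sure about their expression."
    return f"They look {labels[best]} ({probs[best]:.0%} confidence)."


print(announce_emotion([0.1, 3.0, 0.5, 0.2, 0.1]))  # They look happy (80% confidence).
print(announce_emotion([1.0, 1.1, 1.0, 0.9, 1.0]))  # I'm not sure about their expression.
```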

Navigation Assistance (Micro-Navigation)

Navigation assistance refers to guiding a user along a path to their destination. It can help the visually impaired avoid obstacles, localize objects, and cross intersections, and it requires robust technologies to plan a path for the user.
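Path planning itself can be illustrated on a toy map. The sketch below assumes the environment has already been turned into a hypothetical occupancy grid (free cells and obstacles), for example by a mapping system; it uses breadth-first search, which finds a shortest path on a grid where every step costs the same, and then collapses the path into short spoken instructions. Real systems typically use richer maps and planners such as A*, and the compass-direction convention here is an assumption.

```python
from collections import deque
from itertools import groupby
from typing import Optional

# Hypothetical occupancy grid from a mapping step: 0 = free cell, 1 = obstacle.
Grid = list[list[int]]
Cell = tuple[int, int]

# Compass names for grid moves (row index grows southward); an illustrative convention.
DIRECTIONS = {(-1, 0): "north", (1, 0): "south", (0, -1): "west", (0, 1): "east"}


def plan_path(grid: Grid, start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Shortest 4-connected path via breadth-first search, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from: dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if current == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            node: Optional[Cell] = current
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = current
        for dr, dc in DIRECTIONS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = current
                queue.append((nr, nc))
    return None


def to_instructions(path: list[Cell]) -> list[str]:
    """Collapse consecutive moves in the same direction into short spoken steps."""
    moves = [DIRECTIONS[(r1 - r0, c1 - c0)] for (r0, c0), (r1, c1) in zip(path, path[1:])]
    return [f"go {name} {len(list(run))}" for name, run in groupby(moves)]


grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
path = plan_path(grid, start=(0, 0), goal=(2, 0))
print(to_instructions(path))  # ['go east 2', 'go south 2', 'go west 2']
```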

Technologies such as AI-powered smart glasses for the blind can be useful, but they have major limitations, including price, limited battery life, and generalization. Services such as video calls with professionals can also help, but they can be expensive.

Fortunately, AI is constantly developing, with new innovations appearing every day. Let’s look at some of the technologies that can help with micro-navigation.

- Smart Cities: AI can power smart roads that make intersection crossings safer, especially for the blind. The Internet of Things (IoT) is a key technology for making environments safer for people with impairments.

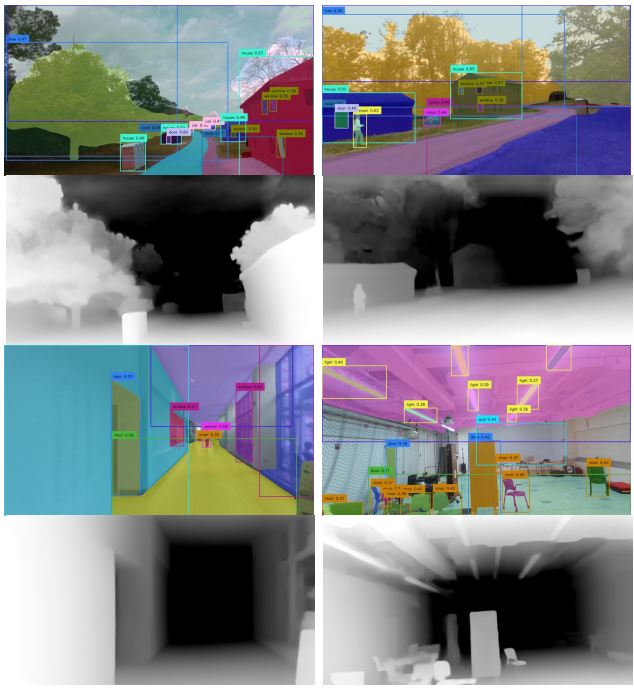

IoT devices can be placed on roads to monitor traffic, street lighting, and road conditions. This type of AI can track road users around intersections and detect bicycles, pedestrians, and vehicles. Connecting it to a speech or notification system can warn pedestrians about road safety conditions.

- Localization and Mapping: Other technologies use a more complex AI architecture that lets a device or robot construct a map of its surroundings and localize itself within it. Simultaneous localization and mapping (SLAM) is a popular technique in robotics and other autonomous devices, and it can be applied in smart ways to build better AI for the blind.

Using techniques like semantic segmentation, classification, and depth maps, a robot can navigate its environment more efficiently. Bringing such technology to assistive devices can provide higher levels of safety and independence for the visually impaired.
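As one illustration of how depth maps can support safer navigation, the sketch below splits a depth map into left, center, and right zones and warns about any zone with an obstacle closer than a threshold. The depth values are made up, treating non-positive readings as missing is an assumption (sensors differ), and the 1.5 m threshold is arbitrary; a real assistive device would combine this with segmentation and tracking rather than rely on raw depth alone.

```python
# Hypothetical depth map in meters (rows x columns), e.g. from a depth camera or a
# monocular depth-estimation model. Values <= 0 are treated as missing readings.
DepthMap = list[list[float]]

ZONES = ("on your left", "ahead", "on your right")


def nearest_per_zone(depth: DepthMap) -> dict[str, float]:
    """Split the frame into vertical thirds and return the closest valid depth in each."""
    width = len(depth[0])
    if width < 3:
        raise ValueError("depth map needs at least 3 columns")
    edges = [0, width // 3, 2 * width // 3, width]
    nearest = {}
    for zone, lo, hi in zip(ZONES, edges, edges[1:]):
        values = [row[c] for row in depth for c in range(lo, hi) if row[c] > 0]
        nearest[zone] = min(values, default=float("inf"))  # inf if no valid reading
    return nearest


def obstacle_warnings(depth: DepthMap, warn_distance: float = 1.5) -> list[str]:
    """Warn about each zone whose nearest point is closer than warn_distance meters."""
    return [
        f"Obstacle {zone}, {dist:.1f} meters away."
        for zone, dist in nearest_per_zone(depth).items()
        if dist < warn_distance
    ]


depth = [
    [3.0, 3.2, 1.2, 1.1, 4.0, 0.0],  # 0.0 = missing reading, ignored
    [2.9, 3.1, 1.0, 1.3, 3.9, 4.2],
]
print(obstacle_warnings(depth))  # ['Obstacle ahead, 1.0 meters away.']
```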

Building an AI for the Blind

To build an AI solution that is particularly helpful for the blind, we need to consider a few aspects that differ from typical AI development. First, accuracy should be the top priority, which means better data and better model training or fine-tuning. Second, the training data must be comprehensive enough for the model to generalize well to the environments visually impaired people encounter.

Training a model from scratch is usually unnecessary, because transfer learning is a powerful technique that lets us adapt pre-trained models to our purpose. Another option is the comprehensive Viso Suite, which provides end-to-end infrastructure to easily build, deploy, and scale computer vision applications.

From collecting and annotating your data, utilizing a wide range of pre-trained models, or even training your own model, to deploying, monitoring, and securing your application, it can all be done in one place with Viso.ai.

Let’s explore the steps of building an AI for the blind one by one and go through some examples.

Data Collection and Annotation

Deep learning models are highly dependent on data quality and volume, so data collection and cleaning are critical steps in developing effective models. While tools like Viso Suite can make these tasks easier, they are still time-consuming.

Fortunately, public datasets are available for a variety of tasks, including captioning, segmentation, detection, NLP, and even video. Researchers have collected and curated huge datasets, such as the examples below.

- COCO: A well-known large-scale dataset for multiple tasks: segmentation, object detection, and captioning.

- Open Images: A large dataset of around 9M images with image-level labels, object bounding boxes, object segmentation masks, visual relationships, and localized narratives.

However, these datasets are not designed for the specific needs of the visually impaired. An AI for the blind requires a more focused and detailed approach to data collection and annotation, considering this user group’s unique challenges and requirements.

For example, a dataset tailored for the blind might prioritize the following.

- Diverse Scenarios: Include a variety of real-world environments and situations that visually impaired individuals encounter daily. This means capturing various lighting conditions and diverse backgrounds.

- Specific Objects: Focus on objects and obstacles commonly encountered by the visually impaired. This could include household items, street signs, public transportation elements, and personal belongings.

- Data Types: Use other sources of data like audio and text or other sensory information alongside visual data. This can provide a richer context for understanding the environment.

- Minimizing Bias: Reducing bias in computer vision datasets is essential to improving AI for the blind.

However, instead of starting from scratch, filtering and enhancing existing datasets can still give promising results.
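As an example of filtering an existing dataset, the sketch below restricts a COCO-format annotation dictionary to categories a blind-focused application might care about, and counts annotations per class as a quick check for imbalance. COCO annotation files are JSON with `images`, `annotations`, and `categories` lists, so a real file such as `instances_train2017.json` could be loaded with `json.load`; the category selection and the tiny inline example are hypothetical.

```python
from collections import Counter

# Hypothetical selection of categories relevant to blind users; every name here
# is an existing COCO category name.
KEEP = {"person", "bicycle", "car", "bus", "traffic light", "stop sign", "bench", "chair"}


def filter_coco(coco: dict, keep_names: set[str]) -> dict:
    """Restrict a COCO-format annotation dict to the chosen category names."""
    categories = [c for c in coco["categories"] if c["name"] in keep_names]
    keep_ids = {c["id"] for c in categories}
    annotations = [a for a in coco["annotations"] if a["category_id"] in keep_ids]
    image_ids = {a["image_id"] for a in annotations}
    images = [img for img in coco["images"] if img["id"] in image_ids]
    # Keep any other top-level keys (e.g. "info", "licenses") unchanged.
    return {**coco, "images": images, "annotations": annotations, "categories": categories}


def class_counts(coco: dict) -> Counter:
    """Annotations per category name, a quick check for under-represented classes."""
    names = {c["id"]: c["name"] for c in coco["categories"]}
    return Counter(names[a["category_id"]] for a in coco["annotations"])


# Tiny made-up example in COCO layout (IDs, file names, and boxes are illustrative).
coco = {
    "images": [{"id": 1, "file_name": "street.jpg"}, {"id": 2, "file_name": "dinner.jpg"}],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 1, "bbox": [12, 30, 80, 200]},  # [x, y, w, h]
        {"id": 11, "image_id": 1, "category_id": 3, "bbox": [150, 20, 40, 40]},
        {"id": 12, "image_id": 2, "category_id": 2, "bbox": [60, 90, 120, 70]},
    ],
    "categories": [
        {"id": 1, "name": "person"},
        {"id": 2, "name": "pizza"},
        {"id": 3, "name": "stop sign"},
    ],
}
subset = filter_coco(coco, KEEP)
print([img["file_name"] for img in subset["images"]])  # ['street.jpg']
print(class_counts(subset))  # Counter({'person': 1, 'stop sign': 1})
```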

Model Training and Evaluation

Computer vision models are trained with different techniques depending on the specific task. For example, object detection models are often based on CNNs and trained with backpropagation and gradient descent.

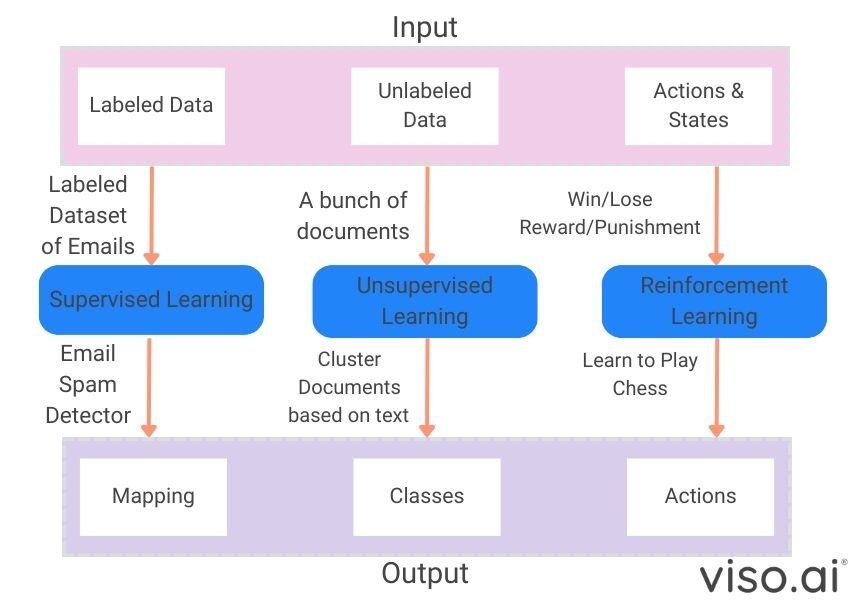

While these CV fundamentals apply to AI for the blind, there are some unique considerations: this field requires a focus on accuracy, real-time performance, and generalization across different scenarios. Generally, there are three approaches to training AI models:

- Supervised Learning

- Self-Supervised and Unsupervised Learning

- Reinforcement Learning

Evaluation is just as important as model training. We need to make sure our AI models are accurate, reliable, and helpful to the targeted group. This means using the right metrics to test how well they perform.

- Object Detection: For detectors such as YOLO models, Faster R-CNN, and MobileNet-based detectors, we often use mean Average Precision (mAP), which, in simple terms, measures how well the model finds objects and draws accurate bounding boxes around them.

- Image Captioning: For captioning models like BLIP, we measure how well the generated text matches the ground-truth captions, using metrics such as BLEU.
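To show what BLEU actually measures, here is a small from-scratch sketch of unsmoothed sentence-level BLEU: clipped n-gram precisions for n = 1 to 4, combined by a geometric mean and multiplied by a brevity penalty. It uses simple lowercase whitespace tokenization, which is an assumption; for reported results, established implementations such as NLTK's `nltk.translate.bleu_score` module or sacreBLEU are preferable, since they handle tokenization, smoothing, and corpus-level scoring consistently.

```python
import math
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count every contiguous n-token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: str, references: list[str], max_n: int = 4) -> float:
    """Unsmoothed sentence-level BLEU with uniform weights over 1..max_n-grams."""
    cand = candidate.lower().split()
    refs = [ref.lower().split() for ref in references]
    if not cand:
        return 0.0
    log_precisions = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        # Clip each candidate n-gram by the most times it appears in any one reference.
        max_ref_counts: Counter = Counter()
        for ref in refs:
            for gram, count in ngram_counts(ref, n).items():
                max_ref_counts[gram] = max(max_ref_counts[gram], count)
        clipped = sum(min(count, max_ref_counts[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        if clipped == 0:  # also covers candidates shorter than n tokens
            return 0.0
        log_precisions += math.log(clipped / total)
    # Brevity penalty against the reference length closest to the candidate's.
    c = len(cand)
    r = min((abs(len(ref) - c), len(ref)) for ref in refs)[1]
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precisions / max_n)


refs = ["a person is crossing the street"]
print(sentence_bleu("a person is crossing the street", refs))  # 1.0
print(round(sentence_bleu("a man is crossing the street", refs), 4))  # 0.5373
```

In the second example, the precisions are 5/6, 3/5, 2/4, and 1/3, so the score is their geometric mean, (1/12)^(1/4), with no brevity penalty because both captions have six words.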

However, these metrics are not everything; building an AI for the blind involves other special considerations.

- Real-World: It’s necessary to test a model in the actual scenarios a blind person faces daily, such as busy streets, dimly lit rooms, or cluttered environments.

- Feedback: Feedback from visually impaired users will tell us the real measure of how well this AI is improving their lives.

- Accuracy and Generalization: High accuracy on our metrics is important, but it is equally important to be aware of potential biases, since addressing them helps the model generalize better.
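One practical way to act on real-world testing and bias awareness is to break evaluation results down by scenario instead of relying on a single aggregate score. The sketch below uses a made-up field-test log; the scenario tags and the 0.8 accuracy bar are hypothetical. In this example, the overall accuracy looks moderate, but the per-scenario view shows the model performing much worse in dim rooms.

```python
from collections import defaultdict

# Hypothetical field-test log: (scenario the test was run in, was the output correct?)
results = [
    ("busy street", True), ("busy street", True), ("busy street", True), ("busy street", False),
    ("dim room", True), ("dim room", False), ("dim room", False),
    ("cluttered kitchen", True), ("cluttered kitchen", True),
    ("cluttered kitchen", True), ("cluttered kitchen", True),
]


def accuracy_by_scenario(records):
    """Accuracy computed separately for each scenario tag."""
    totals, correct = defaultdict(int), defaultdict(int)
    for scenario, ok in records:
        totals[scenario] += 1
        correct[scenario] += int(ok)
    return {s: correct[s] / totals[s] for s in totals}


def weakest_scenarios(records, min_accuracy=0.8):
    """Scenarios below the accuracy bar, worst first."""
    scores = accuracy_by_scenario(records)
    return sorted((s for s, acc in scores.items() if acc < min_accuracy), key=scores.get)


overall = sum(ok for _, ok in results) / len(results)
print(f"overall accuracy: {overall:.2f}")  # overall accuracy: 0.73
print(weakest_scenarios(results))  # ['dim room', 'busy street']
```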

Remember, the goal of an AI for the blind is to make life easier, and thorough training and evaluation help ensure that it does.

Model Development

Let us now explore how we can develop and use AI models that power assistive technologies. Luckily, we don’t need to start from scratch: many open-source models are available for a wide variety of tasks.

Pre-trained models, which have been trained on massive datasets with suitable hardware for tasks such as object recognition and image captioning, offer a valuable starting point. We can use these pre-trained models and adapt them to the specific needs of visually impaired users.

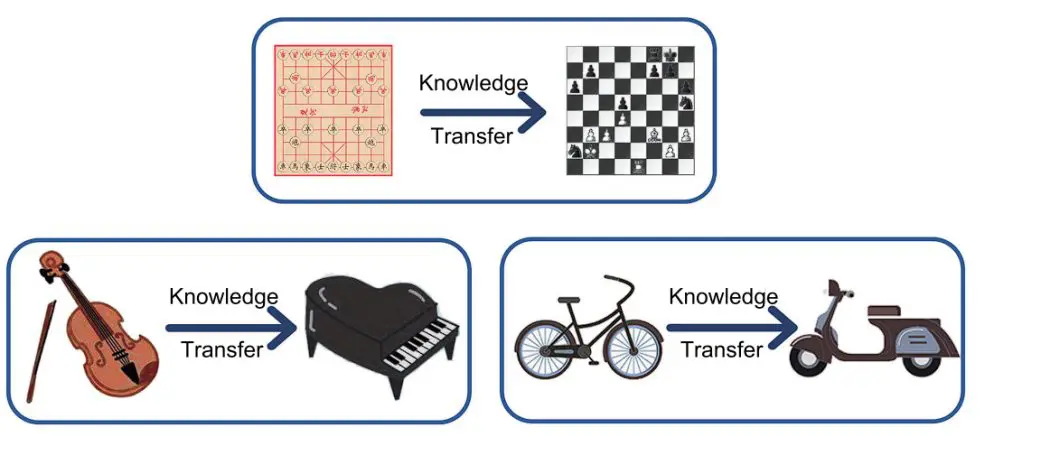

This is where transfer learning is useful. Transfer learning allows us to take a model trained on one task like recognizing objects in the ImageNet dataset and fine-tune it for a new, related task like recognizing common household items.

This is especially useful for AI for the blind because it lets us reuse the vast knowledge these models have already learned and drastically reduce the amount of data needed for training.

However, even with pre-trained models, fine-tuning is crucial. When applying transfer learning, we adjust the model’s parameters on a smaller dataset tailored to the target application. The open-source community has developed a variety of pre-trained models that can be adapted for assistive technologies.
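The simplest form of transfer learning, often called a linear probe, keeps the pre-trained layers frozen and trains only a small new head on the target data. The dependency-free sketch below illustrates that idea: `frozen_backbone` is a hypothetical stand-in for a real pre-trained network, the tiny dataset is made up, and the head is trained with the classic perceptron update rule rather than the gradient-based optimizers (such as SGD or Adam in PyTorch) you would normally use. Full fine-tuning would additionally update some or all of the backbone weights.

```python
# Stand-in for a pre-trained backbone. A real one would be a network such as a CNN
# pre-trained on ImageNet; this hypothetical version applies fixed transformations
# and, like a frozen backbone, is never updated during training.
def frozen_backbone(x: tuple[float, float]) -> list[float]:
    return [x[0] + x[1], x[0] - x[1], 1.0]  # the constant 1.0 acts as a bias input


def dot(w: list[float], f: list[float]) -> float:
    return sum(wi * fi for wi, fi in zip(w, f))


def train_head(samples, labels, max_epochs=1000):
    """Train only a new linear head (perceptron rule) on top of the frozen features."""
    features = [frozen_backbone(x) for x in samples]  # computed once; backbone stays fixed
    w = [0.0] * len(features[0])
    for _ in range(max_epochs):
        mistakes = 0
        for f, y in zip(features, labels):
            target = 1 if y == 1 else -1
            if target * dot(w, f) <= 0:  # misclassified (or on the boundary)
                w = [wi + target * fi for wi, fi in zip(w, f)]
                mistakes += 1
        if mistakes == 0:  # every training sample is now classified correctly
            break
    return w


def predict(w: list[float], x: tuple[float, float]) -> int:
    return 1 if dot(w, frozen_backbone(x)) > 0 else 0


# Small, made-up "target task" dataset: label 1 when the two inputs sum to more than 1.
samples = [(0.1, 0.2), (0.3, 0.1), (0.2, 0.4), (0.9, 0.8), (0.7, 0.6), (1.0, 0.5)]
labels = [0, 0, 0, 1, 1, 1]
head = train_head(samples, labels)
print([predict(head, x) for x in samples])  # [0, 0, 0, 1, 1, 1]
```

Because the features are computed once and never change, training touches only the three head weights, which is part of why this kind of transfer learning can work with far less data than training from scratch.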

BLIP and YOLO are good examples of open-source models: their developers provide options to run inference and to fine-tune from pre-trained checkpoints.

The fine-tuning code covers tasks like image-text retrieval, image captioning, and VQA for BLIP, and detection or segmentation for YOLO. Full instructions are available in their GitHub repositories. For a demo inference, you can try the Colab notebook or the Hugging Face space. Next, let’s take a look at some real-world examples of AI for the blind.

Real-World AI For The Blind Examples

AI-powered assistive technologies for people who are blind are making a real-world impact, transforming lives and opening up new possibilities. Big companies are leading this transformation and providing accurate and helpful solutions.

Seeing AI by Microsoft

One notable example is Seeing AI, a free iOS and Android mobile app developed by Microsoft. It harnesses the power of computer vision to describe the world around the user. Using object recognition, scene description, and text-to-speech, Seeing AI can read text aloud, identify products, describe people and scenes, and even detect emotions in facial expressions.

With its wide range of capabilities, Seeing AI is a useful AI tool for the blind, empowering individuals with visual impairments to navigate their surroundings and access information more independently. Although Seeing AI is not open-source, technology like this can lead to a brighter future with equal opportunities for everyone.

OrCam MyEye

OrCam MyEye is a wearable AI-powered camera that attaches to glasses. It uses sophisticated algorithms for object recognition, text reading, and facial recognition. It can read text from books, menus, or signs, identify faces, and even recognize products. The device discreetly communicates information through an earpiece, providing users with real-time audio feedback and assistance.

What’s Next For AI For The Blind?

AI for the blind is transforming the lives of the visually impaired, opening up new possibilities for independence and engagement with their world. Whether it is recognizing objects, navigating environments, or understanding documents and emotions, AI has immense potential to bridge the gap between the sighted and the blind.

However, many challenges remain going forward. To create AI systems that are truly helpful, we need to address issues like data bias, accessibility, and trust between users and technology. Additionally, the complexity of the visual world requires ongoing research and development.

Despite this, the future of AI for the blind is promising. As technology advances and our understanding of human-computer interaction deepens, we can expect even better solutions to emerge. The journey towards truly accessible and empowering AI is ongoing, and the progress made thus far is a testament to the transformative power of technology when harnessed for good.

Find out how AI can be used for various applications in different industries by reading our blogs below:

- 7 Cutting-Edge Applications of AI in Mining

- The 10 Top Applications of Computer Vision in Retail

- Computer Vision In Manufacturing: The Most Popular Applications

- Top Applications of Computer Vision in Agriculture

- Computer Vision in Environmental Conservation Applications